CyberShake Workflow Framework


This page provides documentation on the workflow framework that we use to execute CyberShake.

As of 2018, we use shock.usc.edu as our workflow submit host for CyberShake, but this setup could be replicated on any machine.

== Overview == | == Overview == | ||

| + | |||

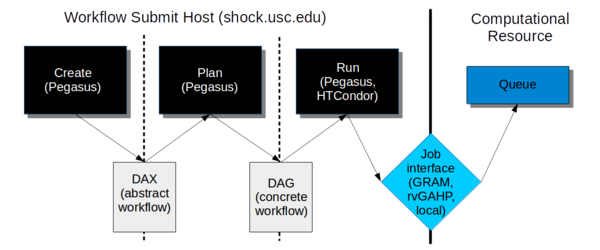

| + | [[File:workflow_overview.png|thumb|600px|Overview of workflow framework]] | ||

The workflow framework consists of three phases: | The workflow framework consists of three phases: | ||

| Line 9: | Line 11: | ||

#<b>Create</b>. In this phase, we use the Pegasus API to create an "abstract description" of the workflow. It is called abstract because it does not contain the specific paths or runtime configuration information for any system. It is a general description of the tasks to execute in the workflow, their input and output files, and the dependencies between them. This description is called a DAX and is in XML format.
#<b>Plan</b>. In this phase, we use the Pegasus planner to convert our abstract description into a "concrete description" for execution on one or more specific computational resources. At this stage, paths to executables and system-specific configuration information are added to the workflow, such as the correct certificate to use and the account to charge. This description is called a DAG and consists of multiple files in the format expected by HTCondor.
#<b>Run</b>. In this phase, we use Pegasus to hand our DAG off to HTCondor, which supervises the execution of the workflow. HTCondor maintains a queue of jobs. Jobs whose dependencies are all satisfied are eligible to run and are sent to their execution system.
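The eligibility rule in the Run phase can be illustrated with a short Python sketch. It is not how DAGMan is implemented: DAGMan is event-driven and also handles retries, throttling, and failures. The sketch only shows which jobs become runnable as their parents finish. All job names are hypothetical.

```python
def ready_jobs(parents, done):
    """Jobs that have not run yet and whose parents have all completed."""
    return sorted(
        job for job, deps in parents.items()
        if job not in done and all(dep in done for dep in deps)
    )


def execution_waves(parents):
    """Repeatedly release every ready job, as a DAG scheduler would.

    parents maps each job to the list of jobs it depends on. Returns a list
    of waves; jobs in the same wave do not depend on each other and could
    run concurrently. Raises ValueError on a cycle or an unknown parent.
    """
    unknown = {dep for deps in parents.values() for dep in deps} - set(parents)
    if unknown:
        raise ValueError(f"unknown parent jobs: {sorted(unknown)}")
    done, waves = set(), []
    while len(done) < len(parents):
        wave = ready_jobs(parents, done)
        if not wave:
            raise ValueError("dependency cycle detected")
        waves.append(wave)
        done.update(wave)
    return waves


if __name__ == "__main__":
    # Hypothetical jobs, loosely shaped like an SGT-then-post-processing workflow.
    workflow = {
        "velocity_mesh": [],
        "sgt_x": ["velocity_mesh"],
        "sgt_y": ["velocity_mesh"],
        "post_processing": ["sgt_x", "sgt_y"],
    }
    print(execution_waves(workflow))
```

Run as a script, it prints three waves. The first contains the mesh job, the second contains both SGT jobs together, and the third contains post-processing.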

We will go into each phase in more detail below.

== Software Dependencies ==

Before attempting to create and execute a workflow, the following software dependencies must be satisfied.
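A quick way to confirm these dependencies on a new submit host is to look for one command from each package on the PATH. The following Python sketch is a hypothetical helper, not part of the CyberShake tools. The probe commands are real executables shipped with each package, but the choice of probes is an assumption. The sketch does not check versions, so for example it does not verify Java 1.7+.

```python
import shutil

# One probe command per package. These are real commands from each package,
# but which ones to probe is our own assumption.
PROBES = {
    "Java": "java",
    "Pegasus-WMS": "pegasus-plan",
    "HTCondor": "condor_submit_dag",
    "Globus Toolkit": "globus-url-copy",
}


def check_dependencies(probes=PROBES):
    """Map each package to the full path of its probe command on PATH, or None."""
    return {package: shutil.which(command) for package, command in probes.items()}


def missing_packages(report):
    """Packages whose probe command could not be found."""
    return sorted(package for package, path in report.items() if path is None)


if __name__ == "__main__":
    report = check_dependencies()
    for package, path in report.items():
        print(f"{package:15} {path or 'NOT FOUND'}")
```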

=== Workflow Submit Host ===

In addition to Java 1.7+, we require the following software packages on the workflow submit host:

==== Pegasus-WMS ====

Purpose: We use Pegasus to create our workflow description and plan it for execution with HTCondor. Pegasus also provides a number of other features, such as automatically adding transfer jobs, wrapping jobs in kickstart to collect runtime statistics, using monitord to populate a database with metrics, and registering output files in a catalog.

How to obtain: You can download the latest version (binaries) from https://pegasus.isi.edu/downloads/, or clone the git repository at https://github.com/pegasus-isi/pegasus . We usually clone the repository, since we regularly need new features implemented and tested. We are currently running 4.7.3dev on shock, but you should install the latest version.

Special installation instructions: Follow the instructions to build from source; it is straightforward. On shock, we install Pegasus into /opt/pegasus and update the default link to point to our preferred version.
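Switching the default link between installed versions can be done atomically, so that anything resolving the link never finds it missing mid-update. The following is a minimal Python sketch. It assumes that the link is named default and that each version is installed in its own subdirectory of /opt/pegasus. Both are assumptions, since the page only says we update "the default link".

```python
import os


def point_default_link(install_root, version_dir, link_name="default"):
    """Atomically repoint install_root/link_name at install_root/version_dir.

    A temporary symlink is created first and then renamed over the old link.
    On POSIX, os.replace() uses rename(2), which swaps the link in one step.
    Returns the new link target.
    """
    target = os.path.join(install_root, version_dir)
    if not os.path.isdir(target):
        raise FileNotFoundError(target)
    link = os.path.join(install_root, link_name)
    tmp = link + ".tmp"
    if os.path.lexists(tmp):
        os.remove(tmp)  # clean up a leftover from an interrupted update
    # A relative target keeps the link valid if install_root is mounted elsewhere.
    os.symlink(version_dir, tmp)
    os.replace(tmp, link)
    return os.readlink(link)
```

For example, `point_default_link("/opt/pegasus", "pegasus-4.7.3dev")` would make /opt/pegasus/default refer to that version directory. The directory name is hypothetical.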

==== HTCondor ====

Purpose: HTCondor performs the actual execution of the workflow. DAGMan, a component of HTCondor, maintains a queue and keeps track of job dependencies, submitting jobs to the appropriate resource when it is time for them to run.

How to obtain: HTCondor is available at https://research.cs.wisc.edu/htcondor/downloads/, but installing from a repository is the preferred approach. We are currently running 8.4.8 on shock. You should install the 'Current Stable Release'.

Special installation instructions: We have been asking the system administrator to install it in shared space.

Some HTCondor configuration settings must be set up correctly. On shock, the two configuration files are stored at /etc/condor/condor_config and /etc/condor/condor_config.local.

In condor_config, make the following changes:

*LOCAL_CONFIG_FILE = /path/to/condor_config.local
*ALLOW_READ = *
*ALLOW_WRITE should be set to the IPs or hostnames of systems that are allowed to add nodes to the Condor pool. For example, on shock it is currently ALLOW_WRITE = *.ccs.ornl.gov, *.usc.edu
*CONDOR_IDS = uid.gid of the 'Condor' user
*All the MAX_*_LOG entries should be set to something fairly large. On shock we use 100000000.
*DAEMON_LIST = MASTER, SCHEDD, COLLECTOR, NEGOTIATOR
*HIGHPORT and LOWPORT should be set to define the port range Condor is allowed to use.
*DELEGATE_JOB_GSI_CREDENTIALS = False (when it was set to True, Condor sometimes picked up the wrong proxy for file transfers).
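Before restarting HTCondor, you can sanity-check an ALLOW_WRITE value by approximating its wildcard matching in Python. This is a simplified sketch. It only handles hostname wildcards like the ones on shock, not the IP, subnet, or user@host forms that HTCondor also accepts. The host names in the example are hypothetical.

```python
from fnmatch import fnmatch


def parse_host_list(value):
    """Split an HTCondor-style list value on commas and/or whitespace."""
    return value.replace(",", " ").split()


def host_allowed(hostname, allow_value):
    """Approximate check: does hostname match any wildcard entry in the list?

    fnmatch treats '*' as "any characters"; both sides are lower-cased so the
    comparison is case-insensitive, as DNS names are.
    """
    host = hostname.lower()
    return any(fnmatch(host, pattern.lower()) for pattern in parse_host_list(allow_value))


ALLOW_WRITE = "*.ccs.ornl.gov, *.usc.edu"
```

With this value, `host_allowed("shock.usc.edu", ALLOW_WRITE)` is true, while a host outside both domains is rejected.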

In condor_config.local, make the following changes:

*NETWORK_INTERFACE = <machine ip>
*DAGMAN_MAX_SUBMITS_PER_INTERVAL = 80
*DAGMAN_SUBMIT_DELAY = 1
*DAGMAN_LOG_ON_NFS_IS_ERROR = False
*START_LOCAL_UNIVERSE = TotalLocalJobsRunning<80
*START_SCHEDULER_UNIVERSE = TotalSchedulerJobsRunning<200
*JOB_START_COUNT = 50
*JOB_START_DELAY = 1
*MAX_PENDING_STARTD_CONTACTS = 80
*SCHEDD_INTERVAL = 120
*RESERVED_SWAP = 500
*CONDOR_HOST = <workflow management host>
*LOG_ON_NFS_IS_ERROR = False
*DAEMON_LIST = $(DAEMON_LIST) STARTD
*NUM_CPUS = 50
*START = True
*SUSPEND = False
*CONTINUE = True
*PREEMPT = False
*KILL = False
*REMOTE_GAHP = /path/to/rvgahp_client
*GRIDMANAGER_MAX_SUBMITTED_JOBS_PER_RESOURCE = 100, /tmp/cybershk.titan.sock, 30
*GRIDMANAGER_MAX_PENDING_SUBMITS_PER_RESOURCE = 20
*GRIDMANAGER_MAX_JOBMANAGERS_PER_RESOURCE = 30
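Two of the settings above depend on HTCondor's configuration syntax:

* DAEMON_LIST = $(DAEMON_LIST) STARTD appends STARTD to the value from condor_config.
* GRIDMANAGER_MAX_SUBMITTED_JOBS_PER_RESOURCE takes a default limit followed by optional resource/limit pairs, as we understand the HTCondor manual. So shock's value allows 100 jobs per resource by default and 30 for the resource named /tmp/cybershk.titan.sock.

The Python sketch below models both behaviors in a simplified way. On a real system, `condor_config_val DAEMON_LIST` reports the value HTCondor actually uses.

```python
import re

_MACRO = re.compile(r"\$\((\w+)\)")


def load_config(texts):
    """Apply 'NAME = value' lines from several config files, in order.

    Simplified model of HTCondor semantics: names are case-insensitive,
    later definitions override earlier ones, and $(NAME) expands to the
    value defined so far, so 'DAEMON_LIST = $(DAEMON_LIST) STARTD' appends
    to the list from the previous file. Comments and blank lines are
    skipped; includes, conditionals, and line continuations are not handled.
    """
    config = {}
    for text in texts:
        for raw in text.splitlines():
            line = raw.strip()
            if not line or line.startswith("#") or "=" not in line:
                continue
            name, value = (part.strip() for part in line.split("=", 1))
            config[name.upper()] = _MACRO.sub(
                lambda m: config.get(m.group(1).upper(), ""), value
            )
    return config


def per_resource_limit(value, resource):
    """Limit for one resource from 'default, resource1, limit1, resource2, limit2, ...'."""
    items = [item.strip() for item in value.split(",") if item.strip()]
    default, pairs = int(items[0]), items[1:]
    if len(pairs) % 2:
        raise ValueError("resource/limit entries must come in pairs")
    overrides = {pairs[i]: int(pairs[i + 1]) for i in range(0, len(pairs), 2)}
    return overrides.get(resource, default)


if __name__ == "__main__":
    condor_config = "DAEMON_LIST = MASTER, SCHEDD, COLLECTOR, NEGOTIATOR\n"
    condor_config_local = (
        "DAEMON_LIST = $(DAEMON_LIST) STARTD\n"
        "GRIDMANAGER_MAX_SUBMITTED_JOBS_PER_RESOURCE = 100, /tmp/cybershk.titan.sock, 30\n"
    )
    cfg = load_config([condor_config, condor_config_local])
    print(cfg["DAEMON_LIST"])
    limits = cfg["GRIDMANAGER_MAX_SUBMITTED_JOBS_PER_RESOURCE"]
    print(per_resource_limit(limits, "/tmp/cybershk.titan.sock"))  # per-resource override
    print(per_resource_limit(limits, "some.other.host"))           # falls back to default
```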

==== Globus Toolkit ====

Purpose: The Globus Toolkit provides tools for job submission, file transfer, and management of certificates and proxies.

How to obtain: You can download it from http://toolkit.globus.org/toolkit/downloads/latest-stable/ .

Special installation instructions: Configuration files are located in install_dir/etc/*.conf. You should review them, especially the certificate-related configuration, and make sure that they are correct.

The Globus Toolkit reached end-of-life in January 2018, so it is not clear that it will be needed going forward. Currently, it is installed on shock at /usr/local/globus .

==== rvGAHP ====

To enable rvGAHP submission for systems that don't support remote authentication using proxies (currently Titan), configure rvGAHP on the submit host as described here: https://github.com/juve/rvgahp .

=== Remote Resource ===

==== Pegasus-WMS ====

On the remote resource, only the Pegasus worker packages are technically required, but I recommend installing all of Pegasus anyway. This may also require installing Ant. Typically, we install Pegasus to <CyberShake home>/utils/pegasus.

==== GRAM submission ====

To support GRAM submission, the Globus Toolkit (http://toolkit.globus.org/toolkit/) needs to be installed on the remote system. It is already installed on Titan and Blue Waters. Since the toolkit reached end-of-life in January 2018, there is some uncertainty as to what we will move to.

==== rvGAHP submission ====

rvGAHP submission is our approach for systems that do not permit the use of proxies for remote authentication. To set up rvGAHP correctly, follow the instructions at https://github.com/juve/rvgahp.

== shock.usc.edu configuration ==

On shock, the primary directory for managing workflows is the 'runs' directory, /home/scec-02/cybershk/runs . This directory holds the create/plan/run scripts, the workflow configuration files, the catalogs, and the workflows themselves. Because of the way the Java paths in the scripts are set up, the scripts may not be able to find the DAX generator if you invoke them from a different directory.
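One way to avoid the working-directory problem is to always launch the scripts with the runs directory as the working directory. The shell equivalent is simply to cd into /home/scec-02/cybershk/runs first. The Python helper sketch below does the same thing programmatically; the helper names are hypothetical.

```python
import subprocess

RUNS_DIR = "/home/scec-02/cybershk/runs"


def wrapper_command(script, args, runs_dir=RUNS_DIR):
    """Return (argv, cwd) that invokes a wrapper script from inside runs_dir."""
    return ["./" + script, *args], runs_dir


def run_wrapper(script, args, runs_dir=RUNS_DIR):
    """Run the wrapper with runs_dir as the working directory.

    Fixing cwd means the scripts' Java paths resolve the same way no matter
    where the caller's shell happens to be. check=True raises
    subprocess.CalledProcessError if the script exits non-zero.
    """
    argv, cwd = wrapper_command(script, args, runs_dir)
    return subprocess.run(argv, cwd=cwd, check=True)
```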

== Create == | == Create == | ||

| + | |||

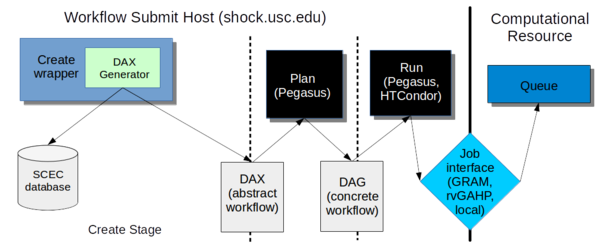

| + | [[File:create.png|thumb|600px|Overview of create stage]] | ||

| + | |||

| + | In the creation stage, we invoke a wrapper script which calls the DAX Generator, which creates the DAX file for a CyberShake workflow. | ||
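To make the "abstract description" concrete, the Python sketch below writes a tiny DAX-like XML document with the standard library. The CyberShake DAX Generators use the Pegasus API instead; judging from the Java requirement and the compile step, they are written in Java. Real DAXes also carry more detail, such as arguments, executables, and file metadata. The element and attribute names here follow our understanding of the DAX 3 schema, and the job and file names are hypothetical.

```python
import xml.etree.ElementTree as ET

DAX_NS = "http://pegasus.isi.edu/schema/DAX"


def build_dax(name, jobs, edges):
    """Serialize a simplified DAX-3-style abstract workflow to an XML string.

    jobs:  {job_id: (transformation, [(logical_file_name, "input" or "output"), ...])}
    edges: [(parent_id, child_id), ...]
    Only logical names appear: no physical paths, sites, or accounts.
    """
    adag = ET.Element("adag", {"xmlns": DAX_NS, "version": "3.6", "name": name})
    for job_id, (transformation, files) in jobs.items():
        job = ET.SubElement(adag, "job", {"id": job_id, "name": transformation})
        for lfn, link in files:
            ET.SubElement(job, "uses", {"name": lfn, "link": link})
    # Group parents by child so each child element lists all of its parents.
    parents_of = {}
    for parent, child in edges:
        parents_of.setdefault(child, []).append(parent)
    for child, parents in parents_of.items():
        child_el = ET.SubElement(adag, "child", {"ref": child})
        for parent in parents:
            ET.SubElement(child_el, "parent", {"ref": parent})
    return ET.tostring(adag, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical transformations and file names, for illustration only.
    jobs = {
        "ID01": ("gen_velocity_mesh", [("PAS.mesh", "output")]),
        "ID02": ("run_sgt", [("PAS.mesh", "input"), ("PAS_sgt_x", "output")]),
    }
    print(build_dax("CyberShake_SGT_PAS", jobs, [("ID01", "ID02")]))
```

Only logical file names appear in the output. Resolving them to physical locations is left to the planner in the Plan phase.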

At creation, we decide which workflow we want to run: SGT only, post-processing only, or both ('integrated'). We offer this choice because we often want to run the SGT and post-processing workflows in different places. For example, we might run the SGT workflow on Titan because of its large number of GPUs, and then run the post-processing on Blue Waters because of its XE nodes. If you create an integrated workflow, both the SGT and post-processing parts will run on the same machine. (This is because of the way we have set up the planning scripts; this could be changed in the future if needed.)

=== Wrapper Scripts ===

Four wrapper scripts are available, depending on which kind of workflow you are creating.

On shock, all of these wrapper scripts are installed in /home/scec-02/cybershk/runs. Each creates a directory, <site name>_<workflow type>_dax/run_<run_id>, that holds all the workflow files for that run.
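The relationship between workflow type, wrapper script, and run directory can be summarized as a lookup table. Below is a small Python sketch. The function names and type keys are hypothetical; the script names and directory labels come from the wrapper script descriptions on this page.

```python
import os

# Wrapper script and directory label for each workflow type, per this section.
WORKFLOW_TYPES = {
    "sgt": ("create_sgt_dax.sh", "SGT"),
    "stoch": ("create_stoch_dax.sh", "Stoch"),
    "pp": ("create_pp_wf.sh", "PP"),
    "integrated": ("create_full_wf.sh", "Integrated"),
}


def wrapper_for(workflow_type):
    """Name of the wrapper script that creates this kind of workflow."""
    return WORKFLOW_TYPES[workflow_type][0]


def run_directory(site, workflow_type, run_id):
    """Relative path <site>_<type>_dax/run_<run_id> created by the wrapper."""
    label = WORKFLOW_TYPES[workflow_type][1]
    return os.path.join(f"{site}_{label}_dax", f"run_{run_id}")
```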

==== create_sgt_dax.sh ====

<b>Purpose:</b> To create the DAX files for an SGT-only workflow.

<b>Detailed description:</b> create_sgt_dax.sh takes the command-line arguments and, by querying the database, determines a Run ID to assign to the run. The entry corresponding to this run in the database is either updated or created. This Run ID is then added to the file <site short name>_SGT_dax/run_table.txt. The script then compiles the DAX Generator (in case any changes have been made) and runs it with the correct command-line arguments, creating the DAXes. Next, a directory, <site short name>_SGT_dax/run_<run id>, is created, and the DAX files are moved into that directory. Finally, the run in the database is edited to reflect that creation is complete.
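The file-handling steps of this procedure can be sketched in Python. The sketch rests on several assumptions:

* The database query and update are omitted.
* The DAX generator is assumed to have already written its files into the base directory.
* The run_table.txt format (one Run ID per line) is hypothetical. Judging from the list of reasons this script must change, the real file also holds the run's science parameters.

```python
import os
import shutil


def finish_creation(base_dir, site, run_id, dax_files, workflow_label="SGT"):
    """Record the run and move freshly generated DAX files into their run directory.

    Creates <site>_<label>_dax/run_<run_id>, appends the Run ID to
    <site>_<label>_dax/run_table.txt, and moves each DAX file from base_dir.
    Returns the run directory path.
    """
    dax_dir = os.path.join(base_dir, f"{site}_{workflow_label}_dax")
    os.makedirs(dax_dir, exist_ok=True)
    run_dir = os.path.join(dax_dir, f"run_{run_id}")
    # os.mkdir raises FileExistsError, so a reused Run ID is caught before the table changes.
    os.mkdir(run_dir)
    with open(os.path.join(dax_dir, "run_table.txt"), "a") as table:
        table.write(f"{run_id}\n")
    for name in dax_files:
        shutil.move(os.path.join(base_dir, name), os.path.join(run_dir, name))
    return run_dir
```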

<b>Needs to be changed if:</b>
#New velocity models are added. The wrapper encodes a mapping between a string and the velocity model ID in lines 129-152, and a new entry must be added for a new model.
#New science parameters need to be tracked. Currently the site name, ERF ID, SGT variation ID, rupture variation scenario ID, velocity model ID, frequency, and source frequency are used. If new parameters are important, they would need to be captured, included in the run_table file, and passed to the Run Manager (which would also need to be edited to include them).
#Arguments to the DAX Generator change.
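Adding a new velocity model means adding one more entry to the wrapper's string-to-model mapping. The sketch below shows the shape of such a table in Python, using the codes and model names from the usage text. This page does not list the numeric database IDs that the real script maps to, so they are left out rather than guessed.

```python
# Codes accepted by -v in create_sgt_dax.sh, with model names from its usage text.
# (create_full_wf.sh also accepts vcca1d and vcca.) The real script maps each
# code to a numeric database ID, which is not reproduced here.
VELOCITY_MODELS = {
    "v4": "CVM-S4",
    "vh": "CVM-H",
    "vsi": "CVM-S4.26",
    "vs1": "SCEC 1D model",
    "vhng": "CVM-H, no GTL",
    "vbbp": "BBP 1D model",
}


def velocity_model_name(code):
    """Look up a -v code, failing with the list of valid codes."""
    try:
        return VELOCITY_MODELS[code]
    except KeyError:
        known = ", ".join(sorted(VELOCITY_MODELS))
        raise ValueError(f"unknown velocity model '{code}'; expected one of: {known}") from None
```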

<b>Source code location:</b> http://source.usc.edu/svn/cybershake/import/trunk/runs/create_sgt_dax.sh

<b>Author:</b> Scott Callaghan

<b>Dependencies:</b> [[CyberShake_Workflow_Framework#RunManager|Run Manager]]

<b>Executable chain:</b>
create_sgt_dax.sh
CyberShake_SGT_DAXGen

<b>Compile instructions:</b> None

<b>Usage:</b>
<pre>Usage: ./create_sgt_dax.sh [-h] <-v VELOCITY_MODEL> <-e ERF_ID> <-r RV_ID> <-g SGT_ID> <-f FREQ> <-s SITE> [-q SRC_FREQ] [--sgtargs SGT dax generator args]
-h display this help and exit
-v VELOCITY_MODEL select velocity model, one of v4 (CVM-S4), vh (CVM-H), vsi (CVM-S4.26), vs1 (SCEC 1D model), vhng (CVM-H, no GTL), or vbbp (BBP 1D model).
-e ERF_ID ERF ID
-r RUP_VAR_ID Rupture Variation ID
-g SGT_ID SGT ID
-f FREQ Simulation frequency (0.5 or 1.0 supported)
-q SRC_FREQ Optional: SGT source filter frequency
-s SITE Site short name

Can be followed by optional SGT arguments:
usage: CyberShake_SGT_DAXGen <output filename> <destination directory> [options] [-f <runID file, one per line> | -r <runID1> <runID2> ... ]
-d,--handoff Run handoff job, which puts SGT into pending file on shock when completed.
-f <runID_file> File containing list of Run IDs to use.
-h,--help Print help for CyberShake_SGT_DAXGen
-mc <max_cores> Maximum number of cores to use for AWP SGT code.
-mv <minvs> Override the minimum Vs value
-ns,--no-smoothing Turn off smoothing (default is to smooth)
-r <runID_list> List of Run IDs to use.
-sm,--separate-md5 Run md5 jobs separately from PostAWP jobs (default is to combine).
-sp <spacing> Override the default grid spacing, in km.
-sr,--server <server> Server to use for site parameters and to insert PSA values into
-ss <sgt_site> Site to run SGT workflows on (optional)
-sv,--split-velocity Use separate velocity generation and merge jobs (default is to use combined job)
</pre>
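The wrapper's interface (fixed options, then a pass-through group after --sgtargs) can be mirrored in Python with argparse. This sketch reproduces the interface only; it is not a port of the shell script. Splitting at --sgtargs before parsing matters because the generator reuses letters such as -r and -f with different meanings.

```python
import argparse


def parse_create_sgt_args(argv):
    """Parse create_sgt_dax.sh-style options.

    Everything after --sgtargs is returned untouched so it can be handed to
    the DAX generator; splitting first avoids clashes between the wrapper's
    own flags and generator flags that reuse the same letters.
    """
    if "--sgtargs" in argv:
        cut = argv.index("--sgtargs")
        own, passthrough = argv[:cut], argv[cut + 1:]
    else:
        own, passthrough = list(argv), []

    parser = argparse.ArgumentParser(prog="create_sgt_dax.sh")
    parser.add_argument("-v", dest="velocity_model", required=True)
    parser.add_argument("-e", dest="erf_id", type=int, required=True)
    parser.add_argument("-r", dest="rup_var_id", type=int, required=True)
    parser.add_argument("-g", dest="sgt_id", type=int, required=True)
    parser.add_argument("-f", dest="freq", type=float, choices=[0.5, 1.0], required=True)
    parser.add_argument("-s", dest="site", required=True)
    parser.add_argument("-q", dest="src_freq", type=float, default=None)
    return parser.parse_args(own), passthrough
```

For the Study 15.4 SGT command, the parser returns the wrapper options plus `["-sm"]` as the generator's pass-through arguments.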

<b>Input files:</b> None

<b>Output files:</b> DAX files (schema is defined here: https://pegasus.isi.edu/documentation/schemas/dax-3.6/dax-3.6.html). Specifically, CyberShake_SGT_<site>.dax.

==== create_stoch_dax.sh ====

<b>Purpose:</b> To create the DAX files for a stochastic-only post-processing workflow.

<b>Detailed description:</b> create_stoch_dax.sh takes the command-line arguments and, by querying the database, determines a Run ID to assign to the run. The entry corresponding to this run in the database is either updated or created. This Run ID is then added to the file <site short name>_Stoch_dax/run_table.txt. The script then compiles the DAX Generator (in case any changes have been made) and runs it with the correct command-line arguments, including the low-frequency Run ID to be augmented, creating the DAXes. Next, a directory, <site short name>_Stoch_dax/run_<run id>, is created, and the DAX files are moved into that directory. Finally, the run in the database is edited to reflect that creation is complete.

<b>Needs to be changed if:</b>
#Arguments to the DAX Generator change.

<b>Source code location:</b> http://source.usc.edu/svn/cybershake/import/trunk/runs/create_stoch_dax.sh

<b>Author:</b> Scott Callaghan

<b>Dependencies:</b> [[CyberShake_Workflow_Framework#RunManager|Run Manager]]

<b>Executable chain:</b>
create_stoch_dax.sh
CyberShake_Stochastic_DAXGen

<b>Compile instructions:</b> None

<b>Usage:</b>
<pre>Usage: ./create_stoch_wf.sh [-h] <-l LF_RUN_ID> <-e ERF_ID> <-r RV_ID> <-f MERGE_FREQ> <-s SITE> [--daxargs DAX generator args]
-h display this help and exit
-l LF_RUN_ID Low-frequency run ID to combine
-e ERF_ID ERF ID
-r RUP_VAR_ID Rupture Variation ID
-f MERGE_FREQ Frequency to merge LF and stochastic results
-s SITE Site short name

Can be followed by DAX arguments:
usage: CyberShake_Stochastic_DAXGen <runID> <directory>
-d,--debug Debug flag.
-h,--help Print help for CyberShake_HF_DAXGen
-mf <merge_frequency> Frequency at which to merge the LF and HF seismograms.
-nd,--no-duration Omit duration calculations.
-nls,--no-low-site-response Omit site response calculation for low-frequency seismograms.
-nr,--no-rotd Omit RotD calculations.
-nsr,--no-site-response Omit site response calculation.
</pre>

<b>Input files:</b> None

<b>Output files:</b> DAX files (schema is defined here: https://pegasus.isi.edu/documentation/schemas/dax-3.6/dax-3.6.html). Specifically, CyberShake_Stoch_<site>_top.dax and CyberShake_Stoch_<site>_DB_Products.dax.

==== create_pp_wf.sh ====

<b>Purpose:</b> To create the DAX files for a deterministic-only post-processing workflow.

<b>Detailed description:</b> create_pp_wf.sh takes the command-line arguments and, by querying the database, determines a Run ID to assign to the run. The entry corresponding to this run in the database is either updated or created. This Run ID is then added to the file <site short name>_PP_dax/run_table.txt. The script then compiles the DAX Generator (in case any changes have been made) and runs it with the correct command-line arguments, creating the DAXes. Next, a directory, <site short name>_PP_dax/run_<run id>, is created, and the DAX files are moved into that directory. Finally, the run in the database is edited to reflect that creation is complete.

<b>Needs to be changed if:</b>
#New velocity models are added. The wrapper encodes a mapping between a string and the velocity model ID in lines 110-130, and a new entry must be added for a new model.
#New science parameters need to be tracked. Currently the site name, ERF ID, SGT variation ID, rupture variation scenario ID, velocity model ID, frequency, and source frequency are used. If new parameters are important, they would need to be captured, included in the run_table file, and passed to the Run Manager (which would also need to be edited to include them).
#Arguments to the DAX Generator change.

<b>Source code location:</b> http://source.usc.edu/svn/cybershake/import/trunk/runs/create_pp_wf.sh

<b>Author:</b> Scott Callaghan

<b>Dependencies:</b> [[CyberShake_Workflow_Framework#RunManager|Run Manager]]

<b>Executable chain:</b>
create_pp_wf.sh
CyberShake_PP_DAXGen

<b>Compile instructions:</b> None

<b>Usage:</b>
<pre>
Usage: ./create_pp_wf.sh [-h] <-v VELOCITY MODEL> <-e ERF_ID> <-r RV_ID> <-g SGT_ID> <-f FREQ> <-s SITE> [-q SRC_FREQ] [--ppargs DAX generator args]
-h display this help and exit
-v VELOCITY_MODEL select velocity model, one of v4 (CVM-S4), vh (CVM-H), vsi (CVM-S4.26), vs1 (SCEC 1D model), vhng (CVM-H, no GTL), or vbbp (BBP 1D model).
-e ERF_ID ERF ID
-r RUP_VAR_ID Rupture Variation ID
-g SGT_ID SGT ID
-f FREQ Simulation frequency (0.5 or 1.0 supported)
-s SITE Site short name
-q SRC_FREQ Optional: SGT source filter frequency

Can be followed by optional PP arguments:
usage: CyberShake_PP_DAXGen <runID> <directory>
-d,--debug Debug flag.
-ds,--direct-synth Use DirectSynth code instead of extract_sgt and SeisPSA to perform post-processing.
-du,--duration Calculate duration metrics and insert them into the database.
-ff,--file-forward Use file-forwarding option. Requires PMC.
-ge,--global-extract-mpi Use 1 extract SGT MPI job, run as part of pre workflow.
-h,--help Print help for CyberShake_PP_DAXGen
-hf <high-frequency> Lower-bound frequency cutoff for stochastic high-frequency seismograms (default 1.0), required for high frequency run
-k,--skip-md5 Skip md5 checksum step. This option should only be used when debugging.
-lm,--large-mem Use version of SeisPSA which can handle ruptures with large numbers of points.
-mr <factor> Use SeisPSA version which supports multiple synthesis tasks per invocation; number of seis_psa jobs per invocation.
-nb,--nonblocking-md5 Move md5 checksum step out of the critical path. Entire workflow will still abort on error.
-nc,--no-mpi-cluster Do not use pegasus-mpi-cluster
-nf,--no-forward Use no forwarding.
-nh,--no-hierarchy Use directory hierarchy on compute resource for seismograms and PSA files.
-nh,--no-hf-synth Use separate executables for high-frequency srf2stoch and hfsim, rather than hfsynth
-ni,--no-insert Don't insert ruptures into database (used for testing)
-nm,--no-mergepsa Use separate executables for merging broadband seismograms and PSA, rather than mergePSA
-ns,--no-seispsa Use separate executables for both synthesis and PSA
-p <partitions> Number of partitions to create.
-ps <pp_site> Site to run PP workflows on (optional)
-q,--sql Create sqlite file containing (source, rupture, rv) to sub workflow mapping
-r,--rotd Calculate RotD50, the RotD50 angle, and RotD100 for rupture variations and insert them into the database.
-s,--separate-zip Run zip jobs as separate seismogram and PSA zip jobs.
-se,--serial-extract Use serial version of extraction code rather than extract SGT MPI.
-sf,--source-forward Aggregate files at the source level instead of the default rupture level.
-sp <spacing> Override the default grid spacing, in km.
-sr,--server <server> Server to use for site parameters and to insert PSA values into
-z,--zip Zip seismogram and PSA files before transferring.
</pre>
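As we read the -mr help text, the factor sets how many seis_psa tasks are bundled into one invocation. Under that reading, the number of invocations is a ceiling division. The Python sketch below shows the grouping. It is our interpretation of the option, not code from CyberShake_PP_DAXGen.

```python
def group_tasks(tasks, factor):
    """Split tasks into consecutive groups of at most `factor` items each."""
    if factor < 1:
        raise ValueError("factor must be at least 1")
    return [tasks[i:i + factor] for i in range(0, len(tasks), factor)]


def invocation_count(n_tasks, factor):
    """Number of invocations needed: ceil(n_tasks / factor), using integer math."""
    if factor < 1:
        raise ValueError("factor must be at least 1")
    return -(-n_tasks // factor)
```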

| + | |||

| + | <b>Input files:</b> None | ||

| + | |||

| + | <b>Output files:</b> DAX files (schema is defined here: https://pegasus.isi.edu/documentation/schemas/dax-3.6/dax-3.6.html). Specifically, CyberShake_<site>.dax, CyberShake_<site>_pre.dax, CyberShake_<site>_Synth.dax, CyberShake_<site>_DB_Products.dax, and CyberShake_<site>_post.dax. | ||

| + | |||

| + | ==== create_full_wf.sh ==== | ||

| + | |||

| + | <b>Purpose:</b> To create the DAX files for a deterministic-only full (SGT + post-processing) workflow. | ||

| + | |||

| + | <b>Detailed description:</b> create_full_wf.sh takes the command-line arguments and, by querying the database determines a Run ID to assign to the run. The entry corresponding to this run in the database is either updated or created. This run ID is then added to the file <site short name>_Integrated_dax/run_table.txt. It then compiles the DAX Generator (in case any changes have been made) and runs it with the correct command-line arguments, including a low-frequency run ID to be augmented, and the DAXes are created. Next, a directory, <site short name>_Integrated_dax/run_<run id>, is created, and the DAX files are moved into that directory. Finally, the run in the database is edited to reflect that creation is complete. | ||

| + | |||

| + | <b>Needs to be changed if:</b> | ||

| + | #New velocity models are added. The wrapper encodes a mapping between a string and the velocity model ID in lines 107-129, and a new entry must be added for a new model. | ||

| + | #New science parameters need to be tracked. Currently the site name, ERF id, SGT variation id, rupture variation scenario id, velocity model id, frequency, and source frequency are used. If new parameters are important, they would need to be captured, included in the run_table file, and passed to the Run Manager (which would also need to be edited to include it). | ||

| + | #Arguments to the DAX Generator change. | ||

| + | |||

| + | <b>Source code location:</b> http://source.usc.edu/svn/cybershake/import/trunk/runs/create_full_wf.sh | ||

| + | |||

| + | <b>Author:</b> Scott Callaghan | ||

| + | |||

| + | <b>Dependencies:</b> [[CyberShake_Workflow_Framework#RunManager|Run Manager]] | ||

| + | |||

| + | <b>Executable chain:</b> | ||

| + | create_full_wf.sh | ||

| + | CyberShake_Integrated_DAXGen | ||

| + | |||

| + | <b>Compile instructions:</b>None | ||

| + | |||

| + | <b>Usage:</b> | ||

| + | <pre> | ||

| + | Usage: ./create_full_wf.sh [-h] <-v VELOCITY_MODEL> <-e ERF_ID> <-r RUP_VAR_ID> <-g SGT_ID> <-f FREQ> <-s SITE> [-q SRC_FREQ] [--sgtargs <SGT dax generator args>] [--ppargs <post-processing args>] | ||

| + | -h display this help and exit | ||

| + | -v VELOCITY_MODEL select velocity model, one of v4 (CVM-S4), vh (CVM-H), vsi (CVM-S4.26), vs1 (SCEC 1D model), vhng (CVM-H, no GTL), vbbp (BBP 1D model), vcca1d (CCA 1D model), or vcca (CCA-06 3D model). | ||

| + | -e ERF_ID ERF ID | ||

| + | -r RUP_VAR_ID Rupture Variation ID | ||

| + | -g SGT_ID SGT ID | ||

| + | -f FREQ Simulation frequency (0.5 or 1.0 supported) | ||

| + | -s SITE Site short name | ||

| + | -q SRC_FREQ Optional: SGT source filter frequency | ||

| + | |||

| + | Can be followed by optional arguments: | ||

| + | usage: CyberShake_Integrated_DAXGen <output filename> <destination | ||

| + | directory> [options] [-f <runID file, one per line> | -r <runID1> <runID2> | ||

| + | ... ] | ||

| + | -h,--help Print help for CyberShake_Integrated_DAXGen | ||

| + | --ppargs <ppargs> Arguments to pass through to post-processing. | ||

| + | -rf <runID_file> File containing list of Run IDs to use. | ||

| + | -rl <runID_list> List of Run IDs to use. | ||

| + | --server <server> Server to use for site parameters and to insert | ||

| + | PSA values into | ||

| + | --sgtargs <sgtargs> Arguments to pass through to SGT workflow. | ||

| + | SGT DAX Generator arguments: | ||

| + | usage: CyberShake_SGT_DAXGen <output filename> <destination directory> | ||

| + | [options] [-f <runID file, one per line> | -r <runID1> <runID2> ... ] | ||

| + | -d,--handoff Run handoff job, which puts SGT into pending file | ||

| + | on shock when completed. | ||

| + | -f <runID_file> File containing list of Run IDs to use. | ||

| + | -h,--help Print help for CyberShake_SGT_DAXGen | ||

| + | -mc <max_cores> Maximum number of cores to use for AWP SGT code. | ||

| + | -mv <minvs> Override the minimum Vs value | ||

| + | -ns,--no-smoothing Turn off smoothing (default is to smooth) | ||

| + | -r <runID_list> List of Run IDs to use. | ||

| + | -sm,--separate-md5 Run md5 jobs separately from PostAWP jobs | ||

| + | (default is to combine). | ||

| + | -sp <spacing> Override the default grid spacing, in km. | ||

| + | -sr,--server <server> Server to use for site parameters and to insert | ||

| + | PSA values into | ||

| + | -ss <sgt_site> Site to run SGT workflows on (optional) | ||

| + | -sv,--split-velocity Use separate velocity generation and merge jobs | ||

| + | (default is to use combined job) | ||

| + | PP DAX Generator arguments: | ||

| + | usage: CyberShake_PP_DAXGen <runID> <directory> | ||

| + | -d,--debug Debug flag. | ||

| + | -ds,--direct-synth Use DirectSynth code instead of extract_sgt | ||

| + | and SeisPSA to perform post-processing. | ||

| + | -du,--duration Calculate duration metrics and insert them | ||

| + | into the database. | ||

| + | -ff,--file-forward Use file-forwarding option. Requires PMC. | ||

| + | -ge,--global-extract-mpi Use 1 extract SGT MPI job, run as part of pre | ||

| + | workflow. | ||

| + | -h,--help Print help for CyberShake_PP_DAXGen | ||

| + | -hf <high-frequency> Lower-bound frequency cutoff for stochastic | ||

| + | high-frequency seismograms (default 1.0), required for high frequency run | ||

| + | -k,--skip-md5 Skip md5 checksum step. This option should | ||

| + | only be used when debugging. | ||

| + | -lm,--large-mem Use version of SeisPSA which can handle | ||

| + | ruptures with large numbers of points. | ||

| + | -mr <factor> Use SeisPSA version which supports multiple | ||

| + | synthesis tasks per invocation; number of seis_psa jobs per invocation. | ||

| + | -nb,--nonblocking-md5 Move md5 checksum step out of the critical | ||

| + | path. Entire workflow will still abort on error. | ||

| + | -nc,--no-mpi-cluster Do not use pegasus-mpi-cluster | ||

| + | -nf,--no-forward Use no forwarding. | ||

| + | -nh,--no-hierarchy Use directory hierarchy on compute resource | ||

| + | for seismograms and PSA files. | ||

| + | -nh,--no-hf-synth Use separate executables for high-frequency | ||

| + | srf2stoch and hfsim, rather than hfsynth | ||

| + | -ni,--no-insert Don't insert ruptures into database (used for | ||

| + | testing) | ||

| + | -nm,--no-mergepsa Use separate executables for merging broadband | ||

| + | seismograms and PSA, rather than mergePSA | ||

| + | -ns,--no-seispsa Use separate executables for both synthesis | ||

| + | and PSA | ||

| + | -p <partitions> Number of partitions to create. | ||

| + | -ps <pp_site> Site to run PP workflows on (optional) | ||

| + | -q,--sql Create sqlite file containing (source, | ||

| + | rupture, rv) to sub workflow mapping | ||

| + | -r,--rotd Calculate RotD50, the RotD50 angle, and | ||

| + | RotD100 for rupture variations and insert them into the database. | ||

| + | -s,--separate-zip Run zip jobs as separate seismogram and PSA | ||

| + | zip jobs. | ||

| + | -se,--serial-extract Use serial version of extraction code rather | ||

| + | than extract SGT MPI. | ||

| + | -sf,--source-forward Aggregate files at the source level instead of | ||

| + | the default rupture level. | ||

| + | -sp <spacing> Override the default grid spacing, in km. | ||

| + | -sr,--server <server> Server to use for site parameters and to | ||

| + | insert PSA values into | ||

| + | -z,--zip Zip seismogram and PSA files before | ||

| + | transferring. | ||

| + | </pre> | ||

| + | |||

| + | <b>Input files:</b> None | ||

| + | |||

| + | <b>Output files:</b> DAX files (the schema is defined here: https://pegasus.isi.edu/documentation/schemas/dax-3.6/dax-3.6.html). Specifically: CyberShake_Integrated_<site>.dax, CyberShake_<site>_pre.dax, CyberShake_<site>_Synth.dax, CyberShake_<site>_DB_Products.dax, and CyberShake_<site>_post.dax. | ||
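Before planning, it can help to confirm that all of these DAX files were actually written. The following Python sketch is not part of the CyberShake tools. Its function names are hypothetical, and it assumes that <site> in the names above is replaced verbatim by the site short name (for example PAS).

```python
# Sketch: names of the DAX files the generator is documented to write for one site.
# Assumption: <site> in the documented names is the site short name (e.g. "PAS"), substituted verbatim.
import os
from typing import List


def expected_dax_files(site: str) -> List[str]:
    """Return the five DAX file names listed under 'Output files' for this site."""
    return [
        f"CyberShake_Integrated_{site}.dax",
        f"CyberShake_{site}_pre.dax",
        f"CyberShake_{site}_Synth.dax",
        f"CyberShake_{site}_DB_Products.dax",
        f"CyberShake_{site}_post.dax",
    ]


def missing_dax_files(site: str, directory: str = ".") -> List[str]:
    """List expected DAX files that are not present in `directory` (hypothetical pre-planning check)."""
    return [name for name in expected_dax_files(site)
            if not os.path.exists(os.path.join(directory, name))]


if __name__ == "__main__":
    print(missing_dax_files("PAS"))
```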

| + | |||

| + | === Sample invocations === | ||

| + | |||

| + | Below are sample invocations used for Study 15.4, Study 15.12, and Study 17.3, to demonstrate how the wrapper scripts are commonly used. | ||

| + | |||

| + | |||

| + | ==== Study 15.4 (1 Hz deterministic) ==== | ||

| + | |||

| + | SGT workflow: | ||

| + | ./create_sgt_dax.sh -s PAS -v vsi -e 36 -r 6 -g 8 -f 1.0 -q 2.0 --sgtargs -sm | ||

| + | *Site PAS | ||

| + | *Velocity model CVM-S4.26 | ||

| + | *ERF ID 36 (UCERF 2, 200m spacing) | ||

| + | *Rup Var Scenario ID 6 (G&P 2014, uniform hypocenters) | ||

| + | *SGT Variation ID 8 (AWP-ODC-SGT, GPU version) | ||

| + | *Maximum frequency of 1 Hz | ||

| + | *SGT source filtered at 2 Hz | ||

| + | *Separate md5sums from the PostAWP job (this was needed only for Titan because of limits on the runtime for small jobs) | ||

| + | |||

| + | Integrated workflow: | ||

| + | ./create_full_wf.sh -s PAS -v vsi -e 36 -r 6 -g 8 -f 1.0 -q 2.0 --sgtargs -ss titan -sm --ppargs -ps bluewaters -ds -nb -r | ||

| + | *Site PAS | ||

| + | *Velocity model CVM-S4.26 | ||

| + | *ERF ID 36 (UCERF 2, 200m spacing) | ||

| + | *Rup Var Scenario ID 6 (G&P 2014, uniform hypocenters) | ||

| + | *SGT Variation ID 8 (AWP-ODC-SGT, GPU version) | ||

| + | *Maximum frequency of 1 Hz | ||

| + | *SGT source filtered at 2 Hz | ||

| + | *Using titan for SGT site (used when we plan the AWP sub-workflow, and to update the database) | ||

| + | *Separate md5sums from the PostAWP job (this was needed only for Titan because of limits on the runtime for small jobs) | ||

| + | *Using bluewaters for PP site (used when planning some of the post-processing workflows, and to set the value in the database correctly) | ||

| + | *Use the DirectSynth code for post-processing | ||

| + | *Use non-blocking MD5sum checks in the post-processing workflow | ||

| + | *Calculate RotD as well as component PSA. | ||

| + | |||

| + | ==== Study 15.12 (10 Hz stochastic) ==== | ||

| + | |||

| + | Stoch workflow: | ||

| + | ./create_stoch_wf.sh -s PAS -l 3878 -e 36 -r 6 -f 1.0 --daxargs -mf 1.0 | ||

| + | *Site PAS | ||

| + | *Use Run ID 3878 for low-frequency deterministic | ||

| + | *ERF ID 36 (UCERF 2, 200m spacing) | ||

| + | *Rup Var Scenario ID 6 (G&P 2014, uniform hypocenters) | ||

| + | *Merge deterministic and stochastic results at 1.0 Hz (the merge frequency must also be passed to the DAX generator, here as --daxargs -mf 1.0) | ||

| + | |||

| + | ==== Study 17.3 (1 Hz deterministic, different spacing and minimum Vs) ==== | ||

| + | |||

| + | SGT workflow: | ||

| + | ./create_sgt_dax.sh -s OSI -v vcca -e 36 -r 6 -g 8 -f 1.0 -q 2.0 -mv 900.0 -sp 0.175 -d --server moment.usc.edu | ||

| + | *Site OSI | ||

| + | *Velocity model CCA-06 | ||

| + | *ERF ID 36 (UCERF 2, 200m spacing) | ||

| + | *Rup Var Scenario ID 6 (G&P 2014, uniform hypocenters) | ||

| + | *SGT Variation ID 8 (AWP-ODC-SGT, GPU version) | ||

| + | *Maximum frequency of 1 Hz | ||

| + | *SGT source filtered at 2 Hz | ||

| + | *Minimum Vs of 900 m/s | ||

| + | *Grid spacing of 0.175 km | ||

| + | *Include a handoff job, so that when the SGT workflow completes it is put back into the site queue for post-processing | ||

| + | *Use moment.usc.edu as the run server | ||

| + | |||

| + | PP workflow: | ||

| + | ./create_pp_wf.sh -s OSI -v vcca1d -e 36 -r 6 -g 8 -f 1.0 -q 2.0 --ppargs -ds -du -nb -r -sp 0.175 --server moment.usc.edu | ||

| + | *Site OSI | ||

| + | *Velocity model CCA 1D | ||

| + | *ERF ID 36 (UCERF 2, 200m spacing) | ||

| + | *Rup Var Scenario ID 6 (G&P 2014, uniform hypocenters) | ||

| + | *SGT Variation ID 8 (AWP-ODC-SGT, GPU version) | ||

| + | *Maximum frequency of 1 Hz | ||

| + | *SGT source filtered at 2 Hz | ||

| + | *Use the DirectSynth code for post-processing | ||

| + | *Calculate durations | ||

| + | *Use non-blocking MD5sum checks in the post-processing workflow | ||

| + | *Calculate RotD as well as component PSA | ||

| + | *Grid spacing of 0.175 km | ||

| + | *Use moment.usc.edu as the run server | ||

| + | |||

| + | Integrated workflow: | ||

| + | ./create_full_wf.sh -s OSI -v vcca -e 36 -r 6 -g 8 -f 1.0 -q 2.0 --server moment.usc.edu --sgtargs -ss titan -mv 900.0 -sp 0.175 --ppargs -ps titan -ds -du -nb -r -sp 0.175 | ||

| + | *Site OSI | ||

| + | *Velocity model CCA-06 | ||

| + | *ERF ID 36 (UCERF 2, 200m spacing) | ||

| + | *Rup Var Scenario ID 6 (G&P 2014, uniform hypocenters) | ||

| + | *SGT Variation ID 8 (AWP-ODC-SGT, GPU version) | ||

| + | *Maximum frequency of 1 Hz | ||

| + | *SGT source filtered at 2 Hz | ||

| + | *Use moment.usc.edu as the run server | ||

| + | *Use Titan for the SGT workflow | ||

| + | *Minimum Vs of 900 m/s | ||

| + | *Grid spacing of 0.175 km (SGT workflow) | ||

| + | *Use Titan for the PP workflow | ||

| + | *Use the DirectSynth code for post-processing | ||

| + | *Calculate durations | ||

| + | *Use non-blocking MD5sum checks in the post-processing workflow | ||

| + | *Calculate RotD as well as component PSA | ||

| + | *Grid spacing of 0.175 km (PP workflow) | ||
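The wrappers above take one set of arguments for themselves, followed by groups introduced by --sgtargs, --ppargs, or --daxargs. The hypothetical Python sketch below illustrates how such a command line breaks down. It assumes each group runs until the next separator flag, which is a reading of the sample invocations and not of the wrappers' source. Applied to the Study 17.3 integrated command, it shows why -sp 0.175 appears twice: once in the SGT group and once in the PP group.

```python
# Hypothetical sketch: group a create_*_wf.sh argument list by the separator flags seen in
# the sample invocations. Assumption: options before the first separator belong to the
# wrapper itself, and each separator's group runs until the next separator.
from typing import Dict, List

SEPARATORS = ("--sgtargs", "--ppargs", "--daxargs")


def split_wrapper_args(argv: List[str]) -> Dict[str, List[str]]:
    groups: Dict[str, List[str]] = {"wrapper": []}
    current = "wrapper"
    for arg in argv:
        if arg in SEPARATORS:
            current = arg.lstrip("-")  # "--sgtargs" -> "sgtargs"
            groups.setdefault(current, [])
        else:
            groups[current].append(arg)
    return groups


if __name__ == "__main__":
    cmd = ("-s OSI -v vcca -e 36 -r 6 -g 8 -f 1.0 -q 2.0 --server moment.usc.edu "
           "--sgtargs -ss titan -mv 900.0 -sp 0.175 "
           "--ppargs -ps titan -ds -du -nb -r -sp 0.175").split()
    for name, args in split_wrapper_args(cmd).items():
        print(f"{name}: {' '.join(args)}")
```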

=== DAX Generator === | === DAX Generator === | ||

| + | The DAX Generator consists of multiple complex Java classes. It supports a large number of arguments. These let the user select the CyberShake science parameters for the run (site, ERF, velocity model, rupture variation scenario ID, frequency, grid spacing, minimum Vs cutoff) and the technical parameters (which post-processing code to use, which SGT code, etc.). Different entry classes support creating just an SGT workflow, just a post-processing (+ data product creation) workflow, or both combined into an integrated workflow. | ||

| + | A detailed overview of the DAX Generator is available here: [[CyberShake DAX Generator]]. | ||

== Plan == | == Plan == | ||

| + | |||

| + | The next stage of workflow preparation is called 'planning'. In this stage, the DAX generated in the creation step is converted into Condor-formatted DAG files. As part of this process, the job and file names in the DAX are converted into specific paths in the DAG. Pegasus also wraps every executable in kickstart, which gathers runtime information about the job. In addition, Pegasus adds auxiliary jobs for tasks such as registration, directory creation, and staging. | ||

| + | |||

| + | === Catalogs === | ||

| + | |||

| + | Before you can successfully plan a workflow, you need catalogs. These tell Pegasus how to resolve logical names into physical ones, and also set up any site-specific configuration information. There are three catalogs to consider: | ||

| + | #Transformation Catalog | ||

| + | #Site Catalog | ||

| + | #Replica Catalog | ||

| + | |||

| + | ==== Transformation Catalog (TC) ==== | ||

| + | |||

| + | Full documentation on the TC is available in the [https://pegasus.isi.edu/documentation/transformation.php Pegasus documentation]. Here we will provide a brief overview. | ||

| + | |||

| + | Every combination of executable and execution system needs its own entry in the TC, because that entry is how Pegasus replaces the logical name in the DAX with a physical path on that system. We use a text file for the TC; on shock, it is located at /home/scec-02/cybershk/runs/config/tc.txt. | ||

| + | |||

| + | A sample entry in the TC looks like this: | ||

| + | <pre> | ||

| + | tr scec::UCVMMesh:1.0 { | ||

| + | site bluewaters { | ||

| + | pfn "/projects/sciteam/baln/CyberShake/software/UCVM/single_exe.py" | ||

| + | arch "x86_64" | ||

| + | os "LINUX" | ||

| + | type "INSTALLED" | ||

| + | profile globus "host_count" "32" | ||

| + | profile globus "count" "1024" | ||

| + | profile globus "maxwalltime" "45" | ||

| + | profile globus "jobtype" "single" | ||

| + | profile globus "nodetype" "xe" | ||

| + | profile globus "project" "baln" | ||

| + | profile pegasus "cores" "1024" | ||

| + | } | ||

| + | site titan { | ||

| + | pfn "/lustre/atlas/proj-shared/geo112/CyberShake/software/UCVM/single_exe.py" | ||

| + | arch "x86_64" | ||

| + | os "LINUX" | ||

| + | type "INSTALLED" | ||

| + | profile globus "hostcount" "96" | ||

| + | profile globus "count" "1536" | ||

| + | profile globus "maxwalltime" "60" | ||

| + | profile globus "jobtype" "single" | ||

| + | profile globus "account" "GEO112" | ||

| + | profile pegasus "cores" "1536" | ||

| + | } | ||

| + | } | ||

| + | </pre> | ||

| + | |||

| + | The transformation name has the format namespace::jobname:version. These values must match the values specified when the DAX is created, or Pegasus won't recognize them as the same job. | ||
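To check that a DAX job and a TC entry refer to the same transformation, compare all parts of the name. This Python sketch is hypothetical and is not a Pegasus API. It treats the version as optional, because entries such as pegasus::mpiexec are also mentioned on this page.

```python
# Sketch: split a transformation name of the form namespace::jobname:version.
# Hypothetical helper for checking that DAX and TC names agree; not part of Pegasus.
import re
from typing import NamedTuple, Optional


class TransformationName(NamedTuple):
    namespace: str
    name: str
    version: Optional[str]  # None when the name has no ":version" suffix


_TR_NAME = re.compile(r"(?P<namespace>[^:]+)::(?P<name>[^:]+)(?::(?P<version>[^:]+))?")


def parse_transformation_name(full: str) -> TransformationName:
    match = _TR_NAME.fullmatch(full)
    if match is None:
        raise ValueError(f"not in namespace::jobname:version form: {full!r}")
    return TransformationName(match["namespace"], match["name"], match["version"])


def same_transformation(dax_name: str, tc_name: str) -> bool:
    """True only if namespace, job name, and version all match exactly."""
    return parse_transformation_name(dax_name) == parse_transformation_name(tc_name)
```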

| + | |||

| + | The 'pfn', 'arch', 'os', and 'type' entries are required. Of these, normally only 'pfn' (short for Physical File Name) changes between systems. The 'profile globus' entries provide system-specific parameters. For example, you can specify:
*the number of nodes ('hostcount', written 'host_count' in the Blue Waters entry)
*the number of cores ('count')
*the maximum runtime in minutes ('maxwalltime')
*the allocation to charge ('project' on Blue Waters, 'account' on Titan)
'jobtype single' means that the entry point, the Python script single_exe.py, is run serially from the command line. This is what we want, because the Python script calls aprun itself. | ||

| + | |||

| + | If an entry is missing, or the file is not in the correct format, Pegasus will throw an error during planning. | ||
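To catch a missing field before pegasus-plan does, a small checker can read the text TC and report any entry that lacks one of the four required fields. This Python sketch handles only the syntax in the sample above: tr and site blocks, key "value" lines, and profile lines. It does not cover the full Pegasus grammar, and its names are hypothetical.

```python
# Minimal sketch of a checker for the text TC format shown above. It understands only the
# subset of syntax in that sample; it is NOT Pegasus's own parser. Names are hypothetical.
import shlex
from typing import Dict, List

REQUIRED_FIELDS = ("pfn", "arch", "os", "type")

SAMPLE_TC = """
tr scec::UCVMMesh:1.0 {
  site titan {
    pfn "/lustre/atlas/proj-shared/geo112/CyberShake/software/UCVM/single_exe.py"
    arch "x86_64"
    os "LINUX"
    type "INSTALLED"
    profile globus "count" "1536"
    profile pegasus "cores" "1536"
  }
}
"""


def parse_tc(text: str) -> Dict[str, Dict[str, dict]]:
    """Return {transformation: {site: {field: value, "profiles": {(ns, key): value}}}}."""
    catalog: Dict[str, Dict[str, dict]] = {}
    tr = site = None
    for raw in text.splitlines():
        tokens = shlex.split(raw, comments=True)  # strips the double quotes around values
        if not tokens:
            continue
        if tokens[0] == "tr":
            tr = tokens[1]
            catalog[tr] = {}
        elif tokens[0] == "site":
            site = tokens[1]
            catalog[tr][site] = {"profiles": {}}
        elif tokens[0] == "}":
            # The first closing brace ends the site block, the next one ends the tr block.
            if site is not None:
                site = None
            else:
                tr = None
        elif tokens[0] == "profile":
            namespace, key, value = tokens[1:4]
            catalog[tr][site]["profiles"][(namespace, key)] = value
        else:
            catalog[tr][site][tokens[0]] = tokens[1]
    return catalog


def missing_required(catalog: Dict[str, Dict[str, dict]]) -> List[str]:
    """Report every tr/site pair lacking one of the required fields."""
    return [f"{tr} @ {site}: missing {field}"
            for tr, sites in catalog.items()
            for site, entry in sites.items()
            for field in REQUIRED_FIELDS
            if field not in entry]
```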

| + | |||

| + | In addition to the CyberShake jobs, a few Pegasus jobs also have entries in the TC, such as pegasus::mpiexec and pegasus::transfer. This provides a way to specify custom wrappers or configuration for these internal Pegasus jobs. | ||

| + | |||

| + | ==== Site Catalog (SC) ==== | ||

| + | |||

| + | Full documentation of the Site Catalog is available in [https://pegasus.isi.edu/documentation/site.php the Pegasus documentation]. | ||

| + | |||

| + | The Site Catalog contains site-specific information in XML format. Examples include the URLs of the GridFTP endpoints or GRAM servers, the path to the appropriate X509 certificate, where on disk workflows should be executed, and the path to the Pegasus installation. | ||

| + | |||

| + | Here are sample Site Catalog entries for Blue Waters (GRAM) and Titan (rvGAHP): | ||

| + | <pre> | ||

| + | <site handle="bluewaters" arch="x86_64" os="LINUX" osrelease="" osversion="" glibc=""> | ||

| + | <grid type="gt5" contact="h2ologin4.ncsa.illinois.edu:2119/jobmanager-fork" scheduler="Fork" jobtype="auxillary" os="LINUX" arch="x86"/> | ||

| + | <grid type="gt5" contact="h2ologin4.ncsa.illinois.edu:2119/jobmanager-pbs" scheduler="PBS" jobtype="compute" os="LINUX" arch="x86"/> | ||

| + | <directory path="/u/sciteam/scottcal/scratch/CyberShake/execdir" type="shared-scratch" free-size="" total-size=""> | ||

| + | <file-server operation="all" url="gsiftp://bw-gridftp.ncsa.illinois.edu:2811/u/sciteam/scottcal/scratch/CyberShake/execdir"> | ||

| + | </file-server> | ||

| + | </directory> | ||

| + | <directory path="/projects/sciteam/baln/CyberShake" type="shared-storage" free-size="" total-size=""> | ||

| + | <file-server operation="all" url="gsiftp://bw-gridftp.ncsa.illinois.edu:2811/projects/sciteam/baln/CyberShake"> | ||

| + | </file-server> | ||

| + | </directory> | ||

| + | <profile namespace="env" key="PEGASUS_HOME" >/projects/sciteam/baln/CyberShake/utils/pegasus/default</profile> | ||

| + | <profile namespace="env" key="X509_USER_PROXY">/tmp/x509up_u33527</profile> | ||

| + | <profile namespace="env" key="X509_CERT_DIR">/etc/grid-security/certificates</profile> | ||

| + | <profile namespace="pegasus" key="X509_USER_PROXY">/tmp/x509up_u33527</profile> | ||

| + | </site> | ||

| + | <site handle="titan" arch="x86_64" os="LINUX" osrelease="" osversion="" glibc=""> | ||

| + | <directory path="/lustre/atlas/proj-shared/geo112/execdir" type="shared-scratch" free-size="" total-size=""> | ||

| + | <file-server operation="all" url="gsiftp://gridftp.ccs.ornl.gov/lustre/atlas/proj-shared/geo112/execdir"> | ||

| + | </file-server> | ||

| + | </directory> | ||

| + | <directory path="/lustre/atlas/proj-shared/geo112/CyberShake/data" type="shared-storage" free-size="" total-size=""> | ||

| + | <file-server operation="all" url="gsiftp://gridftp.ccs.ornl.gov/lustre/atlas/proj-shared/geo112/CyberShake/data"> | ||

| + | </file-server> | ||

| + | </directory> | ||

| + | <profile namespace="pegasus" key="style">glite</profile> | ||

| + | <profile namespace="pegasus" key="change.dir">true</profile> | ||

| + | <profile namespace="condor" key="grid_resource">batch pbs /tmp/cybershk.titan.sock</profile> | ||

| + | <profile namespace="pegasus" key="queue">batch</profile> | ||

| + | <profile namespace="condor" key="batch_queue">batch</profile> | ||

| + | <profile namespace="env" key="PEGASUS_HOME" >/lustre/atlas/proj-shared/geo112/CyberShake/utils/pegasus/default-service</profile> | ||

| + | <profile namespace="pegasus" key="X509_USER_PROXY" >/tmp/x509up_u7588</profile> | ||

| + | <profile namespace="globus" key="hostcount">1</profile> | ||

| + | <profile namespace="globus" key="maxwalltime">30</profile> | ||

| + | </site> | ||

| + | </pre> | ||

| + | |||

| + | For both systems, we specify directory paths and GridFTP servers for shared-scratch and shared-storage, which Pegasus expects. We also provide paths to PEGASUS_HOME and the X509_USER_PROXY. On Blue Waters, we also provide URLs for the GRAM endpoints, and specify X509_CERT_DIR, the directory containing the grid certificates. On Titan, we add some special configuration keys to enable rvGAHP. We also provide default values for hostcount and maxwalltime, which are overridden by the values in the TC. The SC uses the same 'namespace key value' profile idea as the TC. | ||

| + | |||

| + | Every computational resource you wish to run on, and every site listed in the TC, must have an entry in the SC. There are several versions of the SC on shock, but the current one is at /home/scec-02/cybershk/runs/config/sites.xml.full . | ||
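Because every site in the TC needs a matching SC entry, this cross-check can be scripted. The Python sketch below uses the standard xml.etree.ElementTree module to summarize each site's directories and profiles, then lists TC sites that have no SC entry. It assumes site elements shaped like the samples above, and the helper names are hypothetical.

```python
# Sketch: summarize a site catalog and cross-check it against the sites named in the TC.
# Assumptions: <site> elements like the samples above (with or without a default xmlns);
# helper names are hypothetical.
import xml.etree.ElementTree as ET
from typing import Dict, Iterable, List


def _local(tag: str) -> str:
    """Drop any '{namespace}' prefix ElementTree adds to tag names."""
    return tag.rsplit("}", 1)[-1]


def summarize_sites(xml_text: str) -> Dict[str, dict]:
    root = ET.fromstring(xml_text)
    summary: Dict[str, dict] = {}
    for site in root.iter():
        if _local(site.tag) != "site":
            continue
        info: dict = {"directories": {}, "profiles": {}}
        for child in site:
            tag = _local(child.tag)
            if tag == "directory":
                info["directories"][child.get("type")] = child.get("path")
            elif tag == "profile":
                key = (child.get("namespace"), child.get("key"))
                info["profiles"][key] = (child.text or "").strip()
        summary[site.get("handle")] = info
    return summary


def sites_missing_from_sc(tc_sites: Iterable[str], summary: Dict[str, dict]) -> List[str]:
    """Every site used in the TC must also appear in the SC; return those that do not."""
    return sorted(set(tc_sites) - set(summary))
```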

| + | |||

| + | ==== Replica Catalog (RC) ==== | ||

| + | |||

| + | Full documentation available at [https://pegasus.isi.edu/documentation/replica.php the Pegasus documentation]. | ||

| + | |||

| + | The Replica Catalog keeps track of where the input and output data files used in Pegasus workflows (the ones you specify in the DAX generator) are located. It does this through mappings between logical file names (LFNs) and physical file names (PFNs). We also tag each entry with the remote system it is located on (pool=<remote system>), so that Pegasus knows which system holds the file. The string used for the remote system must match the string used in the TC and SC. | ||

| + | |||

| + | For example, an entry in the RC might be: | ||

| + | <pre> | ||

| + | e36_rv6_0_0.txt gsiftp://bw-gridftp.ncsa.illinois.edu/projects/sciteam/baln/CyberShake/ruptures/Ruptures_erf36/0/0/0_0.txt site="bluewaters" | ||

| + | e36_rv6_0_0.txt gsiftp://gridftp.ccs.ornl.gov/lustre/atlas/proj-shared/geo112/CyberShake/ruptures/Ruptures_erf36/0/0/0_0.txt site="titan" | ||

| + | </pre> | ||

| + | |||

| + | This is an entry for one of the rupture geometry files, 'e36_rv6_0_0.txt'. There are two locations for this file, one on Blue Waters and one on Titan. When a workflow is planned, Pegasus looks up each LFN specified in the DAX in the RC to determine where that input file is located relative to the system you want to run on. If the file is already on the execution system, Pegasus makes a symlink; if not, it stages the file in. This means that every external input file (any file not generated as part of the workflow) must have at least one entry in the RC, or you'll get an error. | ||

| + | |||

| + | The tool for interacting with the RC is <b>pegasus-rc-client</b>, part of the Pegasus software. It supports lookups, insertions, and deletions. Typically, we produce a file in the following format: | ||

| + | <pre> | ||

| + | <lfn> <pfn> pool=<remote system name> | ||

| + | </pre> | ||

| + | |||

| + | This file can then be given to pegasus-rc-client for bulk additions. <b>This is required every time we create a new set of rupture geometries (a new ERF ID and rupture variation scenario ID). It is also required for individual SRFs if we are not generating them in memory. It should be done for each execution system to avoid extra file transfers.</b> | ||
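A bulk file for rupture geometries can be generated from the naming pattern in the RC example above: e{ERF}_rv{RV}_{source}_{rupture}.txt under Ruptures_erf{ERF}/{source}/{rupture}/. The Python sketch below assumes that this pattern and the two GridFTP roots from the example hold for all ruptures. The list of (source, rupture) pairs must come from elsewhere, for example the database. The attribute name is a parameter because this page uses both pool= and site=; check which form your pegasus-rc-client version expects.

```python
# Hypothetical sketch: emit "<lfn> <pfn> pool=..." lines for rupture geometry files,
# one line per (source, rupture) per execution system. Paths follow the RC example above;
# assuming they apply to every rupture is an assumption.
from typing import Iterable, List, Tuple

RUPTURE_ROOTS = {
    "bluewaters": "gsiftp://bw-gridftp.ncsa.illinois.edu/projects/sciteam/baln/CyberShake/ruptures",
    "titan": "gsiftp://gridftp.ccs.ornl.gov/lustre/atlas/proj-shared/geo112/CyberShake/ruptures",
}


def rupture_rc_lines(erf_id: int, rv_id: int,
                     ruptures: Iterable[Tuple[int, int]],
                     attr: str = "pool") -> List[str]:
    lines = []
    for source_id, rupture_id in ruptures:
        lfn = f"e{erf_id}_rv{rv_id}_{source_id}_{rupture_id}.txt"
        for system, root in RUPTURE_ROOTS.items():
            pfn = f"{root}/Ruptures_erf{erf_id}/{source_id}/{rupture_id}/{source_id}_{rupture_id}.txt"
            lines.append(f'{lfn} {pfn} {attr}="{system}"')
    return lines


if __name__ == "__main__":
    print("\n".join(rupture_rc_lines(36, 6, [(0, 0), (0, 1)])))
```

Redirect the output to a file and hand that file to pegasus-rc-client's bulk insert mode. See the pegasus-rc-client documentation for the exact option.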

| + | |||

| + | The RC is also responsible for outputs. To simplify telling Pegasus where files should be transferred, we use regular expressions to express where the output files should go. A file in the format <lfn> <pfn> pool=<system> regex="true" is used to specify the outputs for the different file types without needing to include each output file explicitly. Full details about this file format can be found in the Pegasus documentation [https://pegasus.isi.edu/documentation/replica.php#rc-regex here.] | ||

| + | |||

| + | Here are the current contents of the output regex (at /home/scec-02/cybershk/runs/config/rc.regex.output): | ||

| + | <pre> | ||

| + | "(\\w+)_f[xyz]_(\\d+)\\.sgt.*" gsiftp://bw-gridftp.ncsa.illinois.edu:2811/scratch/sciteam/scottcal/SGT_Storage/[1]/[0] pool="bluewaters" regex="true" | ||

| + | "Seismogram_(\\w+)_(\\d+)_(\\d+)_(\\d+)\\.grm" gsiftp://hpc-transfer.usc.edu/home/scec-04/tera3d/CyberShake/data/PPFiles/[1]/[2]/Seismogram_[1]_[3]_[4].grm pool="shock" regex="true" | ||

| + | "PeakVals_(\\w+)_(\\d+)_(\\d+)_(\\d+)\\.bsa" gsiftp://hpc-transfer.usc.edu/home/scec-04/tera3d/CyberShake/data/PPFiles/[1]/[2]/PeakVals_[1]_[3]_[4].bsa pool="shock" regex="true" | ||

| + | "RotD_(\\w+)_(\\d+)_(\\d+)_(\\d+)\\.rotd" gsiftp://hpc-transfer.usc.edu/home/scec-04/tera3d/CyberShake/data/PPFiles/[1]/[2]/RotD_[1]_[3]_[4].rotd pool="shock" regex="true" | ||

| + | "Duration_(\\w+)_(\\d+)_(\\d+)_(\\d+)\\.dur" gsiftp://hpc-transfer.usc.edu/home/scec-04/tera3d/CyberShake/data/PPFiles/[1]/[2]/Duration_[1]_[3]_[4].dur pool="shock" regex="true" | ||

| + | "(\\d+)/Seismogram_(\\w+)_(\\d+)_(\\d+)_(\\d+)_bb\\.grm" gsiftp://hpc-transfer.usc.edu/home/scec-04/tera3d/CyberShake/data/PPFiles/[2]/[3]/Seismogram_[2]_[4]_[5]_bb.grm pool="shock" regex="true" | ||

| + | "(\\d+)/PeakVals_(\\w+)_(\\d+)_(\\d+)_(\\d+)_bb\\.bsa" gsiftp://hpc-transfer.usc.edu/home/scec-04/tera3d/CyberShake/data/PPFiles/[2]/[3]/PeakVals_[2]_[4]_[5].bsa pool="shock" regex="true" | ||

| + | "(\\d+)/RotD_(\\w+)_(\\d+)_(\\d+)_(\\d+)_bb\\.rotd" gsiftp://hpc-transfer.usc.edu/home/scec-04/tera3d/CyberShake/data/PPFiles/[2]/[3]/RotD_[2]_[4]_[5].rotd pool="shock" regex="true" | ||

| + | "(\\d+)/Duration_(\\w+)_(\\d+)_(\\d+)_(\\d+)_bb\\.dur" gsiftp://hpc-transfer.usc.edu/home/scec-04/tera3d/CyberShake/data/PPFiles/[2]/[3]/Duration_[2]_[4]_[5].dur pool="shock" regex="true" | ||

| + | "(\\d+)/Seismogram_(\\w+)_(\\d+)_(\\d+)_(\\d+)_site_response\\.grm" gsiftp://hpc-transfer.usc.edu/home/scec-04/tera3d/CyberShake/data/PPFiles/[2]/[3]/Seismogram_[2]_[4]_[5]_det_site_response.grm pool="shock" regex="true" | ||

| + | </pre> | ||

| + | |||

| + | The first entry is for SGTs. It is followed by the low-frequency (LF) entries, then the broadband (BB) entries, and finally the site response entry. In the PFN templates, [0] refers to the entire matched string, and [1], [2], etc. refer to the text matched by the first set of parentheses, the second set, and so on. That way we can preserve the site, source, and rupture information in the output file name. | ||
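To see how a regex entry turns an output LFN into a PFN, the substitution can be reproduced in Python. This sketch copies two of the rules above, with the file's doubled backslashes written as single ones. It rests on several assumptions about Pegasus's behavior and the file naming, none of which is documented on this page:
*the whole LFN must match;
*the first matching rule wins;
*the Seismogram groups are site, run ID, source ID, and rupture ID.

```python
# Sketch of regex-RC substitution for two rules copied from rc.regex.output.
# Assumptions: the whole LFN must match, the first matching rule wins, and [N] in the
# template means "text of group N" ([0] = whole match). Not Pegasus's own code.
import re
from typing import List, Optional, Tuple

BASE = "gsiftp://hpc-transfer.usc.edu/home/scec-04/tera3d/CyberShake/data/PPFiles"

REGEX_RULES: List[Tuple[str, str]] = [
    (r"(\w+)_f[xyz]_(\d+)\.sgt.*",
     "gsiftp://bw-gridftp.ncsa.illinois.edu:2811/scratch/sciteam/scottcal/SGT_Storage/[1]/[0]"),
    (r"Seismogram_(\w+)_(\d+)_(\d+)_(\d+)\.grm",
     BASE + "/[1]/[2]/Seismogram_[1]_[3]_[4].grm"),
]


def _expand(template: str, match: "re.Match") -> str:
    """Replace each [N] in the PFN template with the text of capture group N."""
    return re.sub(r"\[(\d+)\]", lambda ref: match.group(int(ref.group(1))), template)


def map_output(lfn: str) -> Optional[str]:
    """Return the PFN for an output LFN, or None if no rule matches."""
    for pattern, template in REGEX_RULES:
        match = re.fullmatch(pattern, lfn)
        if match:
            return _expand(template, match)
    return None
```

For example, Seismogram_PAS_3878_12_3.grm maps to .../PPFiles/PAS/3878/Seismogram_PAS_12_3.grm. The second number moves into the directory path, and the last two stay in the file name.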

| + | |||

| + | Pegasus knows to use this file because we point to it in the properties file, described next. | ||

| + | |||

| + | === Properties file === | ||

| + | |||

| + | The properties file is a key input to the planning stage. It specifies how to access the SC, TC, and RC, and also provides other information used in creating and wrapping the workflow tasks. Full documentation is available on [https://pegasus.isi.edu/documentation/configuration.php the Pegasus site]. | ||

| + | |||

| + | Currently we use a few different properties files, depending on which workflow is being generated and on which system. All properties files are on shock, in /home/scec-02/cybershk/runs/config. | ||

| + | *properties.full is the default. | ||

| + | *properties.bluewaters is used for integrated, post-processing, and stochastic workflows on Blue Waters. | ||

| + | *properties.sgt is used for the SGT workflows. | ||

| + | |||

| + | All the properties files share some entries, listed below. These properties have accumulated since CyberShake first began using Pegasus, so some of them may now be deprecated. | ||

| + | |||

| + | *RC access parameters | ||

| + | pegasus.catalog.replica = JDBCRC | ||

| + | pegasus.catalog.replica.db.driver = MySQL | ||

| + | pegasus.catalog.replica.db.url = jdbc:mysql://localhost:3306/replica_catalog | ||

| + | pegasus.catalog.replica.db.user = globus | ||

| + | pegasus.catalog.replica.db.password = GoTrojans! | ||

| + | *SC access parameters | ||

| + | pegasus.catalog.site = XML3 | ||

| + | pegasus.catalog.site.file = /home/scec-02/cybershk/runs/config/sites.xml.full | ||

| + | *TC access parameters | ||

| + | pegasus.catalog.transformation = Text | ||

| + | pegasus.catalog.transformation.file = /home/scec-02/cybershk/runs/config/tc.txt | ||

| + | *When using MPIExec | ||

| + | pegasus.clusterer.job.aggregator = MPIExec | ||

| + | pegasus.clusterer.job.aggregator.seqexec.log = false | ||

| + | *How to map output files to their storage location | ||

| + | pegasus.dir.storage.mapper = Replica | ||

| + | pegasus.dir.storage.mapper.replica = Regex | ||

| + | pegasus.dir.storage.mapper.replica.file = /home/scec-02/cybershk/runs/config/rc.regex.output | ||

| + | pegasus.dir.submit.mapper = Flat | ||

| + | *To add the account argument for running on Titan | ||

| + | pegasus.glite.arguments = -A GEO112 | ||

| + | *Capture events live using monitord (monitord keeps track of provenance and metadata info) | ||

| + | pegasus.monitord.events = true | ||

| + | *DAGMan parameters | ||

| + | dagman.maxidle = 200 | ||

| + | dagman.maxpre = 2 | ||

| + | dagman.retry = 2 | ||

| + | *How to create working directories | ||

| + | pegasus.dir.storage.deep = false | ||

| + | pegasus.dir.useTimestamp = true | ||

| + | pegasus.dir.submit.logs = /scratch | ||

| + | *How many jobs to break stagein and stageout into | ||

| + | pegasus.stagein.clusters = 1 | ||

| + | pegasus.stageout.clusters = 1 | ||

| + | *Which jobs to run exitcode for | ||

| + | pegasus.exitcode.scope = all | ||

| + | *Arguments relating to kickstart | ||

| + | pegasus.gridstart.label = true | ||

| + | pegasus.gridstart.arguments = -q | ||

| + | pegasus.gridstart.kickstart.stat = false | ||

| + | *Sites and parameters to use in data transfer | ||

| + | pegasus.transfer.force = true | ||

| + | pegasus.transfer.inter.thirdparty.sites = hpc,shock,abe,ranger,kraken,stampede | ||

| + | pegasus.transfer.stagein.thirdparty.sites = ranger,kraken,stampede | ||

| + | pegasus.transfer.stageout.thirdparty.sites = ranger,kraken,stampede | ||

| + | pegasus.transfer.*.thirdparty.remote = ranger,kraken,stampede | ||

| + | pegasus.transfer.links = true | ||

| + | pegasus.transfer.refiner = Bundle | ||

| + | pegasus.transfer.stageout.thirdparty.sites = * | ||

| + | pegasus.transfer.throttle.processes = 1 | ||

| + | pegasus.transfer.throttle.streams = 4 | ||

| + | pegasus.transfer.threads = 8 | ||

| + | |||

| + | The following are custom for different properties files: | ||

| + | |||

| + | ==== full properties file ==== | ||

| + | *Cleanup scope and X509 user proxy (Scott's on XSEDE) are used | ||

| + | pegasus.file.cleanup.scope = fullahead | ||

| + | pegasus.local.env=X509_USER_PROXY=/tmp/x509up_u456264 | ||

| + | |||

| + | ==== sgt properties file ==== | ||

| + | *Cleanup scope, X509 user proxy (Scott's on XSEDE), and additional sites added to transfers. | ||

| + | pegasus.file.cleanup.scope = deferred | ||

| + | pegasus.condor.arguments.quote = false | ||

| + | pegasus.local.env=X509_USER_PROXY=/tmp/x509up_u456264 | ||

| + | pegasus.transfer.inter.thirdparty.sites = hpc,shock,abe,ranger,kraken,stampede,bluewaters,titan | ||

| + | pegasus.transfer.stagein.thirdparty.sites = ranger,kraken,stampede,bluewaters,titan | ||

| + | pegasus.transfer.stageout.thirdparty.sites = ranger,kraken,stampede,bluewaters,titan | ||

| + | pegasus.transfer.*.thirdparty.remote = ranger,kraken,stampede,bluewaters,titan | ||

| + | |||

| + | ==== bluewaters properties file ==== | ||

| + | *X509 proxy (Scott's on Blue Waters), how the cache file is used with the RC, other sites | ||

| + | pegasus.local.env=X509_USER_PROXY=/tmp/x509up_u33527 | ||

| + | pegasus.catalog.replica.cache.asrc=true | ||

| + | pegasus.transfer.inter.thirdparty.sites = hpc,mercury,shock,sdsc,abe,ranger,stampede,bluewaters | ||

| + | |||

| + | <b>Needs to be changed if:</b> | ||

| + | #New sites are added (they need to be added to the pegasus.transfer.*.thirdparty.* properties) | ||

| + | #RC, TC, SC catalog locations are changed | ||

| + | #Different grid certificates need to be used | ||
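When debugging which value a property actually ends up with, note that java.util.Properties keeps the last value for a repeated key. The shared entries above set pegasus.transfer.stageout.thirdparty.sites twice: first to ranger,kraken,stampede, and later to *. If Pegasus follows the same rule, the later * wins. The Python sketch below is a simplified reader that applies that last-wins rule and layers a file-specific block over the shared one. It ignores escapes and line continuations. Treating each file as two layers is an assumption about how the files are laid out.

```python
# Simplified reader for "key = value" properties text. Hypothetical helper names;
# escapes and line continuations (which java.util.Properties supports) are ignored.
from typing import Dict


def parse_properties(text: str) -> Dict[str, str]:
    """Parse key/value lines; a repeated key keeps its LAST value, as java.util.Properties does."""
    props: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue  # blank line or comment
        key, sep, value = line.partition("=")  # split on the first '=' only
        if sep:
            props[key.strip()] = value.strip()
    return props


def layered(shared_text: str, specific_text: str) -> Dict[str, str]:
    """Apply a file-specific block (e.g. the sgt entries) on top of the shared entries."""
    merged = parse_properties(shared_text)
    merged.update(parse_properties(specific_text))
    return merged


SHARED = """
pegasus.transfer.stageout.thirdparty.sites = ranger,kraken,stampede
dagman.retry = 2
pegasus.transfer.stageout.thirdparty.sites = *
"""
SGT_SPECIFIC = """
pegasus.file.cleanup.scope = deferred
pegasus.local.env=X509_USER_PROXY=/tmp/x509up_u456264
"""
```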

| + | |||

| + | === Scripts === | ||

| + | |||

| + | As in the Create stage, we run bash scripts which wrap the pegasus-plan command. | ||

| + | |||

| + | ==== SGT workflow ==== | ||

| + | |||

| + | <b>Purpose:</b> To plan an SGT-only workflow. | ||

| + | |||

| + | <b>Detailed description:</b> plan_sgt.sh takes command-line arguments with the DAX to plan, runs pegasus-plan, and updates the record in the database to reflect that planning has occurred. The TC is also recorded. Some changes are made to the properties files as well. For example, pegasus.exitcode.scope is set to none in the top-level properties file, but to all in the subdax files. | ||
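The exitcode.scope adjustment can be sketched as producing two variants of one properties file. This Python sketch is hypothetical: plan_sgt.sh is a bash script and may edit the files differently. The sketch only rewrites the pegasus.exitcode.scope line, or appends one if it is absent.

```python
# Hypothetical sketch: derive top-level and sub-DAX properties text from one base file by
# setting pegasus.exitcode.scope (none at the top level, all in the sub-DAXes).
import re
from typing import Dict

_SCOPE_LINE = re.compile(r"^[ \t]*pegasus\.exitcode\.scope[ \t]*=.*$", re.MULTILINE)


def with_exitcode_scope(props_text: str, scope: str) -> str:
    """Replace an existing pegasus.exitcode.scope line, or append one if absent."""
    new_line = f"pegasus.exitcode.scope = {scope}"
    if _SCOPE_LINE.search(props_text):
        return _SCOPE_LINE.sub(lambda _m: new_line, props_text)
    return props_text.rstrip("\n") + "\n" + new_line + "\n"


def per_level_properties(base_text: str) -> Dict[str, str]:
    """Return properties text for the top-level workflow and for its sub-DAXes."""
    return {
        "top": with_exitcode_scope(base_text, "none"),
        "subdax": with_exitcode_scope(base_text, "all"),
    }
```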

| + | |||

| + | <b>Needs to be changed if:</b> | ||

| + | #Different dynamic configuration options need to be set. | ||

| + | |||

| + | <b>Source code location:</b> http://source.usc.edu/svn/cybershake/import/trunk/runs/plan_sgt.sh | ||

| + | |||

| + | <b>Author:</b> Scott Callaghan | ||

| + | |||

| + | <b>Dependencies:</b> [[CyberShake_Workflow_Framework#RunManager|Run Manager]] | ||

| + | |||

| + | <b>Executable chain:</b> | ||

| + | plan_sgt.sh | ||

| + | |||

| + | <b>Compile instructions:</b>None | ||

| + | |||

| + | <b>Usage:</b> | ||

| + | <pre> | ||

| + | Usage: ./plan_sgt.sh <SGT_dir | site run_id> remote_site | ||

| + | Example: ./plan_sgt.sh 1234543210_SGT_dax ranger | ||

| + | Example: ./plan_sgt.sh USC 1359 stampede | ||

| + | </pre> | ||
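The usage line accepts two forms, each followed by the remote site: a DAX directory, or a site and run ID. This Python sketch of that argument handling illustrates the documented usage only. It is hypothetical and is not a port of plan_sgt.sh.

```python
# Hypothetical sketch of the two argument forms in plan_sgt.sh's usage line:
#   <SGT_dir> <remote_site>    or    <site> <run_id> <remote_site>
from typing import List, NamedTuple, Optional

USAGE = "Usage: ./plan_sgt.sh <SGT_dir | site run_id> remote_site"


class PlanSgtArgs(NamedTuple):
    sgt_dir: Optional[str]   # set only in the directory form
    site: Optional[str]      # set only in the site/run ID form
    run_id: Optional[int]
    remote_site: str


def parse_plan_sgt_args(argv: List[str]) -> PlanSgtArgs:
    if len(argv) == 2:
        return PlanSgtArgs(argv[0], None, None, argv[1])
    if len(argv) == 3:
        return PlanSgtArgs(None, argv[0], int(argv[1]), argv[2])
    raise SystemExit(USAGE)
```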

| + | |||

| + | <b>Input files:</b> None specified, but requires DAX files in directory. | ||

| + | |||

| + | <b>Output files:</b> DAG files. | ||

| + | |||

| + | ==== PP workflow ==== | ||

| + | |||

| + | <b>Purpose:</b> To plan a PP-only workflow. | ||

| + | |||

| + | <b>Detailed description:</b> plan_pp.sh takes command-line arguments with the DAX to plan, runs pegasus-plan, and updates the record in the database to reflect that planning has occurred. The TC is also recorded. Some changes are made to the properties files as well. For example, pegasus.exitcode.scope is set to none in the top-level properties file, but to all in the subdax files. Also, a separate properties file is selected if executing on Blue Waters. | ||

| + | |||

| + | <b>Needs to be changed if:</b> | ||

| + | #Different dynamic configuration options need to be set. | ||

| + | |||

| + | <b>Source code location:</b> http://source.usc.edu/svn/cybershake/import/trunk/runs/plan_pp.sh | ||

| + | |||

| + | <b>Author:</b> Scott Callaghan | ||

| + | |||

| + | <b>Dependencies:</b> [[CyberShake_Workflow_Framework#RunManager|Run Manager]] | ||

| + | |||

| + | <b>Executable chain:</b> | ||

| + | plan_pp.sh | ||

| + | |||

| + | <b>Compile instructions:</b>None | ||

| + | |||

| + | <b>Usage:</b> | ||

| + | <pre> | ||

| + | Usage: ./plan_pp.sh <site> <run_id> <remote_site> <gridshib user> <gridshib user email> | ||

| + | Example: ./plan_pp.sh USC 135 abe scottcal scottcal@usc.edu | ||

| + | </pre> | ||

| + | |||

| + | <b>Input files:</b> None specified, but requires DAX files in directory. | ||

| + | |||

| + | <b>Output files:</b> DAG files. | ||

| + | |||

| + | ==== Stochastic workflow ==== | ||

| + | |||

| + | <b>Purpose:</b> To plan a stochastic-only workflow. | ||

| + | |||

| + | <b>Detailed description:</b> plan_stoch.sh takes command-line arguments with the DAX to plan, runs pegasus-plan, and updates the record in the database to reflect that planning has occurred. The TC is also recorded. Some changes are made to the properties files as well. For example, pegasus.exitcode.scope is set to none in the top-level properties file, but to all in the subdax files. A different user certificate is also selected depending on the execution system. | ||

| + | |||

| + | <b>Needs to be changed if:</b> | ||

| + | #Different dynamic configuration options need to be set. | ||

| + | |||

| + | <b>Source code location:</b> http://source.usc.edu/svn/cybershake/import/trunk/runs/plan_stoch.sh | ||

| + | |||

| + | <b>Author:</b> Scott Callaghan | ||

| + | |||

| + | <b>Dependencies:</b> [[CyberShake_Workflow_Framework#RunManager|Run Manager]] | ||

| + | |||

| + | <b>Executable chain:</b> | ||

| + | plan_stoch.sh | ||

| + | |||

| + | <b>Compile instructions:</b>None | ||

| + | |||

| + | <b>Usage:</b> | ||

| + | <pre> | ||

| + | Usage: ./plan_stoch.sh <site> <run_id> <remote_site> | ||

| + | Example: ./plan_stoch.sh USC 135 abe | ||

| + | </pre> | ||

| + | |||

| + | <b>Input files:</b> None specified, but requires DAX files in directory. | ||

| + | |||

| + | <b>Output files:</b> DAG files. | ||

| + | |||

| + | |||

| + | ==== Integrated workflow ==== | ||

| + | |||

| + | <b>Purpose:</b> To plan a full CyberShake workflow. | ||

| + | |||

| + | <b>Detailed description:</b> plan_full.sh takes command-line arguments with the DAX to plan, runs pegasus-plan, and updates the record in the database to reflect that planning has occurred. The TC catalog is also recorded. Some changes are made to the properties files as well: for example, pegasus.exitcode.scope is set to none in the top-level properties file, but all in the subdax files, and a different user certificate is selected depending on the execution system. | ||

| + | |||

| + | <b>Needs to be changed if:</b> | ||

| + | #Different dynamic configuration options need to be set. | ||

| + | |||

| + | <b>Source code location:</b> http://source.usc.edu/svn/cybershake/import/trunk/runs/plan_full.sh | ||

| + | |||

| + | <b>Author:</b> Scott Callaghan | ||

| + | |||

| + | <b>Dependencies:</b> [[CyberShake_Workflow_Framework#RunManager|Run Manager]] | ||

| + | |||

| + | <b>Executable chain:</b> | ||

| + | plan_full.sh | ||

| + | |||

| + | <b>Compile instructions:</b>None | ||

| + | |||

| + | <b>Usage:</b> | ||

| + | <pre> | ||

| + | Usage: ./plan_full.sh <integrated directory> | <site> <run_id> <remote_site> | ||

| + | Example: ./plan_full.sh USC 2494 stampede | ||

| + | </pre> | ||

| + | |||

| + | <b>Input files:</b> None specified, but requires DAX files in directory. | ||

| + | |||

| + | <b>Output files:</b> DAG files. | ||

== Run == | == Run == | ||

| − | === X509 certificates === | + | The final step of workflow preparation is to run the workflow. This takes the planned workflow, containing Condor DAG and submit scripts, and submits it to Condor to manage the real-time execution. After running the workflow, jobs will appear in the Condor queue and can be monitored (see [[CyberShake Workflow Monitoring]] for details). This can be either the initial submission of a workflow, or a restart if there has been a failure. |

| + | |||

| + | === SGT Workflow === | ||

| + | |||

| + | <b>Purpose:</b> To run an SGT workflow. | ||

| + | |||

| + | <b>Detailed description:</b> run_sgt.sh takes a site and run ID and submits a workflow to Pegasus. The user can also specify that the run is a restart, which changes the acceptable state transitions used in the Run Manager. After the workflow is submitted using pegasus-run, the run state is updated to running in the Run Manager. There is also the option to turn on notifications to send email updates with workflow status changes. | ||

| + | |||

| + | <b>Needs to be changed if:</b> | ||

| + | #The run manager executables are changed. | ||

| + | |||

| + | <b>Source code location:</b> http://source.usc.edu/svn/cybershake/import/trunk/runs/run_sgt.sh | ||

| + | |||

| + | <b>Author:</b> Scott Callaghan | ||

| + | |||

| + | <b>Dependencies:</b> [[CyberShake_Workflow_Framework#RunManager|Run Manager]] | ||

| + | |||

| + | <b>Executable chain:</b> | ||

| + | run_sgt.sh | ||

| + | |||

| + | <b>Compile instructions:</b>None | ||

| + | |||

| + | <b>Usage:</b> | ||

| + | <pre> | ||

| + | Usage: ./run_sgt.sh [-r] [-n notify_user] <site run_id | -t timestamp> | ||

| + | Example: ./run_sgt.sh USC 2494 | ||

| + | </pre> | ||
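The usage line above accepts either a site and run ID or a -t timestamp, plus the optional -r (restart) and -n (notify user) flags. As an illustration of that grammar only, here is a getopts-based parser sketch; it is not run_sgt.sh's actual code, and the function and variable names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of parsing run_sgt.sh-style arguments:
#   [-r] [-n notify_user] <site run_id | -t timestamp>
# Results are left in global variables for the caller.
parse_run_args() {
  RESTART=0 NOTIFY_USER="" TIMESTAMP="" SITE="" RUN_ID=""
  local OPTIND=1 opt
  while getopts ":rn:t:" opt; do
    case $opt in
      r) RESTART=1 ;;
      n) NOTIFY_USER=$OPTARG ;;
      t) TIMESTAMP=$OPTARG ;;
      :) echo "option -$OPTARG requires an argument" >&2; return 1 ;;
      *) echo "unknown option -$OPTARG" >&2; return 1 ;;
    esac
  done
  shift $((OPTIND - 1))

  if [ -n "$TIMESTAMP" ]; then
    # With -t, no positional site/run_id may follow
    [ $# -eq 0 ] || { echo "give either -t timestamp or site run_id, not both" >&2; return 1; }
  else
    [ $# -eq 2 ] || { echo "usage: [-r] [-n notify_user] <site run_id | -t timestamp>" >&2; return 1; }
    SITE=$1
    RUN_ID=$2
    # Run IDs are integers (e.g. 2494)
    case $RUN_ID in
      ''|*[!0-9]*) echo "run_id must be numeric: $RUN_ID" >&2; return 1 ;;
    esac
  fi
}
```

With this grammar, `parse_run_args -r USC 2494` marks a restart of run 2494 for site USC, while `parse_run_args USC` is rejected because the run ID is missing.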

| + | |||

| + | <b>Input files:</b> None specified, but requires DAG files in directory. | ||

| + | |||

| + | <b>Output files:</b> None. | ||

| + | |||

| + | === PP Workflow === | ||

| + | |||

| + | <b>Purpose:</b> To run a PP workflow. | ||

| + | |||

| + | <b>Detailed description:</b> run_pp.sh takes a site and run ID and submits a workflow to Pegasus. The user can also specify that the run is a restart, which changes the acceptable state transitions used in the Run Manager. After the workflow is submitted using pegasus-run, the run state is updated to running in the Run Manager. The condor config files are saved as part of the metadata. There is also the option to turn on notifications to send email updates with workflow status changes. | ||

| + | |||

| + | <b>Needs to be changed if:</b> | ||

| + | #The run manager executables are changed. | ||

| + | |||

| + | <b>Source code location:</b> http://source.usc.edu/svn/cybershake/import/trunk/runs/run_pp.sh | ||

| + | |||

| + | <b>Author:</b> Scott Callaghan | ||

| + | |||

| + | <b>Dependencies:</b> [[CyberShake_Workflow_Framework#RunManager|Run Manager]] | ||

| + | |||

| + | <b>Executable chain:</b> | ||

| + | run_pp.sh | ||

| + | |||

| + | <b>Compile instructions:</b>None | ||

| + | |||

| + | <b>Usage:</b> | ||

| + | <pre> | ||

| + | Usage: ./run_pp.sh [-r] [-n notify_user] <site run_id> | ||

| + | Example: ./run_pp.sh USC 2494 | ||

| + | </pre> | ||

| + | |||

| + | <b>Input files:</b> None specified, but requires DAG files in directory. | ||

| + | |||

| + | <b>Output files:</b> None. | ||
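The restart flag described for these run scripts changes which Run Manager state transitions are accepted. The sketch below illustrates the idea with a simple predicate. The state names planned, error, and running are hypothetical placeholders, not the Run Manager's real state names, and the function name is likewise an assumption.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: decide whether a run may move to the "running" state.
# State names are placeholders, not the real Run Manager states.
#   $1 = current state, $2 = 1 if this submission is a restart (-r), else 0
can_mark_running() {
  local current=$1 restart=${2:-0}
  case $current in
    planned)
      return 0 ;;                  # normal first submission after planning
    error)
      # a failed run may only be resubmitted as a restart
      [ "$restart" = 1 ] ;;
    *)
      return 1 ;;
  esac
}
```

The design point is that the restart flag widens the set of accepted starting states rather than bypassing the check entirely, so an already-running workflow is still refused.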

| + | |||

| + | === Stochastic Workflow === | ||

| + | |||

| + | <b>Purpose:</b> To run a stochastic post-processing workflow. | ||

| + | |||

| + | <b>Detailed description:</b> run_stoch.sh takes a site and run ID and submits a workflow to Pegasus. The user can also specify that the run is a restart, which changes the acceptable state transitions used in the Run Manager. After the workflow is submitted using pegasus-run, the run state is updated to running in the Run Manager. The condor config files are saved as part of the metadata. There is also the option to turn on notifications to send email updates with workflow status changes. | ||

| + | |||

| + | <b>Needs to be changed if:</b> | ||

| + | #The run manager executables are changed. | ||

| + | |||

| + | <b>Source code location:</b> http://source.usc.edu/svn/cybershake/import/trunk/runs/run_stoch.sh | ||

| + | |||

| + | <b>Author:</b> Scott Callaghan | ||

| + | |||

| + | <b>Dependencies:</b> [[CyberShake_Workflow_Framework#RunManager|Run Manager]] | ||

| + | |||

| + | <b>Executable chain:</b> | ||

| + | run_stoch.sh | ||

| + | |||

| + | <b>Compile instructions:</b>None | ||

| + | |||

| + | <b>Usage:</b> | ||

| + | <pre> | ||

| + | Usage: ./run_stoch.sh [-r] [-n notify_user] <site run_id> | ||

| + | Example: ./run_stoch.sh USC 2494 | ||

| + | </pre> | ||

| + | |||

| + | <b>Input files:</b> None specified, but requires DAG files in directory. | ||

| + | |||

| + | <b>Output files:</b> None. | ||

| + | |||

| + | === Integrated Workflow === | ||

| + | |||

| + | <b>Purpose:</b> To run an integrated (SGT + PP) workflow. | ||

| + | |||

| + | <b>Detailed description:</b> run_full.sh takes a site and run ID and submits a workflow to Pegasus. The user can also specify that the run is a restart, which changes the acceptable state transitions used in the Run Manager. After the workflow is submitted using pegasus-run, the run state is updated to running in the Run Manager. The condor config files are saved as part of the metadata. There is also the option to turn on notifications to send email updates with workflow status changes. | ||

| + | |||

| + | <b>Needs to be changed if:</b> | ||

| + | #The run manager executables are changed. | ||

| + | |||

| + | <b>Source code location:</b> http://source.usc.edu/svn/cybershake/import/trunk/runs/run_full.sh | ||

| + | |||

| + | <b>Author:</b> Scott Callaghan | ||

| + | |||

| + | <b>Dependencies:</b> [[CyberShake_Workflow_Framework#RunManager|Run Manager]] | ||

| + | |||

| + | <b>Executable chain:</b> | ||

| + | run_full.sh | ||

| + | |||

| + | <b>Compile instructions:</b>None | ||

| + | |||

| + | <b>Usage:</b> | ||

| + | <pre> | ||

| + | Usage: ./run_full.sh [-r] [-n notify_user] <site run_id | -t timestamp> | ||

| + | Example: ./run_full.sh USC 2494 | ||

| + | </pre> | ||

| + | |||

| + | <b>Input files:</b> None specified, but requires DAG files in directory. | ||

| + | |||

| + | <b>Output files:</b> None. | ||

| + | |||

| + | == Typical invocation == | ||

| + | |||

| + | Below are typical invocations for a few common use cases. Note that if you are using an RSQSim ERF, you should include -bbox in the SGT arguments. | ||

| + | |||

| + | === SGT followed by PP workflow === | ||

| + | |||

| + | This is for a site with our currently most frequently used parameters (velocity model CVM-S5, ERF ID 36, rupture variation scenario ID 6 for uniform spacing, SGT ID 8 for AWP GPU, frequency 1.0 Hz, filter frequency 2.0 Hz, moment.usc.edu as the run server, separate MD5 sums so we don't exceed bin 5 queue times on Titan, DirectSynth for the post-processing, calculate durations, non-blocking MD5 sum checks in the post-processing, and calculate RotD50). | ||

| + | |||

| + | <pre>./create_sgt_dax.sh -s <site> -v vsi -e 36 -r 6 -g 8 -f 1.000000 -q 2.000000 --server moment.usc.edu --sgtargs -sm | ||

| + | ./plan_sgt.sh <site> <run ID> <remote system> | ||

| + | ./run_sgt.sh <site> <run ID> | ||

| + | ./create_pp_wf.sh -s <site> -v vsi -e 36 -r 6 -g 8 -f 1.0 -q 2.0 -ds -du -nb -r --server moment.usc.edu | ||

| + | ./plan_pp.sh <site> <run ID> <remote system> <user> <email> | ||

| + | ./run_pp.sh <site> <run ID> | ||

| + | </pre> | ||

| + | |||

| + | === Integrated workflow === | ||

| + | |||

| + | This is for a site with our currently most frequently used parameters (velocity model CVM-S5, ERF ID 36, rupture variation scenario ID 6 for uniform spacing, SGT ID 8 for AWP GPU, frequency 1.0 Hz, filter frequency 2.0 Hz, moment.usc.edu as the run server, separate MD5 sums so we don't exceed bin 5 queue times on Titan, DirectSynth for the post-processing, calculate durations, non-blocking MD5 sum checks in the post-processing, calculate RotD50). Here we explicitly specify the SGT and PP execution systems (-ss and -ps), but this is optional. | ||

| + | |||

| + | <pre> | ||

| + | ./create_full_wf.sh -s <site> -v vsi -e 36 -r 6 -g 8 -f 1.000000 -q 2.000000 --server moment.usc.edu --sgtargs -ss <remote system> --ppargs -ps <remote system> -ds -du -nb -r | ||

| + | ./plan_full.sh <site> <run ID> <remote system> | ||

| + | ./run_full.sh <site> <run ID> | ||

| + | </pre> | ||
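Both invocation sequences above follow the same create, plan, run pattern. Assuming the DAX has already been created and the run ID is known, the plan and run steps could be chained with a small wrapper like the hypothetical one below, which submits only if planning succeeded. The function names and the DRY_RUN switch are assumptions; the argument orders are taken from the invocations above.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: plan and then run one workflow stage for an existing run.
#   plan_and_run sgt  <site> <run ID> <remote system>
#   plan_and_run pp   <site> <run ID> <remote system> <user> <email>
#   plan_and_run full <site> <run ID> <remote system>
# Set DRY_RUN=1 to print the commands instead of executing them.
invoke() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

plan_and_run() {
  if [ $# -lt 4 ]; then
    echo "usage: plan_and_run <sgt|pp|full> <site> <run ID> <remote system> [user email]" >&2
    return 1
  fi
  local stage=$1 site=$2 run_id=$3 remote=$4
  shift 4
  case $stage in
    sgt|full)
      [ $# -eq 0 ] || { echo "$stage takes no extra arguments" >&2; return 1; } ;;
    pp)
      [ $# -eq 2 ] || { echo "pp needs <user> <email>" >&2; return 1; } ;;
    *)
      echo "unknown stage: $stage" >&2; return 1 ;;
  esac
  # Plan first; only submit if planning succeeded.
  invoke "./plan_${stage}.sh" "$site" "$run_id" "$remote" "$@" || return 1
  invoke "./run_${stage}.sh" "$site" "$run_id"
}
```

For example, `DRY_RUN=1 plan_and_run full USC 2494 stampede` prints the plan_full.sh and run_full.sh commands without executing them, which is a cheap way to double-check arguments before a real submission.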

| + | |||

| + | == X509 certificates == | ||

| + | |||

| + | When using GRAM, GridFTP, or Globus Online, X509 proxies are used for authentication. Once logged into a resource, a user can obtain a 'proxy', which can be presented to a remote system instead of a password or SSH key. Proxies are generated from certificates; the key difference is that a proxy is valid only for a limited period of time, so even if it is compromised it does not grant permanent access to the system. Since X509 certificates are built on trust chains, any system which authenticates you using your X509 proxy must ultimately trust the entity which signed your proxy. | ||

| + | |||

| + | In practice, this usually means doing some variation of the following: | ||

| + | # Log into the system you want to obtain a proxy for. You may need to log into a separate server to get a proxy (for example, on Titan you must log into one of the data transfer nodes, or DTNs). | ||

| + | # Run myproxy-logon -t 300:00, or some similar variant. | ||

| + | # Transfer the generated proxy back to your submit host (shock.usc.edu). | ||

| + | # If you are working with a new system, make sure that the certificate authority (CA) certificates for the entities that signed your certificate are installed. These are typically installed in /etc/grid-security/certificates; you can usually override them with your own copies in ~/.globus/certificates, which is particularly useful on systems where we don't have write access to /etc/grid-security/certificates. Make sure each CA has both a .0 file and a .signing_policy file; the .crl_url and .r0 files are more trouble than they're worth. | ||
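Following the advice about .0 and .signing_policy files, a quick sanity check of a CA directory could look like the sketch below. It assumes CA certificates are named <hash>.0 with a matching <hash>.signing_policy, as described above; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical check: every CA certificate (<hash>.0) in a trusted-certificates
# directory should have a matching <hash>.signing_policy file.
# Prints each missing file and returns non-zero if any are missing.
check_ca_dir() {
  local dir=${1:-$HOME/.globus/certificates} cert hash missing=0
  for cert in "$dir"/*.0; do
    [ -e "$cert" ] || continue     # the glob matched nothing
    hash=$(basename "$cert" .0)
    if [ ! -f "$dir/$hash.signing_policy" ]; then
      echo "missing: $dir/$hash.signing_policy"
      missing=1
    fi
  done
  return $missing
}
```

Running `check_ca_dir` with no argument checks ~/.globus/certificates; pass /etc/grid-security/certificates to check the system-wide directory instead.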

| + | |||

| + | Your proxy must be valid in order to be useful. You can check its validity by running: | ||

| + | <pre>grid-proxy-info -f <proxy path></pre> | ||

| + | |||

| + | Check the 'timeleft' field to make sure it is not 00:00:00. For CyberShake production runs, we have a cronjob which checks the certificates every hour and emails Scott if any have less than 24 hours left. Note that on Titan the longest-lived proxy issued is 72 hours; on Stampede2 and Blue Waters, it is about 11 days. If jobs suddenly stop working or file transfers fail, checking certificate validity is a good place to start. | ||
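The hourly cronjob mentioned above is not reproduced here; the following is a minimal sketch of a similar check. It assumes grid-proxy-info's -timeleft option, which prints the remaining lifetime in seconds, and relies on cron mailing any output to the crontab owner (or MAILTO) instead of sending email explicitly. The function name, the PROXY_INFO override, and the MIN_SECONDS variable are illustrative.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of an hourly proxy check: print a warning for every proxy
# with less than 24 hours left. Under cron, any output is mailed to MAILTO.
PROXY_INFO=${PROXY_INFO:-grid-proxy-info}   # overridable, e.g. for testing
MIN_SECONDS=$((24 * 3600))

check_proxies() {
  local proxy left status=0
  for proxy in "$@"; do
    # -timeleft prints the remaining proxy lifetime in seconds
    if ! left=$("$PROXY_INFO" -f "$proxy" -timeleft 2>/dev/null); then
      echo "WARNING: cannot read proxy $proxy"
      status=1
      continue
    fi
    case $left in
      ''|*[!0-9]*)
        echo "WARNING: unexpected timeleft for $proxy: $left"
        status=1
        continue ;;
    esac
    if [ "$left" -lt "$MIN_SECONDS" ]; then
      echo "WARNING: $proxy expires in $((left / 3600))h"
      status=1
    fi
  done
  return $status
}

# Run the check when executed as a script (e.g. from cron)
if [ "${BASH_SOURCE[0]}" = "$0" ]; then
  check_proxies "$@"
fi
```

A crontab entry such as `0 * * * * /path/to/check_proxies.sh /path/to/proxy` (paths hypothetical) would run it hourly; since it prints nothing when all proxies are fine, cron sends mail only when something needs attention.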

| + | |||

| + | == Pegasus-MPI-Cluster == | ||

| + | |||

| + | == Additional Workflow Jobs == | ||

| + | |||

| + | == Run Manager == | ||

| + | |||