Difference between revisions of "CyberShake migration to Summit"

| Line 262: | Line 262: | ||

When I first tried running DirectSynth on Summit, I saw seg faults in the workers somewhere in the FFTW package, with both the intel and gcc compilers. Switching from fftw v3.3.8 to fftw v3.3.5 seems to have fixed this issue. I could not replicate this problem when running the TEST site, only a full-sized site (s2257). | When I first tried running DirectSynth on Summit, I saw seg faults in the workers somewhere in the FFTW package, with both the intel and gcc compilers. Switching from fftw v3.3.8 to fftw v3.3.5 seems to have fixed this issue. I could not replicate this problem when running the TEST site, only a full-sized site (s2257). | ||

| − | In point-to-point comparisons between the timeseries, we see differences | + | In point-to-point comparisons between the timeseries, we see differences. For most rupture variations, these differences are no larger that a few percent, but there are a few source/rupture/RV combinations with much bigger differences, in excess of 100% (for example, 0.803838 compared to -2.545603) and with a clear visual difference at the peaks and valleys. |

| + | |||

| + | === itshift === | ||

| + | |||

| + | The small differences seem to be the result of different values for itshift in timeshift_sgt(), in jbsim/sgt_subs.c around line 1225: | ||

itshift = (int)(tsh/sgtheadptr->dt + 0.5); | itshift = (int)(tsh/sgtheadptr->dt + 0.5); | ||

| Line 271: | Line 275: | ||

backt0 = backt0 + *rupt - *tstart; (line 1160) | backt0 = backt0 + *rupt - *tstart; (line 1160) | ||

| − | *rupt is a parameter, tinit, from the SRF, read in get_srfpars(). For one point I examined, on the reference system (Blue Waters), the tinit value is 12.824999. On Summit, it is 12.825000. This slight difference leads Blue Waters to round itshift down, and on Summit it is rounded up. If itshift is different by 1, it shifts the entire timeseries for that single rupture point by 1 timestep. When this is added into the overall seismogram, it leads to differences. | + | *rupt is a parameter, tinit, from the SRF, read in get_srfpars(). For one point I examined, on the reference system (Blue Waters), the tinit value is 12.824999. On Summit, it is 12.825000. This slight difference leads Blue Waters to round itshift down, and on Summit it is rounded up. If itshift is different by 1, it shifts the entire timeseries for that single rupture point by 1 timestep. When this is added into the overall seismogram, it leads to differences. , but these differences do not seem to be consequential overall: the RotD values agree to a tolerance of 5e-4, suggesting very little impact on the hazard curves. |

| − | |||

| − | |||

| − | |||

| − | + | === tinit times === | |

Revision as of 16:22, 16 August 2019

This page is being used to gather information on the effort to migrate CyberShake from Titan to Summit.

Software Status

Initially, we are running each phase on Summit and comparing the output with that on Titan.

- PreCVM: OK

- UCVM: OK

- Smoothing: OK

- PreSGT: OK

- PreAWP: OK

- AWP-GPU-SGT: OK

- Rupture Generator:

- DirectSynth:

UCVM

Summit and Titan results agree very well, to floating-point error:

Average absolute difference: 3e-13 Average absolute percentage difference: 6.748571e-15%% Largest difference: 0.00, at index 6882517392. Largest percent difference: 0.00%, at index 2816011138 Absolute percentage difference histogram: 0.0001 0.0010 0.0100 0.1000 0.3000 1.0000 3.0000 10.0000 100.0000 1000.0000 17388000000 0 0 0 0 0 0 0 0 0 0 100.00% 0.00% 0.00% 0.00% 0.00% 0.00% 0.00% 0.00% 0.00% 0.00% 0.00% 100.00% 100.00% 100.00% 100.00% 100.00% 100.00% 100.00% 100.00% 100.00% 100.00% 100.00%

Smoothing

Summit and Titan results agree closely:

Average absolute difference: 7e-02 Average absolute percentage difference: 1.620595e-03% Largest difference: 7.58, at index 11054242335. Largest percent difference: 0.11%, at index 9972096119 Absolute percentage difference histogram: 0.0001 0.0010 0.0100 0.1000 0.3000 1.0000 3.0000 10.0000 100.0000 1000.0000 16586999210 800953496 47294 0 0 0 0 0 0 0 0 95.39% 4.61% 0.00% 0.00% 0.00% 0.00% 0.00% 0.00% 0.00% 0.00% 0.00% 95.39% 100.00% 100.00% 100.00% 100.00% 100.00% 100.00% 100.00% 100.00% 100.00% 100.00%

This difference seems to be due to floating-point error. A float is used to accumulate values before averaging, and now that we are using 20 km smoothing with 100 m spacing, enough data is stored that some of the precision is lost.

If we compare Summit using double-precision to Titan using double-precision, we're back to negligible error:

Average absolute difference: 2e-12 Average absolute percentage difference: 4.053278e-14% Largest difference: 0.00, at index 1287951102. Largest percent difference: 0.00%, at index 1287951102 Absolute percentage difference histogram: 0.0001 0.0010 0.0100 0.1000 0.3000 1.0000 3.0000 10.0000 100.0000 1000.0000 17388000000 0 0 0 0 0 0 0 0 0 0 100.00% 0.00% 0.00% 0.00% 0.00% 0.00% 0.00% 0.00% 0.00% 0.00% 0.00% 100.00% 100.00% 100.00% 100.00% 100.00% 100.00% 100.00% 100.00% 100.00% 100.00% 100.00%

AWP-GPU-SGT

We arbitrarily selected site s2257 and ran the X-component (the AWP X-component, which is the RWG Y-component) on Summit, to compare to the results on Titan.

Numerical comparison

When comparing all SGT points (test-reference), we get:

Average diff = 1.317764e-12, average percent diff = -3.324251% Largest diff of 0.000150 at float index 1718660606. Largest percent diff of 5017404928.000000% at float index 8636382051.

When we only consider points greater than 1e-10 (peak SGT values are usually 1e-4 to 1e-3), we get:

Average diff = 1.317764e-12, average percent diff = 0.133713%, average absolute percent diff = 12.979661% Largest diff of 0.000150 at float index 1718660606. Largest percent diff of 7148245.500000% at float index 1626932526.

The average percent diff is much less than before, but we included the absolute percent diff, which reveals that many points differ by considerable amounts and there was some canceling going on when looking at the average percent diff.

As a result of this, we decided to more closely investigate what is causing the differences between the two systems.

Initial plots

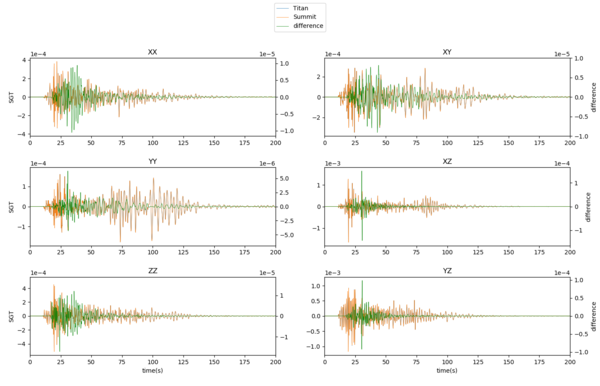

We identified point ID 71610 as the point which contains the largest difference between the test and reference SGTs.

Below are plots of this point, comparing the Titan and Summit results and the difference. The zoomed-in version focuses on the 25 seconds with the largest differences.

CUDA versions

The default version of CUDA on Titan is 9.1.85 and on Summit it's 9.2.148. We tried rebuilding the AWP code on Summit with 9.1.85 and GCC and rerunning. It didn't make much difference:

Average diff = 1.272838e-12, average percent diff = 0.133971%, average absolute percent diff = 12.971045% Largest diff of 0.000150 at float index 1718660606. Largest percent diff of 7148275.000000% at float index 1626932526

X and Y-only work distribution

We tried distributing the work only across X (PX=120, PY=1, PZ=1)but got similar differences.

X:

Average diff = 1.317764e-12, average percent diff = 0.133713%, average absolute percent diff = 12.979661% Largest diff of 0.000150 at float index 1718660606. Largest percent diff of 7148245.500000% at float index 1626932526.

We also tried it across Y (PX=1, PY=210, PZ=1). This resulted in a memory error:

*** Error in `/gpfs/alpine/proj-shared/geo112/CyberShake/software/AWP-GPU-SGT/bin/pmcl3d': free(): invalid size: 0x0000000040728bc0 *** ======= Backtrace: ========= /lib64/libc.so.6(cfree+0x4a0)[0x2000004f9cd0] /gpfs/alpine/proj-shared/geo112/CyberShake/software/AWP-GPU-SGT/bin/pmcl3d[0x10008d34] /gpfs/alpine/proj-shared/geo112/CyberShake/software/AWP-GPU-SGT/bin/pmcl3d[0x10008774] /lib64/libc.so.6(+0x25100)[0x200000485100] /lib64/libc.so.6(__libc_start_main+0xc4)[0x2000004852f4]

Smaller SGTs

We tried generating SGTs for the SMALL site, which are about 6% the size of s2257, on both Titan and Summit. We actually saw even larger average differences:

Average diff = 6.027801e-12, average percent diff = -1.673267%, average absolute percent diff = 21.367271% Largest diff of 0.000067 at float index 175412388. Largest percent diff of 2091817.000000% at float index 1363132518.

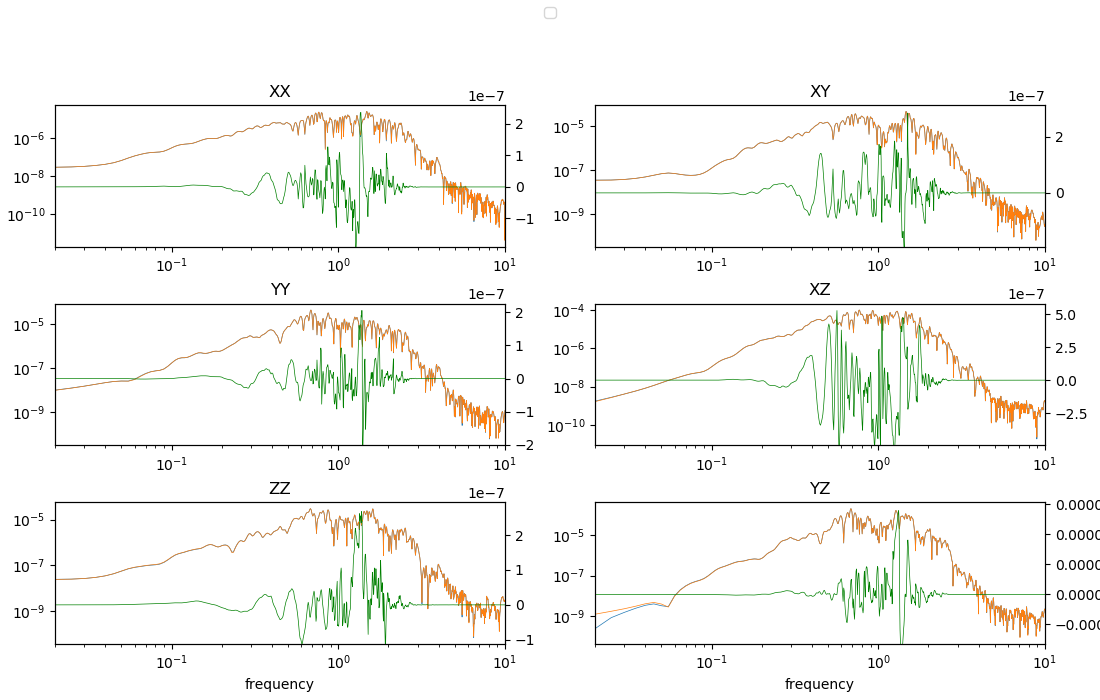

Frequency content

We did a frequency content analysis on the largest difference SGT from the SMALL site:

Ossian's test

Ossian O'Reilly created a simple, single-GPU test here on Github.

Running his test on Titan and Summit, and running his comparison script, I obtained the following agreement:

Difference (titan, x=1, y=1) (summit, x=1, y=1), l2 (abs): 2.050260e-04 l2 (rel) 2.090467e-06 l1 (abs) 1.097141e-01 l1 (rel) 7.702747e-06 linf (abs) 6.659655e-06 linf (rel) 1.872744e-06

Comparing the same two outputs using my scripts, using a cutoff for percent comparisons of 1e-6:

Average diff = 6.023823e-10, average percent diff = -0.026654%, average absolute percent diff = 0.452920%

Largest diff of 0.000007 at float index 128648.

Largest percent diff of 69.524002% at float index 3874621.

Absolute percentage difference histogram:

0.0001 0.0010 0.0100 0.1000 0.3000 1.0000 3.0000 10.00 100.00 1000.0

138147 187512 179189 202769 122466 110331 57253 27862 7452 0 0

13.37% 18.15% 17.35% 19.63% 11.86% 10.68% 5.54% 2.70% 0.72% 0.00% 0.00%

13.37% 31.53% 48.87% 68.50% 80.36% 91.04% 96.58% 99.28% 100.00% 100.00 100.00%

Approximately half the points have a percent difference less than 0.01%, 90% less than 0.3%, and 99% less than 3%.

These two comparisons show us different information: point-to-point comparison can be biased by small values which have little impact on the result. For example, ~80% of the points have values less than 1e-6, but the largest values are greater than 1. L1 comparison can be biased by the largest points, which carry much more weight than points 1 or 2 orders of magnitude lower, which are still important for agreement.

I tried running my code vs Ossian's code on Summit. The difference is how y_rank_F and y_rank_B are handled in Ossian's version. We see differences similar to those above:

Average diff = 3.603304e-09, average percent diff = -0.000870%, average absolute percent diff = 0.585565% Largest diff of 0.000475 at float index 3272633. Largest percent diff of 16670.455078% at float index 3272726. Absolute percentage difference histogram: 0.0001 0.0010 0.0100 0.1000 0.3000 1.0000 3.0000 10.0000 100.0000 1000.0000 67238 114994 199943 268809 145799 121488 68547 38022 7930 84 1 6.51% 11.13% 19.36% 26.03% 14.12% 11.76% 6.64% 3.68% 0.77% 0.01% 0.00% 6.51% 17.64% 37.00% 63.03% 77.14% 88.91% 95.54% 99.22% 99.99% 100.00% 100.00%

Additional tests

Comparison using Ossian's parameters with CyberShake code on Titan vs Summit, no optimization:

Average diff = 3.000922e-09, average percent diff = 0.013876%, average absolute percent diff = 0.650546% Largest diff of 0.000475 at float index 3272633. Largest percent diff of 16670.525391% at float index 3272726. Absolute percentage difference histogram: 0.0001 0.0010 0.0100 0.1000 0.3000 1.0000 3.0000 10.0000 100.0000 1000.0000 72818 114632 210094 283026 131370 108666 63794 36609 11761 84 1 7.05% 11.10% 20.34% 27.40% 12.72% 10.52% 6.18% 3.54% 1.14% 0.01% 0.00% 7.05% 18.15% 38.49% 65.89% 78.61% 89.13% 95.31% 98.85% 99.99% 100.00% 100.00%

Bug fix

Ossian identified a bug in most versions of AWP, including the SGT code. The bug is in pmcl3d.c, in lines 267 and 268:

err = MPI_Cart_shift(MC1, 0, 1, &x_rank_L, &x_rank_R ); err = MPI_Cart_shift(MC1, 1, 1, &y_rank_F, &y_rank_B );

If the process calling these functions is a boundary process - that is, it has no previous neighbor in the Y-direction, for example - the neighbor variable (y_rank_F, y_rank_B, x_rank_L, x_rank_R) is set to MPI_UNDEFINED. On Titan, MPI_UNDEFINED is -1, but on Summit it is only required to be less than 0.

Later, the kernel to perform data exchange is called if the neighbor variable is not -1. On Titan, this means the kernel is not called when there is no neighbor, but on Summit it is. Additionally, the buffer used in this exchange is uninitialized, so the effect on Summit is that random values are exchanged with the boundary process, leading to errors.

To fix this, Ossian included the following lines of code in pmcl3d.c, line 273:

// If any neighboring rank is out of bounds, then MPI_Cart_shift sets the

// destination argument to a negative number. We use the convention that -1

// denotes ranks out of bounds.

if (x_rank_L < 0) {

x_rank_L = -1;

}

if (x_rank_R < 0 ) {

x_rank_R = -1;

}

if (y_rank_F < 0) {

y_rank_F = -1;

}

if (y_rank_B < 0) {

y_rank_B = -1;

}

This ensures the kernel is not called if there is no neighbor. Additionally, all device memory is now initialized to 0.

Verification tests

Following the implementation of Ossian's bug fix, the results on Titan and Summit came into much better agreement.

For a full-scale SGT calculated at site s2257, we see the following differences (minimum differences at 1e-6):

Average diff = -7.335352e-13, average percent diff = 0.000036%, average absolute percent diff = 0.038536% Largest diff of 0.000006 at float index 240929422. Largest percent diff of 338.659698% at float index 41967549362. Absolute percentage difference histogram: 0.0001 0.0010 0.0100 0.1000 0.3000 1.0000 3.0000 10.0000 100.0000 1000.0000 478231446 1819753594 2113474276 1241972140 295957433 157813438 27593530 2496656 151869 503 0 7.79% 29.65% 34.44% 20.24% 4.82% 2.57% 0.45% 0.04% 0.00% 0.00% 0.00% 7.79% 37.44% 71.88% 92.11% 96.94% 99.51% 99.96% 100.00% 100.00% 100.00% 100.00%

Repeating with a cutoff of 1e-10:

19702828989 points used for percent comparison, of 43417008000 total. Average diff = -7.335352e-13, average percent diff = 0.000288%, average absolute percent diff = 0.491180% Largest diff of 0.000006 at float index 240929422. Largest percent diff of 538614.875000% at float index 42529049490. Absolute percentage difference histogram: 0.0001 0.0010 0.0100 0.1000 0.3000 1.0000 3.0000 10.0000 100.0000 1000.0000 559826373 2204732859 3735746248 6725999521 3262492383 2066082069 753902072 280090672 106501047 7143593 312152 2.84% 11.19% 18.96% 34.14% 16.56% 10.49% 3.83% 1.42% 0.54% 0.04% 0.00% 2.84% 14.03% 32.99% 67.13% 83.69% 94.17% 98.00% 99.42% 99.96% 100.00% 100.00%

We also compared Titan before the bugfix to Titan after (cutoff of 1e-10). The results are identical.

19702828989 points used for percent comparison, of 43417008000 total. Average diff = 0.000000e+00, average percent diff = 0.000000%, average absolute percent diff = 0.000000% Largest diff of 0.000000 at float index -1. Largest percent diff of 0.000000% at float index -1. Absolute percentage difference histogram: 0.0001 0.0010 0.0100 0.1000 0.3000 1.0000 3.0000 10.0000 100.0000 1000.0000 19702828989 0 0 0 0 0 0 0 0 0 0 100.00% 0.00% 0.00% 0.00% 0.00% 0.00% 0.00% 0.00% 0.00% 0.00% 0.00% 100.00% 100.00% 100.00% 100.00% 100.00% 100.00% 100.00% 100.00% 100.00% 100.00% 100.00%

DirectSynth

When I first tried running DirectSynth on Summit, I saw seg faults in the workers somewhere in the FFTW package, with both the intel and gcc compilers. Switching from fftw v3.3.8 to fftw v3.3.5 seems to have fixed this issue. I could not replicate this problem when running the TEST site, only a full-sized site (s2257).

In point-to-point comparisons between the timeseries, we see differences. For most rupture variations, these differences are no larger that a few percent, but there are a few source/rupture/RV combinations with much bigger differences, in excess of 100% (for example, 0.803838 compared to -2.545603) and with a clear visual difference at the peaks and valleys.

itshift

The small differences seem to be the result of different values for itshift in timeshift_sgt(), in jbsim/sgt_subs.c around line 1225:

itshift = (int)(tsh/sgtheadptr->dt + 0.5);

Since a cast to int is involved, the difference comes from this floor: if tsh/sgtheadptr->dt is slightly more or slightly less that *.5, it will cause itshift to differ by 1. tsh comes from:

tsh = t0[ig] + *bt0; (line 1218)

- bt0 is passed into timeshift_sgt() from sum_sgt(), where is is called backt0, and it is defined as:

backt0 = backt0 + *rupt - *tstart; (line 1160)

- rupt is a parameter, tinit, from the SRF, read in get_srfpars(). For one point I examined, on the reference system (Blue Waters), the tinit value is 12.824999. On Summit, it is 12.825000. This slight difference leads Blue Waters to round itshift down, and on Summit it is rounded up. If itshift is different by 1, it shifts the entire timeseries for that single rupture point by 1 timestep. When this is added into the overall seismogram, it leads to differences. , but these differences do not seem to be consequential overall: the RotD values agree to a tolerance of 5e-4, suggesting very little impact on the hazard curves.