Difference between revisions of "CyberShake Study 22.12"

| (182 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

| − | CyberShake Study 22. | + | CyberShake Study 22.12 is a study in Southern California which includes deterministic low-frequency (0-1 Hz) and stochastic high-frequency (1-50 Hz) simulations. We used the [[Rupture_Variation_Generator_v5.5.2|Graves & Pitarka (2022)]] rupture generator and the high frequency modules from the SCEC Broadband Platform v22.4. |

== Status == | == Status == | ||

| − | This study is | + | This study is complete. We are currently performing analysis of the results. |

== Data Products == | == Data Products == | ||

| − | + | === Low-frequency === | |

| + | |||

| + | *[https://opensha.usc.edu/ftp/kmilner/markdown/cybershake-analysis/study_22_12_lf/hazard_maps/ Low-frequency hazard maps, including comparisons to Study 15.4] | ||

| + | |||

| + | ==== Change from Study 15.4 ==== | ||

| + | |||

| + | The table below lists the overall averaged differences between Study 15.4 and Study 22.12 at the 2% in 50 years hazard level. | ||

| + | |||

| + | Negative differences and ratios less than 1 indicate that the Study 22.12 results are smaller. | ||

| + | |||

| + | {| border="1" cellpadding="5" | ||

| + | ! Period !! Difference (22.12-15.4) !! Ratio (22.12/15.4) | ||

| + | |- | ||

| + | ! 2 sec | ||

| + | | 0.0445 g | ||

| + | | 1.162 | ||

| + | |- | ||

| + | ! 3 sec | ||

| + | | -0.00883 g | ||

| + | | 0.988 | ||

| + | |- | ||

| + | ! 5 sec | ||

| + | | -0.0200 g | ||

| + | | 0.901 | ||

| + | |- | ||

| + | ! 10 sec | ||

| + | | -0.00846 g | ||

| + | | 0.882 | ||

| + | |} | ||
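As a rough illustration of how entries like those in the table could be formed, the sketch below converts "2% in 50 years" into an equivalent annual exceedance probability (assuming Poisson occurrence) and averages per-site differences and ratios. The function names and the sample values are hypothetical, and this page does not state whether the study averages per-site ratios or takes a ratio of averages, so treat the averaging choice as an assumption.

```python
def annual_probability(prob, years):
    """Annual exceedance probability equivalent to `prob` in `years`,
    assuming a Poisson (time-independent) occurrence model."""
    return 1.0 - (1.0 - prob) ** (1.0 / years)


def mean_difference_and_ratio(new_values, old_values):
    """Average the per-site difference (new - old) and ratio (new / old).

    Assumption: per-site values are averaged with equal weight.
    """
    diffs = [n - o for n, o in zip(new_values, old_values)]
    ratios = [n / o for n, o in zip(new_values, old_values)]
    return sum(diffs) / len(diffs), sum(ratios) / len(ratios)


# Hypothetical ground motion values (g) at two sites, for illustration only.
study_22_12 = [0.30, 0.20]
study_15_4 = [0.20, 0.25]
mean_diff, mean_ratio = mean_difference_and_ratio(study_22_12, study_15_4)
```

Note that an averaged ratio above 1 can coexist with a small averaged difference, since the two statistics weight sites differently.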

| + | |||

| + | === Broadband === | ||

| + | |||

| + | *[https://opensha.usc.edu/ftp/kmilner/markdown/cybershake-analysis/study_22_12_hf/hazard_maps/ Broadband hazard maps, including comparisons to Study 15.12] | ||

== Science Goals == | == Science Goals == | ||

| Line 16: | Line 48: | ||

*Calculate an updated broadband CyberShake model. | *Calculate an updated broadband CyberShake model. | ||

*Sample variability in rupture velocity as part of the rupture generator. | *Sample variability in rupture velocity as part of the rupture generator. | ||

| + | *Increase hypocentral density by reducing the hypocenter spacing from 4.5 km to 4 km. | ||

== Technical Goals == | == Technical Goals == | ||

| Line 23: | Line 56: | ||

*Use an optimized OpenMP version of the post-processing code. | *Use an optimized OpenMP version of the post-processing code. | ||

*Bundle the SGT and post-processing jobs to run on large Condor glide-ins, taking advantage of queue policies favoring large jobs. | *Bundle the SGT and post-processing jobs to run on large Condor glide-ins, taking advantage of queue policies favoring large jobs. | ||

| + | *Perform the largest CyberShake study to date. | ||

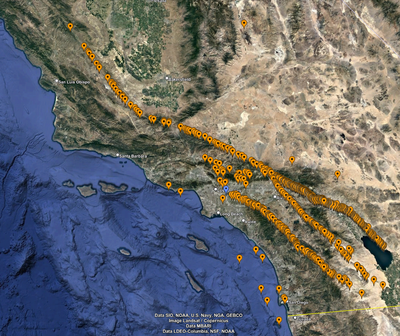

== Sites == | == Sites == | ||

| Line 28: | Line 62: | ||

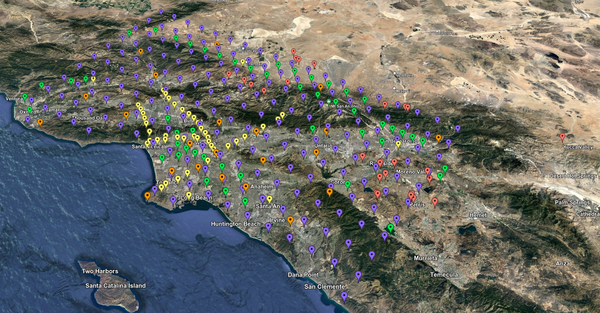

We will use the standard 335 southern California sites (same as Study 21.12). The order of execution will be: | We will use the standard 335 southern California sites (same as Study 21.12). The order of execution will be: | ||

| + | *Stress test sites ([[Media:Study_22_12_stress_test_sites_names.kml|site list here]]) | ||

*Sites of interest | *Sites of interest | ||

*20 km grid | *20 km grid | ||

| Line 42: | Line 77: | ||

== Velocity Model == | == Velocity Model == | ||

| + | |||

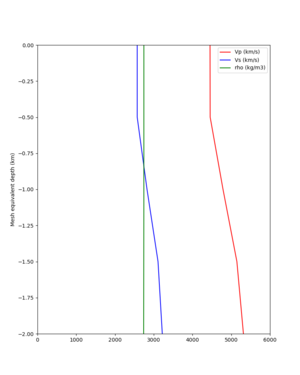

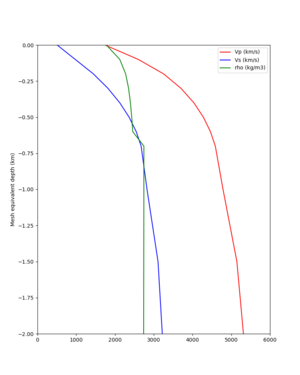

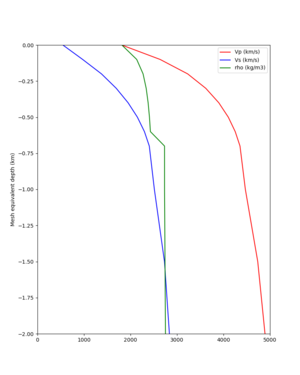

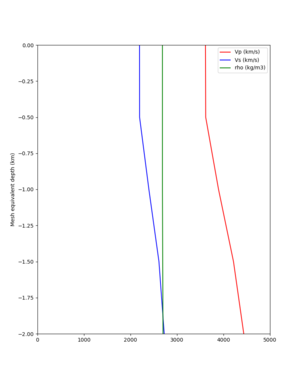

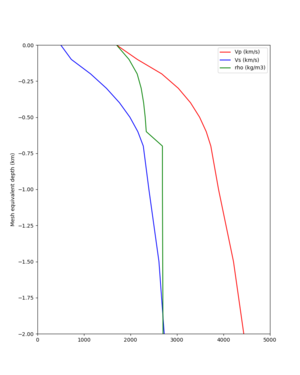

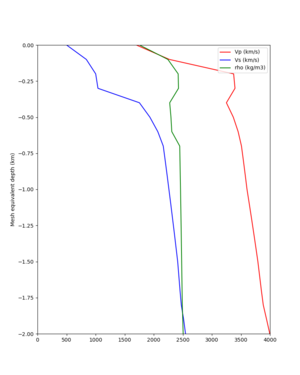

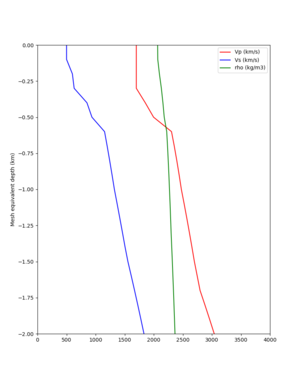

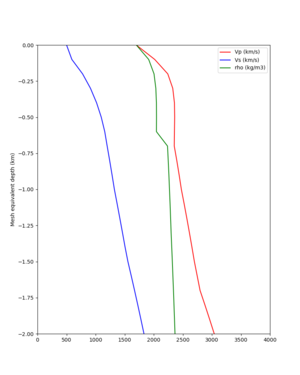

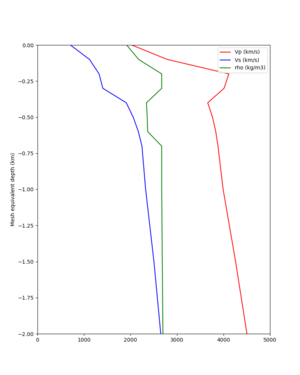

| + | <b>Summary: We are using a velocity model which is a combination of CVM-S4.26.M01 and the Ely-Jordan GTL: | ||

| + | <ul> | ||

| + | <li>At each mesh point in the top 700m, we calculate the Vs value from CVM-S4.26.M01, and from the Ely-Jordan GTL using a taper down to 700m.</li> | ||

| + | <li>We select the approach which produces the smaller Vs value, and we use the Vp, Vs, and rho from that approach.</li> | ||

| + | <li>We also preserve the Vp/Vs ratio, so if the Vs minimum of 500 m/s is applied, Vp will be scaled so the Vp/Vs ratio is the same.</li> | ||

| + | </ul> | ||

| + | </b> | ||
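The selection rule in the summary can be sketched as follows. This is a minimal illustration, not the CyberShake mesh code: it assumes the CVM-S4.26.M01 values and the tapered GTL values have already been computed for each depth, and the names `Material`, `merge_profiles`, and `TRANSITION_DEPTH_M` are hypothetical. Treating the 700 m depth itself as inside the taper zone is also an assumption.

```python
from typing import List, NamedTuple


class Material(NamedTuple):
    vp: float   # P-wave velocity, m/s
    vs: float   # S-wave velocity, m/s
    rho: float  # density


TRANSITION_DEPTH_M = 700.0  # taper depth used in the summary above


def merge_profiles(depths_m: List[float],
                   cvm: List[Material],
                   tapered: List[Material]) -> List[Material]:
    """At each depth in the top 700 m, keep the full (Vp, Vs, rho) triple
    from whichever approach gives the lower Vs; below that, keep the CVM."""
    merged = []
    for depth, base, gtl in zip(depths_m, cvm, tapered):
        if depth <= TRANSITION_DEPTH_M and gtl.vs < base.vs:
            merged.append(gtl)
        else:
            merged.append(base)
    return merged
```

Taking all three properties from the same approach, rather than mixing the lower Vs with the other approach's Vp and rho, keeps each mesh point internally consistent.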

We are planning to use CVM-S4.26 with a GTL applied, and the CVM-S4 1D background model outside of the region boundaries. | We are planning to use CVM-S4.26 with a GTL applied, and the CVM-S4 1D background model outside of the region boundaries. | ||

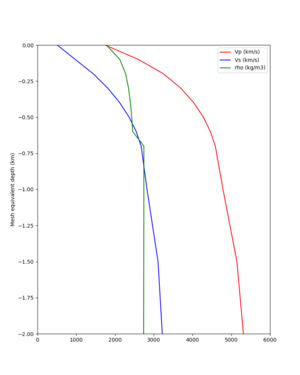

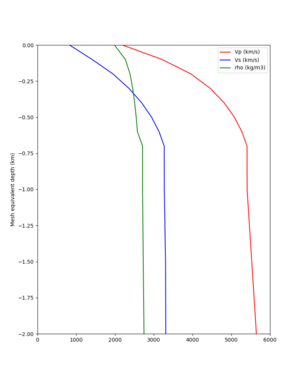

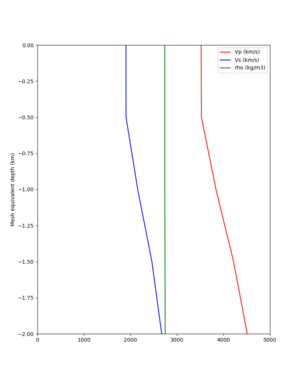

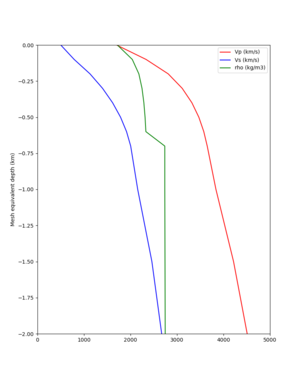

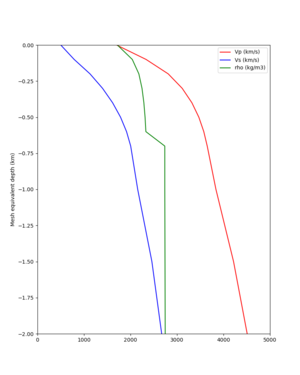

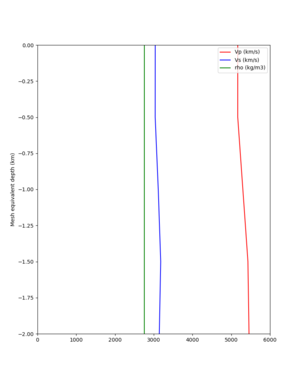

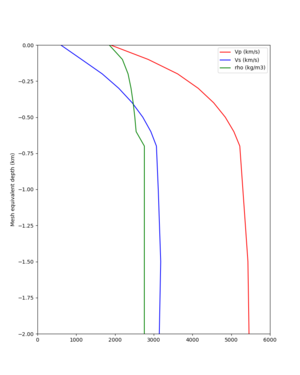

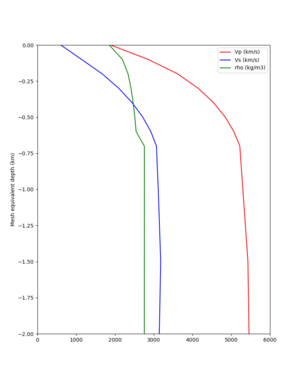

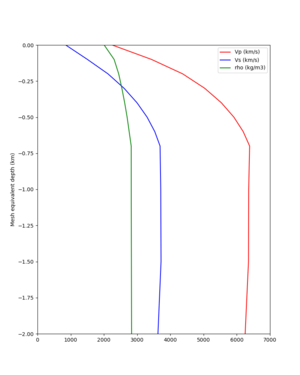

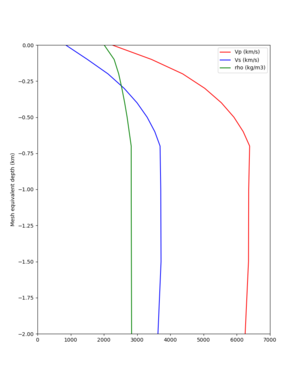

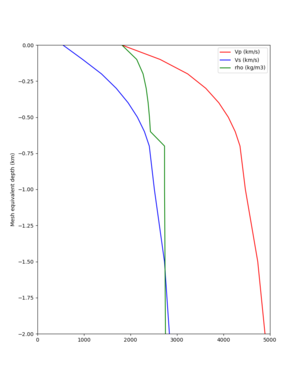

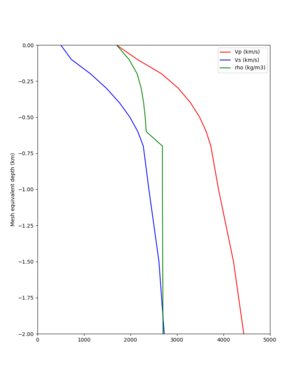

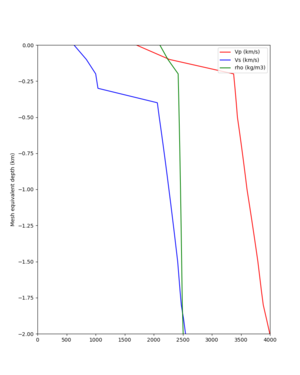

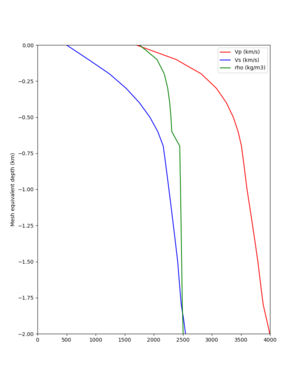

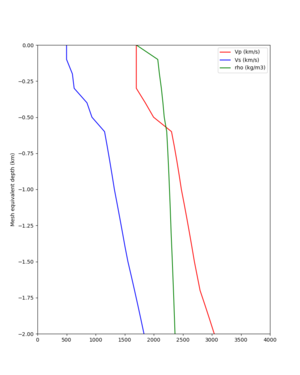

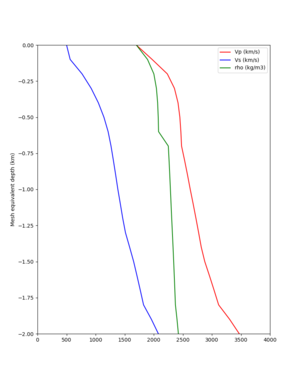

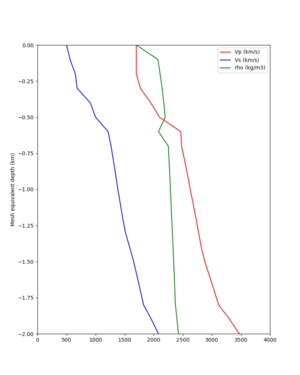

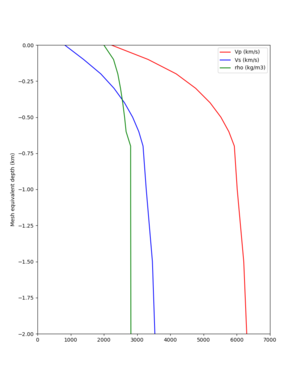

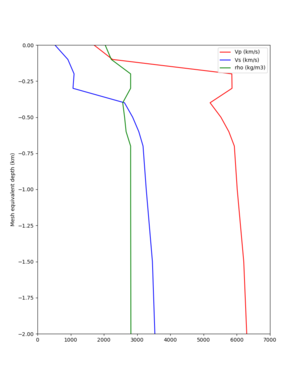

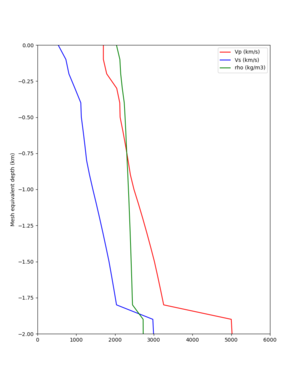

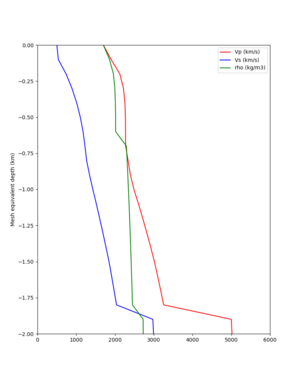

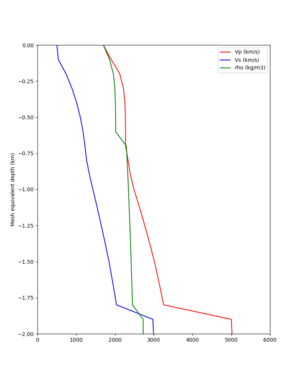

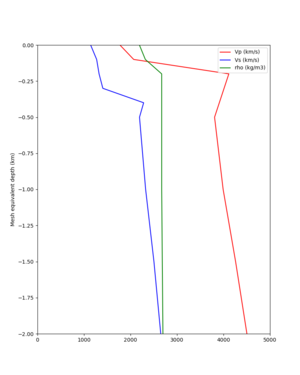

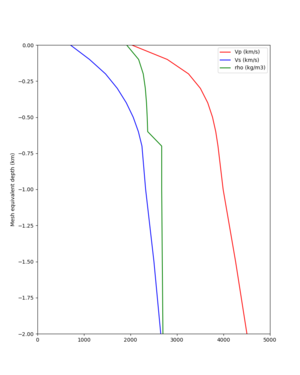

We are investigating applying the Ely-Jordan GTL down to 700 m instead of the default of 350m. We extracted profiles for a series of southern California CyberShake sites, with no GTL, a GTL applied down to 350m, and a GTL applied down to 700m. | We are investigating applying the Ely-Jordan GTL down to 700 m instead of the default of 350m. We extracted profiles for a series of southern California CyberShake sites, with no GTL, a GTL applied down to 350m, and a GTL applied down to 700m. | ||

| + | |||

| + | === Velocity Profiles === | ||

| + | |||

| + | Corners of the Simulation region are: | ||

| + | |||

| + | <pre> | ||

| + | -119.38,34.13 | ||

| + | -118.75,35.08 | ||

| + | -116.85,34.19 | ||

| + | -117.5,33.25 | ||

| + | </pre> | ||

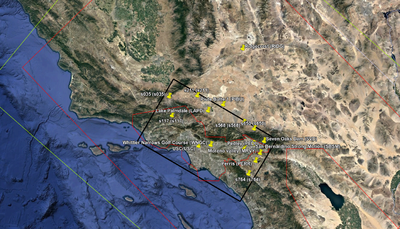

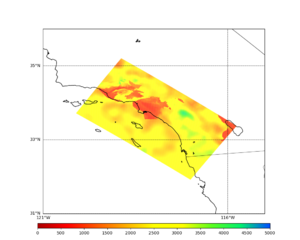

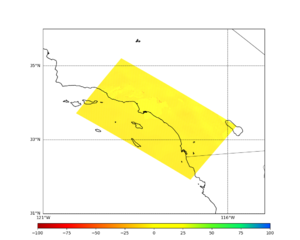

Sites (the CVM-S4.26 basins are outlined in red): | Sites (the CVM-S4.26 basins are outlined in red): | ||

| Line 56: | Line 110: | ||

{| | {| | ||

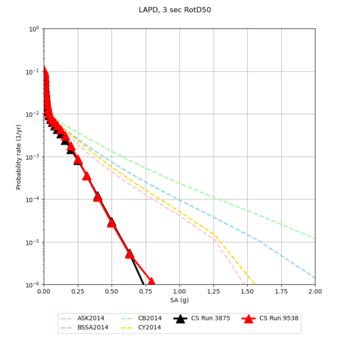

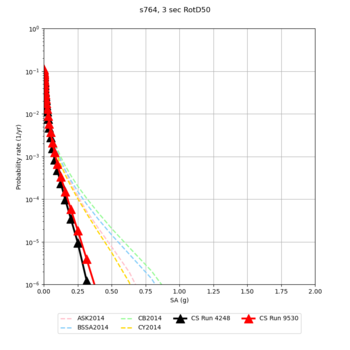

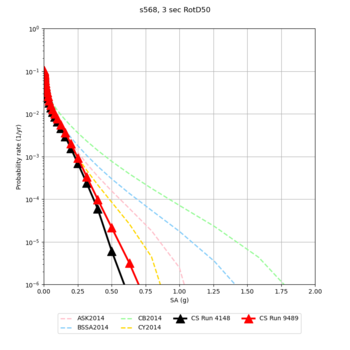

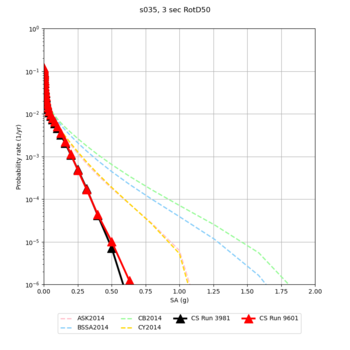

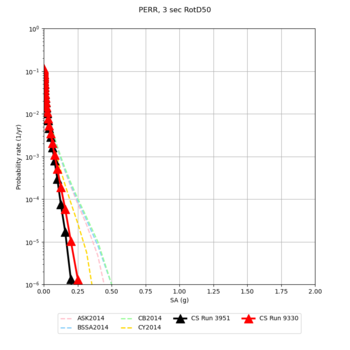

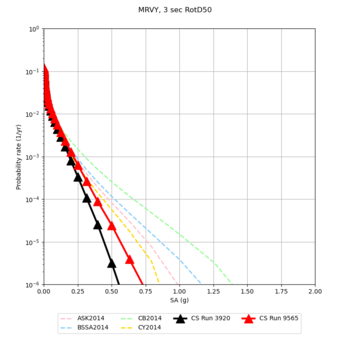

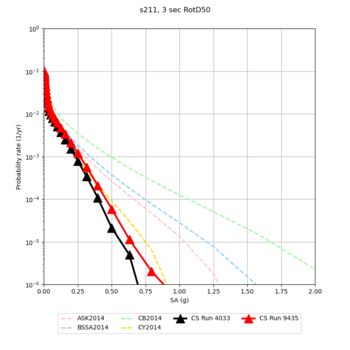

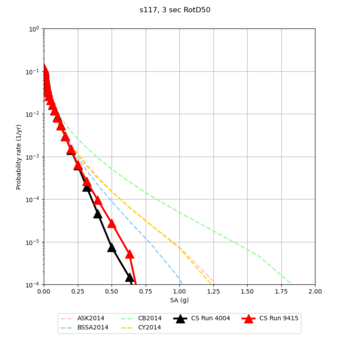

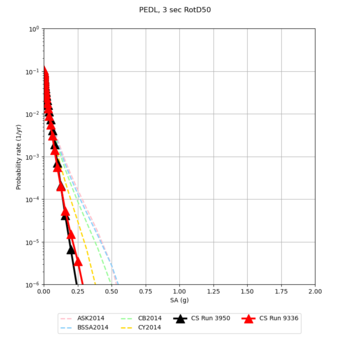

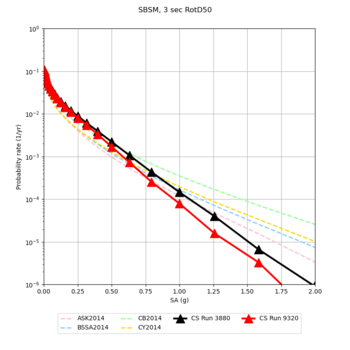

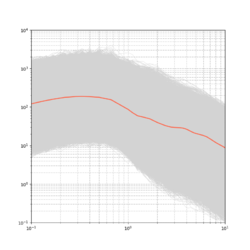

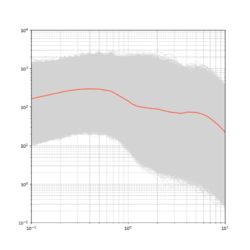

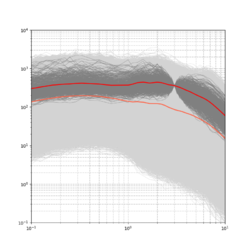

| − | ! Site !! No GTL !! 700m GTL !! Smaller value, extracted from mesh | + | ! Site !! No GTL !! 700m GTL !! Smaller value, extracted from mesh !! 3 sec comparison curve<br/>Study 15.4 w/no taper and v3.3.1, <span style="color:red">Study 22.12 w/taper and v5.5.2</span> |

|- | |- | ||

! LAPD | ! LAPD | ||

| Line 62: | Line 116: | ||

| [[File:cvmsi_elygtl_ely_z_700m_LAPD.png|thumb|300px]] | | [[File:cvmsi_elygtl_ely_z_700m_LAPD.png|thumb|300px]] | ||

| [[File:LAPD_ifless_profile.png|thumb|300px]] | | [[File:LAPD_ifless_profile.png|thumb|300px]] | ||

| + | | [[File:LAPD_3875_v_9538_3sec.png|thumb|350px]] | ||

|- | |- | ||

<!-- ! s650 | <!-- ! s650 | ||

| Line 71: | Line 126: | ||

| [[File:cvmsi_elygtl_ely_z_700m_s764.png|thumb|300px]] | | [[File:cvmsi_elygtl_ely_z_700m_s764.png|thumb|300px]] | ||

| [[File:s764_ifless_profile.png|thumb|300px]] | | [[File:s764_ifless_profile.png|thumb|300px]] | ||

| + | | [[File:s764_4248_v_9530_3sec.png|thumb|350px]] | ||

|- | |- | ||

! s568 | ! s568 | ||

| Line 76: | Line 132: | ||

| [[File:cvmsi_elygtl_ely_z_700m_s568.png|thumb|300px]] | | [[File:cvmsi_elygtl_ely_z_700m_s568.png|thumb|300px]] | ||

| [[File:s568_ifless_profile.png|thumb|300px]] | | [[File:s568_ifless_profile.png|thumb|300px]] | ||

| + | | [[File:s568_4148_v_9489_3sec.png|thumb|350px]] | ||

|- | |- | ||

! s035 | ! s035 | ||

| Line 81: | Line 138: | ||

| [[File:cvmsi_elygtl_ely_z_700m_s035.png|thumb|300px]] | | [[File:cvmsi_elygtl_ely_z_700m_s035.png|thumb|300px]] | ||

| [[File:s035_ifless_profile.png|thumb|300px]] | | [[File:s035_ifless_profile.png|thumb|300px]] | ||

| + | | [[File:s035_3981_v_9601_3sec.png|thumb|350px]] | ||

|- | |- | ||

<!-- ! RIDG | <!-- ! RIDG | ||

| Line 90: | Line 148: | ||

| [[File:cvmsi_elygtl_ely_z_700m_PERR.png|thumb|300px]] | | [[File:cvmsi_elygtl_ely_z_700m_PERR.png|thumb|300px]] | ||

| [[File:PERR_ifless_profile.png|thumb|300px]] | | [[File:PERR_ifless_profile.png|thumb|300px]] | ||

| + | | [[File:PERR_3951_v_9330_3sec.png|thumb|350px]] | ||

|- | |- | ||

! MRVY | ! MRVY | ||

| Line 95: | Line 154: | ||

| [[File:cvmsi_elygtl_ely_z_700m_MRVY.png|thumb|300px]] | | [[File:cvmsi_elygtl_ely_z_700m_MRVY.png|thumb|300px]] | ||

| [[File:MRVY_ifless_profile.png|thumb|300px]] | | [[File:MRVY_ifless_profile.png|thumb|300px]] | ||

| + | | [[File:MRVY_3920_v_9565_3sec.png|thumb|350px]] | ||

|- | |- | ||

! s211 | ! s211 | ||

| Line 100: | Line 160: | ||

| [[File:cvmsi_elygtl_ely_z_700m_s211.png|thumb|300px]] | | [[File:cvmsi_elygtl_ely_z_700m_s211.png|thumb|300px]] | ||

| [[File:s211_ifless_profile.png|thumb|300px]] | | [[File:s211_ifless_profile.png|thumb|300px]] | ||

| + | | [[File:s211_4033_v_9435_3sec.png|thumb|350px]] | ||

|- | |- | ||

<!-- ! PIBU | <!-- ! PIBU | ||

| Line 110: | Line 171: | ||

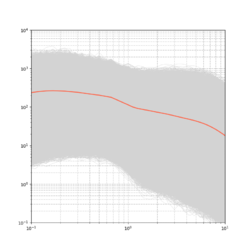

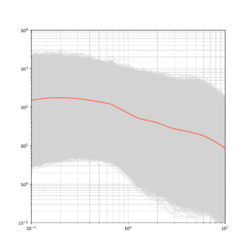

{| | {| | ||

| − | ! Site !! No GTL !! 700m GTL !! Smaller value | + | ! Site !! No GTL !! 700m GTL !! Smaller value !! 3 sec comparison curve<br/>Study 15.4 w/no taper and v3.3.1, <span style="color:red">Study 22.12 w/taper and v5.5.2</span> |

|- | |- | ||

! s117 | ! s117 | ||

| Line 116: | Line 177: | ||

| [[File:cvmsi_elygtl_ely_z_700m_s117.png|thumb|300px]] | | [[File:cvmsi_elygtl_ely_z_700m_s117.png|thumb|300px]] | ||

| [[File:s117_ifless_profile.png|thumb|300px]] | | [[File:s117_ifless_profile.png|thumb|300px]] | ||

| + | | [[File:s117_4004_v_9415_3sec.png|thumb|350px]] | ||

|- | |- | ||

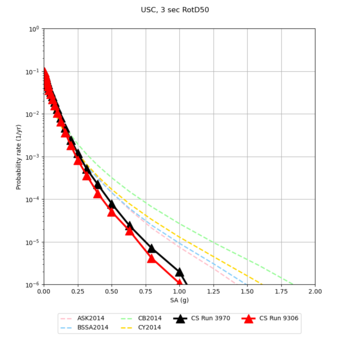

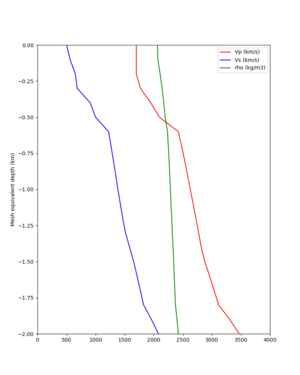

! USC | ! USC | ||

| Line 121: | Line 183: | ||

| [[File:cvmsi_elygtl_ely_z_700m_USC.png|thumb|300px]] | | [[File:cvmsi_elygtl_ely_z_700m_USC.png|thumb|300px]] | ||

| [[File:USC_ifless_profile.png|thumb|300px]] | | [[File:USC_ifless_profile.png|thumb|300px]] | ||

| + | | [[File:USC_3970_v_9306_3sec.png|thumb|350px]] | ||

|- | |- | ||

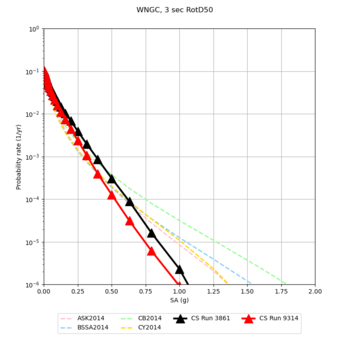

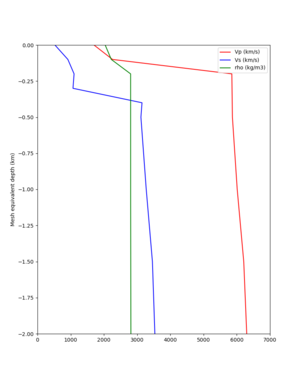

! WNGC | ! WNGC | ||

| Line 126: | Line 189: | ||

| [[File:cvmsi_elygtl_ely_z_700m_WNGC.png|thumb|300px]] | | [[File:cvmsi_elygtl_ely_z_700m_WNGC.png|thumb|300px]] | ||

| [[File:WNGC_ifless_profile.png|thumb|300px]] | | [[File:WNGC_ifless_profile.png|thumb|300px]] | ||

| + | | [[File:WNGC_3861_v_9314_3sec.png|thumb|350px]] | ||

|- | |- | ||

! PEDL | ! PEDL | ||

| Line 131: | Line 195: | ||

| [[File:cvmsi_elygtl_ely_z_700m_PEDL.png|thumb|300px]] | | [[File:cvmsi_elygtl_ely_z_700m_PEDL.png|thumb|300px]] | ||

| [[File:PEDL_ifless_profile.png|thumb|300px]] | | [[File:PEDL_ifless_profile.png|thumb|300px]] | ||

| + | | [[File:PEDL_3950_v_9336_3sec.png|thumb|350px]] | ||

|- | |- | ||

! SBSM | ! SBSM | ||

| Line 136: | Line 201: | ||

| [[File:cvmsi_elygtl_ely_z_700m_SBSM.png|thumb|300px]] | | [[File:cvmsi_elygtl_ely_z_700m_SBSM.png|thumb|300px]] | ||

| [[File:SBSM_ifless_profile.png|thumb|300px]] | | [[File:SBSM_ifless_profile.png|thumb|300px]] | ||

| + | | [[File:SBSM_3880_v_9320_3sec.png|thumb|350px]] | ||

|- | |- | ||

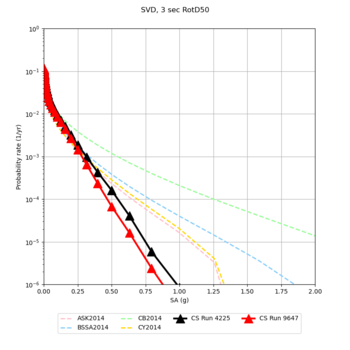

! SVD | ! SVD | ||

| Line 141: | Line 207: | ||

| [[File:cvmsi_elygtl_ely_z_700m_SVD.png|thumb|300px]] | | [[File:cvmsi_elygtl_ely_z_700m_SVD.png|thumb|300px]] | ||

| [[File:SVD_ifless_profile.png|thumb|300px]] | | [[File:SVD_ifless_profile.png|thumb|300px]] | ||

| + | | [[File:SVD_4225_v_9647_3sec.png|thumb|350px]] | ||

|- | |- | ||

|} | |} | ||

| − | === | + | === Merged Taper Algorithm === |

| − | Our | + | Our algorithm for generating the velocity model, which we are calling the 'merged taper', is as follows: |

#Set the surface mesh point to a depth of 25m. | #Set the surface mesh point to a depth of 25m. | ||

#Query the CVM-S4.26.M01 model ('cvmsi' string in UCVM) for each grid point. | #Query the CVM-S4.26.M01 model ('cvmsi' string in UCVM) for each grid point. | ||

| − | #Calculate the Ely taper at that point using 700m as the transition depth. | + | #Calculate the Ely taper at that point using 700m as the transition depth. Note that the Ely taper uses the Thompson Vs30 values in constraining the taper near the surface. |

#Compare the values before and after the taper modification; at each grid point down to the transition depth, use the value from the method with the lower Vs value. | #Compare the values before and after the taper modification; at each grid point down to the transition depth, use the value from the method with the lower Vs value. | ||

#Check values for Vp/Vs ratio, minimum Vs, Inf/NaNs, etc. | #Check values for Vp/Vs ratio, minimum Vs, Inf/NaNs, etc. | ||

| Line 156: | Line 223: | ||

=== Value constraints === | === Value constraints === | ||

| − | We impose the following constraints on velocity mesh values | + | We impose the following constraints (in this order) on velocity mesh values. The ones in bold are new for Study 22.12. |

| + | #Vs >= 500 m/s. If lower, <b>Calculate the Vp/Vs ratio. Set Vs=500, and Vp=Vs*(Vp/Vs ratio) [which in this case is Vp=500*(Vp/Vs ratio)].</b> | ||

#Vp >= 1700 m/s. If lower, Vp is set to 1700. | #Vp >= 1700 m/s. If lower, Vp is set to 1700. | ||

| − | | + | #rho >= 1700 kg/m3. If lower, rho is set to 1700. |

| − | #rho | ||

#Vp/Vs >= 1.45. If not, Vs is set to Vp/1.45. | #Vp/Vs >= 1.45. If not, Vs is set to Vp/1.45. | ||

| − | |||

| − | |||

| − | |||

| − | |||
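The ordered constraints listed above can be sketched as a single function. This is an illustrative reading of the list, not the production mesh generator; the function and constant names are hypothetical, and the inputs are assumed to be positive, finite values.

```python
MIN_VS = 500.0      # m/s
MIN_VP = 1700.0     # m/s
MIN_RHO = 1700.0    # density floor (kg/m^3)
MIN_VP_VS = 1.45    # minimum allowed Vp/Vs ratio


def apply_constraints(vp, vs, rho):
    """Apply the value constraints in the order given in the list above."""
    # 1. Vs floor, scaling Vp so the original Vp/Vs ratio is preserved.
    if vs < MIN_VS:
        ratio = vp / vs
        vs = MIN_VS
        vp = vs * ratio
    # 2. Vp floor.
    if vp < MIN_VP:
        vp = MIN_VP
    # 3. Density floor.
    if rho < MIN_RHO:
        rho = MIN_RHO
    # 4. Vp/Vs floor, enforced by lowering Vs.
    if vp / vs < MIN_VP_VS:
        vs = vp / MIN_VP_VS
    return vp, vs, rho
```

For example, a point with Vp=1600, Vs=400 has a Vp/Vs ratio of 4, so the Vs floor raises it to Vs=500 and Vp=2000. Because the Vp floor is applied afterwards, a point whose scaled Vp is still below 1700 m/s ends up with a larger Vp/Vs ratio than it started with.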

=== Cross-sections === | === Cross-sections === | ||

| Line 234: | Line 297: | ||

In the future we plan for this approach to be implemented in UCVM, and we can simplify the CyberShake query code. | In the future we plan for this approach to be implemented in UCVM, and we can simplify the CyberShake query code. | ||

| + | |||

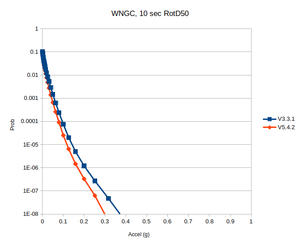

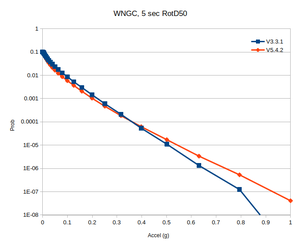

| + | === Impact on hazard === | ||

| + | |||

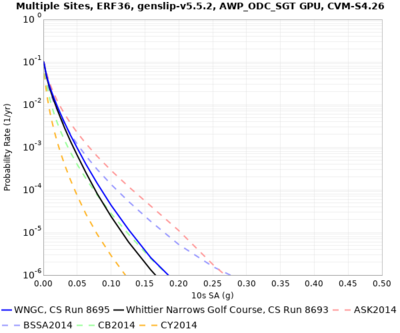

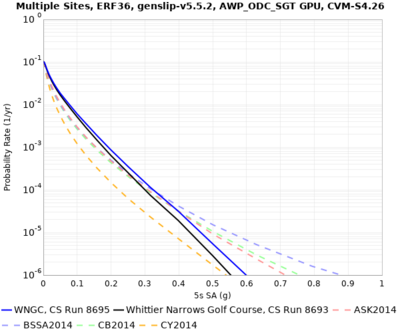

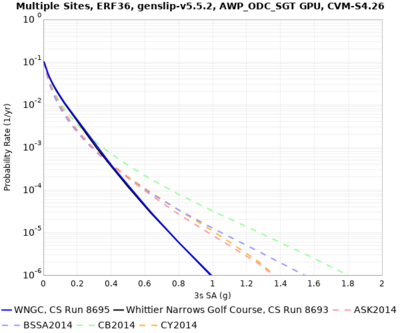

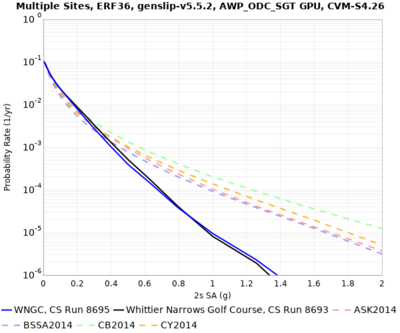

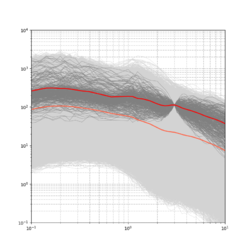

| + | Below are hazard curves calculated with the old approach, and with the implementation described above, for WNGC. | ||

| + | |||

| + | {| | ||

| + | | [[File:WNGC_run8695_v_8693_10sec.png|thumb|black=using CVM-S4.26.M01,blue=using approach with smaller Vs|400px]] | ||

| + | | [[File:WNGC_run8695_v_8693_5sec.png|thumb|black=using CVM-S4.26.M01,blue=using approach with smaller Vs|400px]] | ||

| + | | [[File:WNGC_run8695_v_8693_3sec.png|thumb|black=using CVM-S4.26.M01,blue=using approach with smaller Vs|400px]] | ||

| + | | [[File:WNGC_run8695_v_8693_2sec.png|thumb|black=using CVM-S4.26.M01,blue=using approach with smaller Vs|400px]] | ||

| + | |} | ||

| + | |||

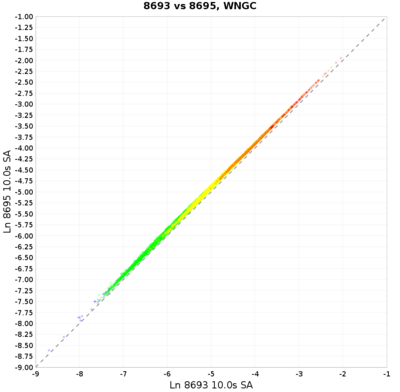

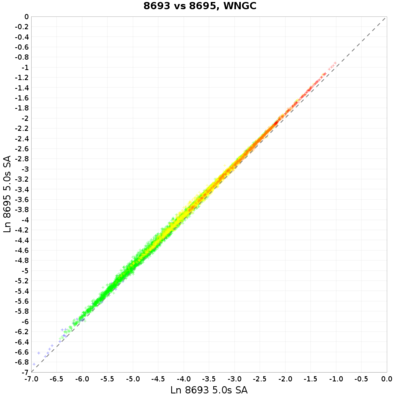

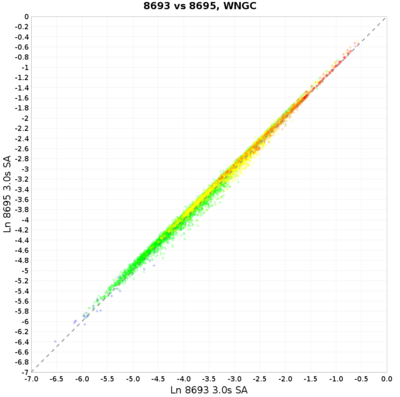

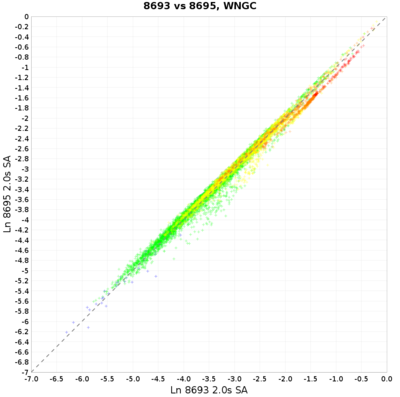

| + | These are scatterplots for the above WNGC curves. | ||

| + | |||

| + | {| | ||

| + | | [[File:WNGC_run8695_v_8693_scatter_10sec.png|thumb|black=using CVM-S4.26.M01,blue=using approach with smaller Vs|400px]] | ||

| + | | [[File:WNGC_run8695_v_8693_scatter_5sec.png|thumb|black=using CVM-S4.26.M01,blue=using approach with smaller Vs|400px]] | ||

| + | | [[File:WNGC_run8695_v_8693_scatter_3sec.png|thumb|black=using CVM-S4.26.M01,blue=using approach with smaller Vs|400px]] | ||

| + | | [[File:WNGC_run8695_v_8693_scatter_2sec.png|thumb|black=using CVM-S4.26.M01,blue=using approach with smaller Vs|400px]] | ||

| + | |} | ||

| + | |||

| + | === Velocity Model Info === | ||

| + | |||

| + | For Study 22.12, we track and use a number of velocity model-related parameters, in the database and as input to the broadband codes. Below we track where values come from and how they are used. | ||

| + | |||

| + | ==== Broadband codes ==== | ||

| + | |||

| + | *The code that is used to calculate high-frequency seismograms takes three parameters: vs30, vref, and vpga. vref and vpga are 500 m/s. Vs30 comes from the 'Target_Vs30' parameter in the database, which itself comes from the Thompson et al. (2020) model. | ||

| + | *The low-frequency site response code also has three parameters: vs30, vref, and vpga. Vs30 also comes from the 'Target_Vs30' parameter in the database, which itself comes from the Thompson et al. (2020) model. Vref is calculated as ('Model_Vs30' database parameter) * (VsD500)/(Vs500). Vpga is 500.0. | ||

| + | **Model_Vs30 is calculated using a slowness average of the top 30 m from UCVM. | ||

| + | **VsD500 is the slowness average of the grid points at the surface, 100m, 200m, 300m, 400m, and 500m depth. Note that for this study, the surface grid point is populated by querying UCVM at a depth of 25m. | ||

| + | **Vs500 is the slowness average of the top 500m from UCVM. | ||
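The slowness averages and the effective vref described above can be sketched as follows. This is a simplified illustration: it assumes every sample carries equal weight (the production code may weight the end points differently), and the function names are hypothetical.

```python
def slowness_average(vs_values):
    """Slowness average of Vs samples: the reciprocal of the mean of 1/Vs
    (a harmonic mean). Assumes equally weighted, positive samples."""
    return len(vs_values) / sum(1.0 / v for v in vs_values)


def effective_vref(model_vs30, vsd500, vs500):
    """Vref = Model_Vs30 * VsD500 / Vs500, as stated above."""
    return model_vs30 * vsd500 / vs500


# Hypothetical mesh values at the surface, 100 m, ..., 500 m (m/s).
mesh_vs = [500.0, 600.0, 750.0, 900.0, 1000.0, 1100.0]
vsd500 = slowness_average(mesh_vs)
```

Because slow layers dominate a slowness average, the result is always at or below the arithmetic mean of the same samples.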

| + | |||

| + | ==== Database values ==== | ||

| + | |||

| + | The following values related to velocity structure are tracked in the database: | ||

| + | |||

| + | *Model_Vs30: This Vs30 value is calculated by taking a slowness average at 1-meter increments from [0.5, 29.5] and querying UCVM, with the merged taper applied. This value is populated in low-frequency runs, and copied over to the corresponding broadband run. | ||

| + | *Mesh_Vsitop_ID: This identifies what algorithm was used to populate the surface grid point. For Study 22.12, we query the model at a depth of (grid spacing/4), or 25m. | ||

| + | *Mesh_Vsitop: The value of the surface grid point at the site, populated using the algorithm specified in Mesh_Vsitop_ID. | ||

| + | *Minimum_Vs: Minimum Vs value used in generating the mesh. For Study 22.12, this is 500 m/s. | ||

| + | *Wills_Vs30: Wills Vs30 value. Currently this parameter is unpopulated. | ||

| + | *Z1_0: Z1.0 value. This is calculated by querying UCVM with the merged taper at 10m increments. If there is more than one crossing of 1000 m/s, we select the second crossing. If there is only 1 crossing, we use that. | ||

| + | *Z2_5: Z2.5 value. This is calculated by querying UCVM with the merged taper at 10m increments. If there is more than one crossing of 2500 m/s, we select the second crossing. If there is only 1 crossing, we use that. | ||

| + | *Vref_eff_ID: This identifies the algorithm used to calculate the effective vref value used in the low-frequency site response. For Study 22.12, it is Model_Vs30 * VsD500/Vs500. | ||

| + | *Vref_eff: This is the value obtained when following the algorithm identified in Vref_eff_ID. | ||

| + | *Vs30_Source: Source of the Target_Vs30 value. For Study 22.12, this is Thompson et al. (2020). | ||

| + | *Target_Vs30: This is the value used for Vs30 when performing site response. It's populated using the source in Vs30_Source. | ||
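The crossing rule used for Z1_0 and Z2_5 can be sketched as below. This is one possible reading, not the database population code: it assumes a "crossing" means Vs rising from below the threshold to at or above it between consecutive samples, and it reports the depth of the deeper sample. The function name `z_depth` and the sample profile are hypothetical.

```python
def z_depth(depths_m, vs_values, threshold):
    """Return the depth of the second upward crossing of `threshold`,
    or the only crossing if there is just one, or None if there is none.

    Assumption: a crossing is Vs going from below the threshold to at or
    above it between two consecutive samples.
    """
    crossings = []
    for i in range(1, len(depths_m)):
        if vs_values[i - 1] < threshold <= vs_values[i]:
            crossings.append(depths_m[i])
    if not crossings:
        return None
    return crossings[1] if len(crossings) > 1 else crossings[0]


# Hypothetical profile sampled every 10 m, with a velocity inversion.
depths = [0.0, 10.0, 20.0, 30.0, 40.0, 50.0]
vs = [800.0, 1100.0, 900.0, 950.0, 1200.0, 1300.0]
z1_0 = z_depth(depths, vs, 1000.0)
```

Selecting the second crossing means a thin fast layer above a velocity inversion does not set the reported basin depth.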

| + | |||

| + | == Rupture Generator == | ||

| + | |||

| + | <b>Summary: We are using the Graves & Pitarka generator v5.5.2, the same version as used in the BBP v22.4, for Study 22.12.</b> | ||

| + | |||

| + | === Initial Findings === | ||

| + | |||

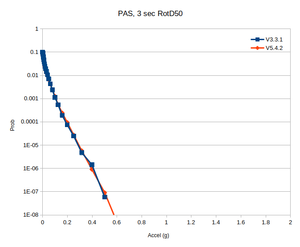

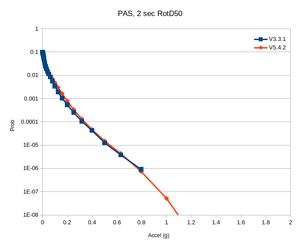

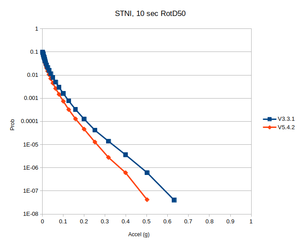

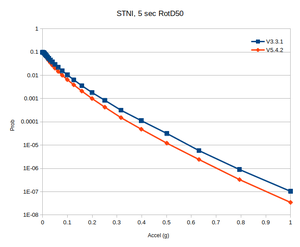

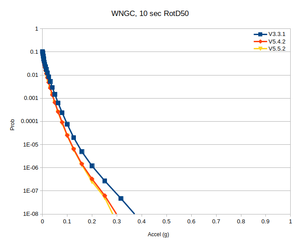

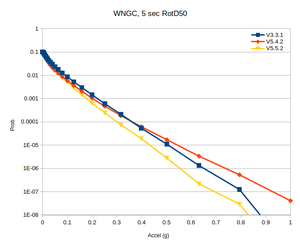

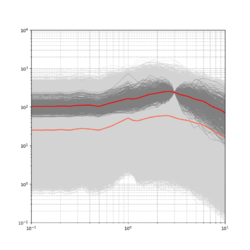

| + | In determining what rupture generator to use for this study, we performed tests with WNGC, USC, PAS, and STNI, and compared hazard curves generated with v5.4.2 to those from Study 15.4: | ||

| + | |||

| + | {| | ||

| + | ! Site !! 10 sec !! 5 sec !! 3 sec !! 2 sec | ||

| + | |- | ||

| + | ! WNGC | ||

| + | | [[File:WNGC_run8685_v_3861_10sec.png|thumb|300px]] | ||

| + | | [[File:WNGC_run8685_v_3861_5sec.png|thumb|300px]] | ||

| + | | [[File:WNGC_run8685_v_3861_3sec.png|thumb|300px]] | ||

| + | | [[File:WNGC_run8685_v_3861_2sec.png|thumb|300px]] | ||

| + | |- | ||

| + | ! USC | ||

| + | | [[File:USC_run8691_v_3970_10sec.png|thumb|300px]] | ||

| + | | [[File:USC_run8691_v_3970_5sec.png|thumb|300px]] | ||

| + | | [[File:USC_run8691_v_3970_3sec.png|thumb|300px]] | ||

| + | | [[File:USC_run8691_v_3970_2sec.png|thumb|300px]] | ||

| + | |- | ||

| + | ! PAS | ||

| + | | [[File:PAS_run8687_v_3878_10sec.png|thumb|300px]] | ||

| + | | [[File:PAS_run8687_v_3878_5sec.png|thumb|300px]] | ||

| + | | [[File:PAS_run8687_v_3878_3sec.png|thumb|300px]] | ||

| + | | [[File:PAS_run8687_v_3878_2sec.png|thumb|300px]] | ||

| + | |- | ||

| + | ! STNI | ||

| + | | [[File:STNI_run8689_v_3873_10sec.png|thumb|300px]] | ||

| + | | [[File:STNI_run8689_v_3873_5sec.png|thumb|300px]] | ||

| + | | [[File:STNI_run8689_v_3873_3sec.png|thumb|300px]] | ||

| + | | [[File:STNI_run8689_v_3873_2sec.png|thumb|300px]] | ||

| + | |} | ||

| + | |||

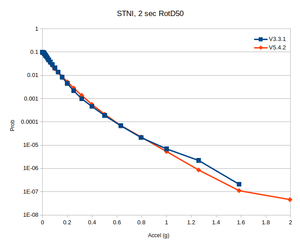

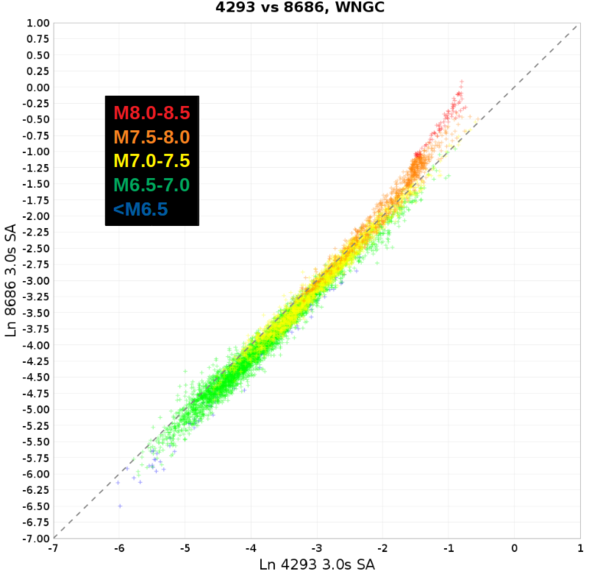

| + | Digging in further, it appears the elevated hazard curves for WNGC at 2 and 3 seconds are predominantly due to large-magnitude southern San Andreas events producing larger ground motions. | ||

| + | |||

| + | {| | ||

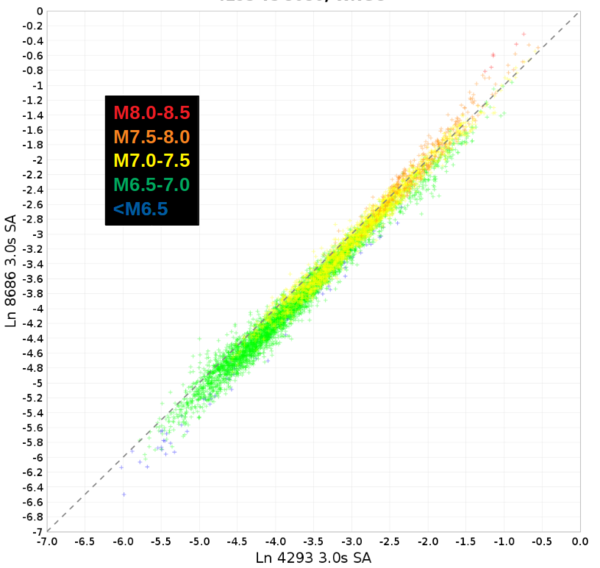

| + | | [[File:WNGC_8686_v_4293_scatter_colorbymag_3sec.png|thumb|600px|Scatter plot comparing the mean 3sec RotD50 for each rupture, between v3.3.1 and v5.4.2]] | ||

| + | |- | ||

| + | | [[File:WNGC_8686_v_4293_scatter_colorbymag_3sec_noSAFM8.png|thumb|600px|Scatter plot comparing the mean 3sec RotD50 for each rupture, between v3.3.1 and v5.4.2, SAF events>M8 excluded]] | ||

| + | |- | ||

| + | | [[File:WNGC_8686_v_4293_scatter_colorbymag_3sec_noSAFM7_5.png|thumb|600px|Scatter plot comparing the mean 3sec RotD50 for each rupture, between v3.3.1 and v5.4.2, SAF events>M7.5 excluded]] | ||

| + | |} | ||

| + | |||

| + | USC, PAS, and STNI also all see larger ground motions at 2-3 seconds from these same events, but the effect isn't as strong, so it only shows up in the tails of the hazard curves. | ||

| + | |||

| + | We homed in on source 68, rupture 7, a M8.45 on the southern San Andreas which produced the largest WNGC ground motions at 3 seconds, up to 5.1g. We calculated spectral plots for v3.3.1 and v5.4.2 for all 4 sites: | ||

| + | |||

| + | {| | ||

| + | ! Site !! v3.3.1 !! v5.4.2 | ||

| + | |- | ||

| + | ! WNGC | ||

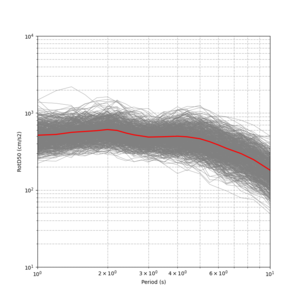

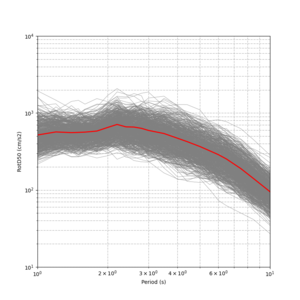

| + | | [[File:WNGC_3861_v3.3.1_spectral_s68_r7.png|thumb|300px]] | ||

| + | | [[File:WNGC_8685_v5.4.2_spectral_s68_r7.png|thumb|300px]] | ||

| + | |- | ||

| + | ! USC | ||

| + | | [[File:USC_3970_v3.3.1_spectral_s68_r7.png|thumb|300px]] | ||

| + | | [[File:USC_8691_v5.4.2_spectral_s68_r7.png|thumb|300px]] | ||

| + | |- | ||

| + | ! PAS | ||

| + | | [[File:PAS_3878_v3.3.1_spectral_s68_r7.png|thumb|300px]] | ||

| + | | [[File:PAS_8687_v5.4.2_spectral_s68_r7.png|thumb|300px]] | ||

| + | |- | ||

| + | ! STNI | ||

| + | | [[File:STNI_3873_v3.3.1_spectral_s68_r7.png|thumb|300px]] | ||

| + | | [[File:STNI_8689_v5.4.2_spectral_s68_r7.png|thumb|300px]] | ||

| + | |} | ||

| + | |||

| + | === v5.5.2 === | ||

| + | |||

| + | We decided to try v5.5.2 of the rupture generator, which typically has less spectral content at short periods: | ||

| + | |||

| + | {| | ||

| + | ! Site !! v3.3.1 !! v5.4.2 !! v5.5.2 | ||

| + | |- | ||

| + | ! WNGC | ||

| + | | [[File:WNGC_3861_v3.3.1_spectral_s68_r7.png|thumb|300px]] | ||

| + | | [[File:WNGC_8685_v5.4.2_spectral_s68_r7.png|thumb|300px]] | ||

| + | | [[File:WNGC_8693_v5.5.2_spectral_s68_r7.png|thumb|300px]] | ||

| + | |} | ||

| + | |||

| + | ==== Hazard Curves ==== | ||

| + | |||

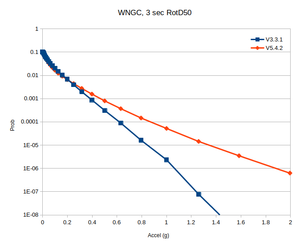

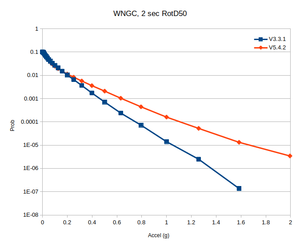

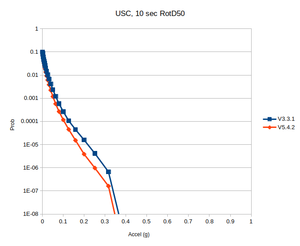

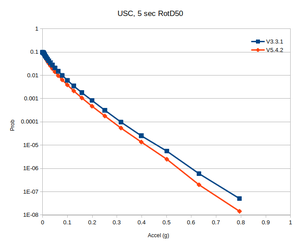

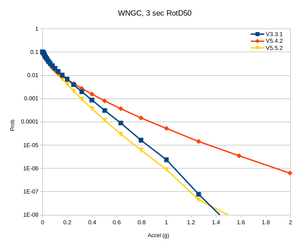

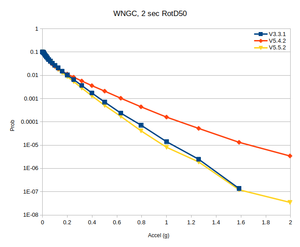

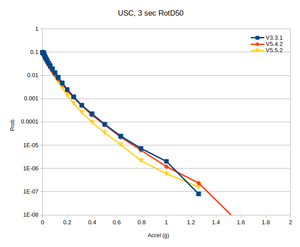

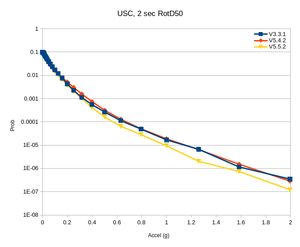

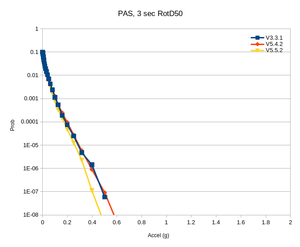

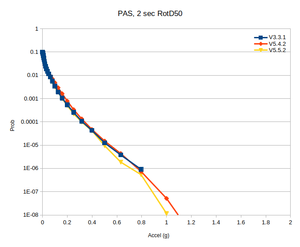

| + | Comparison hazard curves for the three rupture generators, using no taper. | ||

| + | |||

| + | {| | ||

| + | ! Site !! 10 sec !! 5 sec !! 3 sec !! 2 sec | ||

| + | |- | ||

| + | ! WNGC | ||

| + | | [[File:WNGC_rg_compare_10sec.png|thumb|300px]] | ||

| + | | [[File:WNGC_rg_compare_5sec.png|thumb|300px]] | ||

| + | | [[File:WNGC_rg_compare_3sec.png|thumb|300px]] | ||

| + | | [[File:WNGC_rg_compare_2sec.png|thumb|300px]] | ||

| + | |- | ||

| + | ! USC | ||

| + | | [[File:USC_rg_compare_notaper_10sec.png|thumb|300px]] | ||

| + | | [[File:USC_rg_compare_notaper_5sec.png|thumb|300px]] | ||

| + | | [[File:USC_rg_compare_notaper_3sec.png|thumb|300px]] | ||

| + | | [[File:USC_rg_compare_notaper_2sec.png|thumb|300px]] | ||

| + | |} | ||

| + | |||

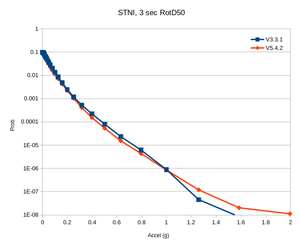

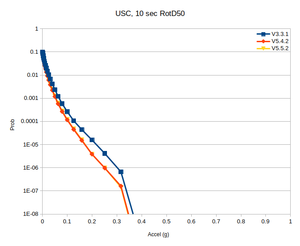

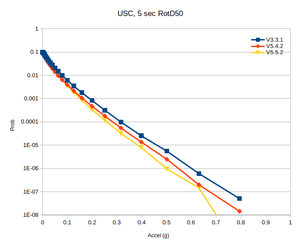

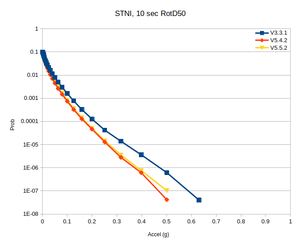

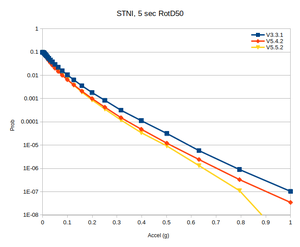

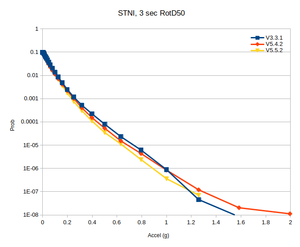

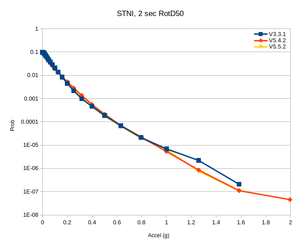

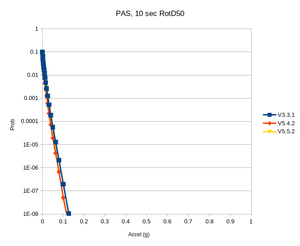

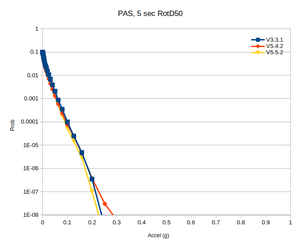

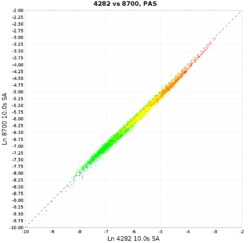

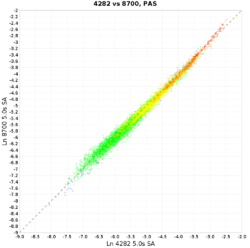

| + | Comparison curves for the three rupture generators, using the smaller value in the velocity model. | ||

| + | |||

| + | {| | ||

| + | ! Site !! 10 sec !! 5 sec !! 3 sec !! 2 sec | ||

| + | |- | ||

| + | ! STNI | ||

| + | | [[File:STNI_rg_compare_taper_10sec.png|thumb|300px]] | ||

| + | | [[File:STNI_rg_compare_taper_5sec.png|thumb|300px]] | ||

| + | | [[File:STNI_rg_compare_taper_3sec.png|thumb|300px]] | ||

| + | | [[File:STNI_rg_compare_taper_2sec.png|thumb|300px]] | ||

| + | |- | ||

| + | ! PAS | ||

| + | | [[File:PAS_rg_compare_taper_10sec.png|thumb|300px]] | ||

| + | | [[File:PAS_rg_compare_taper_5sec.png|thumb|300px]] | ||

| + | | [[File:PAS_rg_compare_taper_3sec.png|thumb|300px]] | ||

| + | | [[File:PAS_rg_compare_taper_2sec.png|thumb|300px]] | ||

| + | |} | ||

| + | |||

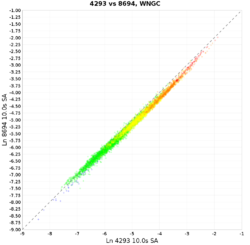

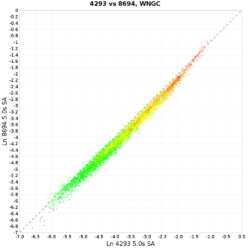

| + | ==== Scatter Plots ==== | ||

| + | |||

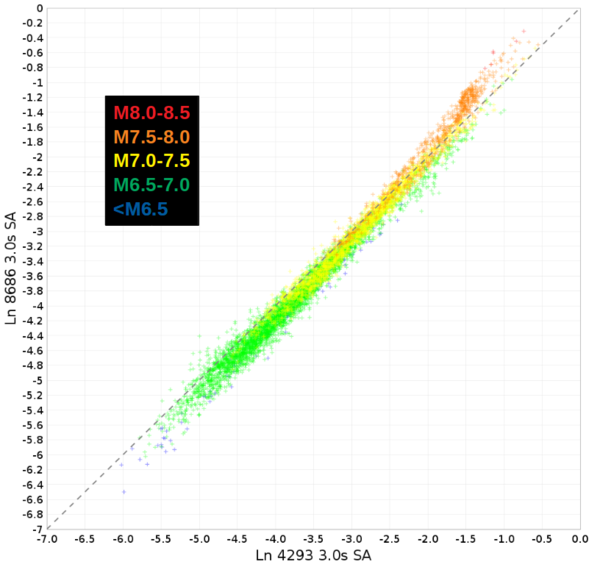

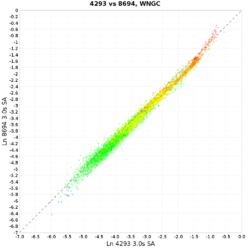

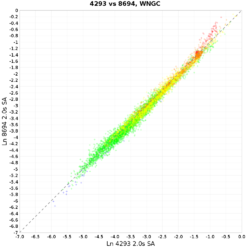

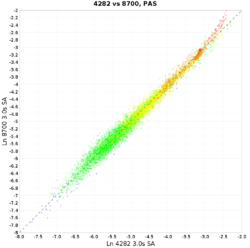

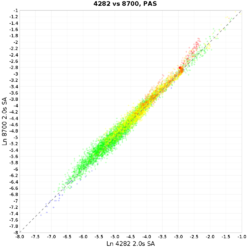

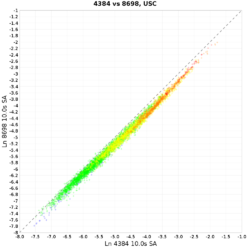

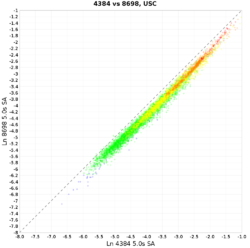

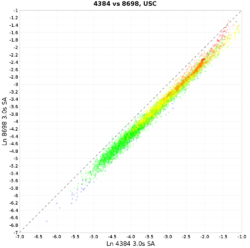

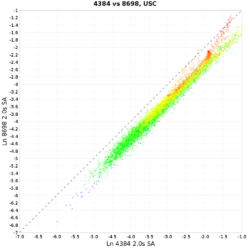

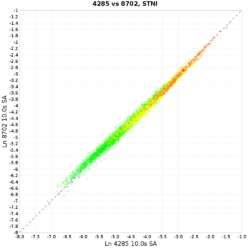

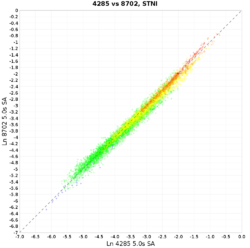

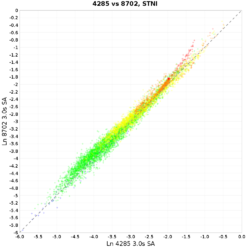

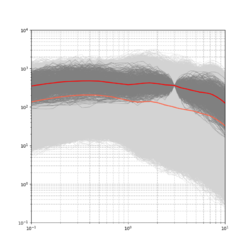

| + | Scatter plots, comparing results with v5.5.2 to results from Study 15.12 by plotting the mean ground motion for each rupture. The colors are magnitude bins (blue: <M6.5; green: M6.5-7; yellow: M7-7.5; orange: M7.5-8; red: M8+). | ||

| + | |||

| + | {| | ||

| + | ! Site !! 10 sec !! 5 sec !! 3 sec !! 2 sec | ||

| + | |- | ||

| + | ! WNGC | ||

| + | | [[File:WNGC_8694_v_4293_scatter_10sec.png|thumb|250px]] | ||

| + | | [[File:WNGC_8694_v_4293_scatter_5sec.png|thumb|250px]] | ||

| + | | [[File:WNGC_8694_v_4293_scatter_3sec.png|thumb|250px]] | ||

| + | | [[File:WNGC_8694_v_4293_scatter_2sec.png|thumb|250px]] | ||

| + | |- | ||

| + | ! PAS | ||

| + | | [[File:PAS_8700_v_4282_scatter_10sec.png|thumb|250px]] | ||

| + | | [[File:PAS_8700_v_4282_scatter_5sec.png|thumb|250px]] | ||

| + | | [[File:PAS_8700_v_4282_scatter_3sec.png|thumb|250px]] | ||

| + | | [[File:PAS_8700_v_4282_scatter_2sec.png|thumb|250px]] | ||

| + | |- | ||

| + | ! USC | ||

| + | | [[File:USC_8698_v_4384_scatter_10sec.png|thumb|250px]] | ||

| + | | [[File:USC_8698_v_4384_scatter_5sec.png|thumb|250px]] | ||

| + | | [[File:USC_8698_v_4384_scatter_3sec.png|thumb|250px]] | ||

| + | | [[File:USC_8698_v_4384_scatter_2sec.png|thumb|250px]] | ||

| + | |- | ||

| + | ! STNI | ||

| + | | [[File:STNI_8702_v_4285_scatter_10sec.png|thumb|250px]] | ||

| + | | [[File:STNI_8702_v_4285_scatter_5sec.png|thumb|250px]] | ||

| + | | [[File:STNI_8702_v_4285_scatter_3sec.png|thumb|250px]] | ||

| + | | [[File:STNI_8702_v_4285_scatter_2sec.png|thumb|250px]] | ||

| + | |} | ||

| + | |||

| + | We also modified the risetime_coef to 2.3, from the previous default of 1.6. | ||

== High-frequency codes == | == High-frequency codes == | ||

| Line 379: | Line 645: | ||

|} | |} | ||

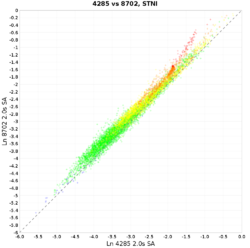

| − | == | + | === v5.5.2 === |

| + | |||

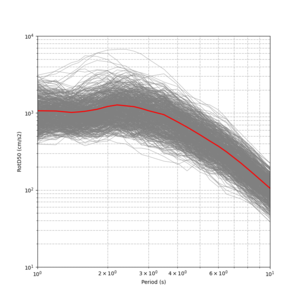

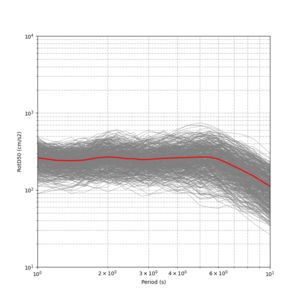

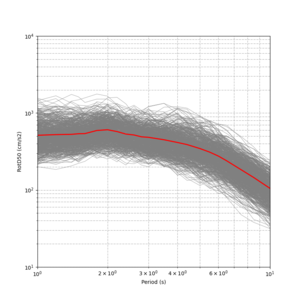

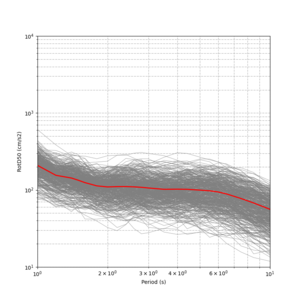

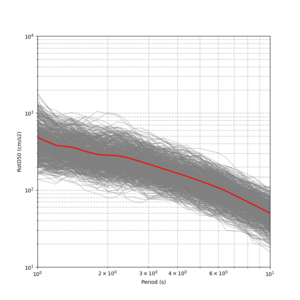

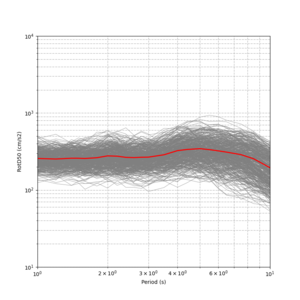

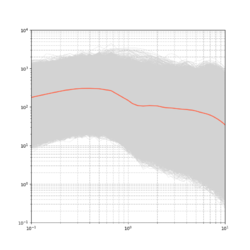

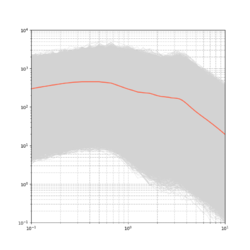

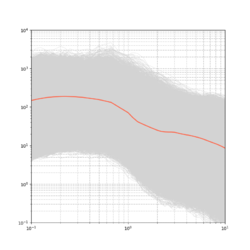

| + | We created spectral plots using results produced with rupture generator v5.5.2. These results have a much less noticeable slope around 1 sec. | ||

| + | |||

| + | {| | ||

| + | ! WNGC !! PAS !! USC !! STNI | ||

| + | |- | ||

| + | | [[File:WNGC_run8694_full_spectra.png|thumb|250px]] | ||

| + | | [[File:PAS_run8700_full_spectra.png|thumb|250px]] | ||

| + | | [[File:USC_run8698_full_spectra.png|thumb|250px]] | ||

| + | | [[File:STNI_run8702_full_spectra.png|thumb|250px]] | ||

| + | |} | ||

| + | |||

| + | == Seismogram Length == | ||

| + | |||

| + | Originally we proposed a seismogram length of 500 sec. We examined seismograms for the most distant event-station pairs in this study and concluded that most stop having any meaningful signal by around 325 sec. Thus, we are reducing the seismogram length to 400 sec, which leaves some margin of error. | ||
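The relation between seismogram length and timestep count can be checked with a short calculation. The timestep sizes used here (0.05 sec for deterministic and 0.01 sec for broadband) are not stated on this page; they are inferred from the timestep counts listed under Output Data Products, and the function name is hypothetical.

```python
def num_timesteps(duration_sec, dt_sec):
    """Number of timesteps needed to cover `duration_sec` at spacing `dt_sec`."""
    return round(duration_sec / dt_sec)


# Inferred timestep sizes (assumption, derived from the listed counts).
DETERMINISTIC_DT = 0.05  # sec
BROADBAND_DT = 0.01      # sec

deterministic_steps = num_timesteps(400, DETERMINISTIC_DT)  # 8000
broadband_steps = num_timesteps(400, BROADBAND_DT)          # 40000
original_proposal = num_timesteps(500, DETERMINISTIC_DT)    # 10000
```

Reducing the length from 500 sec to 400 sec therefore cuts the deterministic seismogram size by 20%.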

| + | |||

| + | == Validation == | ||

| + | |||

| + | The extensive validation efforts performed in preparation for this study are documented in [[Broadband_CyberShake_Validation]]. | ||

== Updates and Enhancements == | == Updates and Enhancements == | ||

| + | |||

| + | *New velocity model created, including Ely taper to 700m and tweaked rules for applying minimum values. | ||

| + | *Updated to rupture generator v5.5.2. | ||

| + | *CyberShake broadband codes now linked directly against BBP codes. | ||

| + | *Added -ffast-math compiler option to hb_high code for modest speedup. | ||

| + | *Migrated to using OpenMP version of DirectSynth code. | ||

| + | *Optimized rupture generator v5.5.2. | ||

| + | *Verification was performed against historic events, including Northridge and Landers. | ||

| + | |||

| + | === Lessons Learned from Previous Studies === | ||

| + | |||

| + | <ul> | ||

| + | <li><i>Create new velocity model ID for composite model, capturing metadata.</i></li> | ||

| + | We created a new velocity model ID to capture the model used for Study 22.12. | ||

| + | <li><i>Clear disk space before study begins to avoid disk contention.</i></li> | ||

| + | On moment, we migrated old studies to focal and optimized. | ||

| + | At CARC, we migrated old studies to OLCF HPSS. | ||

| + | <li><i>In addition to disk space, check local inode usage.</i></li> | ||

| + | CARC and shock checked; plenty of inodes are available. | ||

| + | </ul> | ||

== Output Data Products == | == Output Data Products == | ||

| Line 391: | Line 696: | ||

==== Deterministic ==== | ==== Deterministic ==== | ||

| − | *Seismograms: 2-component seismograms, | + | *Seismograms: 2-component seismograms, 8000 timesteps (400 sec) each. Study 18.8 used 10,000 timesteps, but without the southern San Andreas Fault (sSAF) events, 8000 appears to be sufficient. |

*PSA: X and Y spectral acceleration at 44 periods (10, 9.5, 9, 8.5, 8, 7.5, 7, 6.5, 6, 5.5, 5, 4.8, 4.6, 4.4, 4.2, 4, 3.8, 3.6, 3.4, 3.2, 3, 2.8, 2.6, 2.4, 2.2, 2, 1.66667, 1.42857, 1.25, 1.11111, 1, .66667, .5, .4, .33333, .285714, .25, .22222, .2, .16667, .142857, .125, .11111, .1 sec) | *PSA: X and Y spectral acceleration at 44 periods (10, 9.5, 9, 8.5, 8, 7.5, 7, 6.5, 6, 5.5, 5, 4.8, 4.6, 4.4, 4.2, 4, 3.8, 3.6, 3.4, 3.2, 3, 2.8, 2.6, 2.4, 2.2, 2, 1.66667, 1.42857, 1.25, 1.11111, 1, .66667, .5, .4, .33333, .285714, .25, .22222, .2, .16667, .142857, .125, .11111, .1 sec) | ||

*RotD: PGV, and RotD50, the RotD50 azimuth, and RotD100 at 25 periods (20, 15, 12, 10, 8.5, 7.5, 6.5, 6, 5.5, 5, 4.4, 4, 3.5, 3, 2.8, 2.6, 2.4, 2.2, 2, 1.7, 1.5, 1.3, 1.2, 1.1, 1) | *RotD: PGV, and RotD50, the RotD50 azimuth, and RotD100 at 25 periods (20, 15, 12, 10, 8.5, 7.5, 6.5, 6, 5.5, 5, 4.4, 4, 3.5, 3, 2.8, 2.6, 2.4, 2.2, 2, 1.7, 1.5, 1.3, 1.2, 1.1, 1) | ||

*Durations: for X and Y components, energy integral, Arias intensity, cumulative absolute velocity (CAV), and for both velocity and acceleration, 5-75%, 5-95%, and 20-80%. | *Durations: for X and Y components, energy integral, Arias intensity, cumulative absolute velocity (CAV), and for both velocity and acceleration, 5-75%, 5-95%, and 20-80%. | ||

| − | ==== | + | ==== Broadband ==== |

| − | *Seismograms: 2-component seismograms, | + | *Seismograms: 2-component seismograms, 40000 timesteps (400 sec) each. |

*PSA: X and Y spectral acceleration at 44 periods (10, 9.5, 9, 8.5, 8, 7.5, 7, 6.5, 6, 5.5, 5, 4.8, 4.6, 4.4, 4.2, 4, 3.8, 3.6, 3.4, 3.2, 3, 2.8, 2.6, 2.4, 2.2, 2, 1.66667, 1.42857, 1.25, 1.11111, 1, .66667, .5, .4, .33333, .285714, .25, .22222, .2, .16667, .142857, .125, .11111, .1 sec) | *PSA: X and Y spectral acceleration at 44 periods (10, 9.5, 9, 8.5, 8, 7.5, 7, 6.5, 6, 5.5, 5, 4.8, 4.6, 4.4, 4.2, 4, 3.8, 3.6, 3.4, 3.2, 3, 2.8, 2.6, 2.4, 2.2, 2, 1.66667, 1.42857, 1.25, 1.11111, 1, .66667, .5, .4, .33333, .285714, .25, .22222, .2, .16667, .142857, .125, .11111, .1 sec) | ||

*RotD: PGA, PGV, and RotD50, the RotD50 azimuth, and RotD100 at 66 periods (20, 15, 12, 10, 8.5, 7.5, 6.5, 6, 5.5, 5, 4.4, 4, 3.5, 3, 2.8, 2.6, 2.4, 2.2, 2, 1.7, 1.5, 1.3, 1.2, 1.1, 1, 0.85, 0.75, 0.65, 0.6, 0.55, 0.5, 0.45, 0.4, 0.35, 0.3, 0.28, 0.26, 0.24, 0.22, 0.2, 0.17, 0.15, 0.13, 0.12, 0.11, 0.1, 0.085, 0.075, 0.065, 0.06, 0.055, 0.05, 0.045, 0.04, 0.035, 0.032, 0.029, 0.025, 0.022, 0.02, 0.017, 0.015, 0.013, 0.012, 0.011, 0.01) | *RotD: PGA, PGV, and RotD50, the RotD50 azimuth, and RotD100 at 66 periods (20, 15, 12, 10, 8.5, 7.5, 6.5, 6, 5.5, 5, 4.4, 4, 3.5, 3, 2.8, 2.6, 2.4, 2.2, 2, 1.7, 1.5, 1.3, 1.2, 1.1, 1, 0.85, 0.75, 0.65, 0.6, 0.55, 0.5, 0.45, 0.4, 0.35, 0.3, 0.28, 0.26, 0.24, 0.22, 0.2, 0.17, 0.15, 0.13, 0.12, 0.11, 0.1, 0.085, 0.075, 0.065, 0.06, 0.055, 0.05, 0.045, 0.04, 0.035, 0.032, 0.029, 0.025, 0.022, 0.02, 0.017, 0.015, 0.013, 0.012, 0.011, 0.01) | ||

| Line 409: | Line 714: | ||

==== Deterministic ==== | ==== Deterministic ==== | ||

| − | *RotD50 and RotD100 for | + | *RotD50 and RotD100 for 6 periods (10, 7.5, 5, 4, 3, 2) |

*Duration: acceleration 5-75% and 5-95%, for both X and Y | *Duration: acceleration 5-75% and 5-95%, for both X and Y | ||

| − | ==== | + | ==== Broadband ==== |

| − | *RotD50 and RotD100 for PGA, PGV, and 19 periods (10, 7.5, 5, 4, 3, 2, 1, 0.75, 0.5, 0.4, 0.3, 0.2, 0.1, 0.075, 0.05, 0.04, 0.03, 0.02, 0.01 | + | *RotD50 and RotD100 for PGA, PGV, and 19 periods (10, 7.5, 5, 4, 3, 2, 1, 0.75, 0.5, 0.4, 0.3, 0.2, 0.1, 0.075, 0.05, 0.04, 0.03, 0.02, 0.01) |

*Duration: acceleration 5-75% and 5-95%, for both X and Y | *Duration: acceleration 5-75% and 5-95%, for both X and Y | ||

| Line 438: | Line 743: | ||

|- | |- | ||

! 4 site average | ! 4 site average | ||

| − | | | + | | 31000 || 92 || 792 |

|} | |} | ||

| − | + | 792 node-hours x 335 sites + 10% overrun margin gives an estimate of 292k node-hours for post-processing. | |

{| class="wikitable" | {| class="wikitable" | ||

| Line 448: | Line 753: | ||

|- | |- | ||

! 4 site average | ! 4 site average | ||

| − | | | + | | 31500 || 92 || 805 |

| − | |||

| − | |||

| − | |||

|} | |} | ||

| + | |||

| + | 805 node-hrs x 335 sites + 10% overrun margin gives an estimate of 297k node-hours for broadband calculations. | ||
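The two node-hour estimates above follow the same arithmetic: the per-site cost times 335 sites, plus a 10% margin. A minimal Python sketch of that calculation follows; the function and constant names are illustrative and are not taken from the CyberShake code.

```python
SITES = 335
OVERRUN_MARGIN = 0.10  # the 10% overrun margin used in the estimates


def study_node_hours(per_site_node_hours, sites=SITES, margin=OVERRUN_MARGIN):
    """Scale a per-site node-hour cost to the full study, including margin."""
    return per_site_node_hours * sites * (1.0 + margin)


post_processing = study_node_hours(792)  # about 292k node-hours
broadband = study_node_hours(805)        # about 297k node-hours
```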

=== Data Estimates === | === Data Estimates === | ||

==== Summit ==== | ==== Summit ==== | ||

| + | |||

| + | On average, each site contains 626,000 rupture variations. | ||

| + | |||

| + | These estimates assume a 400 sec seismogram. | ||

{| class="wikitable" | {| class="wikitable" | ||

| Line 463: | Line 771: | ||

|- | |- | ||

! 4 site average (GB) | ! 4 site average (GB) | ||

| − | | 177 || 1533 || 1533 || | + | | 177 || 1533 || 1533 || 225 |

|- | |- | ||

! Total for 335 sites (TB) | ! Total for 335 sites (TB) | ||

| − | | 57.8 || 501.4 || 501.4 || | + | | 57.8 || 501.4 || 501.4 || 73.6 |

|} | |} | ||

==== CARC ==== | ==== CARC ==== | ||

| − | We estimate | + | We estimate 225.6 GB/site x 335 sites = 73.8 TB in output data, which will be transferred back to CARC. After transferring Study 13.4 and 14.2, we have 66 TB free at CARC, so additional data will need to be moved. |
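As a quick check of the CARC figure, the sketch below scales the per-site output to the full study. It assumes 1 TB = 1024 GB, which is inferred from the numbers quoted here rather than stated in the text; the names are illustrative.

```python
GB_PER_TB = 1024  # assumption: binary convention, inferred from the quoted figures


def study_output_tb(gb_per_site, sites=335):
    """Total study output in TB for a given per-site output in GB."""
    return gb_per_site * sites / GB_PER_TB


carc_output_tb = study_output_tb(225.6)  # about 73.8 TB
shortfall_tb = carc_output_tb - 66.0     # space that still has to be freed at CARC
```

Under these assumptions the shortfall is a little under 8 TB.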

==== shock-carc ==== | ==== shock-carc ==== | ||

| − | The study should use approximately | + | The study should use approximately 737 GB in workflow log space on /home/shock. This drive has approximately 1.5 TB free. |

==== moment database ==== | ==== moment database ==== | ||

| Line 481: | Line 789: | ||

The PeakAmplitudes table uses approximately 99 bytes per entry. | The PeakAmplitudes table uses approximately 99 bytes per entry. | ||

| − | 99 bytes/entry * 34 entries/event (11 det + 25 stoch) * 622,636 events/site * 335 sites = 654 GB. The drive on moment with the mysql database has 771 GB free, so we will plan to migrate | + | 99 bytes/entry * 34 entries/event (11 det + 25 stoch) * 622,636 events/site * 335 sites = 654 GB. The drive on moment with the mysql database has 771 GB free, so we will plan to migrate Study 18.8 off of moment to free up additional room. |

== Lessons Learned == | == Lessons Learned == | ||

| + | |||

| + | * Make sure database space estimates include both RotD50 and RotD100 values, and also include the space required for indices. | ||

| + | |||

| + | * Add sanity checks on all intensity measures inserted, not just the geometric means (which aren't being inserted anymore). | ||

| + | |||

| + | * Test all types of bundled glidein jobs before the production run. (The AWP jobs ran fine, but we had issues with the DirectSynth jobs). | ||

| + | |||

| + | * Make sure all appropriate velocity fields in the DB are being populated. | ||

| + | |||

| + | * Come up with a solution to the out/err file issue with the BB runs. With file-per-worker, PMC gets a seg fault when the final output is combined. With file-per-task, we end up with 160k files and the filesystem becomes angry. | ||
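One possible shape for the intensity-measure sanity check mentioned above is sketched below in Python. The bounds and the function name are hypothetical; real thresholds would depend on the IM type and units, and this is not the check used in the CyberShake insertion code.

```python
import math

# Hypothetical bounds; chosen only to catch values like O(1e-30) or NaN/Inf.
MIN_PLAUSIBLE = 1e-10
MAX_PLAUSIBLE = 1e4


def implausible_ims(ims):
    """Return the names of intensity measures that fail a basic sanity check.

    `ims` maps an IM name (for example "RotD50_3s") to its value.
    """
    bad = []
    for name, value in ims.items():
        in_range = MIN_PLAUSIBLE <= value <= MAX_PLAUSIBLE
        if not math.isfinite(value) or not in_range:
            bad.append(name)
    return bad


flagged = implausible_ims({"RotD50_3s": 0.12, "RotD100_3s": 3e-30})
```

Applying the same check to every IM type before insertion, rather than to a single type, is the point of the lesson.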

== Stress Test == | == Stress Test == | ||

| + | |||

| + | As of 12/17/22, user callag has run 13627 jobs and consumed 71936.9 node-hours on Summit. | ||

| + | |||

| + | /home/shock on shock-carc has 1544778288 blocks free. | ||

| + | |||

| + | The stress test started at 15:47:42 PST on 12/17/22. | ||

| + | |||

| + | The stress test completed at 13:32:20 PST on 1/7/23, for an elapsed time of 501.7 hours. | ||

| + | |||

| + | As of 1/10/23, user callag has run 14204 jobs and consumed 115430.8 node-hours on Summit. | ||

| + | |||

| + | This works out to 2174.7 node-hours per site, 29.8% more than the initial estimate of 1675.8. | ||
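The per-site figure can be reproduced from the two Summit usage snapshots. The sketch below assumes the stress test covered 20 sites, the count given for the stress test workflows elsewhere on this page; the names are illustrative.

```python
STRESS_TEST_SITES = 20  # assumption: the 20 stress test sites


def node_hours_per_site(before, after, sites=STRESS_TEST_SITES):
    """Node-hours consumed per site between two usage snapshots."""
    return (after - before) / sites


per_site = node_hours_per_site(71936.9, 115430.8)  # about 2174.7
overrun = per_site / 1675.8 - 1.0  # fraction above the initial estimate
```

The overrun comes out at roughly 30% above the initial per-site estimate.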

| + | |||

| + | === Issues Discovered === | ||

| + | |||

| + | *<i>The cronjob submission script on shock-carc was assigning Summit as the SGT and PP host, meaning that summit-pilot wasn't considered in planning.</i> | ||

| + | We removed assignment of SGT and PP host at workflow creation time. | ||

| + | *<i>Pegasus was trying to transfer some files from CARC to shock-carc /tmp. Since CARC is accessed via the GO protocol, but /tmp isn't served through GO, there doesn't seem to be a way to do this.</i> | ||

| + | Reverted to the custom pegasus-transfer wrapper until the Pegasus group can modify Pegasus so that files which aren't used in the planning process aren't transferred to /tmp. This will probably be some time in January. | ||

| + | *<i>Runtimes for DirectSynth jobs are much slower (over 10 hours) than in testing.</i> | ||

| + | Increased the permitted memory size from 1.5 GB to 1.8 GB, to reduce the number of tasks and (hopefully) get better data reuse. | ||

| + | *<i>Velocity info job, which determines Vs30, Vs500, and VsD500 for site correction, doesn't apply the taper when calculating these values.</i> | ||

| + | Modified the velocity info job to use the taper when calculating various velocity parameters. | ||

| + | *<i>Velocity params job, which populates the database with Vs30, Z1.0, and Z2.5, doesn't apply the taper.</i> | ||

| + | Modified the velocity params job to use the taper. | ||

| + | *<i>Velocity parameters aren't being added to the broadband runs, just the low-frequency runs.</i> | ||

| + | Added a new job to the DAX generator to insert the Vs30 value from the velocity info file, and copy Z1.0 and Z2.5 from the low-frequency run. | ||

| + | *<i>Minimum Vs value isn't being added to the DB.</i> | ||

| + | Modified the Run Manager to insert a default minimum Vs value of 500 m/s in the DB when a new run is created. | ||

| + | |||

| + | == Events During Study == | ||

| + | |||

| + | *For some reason (slow filesystem?), several of the serial jobs, like PreCVM and PreSGT, kept failing due to wallclock time expiration. I adjusted the wallclock times up to avoid this. | ||

| + | |||

| + | *In testing, runtimes for the AWP_SGT jobs were around 35 minutes, so we set our pilot job run times to 40 minutes. However, this was not enough time for the AWP_SGT jobs during production, so the work would start over with every pilot job. We increased runtime to 45 minutes, but that still wasn't enough for some jobs, so we increased it again to 60 minutes. This comes at the cost of increased overhead, since the time between the end of the job and the hour is basically lost, but it is better than having jobs never finish. | ||

| + | |||

| + | *We finished the SGT calculations on 1/26. | ||

| + | |||

| + | *On 2/1 we found and fixed an issue with the OpenSHA calculation of locations, which resulted in a mismatch between the CyberShake DB source ID/rupture ID and the source ID/rupture ID in OpenSHA. This affected scatterplots and disaggregation calculations, so all disagg calcs done before this date need to be redone. | ||

| + | |||

| + | *On 2/2 we found that the DirectSynth jobs running in pilot jobs bundled in groups of 10 were segfaulting within the first ~10 sec, before any log files were written. We confirmed this issue doesn't happen with the single-node glidein jobs, and reverted to those. We will try tests with bundles of 2 jobs to see if we can identify the issue. | ||

| + | |||

| + | *We started getting excellent throughput during the week of 1/30. So as not to use up the allocation, we paused deterministic post-processing calculations on 2/8. We got approval on 2/10 to continue running without penalty, and resumed the deterministic post-processing then. | ||

| + | |||

| + | *We finished the deterministic calculations on 2/22. | ||

| + | |||

| + | *On 2/27, we got an email from OLCF about excessive load from the BB jobs on the GPFS filesystem. We are writing more files with the BB jobs - about 150k per run - but a bigger issue may be the file-per-task I/O that we turned on, increasing the number of err & out files from 6k to 160k. We turned that on to try to prevent the segfaults we were seeing when the workflow finished and the manager was trying to merge all the I/O back in. However, we may need to take that hit so we can maintain a higher number of jobs. Currently OLCF has reduced us to 4 simultaneous jobs, which means it will take about 2 weeks to finish up the BB runs. | ||

| + | |||

| + | *Looking at the initial hazard map, we identified 3 sites with way-too-low ground motions. Digging in, all the RotD values are O(1e-30). This is because the SGTs were not transferred correctly, and even though the check jobs failed, the DirectSynth job ran, the files were transferred back to CARC, the data was inserted into the DB, and curves were generated. The check that we have on the intensity measures upon insertion is only for the geometric mean values, so it should be extended to the RotD values as well. | ||

| + | |||

| + | *The broadband calculations finished on 3/6, with the rerun of s003. We just have the DB insertions for about 70% of the BB runs remaining. | ||

| + | |||

| + | === Database Space Issue === | ||

| + | |||

| + | On 2/23, we began noticing the free space on moment rapidly disappearing. In 24 hours it was reduced from 310 GB to 140 GB, with still about 75% of the broadband runs remaining. | ||

| + | |||

| + | Additionally, this coincided with many errors inserting data. What we see happening is that the loading of amplitudes aborts after a while, but doesn't return an error. Then, when the check job runs, not all the needed rupture variations are present, and the workflow aborts at this step. | ||

| + | |||

| + | The space issue happened because we made two mistakes when calculating the data size. The first is that we did not take into account the size of the indices for each entry in the PeakAmplitudes table, which is about the same size as the data itself. The second is that we forgot that we were also inserting RotD100 values for all 21 broadband periods, not just RotD50, so that's another factor of 2. | ||

| + | |||

| + | With these new values, data + index sizes for the PeakAmplitudes table average 269 bytes/row. Each broadband run has about 620k rupture variations, and we're inserting 21 periods * 2 RotD values + 4 durations for each variation. This works out to 269 bytes/IM * 46 IMs/variation * 622k variations/site = 7.2 GB/site, or 2.4 TB for the entire study. | ||
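The sketch below reproduces both the original planning estimate (99 bytes/entry, 34 entries/event) and the revised estimate above. It assumes sizes are quoted in binary gigabytes (1 GB = 1024^3 bytes), which is inferred from the figures rather than stated; the function name is illustrative.

```python
GIB = 1024 ** 3  # assumption: sizes are quoted in binary gigabytes


def peak_amps_gb_per_site(bytes_per_row, rows_per_variation, variations):
    """Estimated PeakAmplitudes footprint for one site, in GB."""
    return bytes_per_row * rows_per_variation * variations / GIB


# Original estimate: data only, 34 rows per rupture variation.
original_total_gb = peak_amps_gb_per_site(99, 34, 622_636) * 335  # about 654 GB

# Revised estimate: data + index, 21 periods * 2 RotD values + 4 durations.
rows = 21 * 2 + 4
revised_per_site_gb = peak_amps_gb_per_site(269, rows, 622_000)  # about 7.2 GB
revised_total_gb = revised_per_site_gb * 335  # roughly 2400 GB for 335 sites
```

The revised total is close to four times the original planning figure, which is consistent with the free space on moment running out well before the broadband insertions finished.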

| + | |||

| + | ==== Mitigation ==== | ||

| + | |||

| + | The immediate issues are: | ||

| + | #Failed database insertions are preventing sites from finishing. | ||

| + | #Moment will run out of space before the study completes. | ||

| + | |||

| + | These are the planned steps to be able to resume the study: | ||

| + | #Pause the broadband runs. | ||

| + | #Create a cronjob on shock-carc which will kill workflows when they hit the Load_Amps stage. This will avoid using extra space on the DB, but will make it easy to resume once more space is freed. | ||

| + | #Restart broadband workflows, knowing they'll be stopped when they get to the database stage. | ||

| + | |||

| + | Ultimately, we need to populate moment to be able to support access and comparisons. Currently, we need 1800 GB and we have 100 GB. | ||

| + | #Drop Study 17.3 from the PeakAmps table. This study has 4.5 billion rows. There is some uncertainty about how much space this will free up, but it's a minimum of 1140 GB. This increases our available space to 1240 GB. | ||

| + | #Assess how much space is available. If more space is required, consider dropping some of the test runs in the DB which we no longer need. | ||

| + | #Remove cronjob which kills workflows in Load_Amps stage. | ||

| + | #Restart workflows which were stopped at Load_Amps stage. | ||

| + | #Clean up and restart workflows with database insertion issues. | ||

| + | |||

| + | On 3/12, we ran out of database space with 93 sites remaining to be inserted, about 670 GB. | ||

| + | #I reduced the size of the 2 log files from 2 GB to 1 GB, freeing up 2 GB on the database disk. | ||

| + | #I created a temporary file 1 GB in size, so we could delete it in the future and have a little breathing room. | ||

| + | #I switched MariaDB's temporary space from /export/moment, which is basically full, to /tmp, which has about 60 GB of space. | ||

| + | #Since Study 15.12 has already been migrated to focal, we will delete Study 15.12 off moment. This should free up approximately 1460 GB, which should be plenty of space. We could consider stopping after about 200 deleted sites to speed up inserts. | ||

| + | #We will then restart workflow population. | ||

| + | #Once the study is complete, we will attach a temporary drive of 500 GB - 1 TB to moment to use as mysql temp, and then run optimize table to clean up the fragmentation and get back disk space. | ||

| + | #Once disk space is reclaimed, the temporary drive can be removed and we can begin migrating data into sqlite databases and moment-carc. | ||

| + | |||

| + | ==== Status ==== | ||

| + | |||

| + | On 2/24, moment has 134 GB free. We've stopped running new workflows and are just letting the ones currently running finish. | ||

| + | |||

| + | We began the Study 17.3 deletions the evening of 2/24. We expect they will take about a week. | ||

| + | |||

| + | We resumed the workflows the evening of 2/24, with a cronjob on shock to kill workflows when they hit the Load_Amps stage. This is for workflows starting with ID 9896. | ||

| + | |||

| + | On 2/27, we started cleaning up the runs which had incomplete inserts. | ||

| + | |||

| + | The Study 17.3 deletions finished on 3/3. We then ran 'analyze table' to see if that updates the information_schema info, but it didn't. Based on the number of rows deleted, we estimate we've freed around 1100 GB. | ||

| + | |||

| + | On 3/4, we queued up deletions of all the runs with incomplete inserts. | ||

| + | |||

| + | On 3/12, we ran out of disk space on moment, with about 25% of the broadband runs remaining to be inserted. We started deleting Study 15.12 off moment. To give it a head start, we found some runs which haven't finished their BB processing, so we turned the cronjob which kills workflows at the Load_Amps stage back on and are resuming the BB processing for these jobs. | ||

== Performance Metrics == | == Performance Metrics == | ||

| + | |||

| + | At the start of the main study, user callag had run 14466 jobs and used 116506.7 node-hours on the GEO112 allocation on Summit. | ||

| + | |||

| + | The main study began on 1/17/23 at 22:34:11 PST. | ||

| + | |||

| + | The main study ended on 4/4/23 at 04:46:40 PDT, for a main study elapsed time of 1829.2 hours. | ||

| + | |||

| + | At the end of the main study, user callag had run 25320 jobs and used 845339.1 node-hours on the GEO112 allocation. | ||
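The elapsed times quoted for the main study and the stress test can be checked with timezone-aware datetimes. Note that the main study starts in PST and ends in PDT, so the offset changes between the two timestamps. This is a sketch using fixed UTC offsets; the helper name is illustrative.

```python
from datetime import datetime, timedelta, timezone

PST = timezone(timedelta(hours=-8), "PST")
PDT = timezone(timedelta(hours=-7), "PDT")


def elapsed_hours(start, end):
    """Elapsed wall-clock hours between two timezone-aware datetimes."""
    return (end - start).total_seconds() / 3600.0


main_study = elapsed_hours(
    datetime(2023, 1, 17, 22, 34, 11, tzinfo=PST),
    datetime(2023, 4, 4, 4, 46, 40, tzinfo=PDT),
)
stress_test = elapsed_hours(
    datetime(2022, 12, 17, 15, 47, 42, tzinfo=PST),
    datetime(2023, 1, 7, 13, 32, 20, tzinfo=PST),
)
```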

| + | |||

| + | === Application Level Metrics === | ||

| + | |||

| + | *We used 728832.4 node-hours for the main study + 43493.9 for the stress test = <b>772326.3</b> node-hours total. | ||

| + | *We used 772326.3 node-hrs / 2330.9 hrs = an average of <b>331.3</b> nodes throughout the study, or 7.1% of Summit. | ||

| + | *We ran a total of 965 top-level workflows: 20 for the stress test sites + 3*315 for the other sites. | ||

| + | *We ran 20*83 + 315*(26+27+31) = 28,120 jobs successfully (retries are tracked elsewhere). | ||

| + | **Integrated workflow: 10 jobs (3 dir create, 1 stage) + AWP + PreDS + DS + BB + 2*DB + post = 83 | ||

| + | **SGT workflow: 6 jobs (2 dir create, 1 stage) + AWP = 26 | ||

| + | **PP workflow: 1 job (1 dir create) + PreDS + DS + Post + DB = 27 | ||

| + | **Stoch workflow: 8 jobs (3 dir create, 1 stage) + BB + DB + Post = 31 | ||

| + | **AWP subworkflow: 20 jobs (2 dir create, 3 stage, 2 register) | ||

| + | **PreDS subworkflow: 7 jobs (2 dir create, 1 stage) | ||

| + | **DS subworkflow: 6 jobs (1 dir create, 3 stage, 2 register) | ||

| + | **BB subworkflow: 10 jobs (2 dir create, 3 stage, 1 register) | ||

| + | **DB subworkflow: 10 jobs (2 dir create) | ||

| + | **Post subworkflow: 3 jobs (1 dir create) | ||
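The tallies above can be reproduced from the per-subworkflow job counts. In the sketch below, the integrated-workflow total of 83 is taken as stated rather than recomputed, and the 2330.9 hours is assumed to be the sum of the main study and stress test elapsed times (1829.2 + 501.7); variable names are illustrative.

```python
# Jobs per subworkflow, as listed above.
SUB = {"AWP": 20, "PreDS": 7, "DS": 6, "BB": 10, "DB": 10, "Post": 3}

sgt = 6 + SUB["AWP"]
pp = 1 + SUB["PreDS"] + SUB["DS"] + SUB["Post"] + SUB["DB"]
stoch = 8 + SUB["BB"] + SUB["DB"] + SUB["Post"]
INTEGRATED = 83  # taken as stated above, not recomputed here

# 20 stress test sites ran the integrated workflow; 315 sites ran the other three.
total_jobs = 20 * INTEGRATED + 315 * (sgt + pp + stoch)

total_node_hours = 728832.4 + 43493.9  # main study + stress test
total_hours = 1829.2 + 501.7           # assumption: main study + stress test elapsed
average_nodes = total_node_hours / total_hours
```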

| + | |||

| + | == Site Response Analysis == | ||

| + | |||

| + | After the study completed, we did additional analysis on the impact of the low-frequency site response. This work is documented here: [[Low Frequency Site Response]]. | ||

== Production Checklist == | == Production Checklist == | ||

| Line 493: | Line 944: | ||

=== Science to-dos === | === Science to-dos === | ||

| − | *Run WNGC and USC with updated velocity model. | + | *<s>Run WNGC and USC with updated velocity model.</s> |

| − | *Redo validation for Northridge and Landers with updated velocity model. | + | *<s>Redo validation for Northridge and Landers with updated velocity model, risetime_coef=2.3, and hb_high v6.1.1.</s> |

| − | *For each validation event, calculate BBP results, CS results | + | *<s>For each validation event, calculate BBP results, CS results</s> |

| − | *Check for spectral discontinuities around 1 Hz | + | *<s>Check for spectral discontinuities around 1 Hz</s> |

*<s>Decide if we should stick with rvfrac=0.8 or allow it to vary</s> | *<s>Decide if we should stick with rvfrac=0.8 or allow it to vary</s> | ||

| − | *Determine appropriate SGT, low-frequency, and high-frequency seismogram durations | + | *<s>Determine appropriate SGT, low-frequency, and high-frequency seismogram durations</s> |

| − | *Update high-frequency Vs30 to use released Thompson values | + | *<s>Update high-frequency Vs30 to use released Thompson values</s> |

| + | *<s>Modify RupGen-v5.5.2 into CyberShake API</s> | ||

| + | *<s>Test RupGen-v5.5.2</s> | ||

| + | *<s>Update to using v6.1.1 of hb_high.</s> | ||

| + | *<s>Add metadata to DB for merged taper velocity model.</s> | ||

| + | *<s>Check boundary conditions.</s> | ||

| + | *<s>Check that Te-Yang's kernel fix is being used.</s> | ||

=== Technical to-dos === | === Technical to-dos === | ||

| Line 506: | Line 963: | ||

*<s>Switch to using github repo version of CyberShake on Summit</s> | *<s>Switch to using github repo version of CyberShake on Summit</s> | ||

*<s>Update to latest UCVM (v22.7)</s> | *<s>Update to latest UCVM (v22.7)</s> | ||

| − | *Switch to optimized version of rupture generator | + | *<s>Switch to optimized version of rupture generator</s> |

| − | *Test DirectSynth code with fixed memory leak from Frontera | + | *<s>Test DirectSynth code with fixed memory leak from Frontera</s> |

| − | *Switch to using Pegasus-supported interface to Globus transfers | + | *<s>Switch to using Pegasus-supported interface to Globus transfers.</s> |

| − | *Test bundled glide-in jobs for SGT and DirectSynth jobs | + | *<s>Test bundled glide-in jobs for SGT and DirectSynth jobs</s> |

| − | *Fix seg fault in PMC running broadband processing | + | *<s>Fix seg fault in PMC running broadband processing</s> |

| − | *Update curve generation to generate curves with more points | + | *<s>Update curve generation to generate curves with more points</s> |

*<s>Test OpenMP version of DirectSynth</s> | *<s>Test OpenMP version of DirectSynth</s> | ||

| − | *Migrate Study | + | *<s>Migrate Study 18.8 from moment to focal.</s> |

| + | *<s>Delete Study 18.8 off of moment.</s> | ||

| + | *<s>Optimize PeakAmplitudes table on moment.</s> | ||

| + | *<s>Move Study 13.4 output files from CARC to OLCF HPSS.</s> | ||

| + | *<s>Move Study 14.2 output files from CARC to OLCF HPSS.</s> | ||

| + | *<s>Switch to using OMP version of DS code by default.</s> | ||

| + | *<s>Review wiki content.</s> | ||

| + | *<s>Switch to using Python 3.9 on shock.</s> | ||

| + | *<s>Confirm PGA and PGV are being calculated and inserted into the DB.</s> | ||

| + | *<s>Drop rupture variations and seeds tables for RV ID 9, in case we need to put back together for SQLite running.</s> | ||

| + | *<s>Change to Pegasus 5.0.3 on shock-carc.</s> | ||

| + | *<s>Tag code.</s> | ||

== Presentations, Posters, and Papers == | == Presentations, Posters, and Papers == | ||

| + | |||

| + | Science Readiness Review: [[File:Study_22_12_Science_Readiness_Review.pdf|PDF]], [[:File:Study_22_12_Science_Readiness_Review.odp|ODP]] | ||

| + | |||

| + | Technical Readiness Review: [[File:Study_22_12_Technical_Readiness_Review.pdf|PDF]], [[:File:Study_22_12_Technical_Readiness_Review.odp|ODP]] | ||

| + | |||

| + | == References == | ||

| + | |||

| + | Details about the Graves & Pitarka stochastic approach used in the SCEC Broadband Platform, v22.4, the same as is used in this study: [https://doi.org/10.1785/0120210138] | ||

| + | |||

| + | The merged taper was based off work by Hu, Olsen, and Day showing that a 700-1000m taper improves the fit between synthetics and data for La Habra: [https://doi.org/10.1093/gji/ggac175] | ||

Latest revision as of 22:05, 20 August 2024

CyberShake Study 22.12 is a study in Southern California which includes deterministic low-frequency (0-1 Hz) and stochastic high-frequency (1-50 Hz) simulations. We used the Graves & Pitarka (2022) rupture generator and the high frequency modules from the SCEC Broadband Platform v22.4.

Contents

- 1 Status

- 2 Data Products

- 3 Science Goals

- 4 Technical Goals

- 5 Sites

- 6 Velocity Model

- 7 Rupture Generator

- 8 High-frequency codes

- 9 Spectral Content around 1 Hz

- 10 Seismogram Length

- 11 Validation

- 12 Updates and Enhancements

- 13 Output Data Products

- 14 Computational and Data Estimates

- 15 Lessons Learned

- 16 Stress Test

- 17 Events During Study

- 18 Performance Metrics

- 19 Site Response Analysis

- 20 Production Checklist

- 21 Presentations, Posters, and Papers

- 22 References

Status

This study is complete. We are currently performing analysis of the results.

Data Products

Low-frequency

Change from Study 15.4

Here's a table with the overall averaged differences between Study 15.4 and 22.12 at 2% in 50 years.

Negative difference and ratios less than 1 indicate smaller Study 22.12 results.

| Period | Difference (22.12-15.4) | Ratio (22.12/15.4) |

|---|---|---|

| 2 sec | 0.0445 g | 1.162 |

| 3 sec | -0.00883 g | 0.988 |

| 5 sec | -0.0200 g | 0.0901 |

| 10 sec | -0.00846 g | 0.882 |

Broadband

Science Goals

The science goals for this study are:

- Calculate a regional CyberShake model for southern California using an updated rupture generator.

- Calculate an updated broadband CyberShake model.

- Sample variability in rupture velocity as part of the rupture generator.

- Increase hypocentral density, from 4.5 km to 4 km.

Technical Goals

The technical goals for this study are:

- Use an optimized OpenMP version of the post-processing code.

- Bundle the SGT and post-processing jobs to run on large Condor glide-ins, taking advantage of queue policies favoring large jobs.

- Perform the largest CyberShake study to date.

Sites

We will use the standard 335 southern California sites (same as Study 21.12). The order of execution will be:

- Stress test sites (site list here)

- Sites of interest

- 20 km grid

- 10 km grid

- Selected 5 km grid sites

Velocity Model

Summary: We are using a velocity model which is a combination of CVM-S4.26.M01 and the Ely-Jordan GTL:

- At each mesh point in the top 700m, we calculate the Vs value from CVM-S4.26.M01, and from the Ely-Jordan GTL using a taper down to 700m.

- We select the approach which produces the smallest Vs value, and we use the Vp, Vs, and rho from that approach.

- We also preserve the Vp/Vs ratio, so if the Vs minimum of 500 m/s is applied, Vp will be scaled so the Vp/Vs ratio is the same.

We are planning to use CVM-S4.26 with a GTL applied, and the CVM-S4 1D background model outside of the region boundaries.

We are investigating applying the Ely-Jordan GTL down to 700 m instead of the default of 350m. We extracted profiles for a series of southern California CyberShake sites, with no GTL, a GTL applied down to 350m, and a GTL applied down to 700m.

Velocity Profiles

Corners of the Simulation region are:

-119.38,34.13 -118.75,35.08 -116.85,34.19 -117.5,33.25

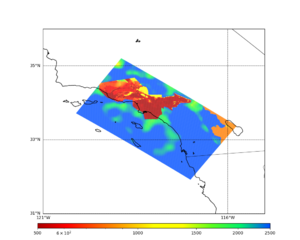

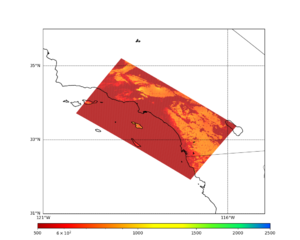

Sites (the CVM-S4.26 basins are outlined in red):

These are for sites outside of the CVM-S4.26 basins:

| Site | No GTL | 700m GTL | Smaller value, extracted from mesh | 3 sec comparison curve Study 15.4 w/no taper and v3.3.1, Study 22.12 w/taper and v5.5.2 |

|---|---|---|---|---|

| LAPD | ||||

| s764 | ||||

| s568 | ||||

| s035 | ||||

| PERR | ||||

| MRVY | ||||

| s211 |

These are for sites inside of the CVM-S4.26 basins:

| Site | No GTL | 700m GTL | Smaller value | 3 sec comparison curve Study 15.4 w/no taper and v3.3.1, Study 22.12 w/taper and v5.5.2 |

|---|---|---|---|---|

| s117 | ||||

| USC | ||||

| WNGC | ||||

| PEDL | ||||

| SBSM | ||||

| SVD |

Merged Taper Algorithm

Our algorithm for generating the velocity model, which we are calling the 'merged taper', is as follows:

- Set the surface mesh point to a depth of 25m.

- Query the CVM-S4.26.M01 model ('cvmsi' string in UCVM) for each grid point.

- Calculate the Ely taper at that point using 700m as the transition depth. Note that the Ely taper uses the Thompson Vs30 values in constraining the taper near the surface.

- Compare the values before and after the taper modification; at each grid point down to the transition depth, use the value from the method with the lower Vs value.

- Check values for Vp/Vs ratio, minimum Vs, Inf/NaNs, etc.
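The selection step of the merged taper can be sketched in a few lines of Python. This is an illustration of the rule described above, not the CyberShake mesh generation code: the function name and data layout are hypothetical, and keeping the crustal values when the two Vs values tie is an assumption.

```python
def merge_taper(crustal, tapered, depths_m, transition_depth_m=700.0):
    """Pick, per grid point, the property set with the lower Vs.

    `crustal` and `tapered` are lists of (vp, vs, rho) tuples, one per
    entry in `depths_m`. Below the transition depth the crustal model
    is used unchanged.
    """
    merged = []
    for depth, c, t in zip(depths_m, crustal, tapered):
        if depth <= transition_depth_m and t[1] < c[1]:
            merged.append(t)  # taper has the lower Vs: take its Vp, Vs, and rho
        else:
            merged.append(c)  # tie or deeper point: keep the crustal values
    return merged


# Made-up values for three points at 25 m, 300 m, and 800 m depth.
crustal = [(1800.0, 900.0, 2000.0), (2000.0, 600.0, 2100.0), (3000.0, 1500.0, 2300.0)]
tapered = [(1700.0, 500.0, 1900.0), (2100.0, 800.0, 2150.0), (2500.0, 1000.0, 2200.0)]
merged = merge_taper(crustal, tapered, [25.0, 300.0, 800.0])
```

All three properties are taken from the same source at each point, so Vp and rho stay consistent with the selected Vs.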

Value constraints

We impose the following constraints (in this order) on velocity mesh values. The ones in bold are new for Study 22.12.

- Vs >= 500 m/s. If lower, calculate the Vp/Vs ratio. Set Vs=500, and Vp=Vs*(Vp/Vs ratio) [which in this case is Vp=500*(Vp/Vs ratio)].

- Vp >= 1700 m/s. If lower, Vp is set to 1700.

- rho >= 1700 kg/m3. If lower, rho is set to 1700.

- Vp/Vs >= 1.45. If not, Vs is set to Vp/1.45.
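Because the constraints are applied in order, a later step can act on values already changed by an earlier one. A minimal Python sketch of the sequence follows; the function name is illustrative and this is not the mesh generation code itself.

```python
def apply_constraints(vp, vs, rho):
    """Apply the mesh value constraints in the order listed above."""
    if vs < 500.0:
        ratio = vp / vs   # preserve the original Vp/Vs ratio
        vs = 500.0
        vp = vs * ratio
    if vp < 1700.0:
        vp = 1700.0
    if rho < 1700.0:
        rho = 1700.0
    if vp / vs < 1.45:
        vs = vp / 1.45
    return vp, vs, rho


# Low-velocity point: Vs is raised first, then Vp and rho hit their floors.
soft = apply_constraints(800.0, 400.0, 1500.0)
# Point with a low Vp/Vs ratio: only Vs changes.
stiff = apply_constraints(2000.0, 1600.0, 2200.0)
```

In the first example the scaled Vp (1000 m/s) is still below the Vp floor, so the final Vp/Vs ratio differs from the original one.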

Cross-sections

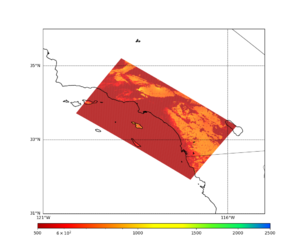

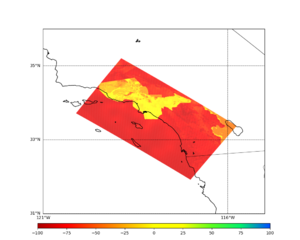

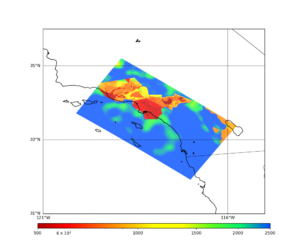

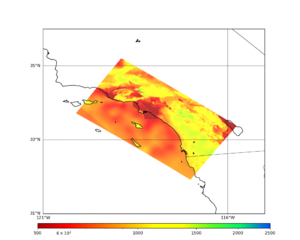

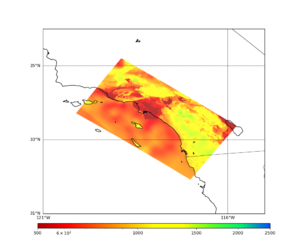

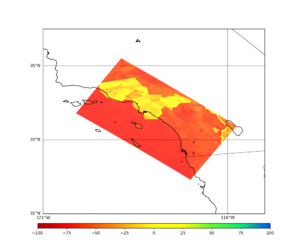

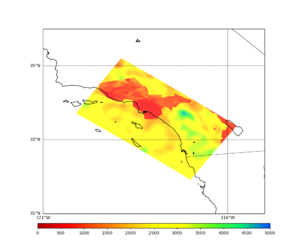

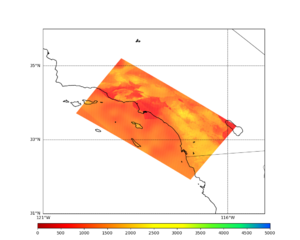

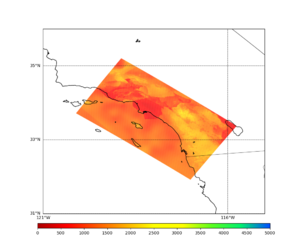

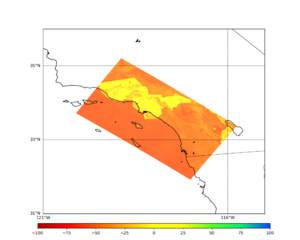

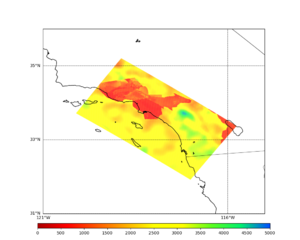

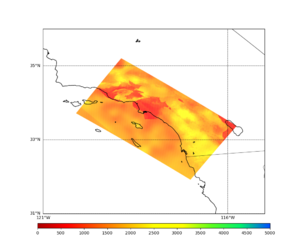

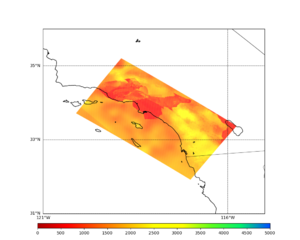

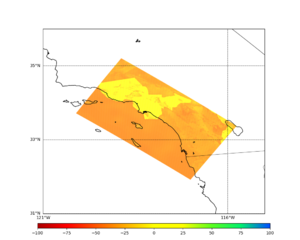

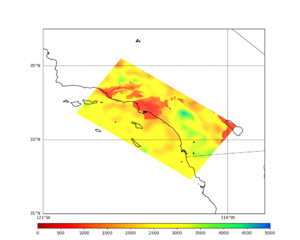

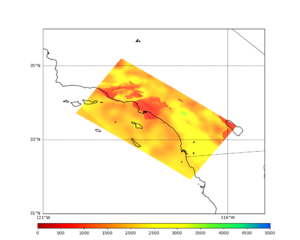

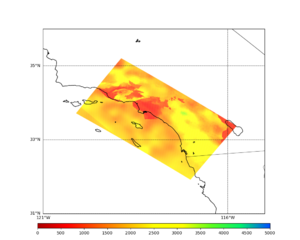

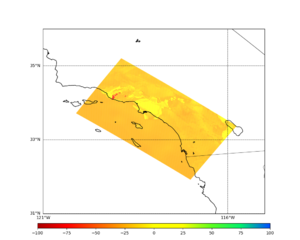

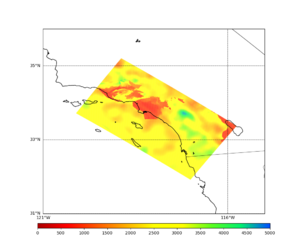

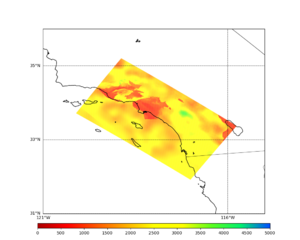

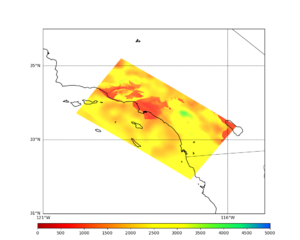

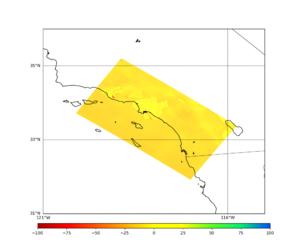

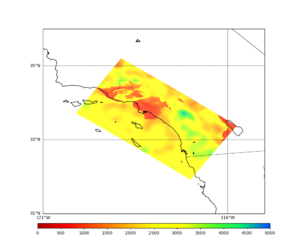

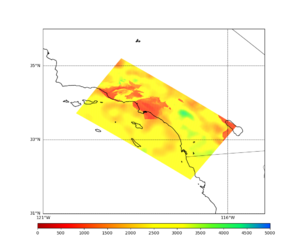

Below are cross-sections of the TEST site velocity model at depths down to 600m for CVM-S4.26.M01, the taper to 700m, the lesser of the two values, and the % difference.

Note that the top two slices use a different color scale.

| Depth | CVM-S4.26.M01 | Ely taper to 700m | Selecting smaller value | % difference, smaller value vs S4.26.M01 |

|---|---|---|---|---|

| 0m (queried at 25m depth) | ||||

| 100m | ||||

| 200m | ||||

| 300m | ||||

| 400m | ||||

| 500m | ||||

| 600m |

Implementation details

To support the Ely taper approach where the smaller value is selected, the following algorithmic changes were made to the CyberShake mesh generation code. Since the UCVM C API doesn't support removing models, we couldn't just add the 'elygtl' model - it would then be included in every query, and we need to run queries with and without it to make the comparison. We also must include the Ely interpolator, since all that querying the elygtl model does is populate the gtl part of the properties with the Vs30 value; it's the interpolator which takes this and the crustal model info and generates the taper.

- The decomposition is done by either X-parallel or Y-parallel stripes, which have a constant depth. Check the depth to see if it is shallower than the transition depth.

- If so, initialize the elygtl model using ucvm_elygtl_model_init(), if uninitialized. Set the id to UCVM_MAX_MODELS-1. There's no way to get model id info from UCVM, so we use UCVM_MAX_MODELS-1, which is 29: we are very unlikely to load 29 models, so an id conflict is unlikely.

- Change the depth value of the points to query to the transition depth. This is because we need to know the crustal model Vs value at the transition depth, so that the Ely interpolator can match it.

- Query the crustal model again, with the modified depths.

- Modify the 'domain' parameter in the properties data structure to UCVM_DOMAIN_INTERP, to indicate that we will be using an interpolator.

- Change the depth value of the points to query back to the correct depth, so that the interpolator can work correctly on them.

- Query the elygtl using ucvm_elygtl_model_query() and the correct depth.

- Call the interpolator for each point independently, using the correct depth.

- For each point, choose to use either the original crustal data or the Ely taper data, depending on which has the lower Vs.

In the future we plan for this approach to be implemented in UCVM, and we can simplify the CyberShake query code.

Impact on hazard

Below are hazard curves calculated with the old approach, and with the implementation described above, for WNGC.

These are scatterplots for the above WNGC curves.

Velocity Model Info

For Study 22.12, we track and use a number of velocity model-related parameters, in the database and as input to the broadband codes. Below we track where values come from and how they are used.

Broadband codes

- The code that is used to calculate high-frequency seismograms takes three parameters: vs30, vref, and vpga. vref and vpga are 500 m/s. Vs30 comes from the 'Target_Vs30' parameter in the database, which itself comes from the Thompson et al. (2020) model.

- The low-frequency site response code also has three parameters: vs30, vref, and vpga. Vs30 also comes from the 'Target_Vs30' parameter in the database, which itself comes from the Thompson et al. (2020) model. Vref is calculated as ('Model_Vs30' database parameter) * (VsD500)/(Vs500). Vpga is 500.0.

- Model_Vs30 is calculated using a slowness average of the top 30 m from UCVM.

- VsD500 is the slowness average of the grid points at the surface, 100m, 200m, 300m, 400m, and 500m depth. Note that for this study, the surface grid point is populated by querying UCVM at a depth of 25m.

- Vs500 is the slowness average of the top 500m from UCVM.
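As a rough illustration of the quantities above, a slowness average is the reciprocal of the mean slowness (1/Vs), and the effective vref combines three such values. The sketch below assumes equally weighted samples, which may differ from the weighting in the production code; the function names and sample values are hypothetical.

```python
def slowness_average(velocities):
    """Average velocities by averaging their slownesses (1/v) and inverting.

    Samples are weighted equally here, which is an assumption of this sketch.
    """
    if not velocities:
        raise ValueError("need at least one velocity sample")
    return len(velocities) / sum(1.0 / v for v in velocities)


def effective_vref(model_vs30, vsd500, vs500):
    """Vref for low-frequency site response: Model_Vs30 * VsD500 / Vs500."""
    return model_vs30 * vsd500 / vs500


# Illustrative numbers only, not values from any CyberShake site.
two_layer = slowness_average([500.0, 1000.0])
vref = effective_vref(400.0, 600.0, 500.0)
print(round(two_layer, 1), vref)
```

Because slow layers dominate a slowness average, the two-sample result sits closer to 500 m/s than the arithmetic mean of 750 m/s would.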

Database values

The following values related to velocity structure are tracked in the database:

- Model_Vs30: This Vs30 value is calculated by taking a slowness average at 1-meter increments from [0.5, 29.5] and querying UCVM, with the merged taper applied. This value is populated in low-frequency runs, and copied over to the corresponding broadband run.

- Mesh_Vsitop_ID: This identifies what algorithm was used to populate the surface grid point. For Study 22.12, we query the model at a depth of (grid spacing/4), or 25m.

- Mesh_Vsitop: The value of the surface grid point at the site, populated using the algorithm specified in Mesh_Vsitop_ID.

- Minimum_Vs: Minimum Vs value used in generating the mesh. For Study 22.12, this is 500 m/s.

- Wills_Vs30: Wills Vs30 value. Currently this parameter is unpopulated.

- Z1_0: Z1.0 value. This is calculated by querying UCVM with the merged taper at 10m increments. If there is more than one crossing of 1000 m/s, we select the second crossing. If there is only 1 crossing, we use that.

- Z2_5: Z2.5 value. This is calculated by querying UCVM with the merged taper at 10m increments. If there is more than one crossing of 2500 m/s, we select the second crossing. If there is only 1 crossing, we use that.

- Vref_eff_ID: This identifies the algorithm used to calculate the effective vref value used in the low-frequency site response. For Study 22.12, it is Model_Vs30 * VsD500/Vs500.

- Vref_eff: This is the value obtained when following the algorithm identified in Vref_eff_ID.

- Vs30_Source: Source of the Target_Vs30 value. For Study 22.12, this is Thompson et al. (2020).

- Target_Vs30: This is the value used for Vs30 when performing site response. It's populated using the source in Vs30_Source.
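The crossing rule described for Z1_0 and Z2_5 can be sketched as follows. This is a minimal example, not the CyberShake implementation: `vs_at_depth` stands in for a UCVM query with the merged taper, the maximum search depth is a made-up default, and a "crossing" is assumed to be a sample depth where Vs is at or above the threshold while the next shallower sample was below it.

```python
def find_crossings(vs_at_depth, threshold, step=10.0, max_depth=50000.0):
    """Return depths (m) where Vs goes from below to at/above the threshold.

    vs_at_depth is a hypothetical callable standing in for a UCVM query.
    """
    crossings = []
    below = True  # treat everything above the surface as below the threshold
    for i in range(int(max_depth / step) + 1):
        depth = i * step
        now_below = vs_at_depth(depth) < threshold
        if below and not now_below:
            crossings.append(depth)
        below = now_below
    return crossings


def select_z(crossings):
    """Pick the second crossing if there are several, else the only one."""
    if not crossings:
        return None
    return crossings[1] if len(crossings) > 1 else crossings[0]


def example_profile(depth):
    """Made-up Vs profile (m/s) with a velocity inversion below 400 m."""
    if depth < 200:
        return 600.0
    if depth < 400:
        return 1200.0
    if depth < 700:
        return 800.0
    return 1500.0


z1_0 = select_z(find_crossings(example_profile, 1000.0, max_depth=2000.0))
print(z1_0)
```

With this profile Vs crosses 1000 m/s at 200 m and again at 700 m, so the second crossing is selected; a profile with a single crossing would return that crossing instead.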

Rupture Generator

Summary: We are using the Graves & Pitarka generator v5.5.2, the same version as used in the BBP v22.4, for Study 22.12.

Initial Findings

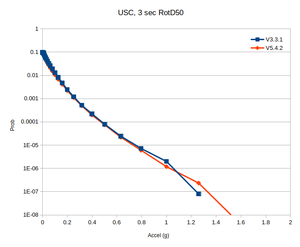

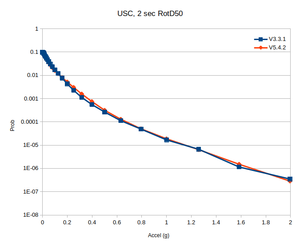

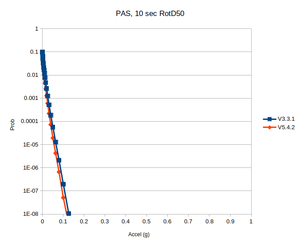

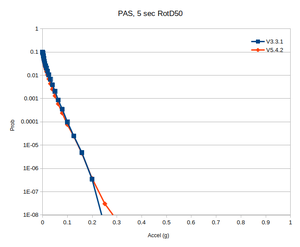

In determining what rupture generator to use for this study, we performed tests with WNGC, USC, PAS, and STNI, and compared hazard curves generated with v5.4.2 to those from Study 15.4:

| Site | 10 sec | 5 sec | 3 sec | 2 sec |
|---|---|---|---|---|
| WNGC | | | | |
| USC | | | | |
| PAS | | | | |
| STNI | | | | |

Digging in further, it appears the elevated hazard curves for WNGC at 2 and 3 seconds are predominately due to large-magnitude southern San Andreas events producing larger ground motions.

USC, PAS, and STNI also all see larger ground motions at 2-3 seconds from these same events, but the effect isn't as strong, so it only shows up in the tails of the hazard curves.

We homed in on source 68, rupture 7, a M8.45 on the southern San Andreas which produced the largest WNGC ground motions at 3 seconds, up to 5.1 g. We calculated spectral plots for v3.3.1 and v5.4.2 for all 4 sites:

| Site | v3.3.1 | v5.4.2 |
|---|---|---|
| WNGC | | |
| USC | | |
| PAS | | |
| STNI | | |

v5.5.2

We decided to try v5.5.2 of the rupture generator, which typically has less spectral content at short periods:

| Site | v3.3.1 | v5.4.2 | v5.5.2 |
|---|---|---|---|
| WNGC | | | |

Hazard Curves

Comparison hazard curves for the three rupture generators, using no taper

| Site | 10 sec | 5 sec | 3 sec | 2 sec |
|---|---|---|---|---|
| WNGC | | | | |
| USC | | | | |

Comparison curves for the three rupture generators, using the smaller value in the velocity model.

| Site | 10 sec | 5 sec | 3 sec | 2 sec |
|---|---|---|---|---|
| STNI | | | | |
| PAS | | | | |

Scatter Plots

Scatter plots, comparing results with v5.5.2 to results from Study 15.12 by plotting the mean ground motion for each rupture. The colors are magnitude bins (blue: <M6.5; green: M6.5-7; yellow: M7-7.5; orange: M7.5-8; red: M8+).

| Site | 10 sec | 5 sec | 3 sec | 2 sec |
|---|---|---|---|---|
| WNGC | | | | |
| PAS | | | | |
| USC | | | | |
| STNI | | | | |

We also modified the risetime_coef to 2.3, from the previous default of 1.6.

High-frequency codes

For this study, we will use the Graves & Pitarka high frequency module (hb_high) from the Broadband Platform v22.4, hb_high_v6.0.5. We will use the following parameters. Parameters in bold have been changed for this study.

| Parameter | Value |

|---|---|

| stress_average | 50 |

| rayset | 2,1,2 |

| siteamp | 1 |

| nbu | 4 (not used) |

| ifft | 0 (not used) |

| flol | 0.02 (not used) |

| fhil | 19.9 (not used) |

| irand | Seed used for generating SRF |

| tlen | Seismogram length, in sec |

| dt | 0.01 |

| fmax | 10 (not used) |

| kappa | 0.04 |

| qfexp | 0.6 |

| mean_rvfac | 0.775 |

| range_rvfac | 0.1 |

| rvfac | Calculated using BBP hfsims_cfg.py code |

| shal_rvfac | 0.6 |

| deep_rvfac | 0.6 |

| czero | 2 |

| c_alpha | -99 |

| sm | -1 |

| vr | -1 |

| vsmoho | 999.9 |

| nlskip | -99 |

| vpsig | 0 |

| vshsig | 0 |

| rhosig | 0 |

| qssig | 0 |

| icflag | 1 |

| velname | -1 |

| fa_sig1 | 0 |

| fa_sig2 | 0 |

| rvsig1 | 0.1 |

| ipdur_model | 11 |

| ispar_adjust | 1 |

| targ_mag | -1 |

| fault_area | -1 |

| default_c0 | 57 |

| default_c1 | 34 |

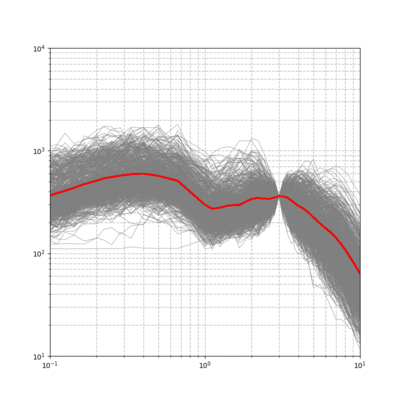

Spectral Content around 1 Hz

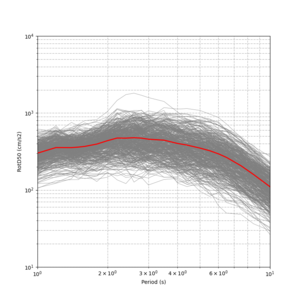

We investigated the spectral content of the Broadband CyberShake results in the 0.5-3 second range, to look for any discontinuities.

The plot below is from WNGC, Study 15.12 (run ID 4293).

Below is a plot of the hypocenters from the 706 rupture variations which meet the target.

These events have a different distribution than the rupture variations as a whole.

| Fault | Percent of target RVs | Percent of all RVs |

|---|---|---|

| San Andreas | 60 | 44 |

| Elsinore | 21 | 9 |

| San Jacinto | 13 | 8 |

| Other | 6 | 39 |

Additionally, 88% of the selected events have a magnitude greater than the average for their source. 4% are average, and 8% are lower.

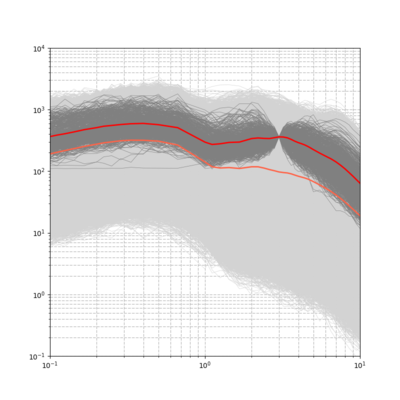

Below is a spectral plot which includes all rupture variations and the overall mean (in orange).

Additional Sites

We created spectral plots for 7 additional sites (STNI, SBSM, PAS, LGU, LBP, ALP, PLS), located here:

| STNI | SBSM | PAS | LGU | LBP | ALP | PLS |

|---|---|---|---|---|---|---|

v5.5.2

We created spectral plots using results produced with rupture generator v5.5.2. These results have a much less noticeable slope around 1 sec.

| WNGC | PAS | USC | STNI |

|---|---|---|---|

Seismogram Length

Originally we proposed a seismogram length of 500 sec. We examined seismograms for the most distant event-station pairs in this study and concluded that most stop having any meaningful signal by around 325 sec. Thus, we are reducing the seismogram length to 400 sec, which leaves some safety margin.

Validation

The extensive validation efforts performed in preparation for this study are documented in Broadband_CyberShake_Validation.

Updates and Enhancements

- New velocity model created, including Ely taper to 700m and tweaked rules for applying minimum values.

- Updated to rupture generator v5.5.2.

- CyberShake broadband codes are now linked directly against BBP codes.

- Added -ffast-math compiler option to hb_high code for modest speedup.

- Migrated to using OpenMP version of DirectSynth code.

- Optimized rupture generator v5.5.2.

- Verification was performed against historic events, including Northridge and Landers.

Lessons Learned from Previous Studies

- Create new velocity model ID for composite model, capturing metadata. We created a new velocity model ID to capture the model used for Study 22.12.

- Clear disk space before study begins to avoid disk contention. On moment, we migrated old studies to focal and optimized. At CARC, we migrated old studies to OLCF HPSS.

- In addition to disk space, check local inode usage. CARC and shock checked; plenty of inodes are available.

Output Data Products

File-based data products

We plan to produce the following data products, which will be stored at CARC:

Deterministic

- Seismograms: 2-component seismograms, 8000 timesteps (400 sec) each. Study 18.8 used 10,000 timesteps, but without the southern San Andreas (sSAF) events, 8000 should be sufficient.

- PSA: X and Y spectral acceleration at 44 periods (10, 9.5, 9, 8.5, 8, 7.5, 7, 6.5, 6, 5.5, 5, 4.8, 4.6, 4.4, 4.2, 4, 3.8, 3.6, 3.4, 3.2, 3, 2.8, 2.6, 2.4, 2.2, 2, 1.66667, 1.42857, 1.25, 1.11111, 1, .66667, .5, .4, .33333, .285714, .25, .22222, .2, .16667, .142857, .125, .11111, .1 sec)

- RotD: PGV, and RotD50, the RotD50 azimuth, and RotD100 at 25 periods (20, 15, 12, 10, 8.5, 7.5, 6.5, 6, 5.5, 5, 4.4, 4, 3.5, 3, 2.8, 2.6, 2.4, 2.2, 2, 1.7, 1.5, 1.3, 1.2, 1.1, 1 sec)

- Durations: for X and Y components, energy integral, Arias intensity, cumulative absolute velocity (CAV), and for both velocity and acceleration, 5-75%, 5-95%, and 20-80%.

Broadband

- Seismograms: 2-component seismograms, 40000 timesteps (400 sec) each.

- PSA: X and Y spectral acceleration at 44 periods (10, 9.5, 9, 8.5, 8, 7.5, 7, 6.5, 6, 5.5, 5, 4.8, 4.6, 4.4, 4.2, 4, 3.8, 3.6, 3.4, 3.2, 3, 2.8, 2.6, 2.4, 2.2, 2, 1.66667, 1.42857, 1.25, 1.11111, 1, .66667, .5, .4, .33333, .285714, .25, .22222, .2, .16667, .142857, .125, .11111, .1 sec)

- RotD: PGA, PGV, and RotD50, the RotD50 azimuth, and RotD100 at 66 periods (20, 15, 12, 10, 8.5, 7.5, 6.5, 6, 5.5, 5, 4.4, 4, 3.5, 3, 2.8, 2.6, 2.4, 2.2, 2, 1.7, 1.5, 1.3, 1.2, 1.1, 1, 0.85, 0.75, 0.65, 0.6, 0.55, 0.5, 0.45, 0.4, 0.35, 0.3, 0.28, 0.26, 0.24, 0.22, 0.2, 0.17, 0.15, 0.13, 0.12, 0.11, 0.1, 0.085, 0.075, 0.065, 0.06, 0.055, 0.05, 0.045, 0.04, 0.035, 0.032, 0.029, 0.025, 0.022, 0.02, 0.017, 0.015, 0.013, 0.012, 0.011, 0.01 sec)

- Durations: for X and Y components, energy integral, Arias intensity, cumulative absolute velocity (CAV), and for both velocity and acceleration, 5-75%, 5-95%, and 20-80%.
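The timestep counts quoted for the seismograms follow from the 400 sec record length and the sampling interval. The small sketch below assumes dt = 0.01 sec for broadband (the value in the high-frequency parameter table) and dt = 0.05 sec for deterministic; the latter is inferred from 8000 timesteps over 400 sec rather than stated directly.

```python
def timesteps(length_sec, dt_sec):
    """Number of samples in a seismogram of the given length and spacing."""
    return round(length_sec / dt_sec)


SEISMOGRAM_LENGTH_SEC = 400.0
deterministic_steps = timesteps(SEISMOGRAM_LENGTH_SEC, 0.05)  # inferred dt
broadband_steps = timesteps(SEISMOGRAM_LENGTH_SEC, 0.01)      # dt from HF table
print(deterministic_steps, broadband_steps)
```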

Database data products

We plan to store the following data products in the database on moment:

Deterministic

- RotD50 and RotD100 for 6 periods (10, 7.5, 5, 4, 3, 2 sec)

- Duration: acceleration 5-75% and 5-95%, for both X and Y

Broadband

- RotD50 and RotD100 for PGA, PGV, and 19 periods (10, 7.5, 5, 4, 3, 2, 1, 0.75, 0.5, 0.4, 0.3, 0.2, 0.1, 0.075, 0.05, 0.04, 0.03, 0.02, 0.01 sec)

- Duration: acceleration 5-75% and 5-95%, for both X and Y

Computational and Data Estimates

Computational Estimates

We based these estimates on scaling from the average of sites USC, STNI, PAS, and WNGC, which together are within 1% of the average number of rupture variations per site.

| | UCVM runtime | UCVM nodes | SGT runtime (both components) | SGT nodes | Other SGT workflow jobs | Summit Total |
|---|---|---|---|---|---|---|
| 4 site average | 436 sec | 50 | 3776 sec | 67 | 8700 node-sec | 78.8 node-hrs |

78.8 node-hrs x 335 sites + 10% overrun margin gives us an estimate of 29.0k node-hours for SGT calculation.

| | DirectSynth runtime | DirectSynth nodes | Summit Total |
|---|---|---|---|
| 4 site average | 31000 sec | 92 | 792 node-hrs |

792 node-hours x 335 sites + 10% overrun margin gives an estimate of 292k node-hours for post-processing.

| | PMC runtime | PMC nodes | Summit Total |
|---|---|---|---|
| 4 site average | 31500 sec | 92 | 805 node-hrs |

805 node-hrs x 335 sites + 10% overrun margin gives an estimate of 297k node-hours for broadband calculations.
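The three estimates above all use the same arithmetic: runtime times node count gives node-seconds, which are converted to node-hours, scaled to 335 sites, and padded by 10%. The Python sketch below reproduces that arithmetic from the table values; the function names are hypothetical, and because the table inputs are rounded the results agree with the quoted figures only to within rounding.

```python
SITES = 335            # number of sites in the study
OVERRUN_MARGIN = 0.10  # 10% overrun margin


def node_hours(runtime_sec, nodes):
    """Convert a job's wall-clock runtime and node count to node-hours."""
    return runtime_sec * nodes / 3600.0


def study_total(per_site_node_hours):
    """Scale a per-site cost to the full study, including the margin."""
    return per_site_node_hours * SITES * (1.0 + OVERRUN_MARGIN)


# SGT stage: UCVM job + SGT job + other workflow jobs (8700 node-sec).
sgt_per_site = node_hours(436, 50) + node_hours(3776, 67) + 8700 / 3600.0
ds_per_site = node_hours(31000, 92)   # DirectSynth post-processing
pmc_per_site = node_hours(31500, 92)  # broadband (PMC) stage

for label, per_site in [
    ("SGT", sgt_per_site),
    ("post-processing", ds_per_site),
    ("broadband", pmc_per_site),
]:
    print(f"{label}: about {study_total(per_site) / 1000:.0f}k node-hours")

# Combined per-site cost across the three stages.
per_site_total = sgt_per_site + ds_per_site + pmc_per_site
```

Summing the three per-site values gives the combined per-site cost that a production run can be compared against.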

Data Estimates

Summit

On average, each site contains 626,000 rupture variations.

These estimates assume a 400 sec seismogram.

| | Velocity mesh | SGTs size | Temp data | Output data |
|---|---|---|---|---|
| 4 site average (GB) | 177 | 1533 | 1533 | 225 |
| Total for 335 sites (TB) | 57.8 | 501.4 | 501.4 | 73.6 |

CARC

We estimate 225.6 GB/site x 335 sites = 73.8 TB in output data, which will be transferred back to CARC. After transferring Study 13.4 and 14.2, we have 66 TB free at CARC, so additional data will need to be moved.
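The output-data estimate can be checked with a few lines of Python. The sketch assumes 1 TB = 1024 GB, since that conversion reproduces the totals quoted here; the function name is hypothetical.

```python
SITES = 335
GB_PER_TB = 1024  # assumption: binary conversion, which matches the quoted totals


def study_total_tb(per_site_gb):
    """Scale a per-site data volume in GB to a study total in TB."""
    return per_site_gb * SITES / GB_PER_TB


output_tb = study_total_tb(225.6)
free_tb = 66.0  # free space at CARC after moving Study 13.4 and 14.2
shortfall_tb = max(0.0, output_tb - free_tb)
print(f"output: {output_tb:.1f} TB, shortfall at CARC: {shortfall_tb:.1f} TB")
```

The positive shortfall is why additional data needs to be moved before the study's output is transferred back.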

shock-carc

The study should use approximately 737 GB in workflow log space on /home/shock. This drive has approximately 1.5 TB free.

moment database

The PeakAmplitudes table uses approximately 99 bytes per entry.

99 bytes/entry * 34 entries/event (11 det + 25 stoch) * 622,636 events/site * 335 sites = 654 GB. The drive on moment which holds the MySQL database has 771 GB free, so we plan to migrate Study 18.8 off of moment to free up additional room.
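The database estimate is a straightforward product, sketched below. The sketch uses the 34 entries per event from the calculation above and assumes the result is expressed with 1 GB = 1024^3 bytes, which is the conversion that yields 654 GB; the names are hypothetical.

```python
BYTES_PER_ENTRY = 99       # approximate PeakAmplitudes row size
ENTRIES_PER_EVENT = 34     # entries per event used in the estimate
EVENTS_PER_SITE = 622_636
SITES = 335
BYTES_PER_GB = 1024 ** 3   # assumption: binary gigabytes


def table_size_gb():
    """Estimated PeakAmplitudes size for the whole study, in GB."""
    total_bytes = BYTES_PER_ENTRY * ENTRIES_PER_EVENT * EVENTS_PER_SITE * SITES
    return total_bytes / BYTES_PER_GB


size_gb = table_size_gb()
headroom_gb = 771 - size_gb  # free space left on the moment database drive
print(f"estimated size: {size_gb:.0f} GB, headroom: {headroom_gb:.0f} GB")
```

The estimate leaves little headroom and does not account for indices, which is consistent with the decision to free up more space beforehand.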

Lessons Learned

- Make sure database space estimates include both RotD50 and RotD100 values, and also include the space required for indices.

- Add sanity checks on all intensity measures inserted, not just the geometric means (which aren't being inserted anymore).

- Test all types of bundled glidein jobs before the production run. (The AWP jobs ran fine, but we had issues with the DirectSynth jobs).

- Make sure all appropriate velocity fields in the DB are being populated.

- Come up with a solution to the out/err file issue with the BB runs. With file-per-worker, PMC gets a seg fault when the final output is combined. With file-per-task, we end up with 160k files and the filesystem becomes angry.

Stress Test

As of 12/17/22, user callag has run 13627 jobs and consumed 71936.9 node-hours on Summit.

/home/shock on shock-carc has 1544778288 blocks free.

The stress test started at 15:47:42 PST on 12/17/22.

The stress test completed at 13:32:20 PST on 1/7/23, for an elapsed time of 501.7 hours.

As of 1/10/23, user callag has run 14204 jobs and consumed 115430.8 node-hours on Summit.

This works out to 2174.7 node-hours per site, 29.7% more than the initial estimate of 1675.8.
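The stress test figures can be reproduced from the timestamps and usage totals above. The following Python sketch uses only numbers quoted in this section; the variable names are made up for the example.

```python
from datetime import datetime

# Start and end of the stress test (both PST, so no timezone handling needed).
start = datetime(2022, 12, 17, 15, 47, 42)
end = datetime(2023, 1, 7, 13, 32, 20)
elapsed_hours = (end - start).total_seconds() / 3600.0

# Summit usage for user callag before and after the stress test.
node_hours_before = 71936.9
node_hours_after = 115430.8
consumed = node_hours_after - node_hours_before

# Per-site cost from the stress test versus the initial per-site estimate.
actual_per_site = 2174.7
estimated_per_site = 1675.8
overrun_pct = (actual_per_site / estimated_per_site - 1.0) * 100.0

print(f"elapsed: {elapsed_hours:.1f} hours")
print(f"consumed: {consumed:.1f} node-hours")
print(f"overrun: roughly {overrun_pct:.0f}%")
```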

Issues Discovered

- The cronjob submission script on shock-carc was assigning Summit as the SGT and PP host, meaning that summit-pilot wasn't considered in planning.

We removed assignment of SGT and PP host at workflow creation time.

- Pegasus was trying to transfer some files from CARC to shock-carc /tmp. Since CARC is accessed via the GO protocol, but /tmp isn't served through GO, there doesn't seem to be a way to do this.