Difference between revisions of "CyberShake Code Base"

| (107 intermediate revisions by the same user not shown) | |||

| Line 11: | Line 11: | ||

== Code Installation == | == Code Installation == | ||

| − | Historically, we have selected a root directory for CyberShake, then created the subdirectories 'software' for all the code, 'ruptures' for the rupture files, and 'utils' for workflow tools. Each code listed below, along with the configuration file, should be checked out into the 'software' subdirectory. | + | Historically, we have selected a root directory for CyberShake, then created the subdirectories 'software' for all the code, 'ruptures' for the rupture files, 'logs' for log files, and 'utils' for workflow tools. This is typically set up in unpurged storage space, so once installed purging isn't a worry. Each code listed below, along with the configuration file, should be checked out into the 'software' subdirectory. |

| + | |||

| + | In terms of compilers, you should use the GNU compilers unless specifically directed otherwise. | ||

| + | |||

| + | Most of the codes below have a main directory, which holds the wrappers. Inside it are a bin directory, with binaries, and a src directory, with code requiring compilation. | ||

| + | |||

| + | If you are looking for compilation instructions, a general guide is available [[CyberShake compilation guide | here]]. | ||

=== Configuration file === | === Configuration file === | ||

| Line 18: | Line 24: | ||

The configuration file is available at: | The configuration file is available at: | ||

| − | + | https://github.com/SCECcode/cybershake-core/cybershake.cfg | |

| − | Obviously, this file must be edited to be correct for the install. | + | Obviously, this file must be edited to be correct for the install. |

| − | + | The keys that CyberShake currently expects to find are: | |

| − | + | *CS_PATH = /path/to/CyberShake/software/directory | |

| + | *SCRATCH_PATH = /path/to/shared/scratch | ||

| + | *TMP_PATH = /path/to/tmp (can be node-local, or shared with scratch) | ||

| + | *RUPTURE_PATH = /path/to/CyberShake/rupture/directory | ||

| + | *MPI_CMD = ibrun or aprun or mpiexec | ||

| + | *LOG_PATH = /path/to/CyberShake/logs/directory | ||

| + | |||

| + | To interact with cybershake.cfg, the CyberShake codes use a Python script to deliver cybershake.cfg entries as key-value pairs, located here: | ||

| + | https://github.com/SCECcode/cybershake-core/config.py | ||

Several CyberShake codes import config, then use it to read out the cybershake.cfg file. | Several CyberShake codes import config, then use it to read out the cybershake.cfg file. | ||
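As an illustration of the key-value approach described above, here is a minimal sketch of a reader for a file in the 'KEY = value' layout shown in the key list. This is not the contents of config.py: the function names read_config and get_property are hypothetical, and treating lines that start with '#' as comments is an assumption.

```python
def read_config(path):
    """Parse 'KEY = value' lines into a dict.

    Blank lines, lines starting with '#' (an assumed comment syntax),
    and lines without '=' are skipped.
    """
    entries = {}
    with open(path) as cfg:
        for line in cfg:
            line = line.strip()
            if not line or line.startswith("#"):
                continue
            key, sep, value = line.partition("=")
            if not sep:
                continue  # not a key-value pair
            entries[key.strip()] = value.strip()
    return entries


def get_property(entries, key):
    """Return the value for key, failing loudly if the key is absent."""
    if key not in entries:
        raise KeyError("%s not found in configuration" % key)
    return entries[key]
```

A wrapper script could then call something like get_property(read_config(path_to_cfg), "CS_PATH") to locate the software directory.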

| + | |||

| + | === Compiler file === | ||

| + | |||

| + | A long time ago, Gideon Juve created a compiler file, Compilers.mk, which contains information about which compilers should be used for which system. This file should also be downloaded using 'svn export' and installed in the software directory, from | ||

| + | https://github.com/SCECcode/cybershake-core/Compilers.mk | ||

| + | |||

| + | Some of the makefiles reference this file. This can - and should - be updated to reflect new systems. | ||

== SGT-related codes == | == SGT-related codes == | ||

| Line 38: | Line 59: | ||

<b>Needs to be changed if:</b> | <b>Needs to be changed if:</b> | ||

| − | #The CyberShake volume depth needs to be changed, so as to have the right number of grid points. That is set in the genGrid() function in GenGrid_py/gen_grid.py. | + | #The CyberShake volume depth needs to be changed, so as to have the right number of grid points. That is set in the genGrid() function in GenGrid_py/gen_grid.py, in km. |

#X and Y padding needs to be altered. That is set using 'bound_pad' in Modelbox/get_modelbox.py, around line 70. | #X and Y padding needs to be altered. That is set using 'bound_pad' in Modelbox/get_modelbox.py, around line 70. | ||

#The rotation of the simulation volume needs to be changed. That is set using 'model_rot' in Modelbox/get_modelbox.py, around line 70. | #The rotation of the simulation volume needs to be changed. That is set using 'model_rot' in Modelbox/get_modelbox.py, around line 70. | ||

| Line 44: | Line 65: | ||

#The divisibility needs for GPU simulations change (currently, we need the dimensions to be evenly divisible by the number of GPUs used in that dimension). That is in Modelbox/get_modelbox.py, around line 250. | #The divisibility needs for GPU simulations change (currently, we need the dimensions to be evenly divisible by the number of GPUs used in that dimension). That is in Modelbox/get_modelbox.py, around line 250. | ||
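To make the GPU divisibility constraint concrete, the sketch below rounds a grid dimension up to the next multiple of the GPU count in that dimension. It is an illustration only, not the logic in get_modelbox.py; the function name pad_to_multiple is hypothetical, and rounding up (rather than down) is an assumption.

```python
def pad_to_multiple(n_points, n_gpus):
    """Return the smallest value >= n_points that n_gpus divides evenly."""
    if n_gpus <= 0:
        raise ValueError("n_gpus must be positive")
    remainder = n_points % n_gpus
    if remainder == 0:
        return n_points
    return n_points + (n_gpus - remainder)
```

For example, a dimension of 1001 grid points split across 8 GPUs would be padded to 1008 points under this scheme.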

| − | <b>Source code location:</b> | + | <b>Source code location:</b> https://github.com/SCECcode/cybershake-core/PreCVM/ |

<b>Author:</b> Rob Graves, wrapped by Scott Callaghan | <b>Author:</b> Rob Graves, wrapped by Scott Callaghan | ||

| − | <b>Dependencies:</b> Getpar, MySQLdb for Python | + | <b>Dependencies:</b> [[CyberShake_Code_Base#Getpar|Getpar]], [[CyberShake_Code_Base#MySQLdb|MySQLdb for Python]] |

<b>Executable chain:</b> | <b>Executable chain:</b> | ||

| Line 57: | Line 78: | ||

GenGrid_py/bin/gen_model_cords | GenGrid_py/bin/gen_model_cords | ||

| − | <b>Compile instructions:</b>Run 'make' in the Modelbox/src and the | + | <b>Compile instructions:</b>Run 'make' in the Modelbox/src and the GenGrid_py/src directories. |

<b>Usage:</b> | <b>Usage:</b> | ||

| Line 91: | Line 112: | ||

<b>Purpose:</b> To generate a populated velocity mesh for a CyberShake simulation volume. | <b>Purpose:</b> To generate a populated velocity mesh for a CyberShake simulation volume. | ||

| − | <b>Detailed description:</b> UCVM takes the volume defined by PreCVM and queries the [[UCVM]] software to populate the volume. The resulting mesh is then checked for Vp/Vs ratio, minimum Vp/Vs/rho, and for no Infs or NaNs. The data is outputted in either Graves (RWG) format or AWP format. | + | <b>Detailed description:</b> UCVM takes the volume defined by PreCVM and queries the [[UCVM]] software, using the C API, to populate the volume. The resulting mesh is then checked for Vp/Vs ratio, minimum Vp/Vs/rho, and for no Infs or NaNs. The data is outputted in either Graves (RWG) format or AWP format. This code also produces log files, which will be written to the CyberShake logs directory/GenLog/site/v_mpi-<processor number>.log. This can be useful if there's an error and you aren't sure why. |
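The mesh checks described above can be sketched roughly as follows for a single mesh point. This is not the C code in ucvm-single-mpi: the function name check_mesh_point is hypothetical, all thresholds are supplied by the caller rather than taken from CyberShake, and interpreting the Vp/Vs check as a minimum ratio is an assumption.

```python
import math


def check_mesh_point(vp, vs, rho, min_vp, min_vs, min_rho, min_vp_vs_ratio):
    """Return a list of problems found for one mesh point (empty if none)."""
    problems = []
    # Infs and NaNs are checked first, since comparisons on them are meaningless.
    for name, value in (("vp", vp), ("vs", vs), ("rho", rho)):
        if math.isnan(value) or math.isinf(value):
            problems.append(name + " is not finite")
    if problems:
        return problems
    # Minimum value checks.
    for name, value, floor in (("vp", vp, min_vp), ("vs", vs, min_vs), ("rho", rho, min_rho)):
        if value < floor:
            problems.append(name + " is below its minimum")
    # Ratio check (assumed here to be a lower bound on Vp/Vs).
    if vs > 0 and vp / vs < min_vp_vs_ratio:
        problems.append("vp/vs ratio is too low")
    return problems
```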

<b>Needs to be changed if:</b> | <b>Needs to be changed if:</b> | ||

#New velocity models are added. Velocity models are specified in the DAX and passed through the wrapper scripts into the C code and then ultimately to UCVM, so an if statement must be added to around line 250 (and around line 450 if it's applicable for no GTL). | #New velocity models are added. Velocity models are specified in the DAX and passed through the wrapper scripts into the C code and then ultimately to UCVM, so an if statement must be added to around line 250 (and around line 450 if it's applicable for no GTL). | ||

#The backend UCVM substantially changes. If we move to the Python implementation, for example. | #The backend UCVM substantially changes. If we move to the Python implementation, for example. | ||

| + | #If additional models are added, new libraries may need to be added to the makefile. | ||

| − | <b>Source code location:</b> | + | <b>Source code location:</b> https://github.com/SCECcode/cybershake-core/UCVM |

<b>Author:</b> Scott Callaghan | <b>Author:</b> Scott Callaghan | ||

| − | <b>Dependencies:</b> Getpar, UCVM | + | <b>Dependencies:</b> [[CyberShake_Code_Base#Getpar|Getpar]], [[CyberShake_Code_Base#UCVM|UCVM]] |

<b>Executable chain:</b> | <b>Executable chain:</b> | ||

single_exe.py | single_exe.py | ||

| − | + | single_exe.csh | |

bin/ucvm-single-mpi | bin/ucvm-single-mpi | ||

| − | <b>Compile instructions:</b> | + | <b>Compile instructions:</b>The makefile needs to be edited so that "UCVM_HOME" points to the UCVM home directory. Then run 'make' in the UCVM/src directory. |

<b>Usage:</b> | <b>Usage:</b> | ||

| Line 146: | Line 168: | ||

#We start using velocity models whose boundaries aren't perpendicular to the earth's surface. | #We start using velocity models whose boundaries aren't perpendicular to the earth's surface. | ||

| − | <b>Source code location:</b> | + | <b>Source code location:</b> https://github.com/SCECcode/cybershake-core/UCVM/smoothing |

<b>Author:</b> Scott Callaghan | <b>Author:</b> Scott Callaghan | ||

| − | <b>Dependencies:</b> UCVM | + | <b>Dependencies:</b> [[CyberShake_Code_Base#UCVM|UCVM]] |

<b>Executable chain:</b> | <b>Executable chain:</b> | ||

| Line 158: | Line 180: | ||

smoothing/smooth_mpi | smoothing/smooth_mpi | ||

| − | <b>Compile instructions:</b>Run 'make' in the smoothing directory, and make sure that | + | <b>Compile instructions:</b>Run 'make' in the smoothing directory, and make sure that determine_surface_model has been compiled in the UCVM/src directory. You may need to change the compiler; currently it uses 'cc'. |

<b>Usage:</b> | <b>Usage:</b> | ||

| Line 189: | Line 211: | ||

<b>Needs to be changed if:</b> | <b>Needs to be changed if:</b> | ||

#We change our approach for saving adaptive mesh points. | #We change our approach for saving adaptive mesh points. | ||

| + | #We change the location of the rupture geometry files, currently assumed to be <rupture root>/Ruptures_erf<erf ID> . This is specified in presgt.py, line 167. | ||

| + | #The directory hierarchy and naming scheme for rupture geometry files, currently <src id>/<rup id>/<src id>_<rup_id>.txt, changes. This is specified in faultlist_py/CreateFaultList.py, line 36. | ||

| + | #The number of header lines in the rupture geometry file changes. This would require changing the nheader value, currently 6, specified in faultlist_py/CreateFaultList.py, line 36. | ||

#We switch to RSQSim ruptures, or other ruptures in which the geometry isn't planar. Modifications would be required to gen_sgtgrid.c. | #We switch to RSQSim ruptures, or other ruptures in which the geometry isn't planar. Modifications would be required to gen_sgtgrid.c. | ||
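The rupture geometry layout described in the list above can be illustrated with a short sketch that assembles the expected path and skips the header lines. This is not the code in presgt.py or CreateFaultList.py; the function names are hypothetical, and only the directory scheme and the nheader value of 6 come from the text.

```python
import posixpath


def rupture_geometry_path(rupture_root, erf_id, src_id, rup_id):
    """Build <rupture root>/Ruptures_erf<erf ID>/<src id>/<rup id>/<src id>_<rup id>.txt."""
    return posixpath.join(
        rupture_root,
        "Ruptures_erf%d" % erf_id,
        str(src_id),
        str(rup_id),
        "%d_%d.txt" % (src_id, rup_id),
    )


def read_geometry_body(path, nheader=6):
    """Return the lines of a rupture geometry file after the header lines."""
    with open(path) as geometry:
        lines = geometry.readlines()
    return lines[nheader:]
```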

| − | <b>Source code location:</b> | + | <b>Source code location:</b> https://github.com/SCECcode/cybershake-core/PreSgt |

<b>Author:</b> Rob Graves, heavily modified by Scott Callaghan | <b>Author:</b> Rob Graves, heavily modified by Scott Callaghan | ||

| − | <b>Dependencies:</b> Getpar, libcfu, MySQLdb for Python | + | <b>Dependencies:</b> [[CyberShake_Code_Base#Getpar|Getpar]], [[CyberShake_Code_Base#libcfu|libcfu]], [[CyberShake_Code_Base#MySQLdb|MySQLdb for Python]] |

<b>Executable chain:</b> | <b>Executable chain:</b> | ||

| Line 219: | Line 244: | ||

<b>Purpose:</b> To generate input files in a format that AWP-ODC expects. | <b>Purpose:</b> To generate input files in a format that AWP-ODC expects. | ||

| − | <b>Detailed description:</b> PreAWP | + | <b>Detailed description:</b> PreAWP performs a number of steps: |

| + | #An IN3D parameter file is produced, needed for AWP-ODC. | ||

| + | #A file with the SGT coordinates to save in AWP format is produced. Since RWG and AWP use different coordinate systems, a coordinate transformation (X->Y, Y->X, zero-indexing->one-indexing) is performed on the SGT coordinates file. | ||

| + | #The velocity file is translated to AWP format, if it isn't in AWP format already. | ||

| + | #The correct source, based on the dt and nt, is selected. The source must be generated manually ahead of time. Details about source generation are given [[CyberShake Code Base#Impulse source descriptions | here]]. | ||

| + | #Striping for the output file is also set up here. | ||

| + | #Files are symlinked into the directory structure that AWP expects. Note that slightly different versions of this exist for the CPU and GPU implementations of AWP-ODC-SGT. | ||
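The coordinate transformation in the second step above (X->Y, Y->X, zero-indexing->one-indexing) can be sketched as below. This is an illustration, not the code in build_awp_inputs.py; the function name is hypothetical, and applying the one-based shift to the Z index as well is an assumption.

```python
def rwg_to_awp_coords(x, y, z):
    """Convert a zero-based RWG grid index to a one-based AWP grid index.

    X and Y are swapped, then every index is shifted by one.
    Shifting Z too is an assumption made for this sketch.
    """
    return (y + 1, x + 1, z + 1)
```

Under these assumptions, the RWG point (0, 5, 2) maps to the AWP point (6, 1, 3).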

<b>Needs to be changed if:</b> | <b>Needs to be changed if:</b> | ||

| + | #The path to the Lustre striping command (lfs) changes. This path is hard-coded in build_awp_inputs.py, line 14. Note that this is the path to lfs on the compute node, NOT the login node. | ||

#The AWP code changes its input format. | #The AWP code changes its input format. | ||

| − | <b>Source code location:</b> | + | <b>Source code location:</b> https://github.com/SCECcode/cybershake-core/AWP-HIP-SGT/utils/ (HIP GPU) or https://github.com/SCECcode/cybershake-core/AWP-GPU-SGT/utils/ (CUDA GPU) or https://github.com/SCECcode/cybershake-core/AWP-ODC-SGT/utils/ (CPU), AND also https://github.com/SCECcode/cybershake-core/SgtHead |

<b>Author:</b> Scott Callaghan | <b>Author:</b> Scott Callaghan | ||

| Line 282: | Line 314: | ||

#New science or features are added to the AWP code. | #New science or features are added to the AWP code. | ||

| − | <b>Source code location:</b> | + | <b>Source code location:</b> https://github.com/SCECcode/cybershake-core/AWP-ODC-SGT |

<b>Author:</b> Kim Olsen, Steve Day, Yifeng Cui, various students and post-docs, wrapped by Scott Callaghan | <b>Author:</b> Kim Olsen, Steve Day, Yifeng Cui, various students and post-docs, wrapped by Scott Callaghan | ||

| − | <b>Dependencies:</b> iobuf | + | <b>Dependencies:</b> iobuf module |

<b>Executable chain:</b> | <b>Executable chain:</b> | ||

| Line 304: | Line 336: | ||

<b>Output files:</b> [[CyberShake_Code_Base#AWP SGT|AWP SGT file]]. | <b>Output files:</b> [[CyberShake_Code_Base#AWP SGT|AWP SGT file]]. | ||

| − | === AWP-ODC-SGT, GPU version === | + | === AWP-ODC-SGT, CUDA GPU version === |

<b>Purpose:</b> To perform SGT synthesis | <b>Purpose:</b> To perform SGT synthesis | ||

| Line 313: | Line 345: | ||

#New science or features are added to the AWP code. | #New science or features are added to the AWP code. | ||

| − | <b>Source code location:</b> | + | <b>Source code location:</b> https://github.com/SCECcode/cybershake-core/AWP-GPU-SGT |

<b>Author:</b> Kim Olsen, Steve Day, Yifeng Cui, various students and post-docs, wrapped by Scott Callaghan | <b>Author:</b> Kim Olsen, Steve Day, Yifeng Cui, various students and post-docs, wrapped by Scott Callaghan | ||

| − | <b>Dependencies:</b> CUDA toolkit | + | <b>Dependencies:</b> CUDA toolkit module |

<b>Executable chain:</b> | <b>Executable chain:</b> | ||

| Line 390: | Line 422: | ||

<b>Purpose:</b> To prepare the AWP results for use in post-processing. | <b>Purpose:</b> To prepare the AWP results for use in post-processing. | ||

| − | <b>Detailed description:</b> PostAWP reformats the AWP output files into the SGT component order expected by RWG ( | + | <b>Detailed description:</b> PostAWP prepares the outputs of AWP so that they can be used with the RWG-authored post-processing code. Specifically, it undoes the AWP coordinate transformation and reformats the AWP output files into the SGT component order expected by RWG (XX->YY, YY->XX, XZ->-YZ, YZ->-XZ, and all SGTs are doubled if we are calculating the Z-component), creates separate SGT header files, and calculates MD5 sums on the SGT files. Calculating the header information requires a number of input files, since lambda, mu, and the location of the impulse must all be included. The MD5 sums can be calculated separately, using the MD5 wrapper RunMD5sum. |
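The component reordering described above can be sketched for a single set of six SGT component values. This is not the code in prepare_for_pp.py. The function name is hypothetical, each arrow is read as "the AWP component on the left is stored as the RWG component on the right", and leaving ZZ and XY unchanged is an assumption, since the text does not mention them.

```python
def awp_to_rwg_components(awp, z_component=False):
    """Map AWP component values (dict keyed by 'XX', 'YY', ...) to RWG order.

    XX->YY, YY->XX, XZ->-YZ, YZ->-XZ; all values are doubled
    when the Z-component SGT is being calculated.
    """
    scale = 2.0 if z_component else 1.0
    rwg = {
        "YY": awp["XX"],
        "XX": awp["YY"],
        "ZZ": awp["ZZ"],   # assumed unchanged
        "XY": awp["XY"],   # assumed unchanged
        "YZ": -awp["XZ"],
        "XZ": -awp["YZ"],
    }
    return {name: scale * value for name, value in rwg.items()}
```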

<b>Needs to be changed if:</b> | <b>Needs to be changed if:</b> | ||

| Line 397: | Line 429: | ||

#We decide not to calculate MD5 sums | #We decide not to calculate MD5 sums | ||

| − | <b>Source code location:</b> | + | <b>Source code location:</b> https://github.com/SCECcode/cybershake-core/AWP-HIP-SGT/utils/prepare_for_pp.py (this will work for the CPU version of AWP also, despite the path); https://github.com/SCECcode/cybershake-core/SgtHead |

<b>Author:</b> Scott Callaghan | <b>Author:</b> Scott Callaghan | ||

| − | <b>Dependencies:</b> Getpar | + | <b>Dependencies:</b> [[CyberShake_Code_Base#Getpar|Getpar]] |

<b>Executable chain:</b> | <b>Executable chain:</b> | ||

| Line 419: | Line 451: | ||

<b>Output files:</b> [[CyberShake_Code_Base#RWG SGT|RWG SGT file]], [[CyberShake_Code_Base#SGT header file|SGT header file]] | <b>Output files:</b> [[CyberShake_Code_Base#RWG SGT|RWG SGT file]], [[CyberShake_Code_Base#SGT header file|SGT header file]] | ||

| − | |||

=== RunMD5sum === | === RunMD5sum === | ||

| Line 430: | Line 461: | ||

#We change hash algorithms | #We change hash algorithms | ||

| − | <b>Source code location:</b> | + | <b>Source code location:</b> https://github.com/SCECcode/cybershake-core/SgtHead/run_md5sum.sh |

<b>Author:</b> Scott Callaghan | <b>Author:</b> Scott Callaghan | ||

| Line 446: | Line 477: | ||

<b>Typical run configuration:</b> Serial; for a 750 GB SGT, takes about 70 minutes. | <b>Typical run configuration:</b> Serial; for a 750 GB SGT, takes about 70 minutes. | ||

| − | <b>Input files:</b> [[CyberShake_Code_Base# | + | <b>Input files:</b> [[CyberShake_Code_Base#RWG SGT|RWG SGT file]] |

<b>Output files:</b> MD5sum, with filename <RWG SGT filename>.md5 | <b>Output files:</b> MD5sum, with filename <RWG SGT filename>.md5 | ||

| + | |||

| + | === NanCheck === | ||

| + | |||

| + | <b>Purpose:</b> Check the SGTs for anomalies before the post-processing. | ||

| + | |||

| + | <b>Detailed description:</b> This code checks to be sure the SGTs are the expected size, then checks for NaNs or too many consecutive zeros in the SGT files. | ||
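The three checks named above can be sketched as follows. This is not the check_for_nans source: the function names are hypothetical, the zero-run threshold is supplied by the caller, and the size formula (six components of 4-byte floats per point per timestep) is an assumption about the SGT layout.

```python
import math


def expected_sgt_bytes(n_points, n_timesteps, n_components=6, bytes_per_value=4):
    """Expected file size under the assumed layout (see lead-in)."""
    return n_points * n_timesteps * n_components * bytes_per_value


def check_sgt_values(values, max_zero_run):
    """Return a description of the first anomaly found, or None if clean."""
    zero_run = 0
    for index, value in enumerate(values):
        if math.isnan(value):
            return "NaN at index %d" % index
        if value == 0.0:
            zero_run += 1
            if zero_run > max_zero_run:
                return "more than %d consecutive zeros ending at index %d" % (max_zero_run, index)
        else:
            zero_run = 0
    return None
```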

| + | |||

| + | <b>Needs to be changed if:</b> | ||

| + | #We change the number of timesteps in the SGT file. Currently this is hardcoded, but it should be a command-line parameter. | ||

| + | #We want to add additional checks. | ||

| + | |||

| + | <b>Source code location:</b> https://github.com/SCECcode/cybershake-core/SgtTest/ | ||

| + | |||

| + | <b>Author:</b> Rob Graves, Scott Callaghan | ||

| + | |||

| + | <b>Dependencies:</b> [[CyberShake_Code_Base#Getpar|Getpar]] | ||

| + | |||

| + | <b>Executable chain:</b> | ||

| + | perform_checks.py | ||

| + | bin/check_for_nans | ||

| + | |||

| + | <b>Compile instructions:</b> Run 'make' in SgtTest/src . | ||

| + | |||

| + | <b>Usage:</b> | ||

| + | <pre>Usage: ./perform_checks.py <SGT file> <SGT header file></pre> | ||

| + | |||

| + | <b>Typical run configuration:</b> Serial; for a 750 GB SGT, takes about 45 minutes. | ||

| + | |||

| + | <b>Input files:</b> [[CyberShake_Code_Base#AWP SGT|AWP SGT file]], [[CyberShake_Code_Base#Sgt Coords|RWG coordinate file]], [[CyberShake_Code_Base#IN3D | IN3D file]] | ||

| + | |||

| + | <b>Output files:</b> none | ||

| + | |||

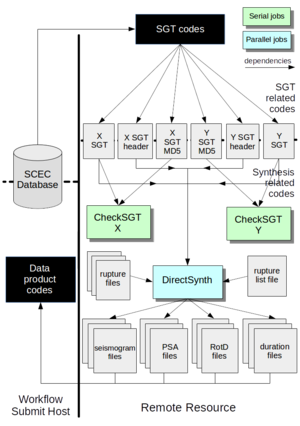

| + | == PP-related codes == | ||

| + | |||

| + | The following codes are related to the post-processing part of the workflow. | ||

| + | |||

| + | [[File:PP_workflow_stages.png|thumb|right|300px|Overview of the codes involved in the PP part of CyberShake, [http://hypocenter.usc.edu/research/cybershake/full_PP_workflow.odg source file (ODG)]]] | ||

| + | |||

| + | |||

| + | === CheckSgt === | ||

| + | |||

| + | <b>Purpose:</b> To check the MD5 sums of the SGT files to be sure they match. | ||

| + | |||

| + | <b>Detailed description:</b> CheckSgt takes the SGT files and their corresponding MD5 sums and checks for agreement. | ||
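A minimal sketch of this kind of agreement check is shown below, using Python's standard hashlib module. It is not the contents of CheckSgt.py; the function names are hypothetical, and it assumes the MD5 file follows the md5sum convention of putting the hex digest first on the line. Reading in chunks matters for files as large as an SGT, which cannot be loaded into memory at once.

```python
import hashlib


def md5_of_file(path, chunk_bytes=1 << 20):
    """Compute the MD5 hex digest of a file, reading it in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as data:
        for chunk in iter(lambda: data.read(chunk_bytes), b""):
            digest.update(chunk)
    return digest.hexdigest()


def sums_match(data_path, md5_path):
    """Compare a file's MD5 sum with the first token stored in md5_path."""
    with open(md5_path) as md5_file:
        expected = md5_file.read().split()[0].lower()
    return md5_of_file(data_path) == expected
```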

| + | |||

| + | <b>Needs to be changed if:</b> | ||

| + | #We change hashing algorithms. | ||

| + | #We decide to add additional sanity checks to the beginning of the post-processing. | ||

| + | |||

| + | <b>Source code location:</b> https://github.com/SCECcode/cybershake-core/CheckSgt | ||

| + | |||

| + | <b>Author:</b> Scott Callaghan | ||

| + | |||

| + | <b>Dependencies:</b> none | ||

| + | |||

| + | <b>Executable chain:</b> | ||

| + | CheckSgt.py | ||

| + | |||

| + | <b>Compile instructions:</b> none | ||

| + | |||

| + | <b>Usage:</b> | ||

| + | <pre>Usage: ./CheckSgt.py <sgt file> <md5 file> | ||

| + | </pre> | ||

| + | |||

| + | <b>Typical run configuration:</b> Serial; for a 750 GB SGT, takes about 90 minutes. | ||

| + | |||

| + | <b>Input files:</b> [[CyberShake_Code_Base#RWG SGT|RWG SGT]], SGT MD5 sums | ||

| + | |||

| + | <b>Output files:</b> None | ||

| + | |||

| + | === DirectSynth === | ||

| + | |||

| + | DirectSynth is the code we currently use to perform the post-processing. For historical reasons, all of the codes used for CyberShake post-processing are documented here: [https://scec.usc.edu/it/Post-processing_options CyberShake post-processing options] (login required). | ||

| + | |||

| + | <b>Purpose:</b> To perform reciprocity calculations and produce seismograms, intensity measures, and duration measures. | ||

| + | |||

| + | <b>Detailed description:</b> DirectSynth reads in the SGTs across a group of processes, and hands out tasks (synthesis jobs) to worker processes. These worker processes read in rupture geometry information from disk and call the RupGen-api to generate full slip histories in memory. The workers request SGTs from the reader processes over MPI. X and Y component PSA calculations are performed from the resultant seismograms, and RotD and duration calculations are also performed, if requested. More details about the approach used are available at [[DirectSynth]]. | ||

| + | |||

| + | <b>Needs to be changed if:</b> | ||

| + | #We have new intensity measures or other calculations per seismogram to perform. | ||

| + | #We decide to change the post-processing algorithm. | ||

| + | #The wrapper needs to be modified if we want to set different custom environment variables. | ||

| + | |||

| + | <b>Source code location:</b> https://github.com/SCECcode/cybershake-core/DirectSynth | ||

| + | |||

| + | <b>Author:</b> Scott Callaghan, original seismogram synthesis code by Rob Graves, X and Y component PSA code by David Okaya, RotD code by Christine Goulet | ||

| + | |||

| + | <b>Dependencies:</b> [[CyberShake Code Base#Getpar | Getpar]], [[CyberShake Code Base#libcfu | libcfu]], [[CyberShake Code Base#RupGen-api-v3.3.1 | RupGen-api-v3.3.1]], [[CyberShake Code Base#FFTW | FFTW]], [[CyberShake Code Base#libmemcached | libmemcached]] (optional) and [[CyberShake Code Base#memcached | memcached]] (optional) | ||

| + | |||

| + | <b>Executable chain:</b> | ||

| + | direct_synth_v3.3.1.py (current version, uses the Graves & Pitarka (2014) rupture generator) | ||

| + | utils/pegasus_wrappers/invoke_memcached.sh | ||

| + | memcached | ||

| + | bin/direct_synth | ||

| + | |||

| + | <b>Compile instructions:</b> | ||

| + | #Compile RupGen-api first. | ||

| + | #Edit the makefile in DirectSynth/src . Check the following variables: | ||

| + | ##BASE_DIR should point to the top-level CyberShake install directory | ||

| + | ##LIBCFU should point to the libcfu install directory | ||

| + | ##V3_3_1_RG_LIB should point to the RupGen-api-3.3.1/lib directory | ||

| + | ##LDLIBS should have the correct paths to the libcfu and libmemcached lib directories | ||

| + | ##V3_3_1_RG_INC should point to the RupGen-api-3.3.1/include directory | ||

| + | ##IFLAGS should have the correct paths to the libcfu and libmemcached include directories | ||

| + | #Run 'make direct_synth_v3.3.1' in DirectSynth/src. | ||

| + | |||

| + | You will also need to edit the hard-coded paths to memcached in direct_synth_v3.3.1.py, in lines 15 and 24. | ||

| + | |||

| + | <b>Usage:</b> | ||

| + | <pre> | ||

| + | direct_synth_v3.3.1.py | ||

| + | stat=<site short name> | ||

| + | slat=<site lat> | ||

| + | slon=<site lon> | ||

| + | run_id=<run id> | ||

| + | sgt_handlers=<number of SGT handler processes; must be enough for the SGTs to be read into memory> | ||

| + | debug=<print logs for each process; 1 is yes, 0 no> | ||

| + | max_buf_mb=<buffer size in MB for each worker to use for storing SGT information> | ||

| + | rupture_spacing=<'uniform' or 'random' hypocenter spacing> | ||

| + | ntout=<nt for seismograms> | ||

| + | dtout=<dt for seismograms> | ||

| + | rup_list_file=<input file containing ruptures to process> | ||

| + | sgt_xfile=<input SGT X file> | ||

| + | sgt_yfile=<input SGT Y file> | ||

| + | x_header=<input SGT X header> | ||

| + | y_header=<input SGT Y header> | ||

| + | det_max_freq=<maximum frequency of deterministic part> | ||

| + | stoch_max_freq=<maximum frequency of stochastic part> | ||

| + | run_psa=<'1' to run X and Y component PSA, '0' to not> | ||

| + | run_rotd=<'1' to run RotD calculations, '0' to not> | ||

| + | run_durations=<'1' to run duration calculation, '0' to not> | ||

| + | simulation_out_pointsX=<'2', the number of components> | ||

| + | simulation_out_pointsY=1 | ||

| + | simulation_out_timesamples=<same as ntout> | ||

| + | simulation_out_timeskip=<same as dtout> | ||

| + | surfseis_rspectra_seismogram_units=cmpersec | ||

| + | surfseis_rspectra_output_units=cmpersec2 | ||

| + | surfseis_rspectra_output_type=aa | ||

| + | surfseis_rspectra_period=all | ||

| + | surfseis_rspectra_apply_filter_highHZ=<high filter, 5.0 for 1 Hz runs, 20.0 or higher for 10 Hz runs> | ||

| + | surfseis_rspectra_apply_byteswap=no | ||

| + | </pre> | ||
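Since sgt_handlers must be large enough for the SGTs to be read into memory, a rough lower bound can be estimated as sketched below. This is a back-of-the-envelope illustration, not a calculation taken from DirectSynth; the function name and the idea of a fixed usable memory budget per handler process are assumptions.

```python
def min_sgt_handlers(sgt_x_bytes, sgt_y_bytes, usable_bytes_per_handler):
    """Smallest handler count whose combined memory can hold both SGT files."""
    if usable_bytes_per_handler <= 0:
        raise ValueError("usable_bytes_per_handler must be positive")
    total = sgt_x_bytes + sgt_y_bytes
    # Integer ceiling division avoids floating-point error on large sizes.
    return max(1, -(-total // usable_bytes_per_handler))
```

In practice some headroom above this bound is likely needed, since each process also uses memory for buffers and bookkeeping.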

| + | |||

| + | <b>Typical run configuration:</b> Parallel, typically on 3840 processors; for 750 GB SGTs with ~7000 ruptures, takes about 12 hours. | ||

| + | |||

| + | <b>Input files:</b> [[CyberShake_Code_Base#RWG SGT|RWG SGT]], [[CyberShake_Code_Base#SGT header file|SGT headers]], [[CyberShake_Code_Base#rupture list file|rupture list file]], rupture geometry files | ||

| + | |||

| + | <b>Output files:</b> [[Accessing_CyberShake_Seismograms#Reading_Seismogram_Files | Seismograms]], [[Accessing_CyberShake_Peak_Acceleration_Data#Reading_Peak_Acceleration_Files | PSA files]], [[Accessing_CyberShake_Peak_Acceleration_Data#Reading_Peak_Acceleration_Files | RotD files]], [[Accessing_CyberShake_Duration_Data | Duration files]] | ||

| + | |||

| + | == Data Product Codes == | ||

| + | |||

| + | The software in this section takes the data products produced by the SGT and post-processing stages, adds some of it to the database, and creates final data products. Note that all these codes should be installed on a server close to the database, to reduce insertion and query time. Currently these are all installed on SCEC disks and accessed from shock.usc.edu. | ||

| + | |||

| + | [[File:Data_workflow_stages.png|thumb|right|300px|Overview of the codes involved in the data product of CyberShake, [http://hypocenter.usc.edu/research/cybershake/full_data_workflow.odg source file (ODG)]]] | ||

| + | |||

| + | === Load Amps === | ||

| + | |||

| + | <b>Purpose:</b> Load data from output files into the database. | ||

| + | |||

| + | <b>Detailed description:</b> This code loads either PSA, RotD, or Duration data into the database, depending on command-line options. It also performs sanity checks on the PSA data being inserted: values must be between 0.008 and 8400 cm/s2. Values below 0.008 may still be passed through if they come from a small-magnitude event at a large distance. If the constraint is otherwise violated, the code aborts. Note that if LoadAmps needs to be rerun, the database must sometimes be cleaned out first, since data from the previous attempt may have been inserted successfully and will cause duplicate key errors if the same data is inserted again. | ||
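The PSA range check can be sketched as follows. This is written in Python for illustration and is not the Java code in CyberCommands; the names are hypothetical. Because the text does not give the exact magnitude and distance criteria for letting small values through, that decision is left to the caller as a flag. The g to cm/s2 factor uses standard gravity, 980.665 cm/s2; whether the -c option uses exactly this value is an assumption.

```python
MIN_PSA_CM_S2 = 0.008
MAX_PSA_CM_S2 = 8400.0
G_IN_CM_S2 = 980.665  # standard gravity; assumed conversion factor


def g_to_cm_s2(value_in_g):
    """Convert an acceleration from units of g to cm/s^2."""
    return value_in_g * G_IN_CM_S2


def psa_value_ok(value, small_distant_event=False):
    """Return True if a PSA value (cm/s^2) passes the sanity check.

    small_distant_event marks a small-magnitude event at large distance,
    for which values below the minimum are tolerated.
    """
    if value > MAX_PSA_CM_S2:
        return False
    if value < MIN_PSA_CM_S2:
        return small_distant_event
    return True
```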

| + | |||

| + | <b>Needs to be changed if:</b> | ||

| + | #We change the sanity checks on data inserts. | ||

| + | #We modify the format of the PSA, RotD, or Duration files. | ||

| + | #We add new types of data to insert. | ||

| + | #We change the database schema. | ||

| + | #We add a new server. To add a new server, in addition to providing a command-line option for it, you will need to create a Hibernate config file. You can start with moment.cfg.xml or focal.cfg.xml and edit lines 7-16 appropriately. | ||

| + | |||

| + | <b>Source code location:</b> https://github.com/SCECcode/cybershake-core/CyberCommands | ||

| + | |||

| + | <b>Author:</b> Joshua Garcia, Scott Callaghan | ||

| + | |||

| + | <b>Dependencies:</b> LoadAmps calls CyberCommands, a Java code with a long list of dependencies (all of these are checked into the Java project): | ||

| + | |||

| + | *Ant | ||

| + | *Apache Commons | ||

| + | *Hibernate | ||

| + | *MySQL bindings | ||

| + | *Xerces | ||

| + | *DOM4J | ||

| + | *Log4J | ||

| + | *Java 1.6+ | ||

| + | |||

| + | <b>Executable chain:</b> | ||

| + | insert_dir.sh | ||

| + | CyberLoadAmps_SC | ||

| + | cybercommands_SC.jar | ||

| + | CyberLoadamps.java | ||

| + | |||

| + | <b>Compile instructions:</b> Check out CyberCommands into Eclipse. Create the cybercommands_SC.jar file using Eclipse's JAR build framework and the cybercommands_SC.jardesc description file. Install cybercommands_SC.jar and the required JAR files on the server. Point insert_dir.sh to CyberLoadAmps_SC, and CyberLoadAmps_SC to cybercommands_SC.jar. | ||

| + | |||

| + | <b>Usage:</b> | ||

| + | <pre>Usage: CyberLoadAmps [-r | -d | -z | -u][-c] [-d] [-periods periods] [-run RunID] [-p directory] [-server name] [-z] [-help] [-i insertion_values] | ||

| + | [-u] [-f] | ||

| + | -i <insertion_values> Which values to insert - | ||

| + | gm: geometric mean PSA data (default) | ||

| + | xy: X and Y component PSA data | ||

| + | gmxy: Geometric mean and X and Y components | ||

| + | -run <RunID> Run ID - this option is required | ||

| + | -p <directory> file path with spectral acceleration files, | ||

| + | either top-level directory or zip file - this option is required | ||

| + | -server <name> server name (focal, surface, intensity, moment, | ||

| + | or csep-x) - this option is required | ||

| + | -periods <periods> Comma-delimited periods to insert | ||

| + | -c Convert values from g to cm/sec^2 | ||

| + | -d Assume one BSA file per rupture, with embedded | ||

| + | header information. | ||

| + | -f Don't apply value checks to insertion values; use | ||

| + | with care!. | ||

| + | -help print this message | ||

| + | -r Read rotd files (instead of bsa.) | ||

| + | -u Read duration files (instead of bsa.) | ||

| + | -z Read zip files instead of bsa.</pre> | ||

| + | |||

| + | <b>Typical run configuration:</b> Serial; for 5 periods, takes about 10 minutes. It's wildly dependent on the database and contention from other database processes. | ||

| + | |||

| + | <b>Input files:</b> [[Accessing_CyberShake_Peak_Acceleration_Data#Reading_Peak_Acceleration_Files | PSA files]], [[Accessing_CyberShake_Peak_Acceleration_Data#Reading_Peak_Acceleration_Files | RotD files]], [[Accessing_CyberShake_Duration_Data | Duration files]] | ||

| + | |||

| + | <b>Output files:</b> none | ||

| + | |||

| + | === Check DB Site === | ||

| + | |||

| + | <b>Purpose:</b> Verify that data was correctly loaded into the database. | ||

| + | |||

| + | <b>Detailed description:</b> This code takes a list of components (or type IDs) to check for a run ID, and verifies that there is one entry for every rupture variation. If some rupture variations are missing, a file is produced which lists the missing source, rupture, rupture variation tuples. | ||
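The completeness check described above amounts to a set difference between the expected rupture variations and those found in the database. The sketch below is in Python for illustration and is not the logic of CheckDBDataForSite.java; the function names and the one-tuple-per-line output layout are assumptions.

```python
def find_missing_variations(expected, found):
    """Return sorted (source, rupture, rupture variation) tuples absent from found."""
    return sorted(set(expected) - set(found))


def write_missing_variations(path, missing):
    """Write one 'source rupture rup_var' line per missing tuple (assumed layout)."""
    with open(path, "w") as out:
        for source_id, rupture_id, rup_var_id in missing:
            out.write("%d %d %d\n" % (source_id, rupture_id, rup_var_id))
```

In this sketch, expected would come from the rupture variation list for the run and found from a query on the run ID for the component being checked.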

| + | |||

| + | <b>Needs to be changed if:</b> | ||

| + | #Username, password, or database host are changed. | ||

| + | #We change the database schema. | ||

| + | |||

| + | <b>Source code location:</b> https://github.com/SCECcode/cybershake-core/db/CheckDBDataForSite.java and DBConnect.java | ||

| + | |||

| + | <b>Author:</b> Scott Callaghan (CheckDBDataForSite.java), Nitin Gupta, Vipin Gupta, Phil Maechling (DBConnect.java) | ||

| + | |||

| + | <b>Dependencies:</b> Both are checked into the CyberShake project: | ||

| + | |||

| + | *Apache Commons | ||

| + | *MySQL bindings | ||

| + | |||

| + | <b>Executable chain:</b> | ||

| + | check_db.sh | ||

| + | CheckDBDataForSite.java | ||

| + | |||

| + | <b>Compile instructions:</b> Check out CheckDBDataForSite.java and DBConnect.java. Compile them by running 'javac -classpath mysql-connector-java-5.0.5-bin.jar:commons-cli-1.0.jar DBConnect.java CheckDBDataForSite.java'. The paths to the MySQL bindings jar and the Apache Commons jar may be different depending on your installation. | ||

| + | |||

| + | <b>Usage:</b> | ||

| + | <pre>usage: CheckDBDataForSite | ||

| + | -p <periods> Comma-separated list of periods to check, for geometric | ||

| + | and rotd. | ||

| + | -t <type_ids> Comma-separated list of type IDs to check, for duration. | ||

| + | -c <component> Component type (geometric, rotd, duration) to check. | ||

| + | -h,--help Print help for CheckDBDataForSite | ||

| + | -o <output> Path to output file, if something is missing (required). | ||

| + | -r <run_id> Run ID to check (required). | ||

| + | -s <server> DB server to query against. | ||

| + | </pre> | ||

| + | |||

| + | <b>Typical run configuration:</b> Serial; typically takes just a few seconds. Run time depends heavily on database load and on contention from other database processes. | ||

| + | |||

| + | <b>Input files:</b> none | ||

| + | |||

| + | <b>Output files:</b> [[CyberShake_Code_Base#Missing variations file | Missing variations file]] | ||

| + | |||

| + | === DB Report === | ||

| + | |||

| + | <b>Purpose:</b> Produce a database report, a data product which Rob Graves used for a time. | ||

| + | |||

| + | <b>Detailed description:</b> This code takes a run ID, queries the database for PSA values for all components, and writes the output to a text file. The list of periods and the DB config parameters are specified in an XML config file. | ||

| + | |||

| + | <b>Needs to be changed if:</b> | ||

| + | #Username, password, or database host are changed: the DB connection parameters in default.xml would need to be edited. | ||

| + | #We want results for different periods: edit default.xml. | ||

| + | #We change the database schema. | ||

| + | |||

| + | <b>Source code location:</b> https://github.com/SCECcode/cybershake-core/reports/db_report_gen.py . default.xml in the same directory is also needed, and can be generated by editing and running conf_gen.py, also in the same directory. | ||

| + | |||

| + | <b>Author:</b> Kevin Milner | ||

| + | |||

| + | <b>Dependencies:</b> MySQLdb | ||

| + | |||

| + | <b>Executable chain:</b> | ||

| + | db_report_gen.py | ||

| + | |||

| + | <b>Compile instructions:</b> None, all code is Python. | ||

| + | |||

| + | <b>Usage:</b> | ||

| + | <pre>Usage: db_report_gen.py [options] SITE_SHORT_NAME | ||

| + | |||

| + | NOTE: defaults are loaded from defaults.xml and can be edited manually | ||

| + | or overridden with conf_gen.py | ||

| + | |||

| + | Options: | ||

| + | -h, --help show this help message and exit | ||

| + | -e ERF_ID, --erfID=ERF_ID | ||

| + | ERF ID for Report (default = none) | ||

| + | -f FILENAME, --file=FILENAME | ||

| + | Store Results to a file instead of STDOUT. If a | ||

| + | directory is given, a name will be auto generated. | ||

| + | -i, --id Flag for specifying site ID instead of Short Name | ||

| + | (default uses Short Name) | ||

| + | --hypo, --hpyocenter Flag for appending hypocenter locations to result | ||

| + | -l LIMIT, --limit=LIMIT | ||

| + | Limit the total number of rusults, or 0 for no limit | ||

| + | (default = 0) | ||

| + | -o, --sort SLOW: Force SQL Order By statement for sorting. It | ||

| + | will probably come out sorted, but if it doesn't, you | ||

| + | can use this. (default will not sort) | ||

| + | -p PERIODS, --periods=PERIODS | ||

| + | Comma separated period values (default = 3.0,5.0,10.0) | ||

| + | --pr, --print-runs Print run IDs for site and optionally ERF/Rup Var | ||

| + | Scen/SGT Var IDs | ||

| + | -r RUP_VAR_SCENARIO_ID, --rupVarID=RUP_VAR_SCENARIO_ID | ||

| + | Rupture Variation Scenario ID for Report (default = | ||

| + | none) | ||

| + | --ri=RUN_ID, --runID=RUN_ID | ||

| + | Allows you to specify a run ID to use (default uses | ||

| + | latest compatible run ID) | ||

| + | -R RUPTURE, --rupture=RUPTURE | ||

| + | Only give information on specified rupture. Must be | ||

| + | acompanied by -S/--source flag (default shows all | ||

| + | ruptures) | ||

| + | -s SGT_VAR_ID, --sgtVarID=SGT_VAR_ID | ||

| + | SGT Variation ID for Report (default = none) | ||

| + | -S SOURCE, --source=SOURCE | ||

| + | Only give information on specified source. To specify | ||

| + | rupture, see -R option (default shows all sources) | ||

| + | --s_im, --sort-ims Sort output by IM value (increasing)...may be slow! | ||

| + | -v, --verbose Verbosity Flag (default = False) | ||

| + | </pre> | ||

| + | |||

| + | <b>Typical run configuration:</b> Serial; about 1 minute. Run time depends heavily on database load and on contention from other database processes. | ||

| + | |||

| + | <b>Input files:</b> default.xml | ||

| + | |||

| + | <b>Output files:</b> [[CyberShake_Code_Base#DB Report file | DB Report file]] | ||

| + | |||

| + | === Curve Calc === | ||

| + | |||

| + | <b>Purpose:</b> Calculate CyberShake hazard curves alongside comparison GMPEs. | ||

| + | |||

| + | <b>Detailed description:</b> This code takes a run ID, component, and period, queries the database for the appropriate IM values, and calculates a hazard curve in the desired format. Comparison GMPE curves can also be plotted. | ||
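To make the idea of "calculating a hazard curve from IM values" concrete, here is a small Python sketch of one common formulation. It assumes each rupture has a probability of occurrence and a set of equally weighted rupture variations, and it combines ruptures as independent events. These assumptions, along with the function and variable names, are illustrative only; HazardCurvePlotter in OpenSHA may differ in its details.

```python
def hazard_curve(ruptures, im_levels):
    """Probability of exceeding each IM level.

    ruptures: list of (probability, im_values), with one IM value per
    rupture variation. Variations are assumed to be equally likely.
    """
    curve = []
    for level in im_levels:
        prob_no_exceed = 1.0
        for prob, im_values in ruptures:
            # Fraction of this rupture's variations that exceed the level
            frac = sum(1 for v in im_values if v > level) / len(im_values)
            prob_no_exceed *= 1.0 - prob * frac
        curve.append(1.0 - prob_no_exceed)
    return curve


# Two hypothetical ruptures with made-up probabilities and IM values.
ruptures = [(0.01, [0.1, 0.2, 0.3, 0.4]), (0.002, [0.5, 0.6])]
levels = [0.05, 0.25, 0.55, 1.0]
curve = hazard_curve(ruptures, levels)
```

The resulting probabilities never increase as the IM level increases, which is the shape expected of a hazard curve.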

| + | |||

| + | <b>Needs to be changed if:</b> | ||

| + | #Username, password, or database host are changed. | ||

| + | #We change the database schema. | ||

| + | #New IM types need to be supported. | ||

| + | |||

| + | <b>Source code location:</b> The CyberShake curve calculator is part of the OpenSHA codebase. The specific Java class is org.opensha.sha.cybershake.plot.HazardCurvePlotter (available via https://github.com/opensha/opensha-cybershake/tree/master/src/main/java/org/opensha/sha/cybershake/plot), but it has a complex set of Java dependencies. To compile and run, you should follow the instructions on http://www.opensha.org/trac/wiki/SettingUpEclipse to access the source. The curve calculator is also wrapped by curve_plot_wrapper.sh, in https://github.com/SCECcode/cybershake-tools/blob/master/HazardCurveGeneration/curve_plot_wrapper.sh . | ||

| + | |||

| + | The OpenSHA project also has configuration files for various GMPEs, config files for UCERF2, and configuration files for output formats preferred by Tom and Rob, in src/org/opensha/sha/cybershake/conf. | ||

| + | |||

| + | <b>Author:</b> Kevin Milner | ||

| + | |||

| + | <b>Dependencies:</b> Standard OpenSHA dependencies | ||

| + | |||

| + | <b>Executable chain:</b> | ||

| + | curve_plot_wrapper.sh | ||

| + | HazardCurvePlotter.java | ||

| + | |||

| + | <b>Compile instructions:</b> Use the OpenSHA build process if building from source. | ||

| + | |||

| + | <b>Usage:</b> | ||

| + | <pre> | ||

| + | usage: HazardCurvePlotter [-?] [-af <arg>] [-benchmark] [-c] [-cmp <arg>] | ||

| + | [-comp <arg>] [-cvmvs] [-e <arg>] [-ef <arg>] [-f] [-fvs <arg>] [-h | ||

| + | <arg>] [-imid <arg>] [-imt <arg>] [-n] [-novm] [-o <arg>] [-p | ||

| + | <arg>] [-pf <arg>] [-pl <arg>] [-R <arg>] [-r <arg>] [-s <arg>] | ||

| + | [-sgt <arg>] [-sgtsym] [-t <arg>] [-v <arg>] [-vel <arg>] [-w | ||

| + | <arg>] | ||

| + | -?,--help Display this message | ||

| + | -af,--atten-rel-file <arg> XML Attenuation Relationship | ||

| + | description file(s) for comparison. | ||

| + | Multiple files should be comma | ||

| + | separated | ||

| + | -benchmark,--benchmark-test-recalc Forces recalculation of hazard | ||

| + | curves to test calculation speed. | ||

| + | Newly recalculated curves are not | ||

| + | kept and the original curves are | ||

| + | plotted. | ||

| + | -c,--calc-only Only calculate and insert the | ||

| + | CyberShake curves, don't make plots. | ||

| + | If a curve already exists, it will | ||

| + | be skipped. | ||

| + | -cmp,--component <arg> Intensity measure component. | ||

| + | Options: GEOM,X,Y,RotD100,RotD50, | ||

| + | Default: GEOM | ||

| + | -comp,--compare-to <arg> Compare to aspecific Run ID (or | ||

| + | multiple IDs, comma separated) | ||

| + | -cvmvs,--cvm-vs30 Option to use Vs30 value from the | ||

| + | velocity model itself in GMPE | ||

| + | calculations rather than, for | ||

| + | example, the Wills 2006 value. | ||

| + | -e,--erf-id <arg> ERF ID | ||

| + | -ef,--erf-file <arg> XML ERF description file for | ||

| + | comparison | ||

| + | -f,--force-add Flag to add curves to db without | ||

| + | prompt | ||

| + | -fvs,--force-vs30 <arg> Option to force the given Vs30 value | ||

| + | to be used in GMPE calculations. | ||

| + | -h,--height <arg> Plot height (default = 500) | ||

| + | -imid,--im-type-id <arg> Intensity measure type ID. If not | ||

| + | supplied, will be detected from im | ||

| + | type/component/period parameters | ||

| + | -imt,--im-type <arg> Intensity measure type. Options: SA, | ||

| + | Default: SA | ||

| + | -n,--no-add Flag to not automatically calculate | ||

| + | curves not in the database | ||

| + | -novm,--no-vm-colors Disables Velocity Model coloring | ||

| + | -o,--output-dir <arg> Output directory | ||

| + | -p,--period <arg> Period(s) to calculate. Multiple | ||

| + | periods should be comma separated | ||

| + | (default: 3) | ||

| + | -pf,--password-file <arg> Path to a file that contains the | ||

| + | username and password for inserting | ||

| + | curves into the database. Format | ||

| + | should be "user:pass" | ||

| + | -pl,--plot-chars-file <arg> Specify the path to a plot | ||

| + | characteristics XML file | ||

| + | -R,--run-id <arg> Run ID | ||

| + | -r,--rv-id <arg> Rupture Variation ID | ||

| + | -s,--site <arg> Site short name | ||

| + | -sgt,--sgt-var-id <arg> STG Variation ID | ||

| + | -sgtsym,--sgt-colors Enables SGT specific symbols | ||

| + | -t,--type <arg> Plot save type. Options are png, | ||

| + | pdf, jpg, and txt. Multiple types | ||

| + | can be comma separated (default is | ||

| + | pdf) | ||

| + | -v,--vs30 <arg> Specify default Vs30 for sites with | ||

| + | no Vs30 data, or leave blank for | ||

| + | default value. Otherwise, you will | ||

| + | be prompted to enter vs30 | ||

| + | interactively if needed. | ||

| + | -vel,--vel-model-id <arg> Velocity Model ID | ||

| + | -w,--width <arg> Plot width (default = 600) | ||

| + | </pre> | ||

| + | |||

| + | <b>Typical run configuration:</b> Serial; about 30 seconds per curve. Run time depends heavily on database load and on contention from other database processes. | ||

| + | |||

| + | <b>Input files:</b> ERF config file, GMPE config files | ||

| + | |||

| + | <b>Output files:</b> [[CyberShake_Code_Base#Hazard Curve | Hazard Curve]] | ||

| + | |||

| + | === Disaggregate === | ||

| + | |||

| + | <b>Purpose:</b> Disaggregate the curve results to determine the largest contributing sources. | ||

| + | |||

| + | <b>Detailed description:</b> This code takes a run ID, a probability or IM level, and a period to disaggregate at. It produces disaggregation distance-magnitude plots and also a list of the % contribution of each source. | ||

| + | |||

| + | <b>Needs to be changed if:</b> | ||

| + | #Username, password, or database host are changed. | ||

| + | #We change the database schema. | ||

| + | #We want to support different kinds of disaggregation, or for a different kind of ERF. | ||

| + | |||

| + | <b>Source code location:</b> The Disaggregator is part of the OpenSHA codebase. The specific Java class is org.opensha.sha.cybershake.plot.DisaggregationPlotter (available via https://github.com/opensha/opensha-cybershake/tree/master/src/main/java/org/opensha/sha/cybershake/plot), but it has a complex set of Java dependencies. To compile and run, you should follow the instructions on http://www.opensha.org/trac/wiki/SettingUpEclipse to access the source. The disaggregator is also wrapped by disagg_plot_wrapper.sh, in https://github.com/SCECcode/cybershake-tools/blob/master/HazardCurveGeneration/disagg_plot_wrapper.sh . | ||

| + | |||

| + | <b>Author:</b> Kevin Milner, Nitin Gupta, Vipin Gupta | ||

| + | |||

| + | <b>Dependencies:</b> Standard OpenSHA dependencies | ||

| + | |||

| + | <b>Executable chain:</b> | ||

| + | disagg_plot_wrapper.sh | ||

| + | DisaggregationPlotter.java | ||

| + | |||

| + | <b>Compile instructions:</b> Use the standard OpenSHA building process if building from source. | ||

| + | |||

| + | <b>Usage:</b> | ||

| + | <pre>usage: DisaggregationPlotter [-?] [-af <arg>] [-cmp <arg>] [-e <arg>] | ||

| + | [-fvs <arg>] [-i <arg>] [-imid <arg>] [-imt <arg>] [-o <arg>] [-p | ||

| + | <arg>] [-pr <arg>] [-r <arg>] [-R <arg>] [-s <arg>] [-sgt <arg>] | ||

| + | [-t <arg>] [-vel <arg>] | ||

| + | -?,--help Display this message | ||

| + | -af,--atten-rel-file <arg> XML Attenuation Relationship description | ||

| + | file(s) for comparison. Multiple files | ||

| + | should be comma separated | ||

| + | -cmp,--component <arg> Intensity measure component. Options: | ||

| + | GEOM,X,Y,RotD100,RotD50, Default: GEOM | ||

| + | -e,--erf-id <arg> ERF ID | ||

| + | -fvs,--force-vs30 <arg> Option to force the given Vs30 value to be | ||

| + | used in GMPE calculations. | ||

| + | -i,--imls <arg> Intensity Measure Levels (IMLs) to | ||

| + | disaggregate at. Multiple IMLs should be | ||

| + | comma separated. | ||

| + | -imid,--im-type-id <arg> Intensity measure type ID. If not supplied, | ||

| + | will be detected from im | ||

| + | type/component/period parameters | ||

| + | -imt,--im-type <arg> Intensity measure type. Options: SA, | ||

| + | Default: SA | ||

| + | -o,--output-dir <arg> Output directory | ||

| + | -p,--period <arg> Period(s) to calculate. Multiple periods | ||

| + | should be comma separated (default: 3) | ||

| + | -pr,--probs <arg> Probabilities (1 year) to disaggregate at. | ||

| + | Multiple probabilities should be comma | ||

| + | separated. | ||

| + | -r,--rv-id <arg> Rupture Variation ID | ||

| + | -R,--run-id <arg> Run ID | ||

| + | -s,--site <arg> Site short name | ||

| + | -sgt,--sgt-var-id <arg> STG Variation ID | ||

| + | -t,--type <arg> Plot save type. Options are png, pdf, and | ||

| + | txt. Multiple types can be comma separated | ||

| + | (default is pdf) | ||

| + | -vel,--vel-model-id <arg> Velocity Model ID | ||

| + | </pre> | ||

| + | |||

| + | <b>Typical run configuration:</b> Serial; typically takes about 30 seconds. Run time depends heavily on database load and on contention from other database processes. | ||

| + | |||

| + | <b>Input files:</b> none | ||

| + | |||

| + | <b>Output files:</b> [[CyberShake_Code_Base#Disaggregation file | Disaggregation file]] | ||

| + | |||

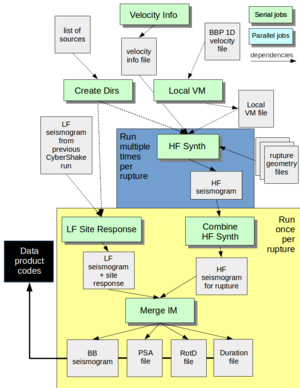

| + | == Stochastic codes == | ||

| + | |||

| + | With CyberShake, we also have the option to augment a completed run with stochastic seismograms. The following codes are used to add stochastic high-frequency content to an already-completed low-frequency deterministic run. | ||

| + | |||

| + | [[File:stochastic workflow overview.png|thumb|right|300px|Overview of the codes involved in the stochastic part of CyberShake, [http://hypocenter.usc.edu/research/cybershake/stochastic_workflow_overview.odg source file (ODG)]]] | ||

| + | |||

| + | === Velocity Info === | ||

| + | |||

| + | <b>Purpose:</b> To determine slowness-averaged VsX values for a CyberShake site, from UCVM. | ||

| + | |||

| + | <b>Detailed description:</b> Velocity Info takes a location, a velocity model, and grid spacing information and queries UCVM to generate three VsX values needed by the site response: | ||

| + | #Vs30, calculated as: 30 / sum( 1 / (Vs sampled from [0.5, 29.5] at 1 meter increments, for 30 values) ) | ||

| + | #Vs5H, like Vs30 but calculated over the shallowest 5*gridspacing meters. So if gridspacing=100m, Vs5H = 500 / sum( 1 / (Vs sampled from [0.5, 499.5] at 1 meter increments, for 500 values) ) | ||

| + | #VsD5H, like Vs30, but calculated over gridspacing increments instead of 1 meter, with the start and end samples weighted half as much as the others. So if gridspacing=100m, VsD5H = 5 / sum( w / (Vs sampled from [0, 500] at 100 meter increments, for 6 values) ), where w = 0.5 for the samples at 0 and 500 m and w = 1 otherwise. | ||
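The three slowness averages listed above can be sketched in Python as follows. This is an illustration only: vs_at_depth is a hypothetical callable standing in for a UCVM query at the site, and the half-weighting of the first and last VsD5H samples follows the description in the list rather than the source of retrieve_vs.c.

```python
def slowness_average(vs_values, weights):
    """Total weight divided by the weighted sum of slowness (1 / Vs)."""
    return sum(weights) / sum(w / vs for w, vs in zip(weights, vs_values))


def vs30(vs_at_depth):
    depths = [0.5 + i for i in range(30)]  # 0.5, 1.5, ..., 29.5 m
    return slowness_average([vs_at_depth(d) for d in depths], [1.0] * 30)


def vs5h(vs_at_depth, gridspacing):
    n = int(5 * gridspacing)  # number of 1 m samples, e.g. 500 for 100 m spacing
    depths = [0.5 + i for i in range(n)]
    return slowness_average([vs_at_depth(d) for d in depths], [1.0] * n)


def vsd5h(vs_at_depth, gridspacing):
    depths = [i * gridspacing for i in range(6)]  # 0, h, 2h, ..., 5h
    weights = [0.5, 1.0, 1.0, 1.0, 1.0, 0.5]  # endpoints count half
    return slowness_average([vs_at_depth(d) for d in depths], weights)


def two_layer(depth):
    """Hypothetical profile: 200 m/s above 250 m depth, 400 m/s below."""
    return 200.0 if depth < 250.0 else 400.0
```

For a uniform profile all three functions return the profile's velocity, which is a quick sanity check on any implementation.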

| + | |||

| + | <b>Needs to be changed if:</b> | ||

| + | #We need to support more than one model - for instance, if the CyberShake site box (not simulation volume) spans multiple models. The code to parse the model string and load models in initialize_ucvm() would need to be changed. | ||

| + | #We want to support new kinds of velocity values. | ||

| + | |||

| + | <b>Source code location:</b> https://github.com/SCECcode/cybershake-core/HFSim_mem/src/retrieve_vs.c | ||

| + | |||

| + | <b>Author:</b> Scott Callaghan | ||

| + | |||

| + | <b>Dependencies:</b> [[CyberShake_Code_Base#UCVM | UCVM]] | ||

| + | |||

| + | <b>Executable chain:</b> | ||

| + | retrieve_vs | ||

| + | |||

| + | <b>Compile instructions:</b> Run 'make retrieve_vs' | ||

| + | |||

| + | <b>Usage:</b> | ||

| + | <pre>Usage: ./retrieve_vs <lon> <lat> <model> <gridspacing> <out filename></pre> | ||

| + | |||

| + | <b>Typical run configuration:</b> Serial; takes about 15 seconds. | ||

| + | |||

| + | <b>Input files:</b> None | ||

| + | |||

| + | <b>Output files:</b> [[CyberShake_Code_Base#Velocity_Info file|Velocity Info file]] | ||

| + | |||

| + | === Local VM === | ||

| + | |||

| + | <b>Purpose:</b> To generate a "local" 1D velocity file, required for the high-frequency codes. | ||

| + | |||

| + | <b>Detailed description:</b> Local VM takes in an input file containing a 1D velocity model. It then calculates Qs from these values and writes all the velocity data to a new file. For all Study 15.12 runs, we used the LA Basin 1D model from the BBP, v14.3.0. It's registered in the RLS, and is located at /home/scec-02/cybershk/runs/genslip_nr_generic1d-gp01.vmod. | ||

| + | |||

| + | <b>Needs to be changed if:</b> | ||

| + | #We change the algorithm for calculating Qs. | ||

| + | |||

| + | <b>Source code location:</b> https://github.com/SCECcode/cybershake-core/HFSim_mem/gen_local_vel.py | ||

| + | |||

| + | <b>Author:</b> Scott Callaghan, modified from Rob Graves' code | ||

| + | |||

| + | <b>Dependencies:</b> None | ||

| + | |||

| + | <b>Executable chain:</b> | ||

| + | gen_local_vel.py | ||

| + | |||

| + | <b>Compile instructions:</b> None | ||

| + | |||

| + | <b>Usage:</b> | ||

| + | |||

| + | <pre>Usage: ./gen_local_vel.py <1D velocity model> <output></pre> | ||

| + | |||

| + | <b>Typical run configuration:</b> Serial; takes less than a second. | ||

| + | |||

| + | <b>Input files:</b> [[CyberShake_Code_Base#BBP velocity file|BBP 1D velocity file]] | ||

| + | |||

| + | <b>Output files:</b> [[CyberShake_Code_Base#Local VM file|Local VM file]] | ||

| + | |||

| + | === Create Dirs === | ||

| + | |||

| + | <b>Purpose:</b> To create a directory for each source. | ||

| + | |||

| + | <b>Detailed description:</b> The high-frequency codes produce many intermediate files. To avoid overloading the filesystem, Create Dirs creates a separate directory for every source. | ||

| + | |||

| + | <b>Needs to be changed if:</b> This code is basically just a wrapper around mkdir, and is unlikely to need changes. | ||
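As an illustration of how small this step is, the following Python sketch creates one directory per line of a list file. It is not the contents of create_dirs.py; the function name, the base_dir argument, and the handling of blank lines are assumptions made for the example.

```python
import os
import tempfile


def create_dirs(list_path, base_dir="."):
    """Create one directory per non-empty line of list_path; return the paths."""
    created = []
    with open(list_path) as fp_in:
        for line in fp_in:
            name = line.strip()
            if not name:
                continue  # skip blank lines
            path = os.path.join(base_dir, name)
            os.makedirs(path, exist_ok=True)  # no error if it already exists
            created.append(path)
    return created


# Demo in a temporary directory, using three made-up source IDs.
with tempfile.TemporaryDirectory() as tmp:
    list_file = os.path.join(tmp, "dirs.txt")
    with open(list_file, "w") as fp_out:
        fp_out.write("121\n182\n\n263\n")
    created = create_dirs(list_file, tmp)
    names = [os.path.basename(p) for p in created]
    all_exist = all(os.path.isdir(p) for p in created)
```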

| + | |||

| + | <b>Source code location:</b> https://github.com/SCECcode/cybershake-core/HFSim_mem/create_dirs.py | ||

| + | |||

| + | <b>Author:</b> Scott Callaghan | ||

| + | |||

| + | <b>Dependencies:</b> None | ||

| + | |||

| + | <b>Executable chain:</b> | ||

| + | create_dirs.py | ||

| + | |||

| + | <b>Compile instructions:</b> None | ||

| + | |||

| + | <b>Usage:</b> | ||

| + | <pre> | ||

| + | Usage: ./create_dirs.py <file with list of dirs> | ||

| + | </pre> | ||

| + | |||

| + | <b>Typical run configuration:</b> Serial, takes just a few seconds. | ||

| + | |||

| + | <b>Input files:</b> File with a directory to create (a source ID) on each line. | ||

| + | |||

| + | <b>Output files:</b> None. | ||

| + | |||

| + | === HF Synth === | ||

| + | |||

| + | <b>Purpose:</b> HF Synth generates a high-frequency stochastic seismogram for one or more rupture variations. | ||

| + | |||

| + | <b>Detailed description:</b> This code wraps multiple broadband platform codes to reduce the number of invocations required. Specifically, it calls: | ||

| + | #srf2stoch_lite(), a reduced-memory version of srf2stoch. We have modified it to call rupgen_genslip() to generate the SRF, rather than reading it in from disk. | ||

| + | #hfsim(), a wrapper for: | ||

| + | ##hb_high(), Rob Graves's original BBP code to produce the seismograms | ||

| + | ##wcc_getpeak(), which calculates PGA for the seismogram | ||

| + | ##wcc_siteamp14(), which performs site amplification. | ||

| + | |||

| + | Vs30 is required, so if it is not passed as a command-line argument, UCVM is called to determine it. | ||

| + | |||

| + | Additionally, hf_synth_lite is able to handle processing on multiple rupture variations, to further reduce the number of invocations. | ||

| + | |||

| + | <b>Needs to be changed if:</b> | ||

| + | #A new version of one of Rob's codes - the high-frequency generator or the site amplification - is needed. We have tried to use whatever the most recent version is on the BBP, for consistency. | ||

| + | #New velocity parameters are needed for the site amplification. | ||

| + | #The format of the rupture geometry files changes. | ||

| + | |||

| + | The makefile needs to be changed if the path to libmemcached, UCVM, Getpar, or the rupture generator changes. | ||

| + | |||

| + | <b>Source code location:</b> https://github.com/SCECcode/cybershake-core/HFSim_mem | ||

| + | |||

| + | <b>Author:</b> wrapper by Scott Callaghan, hb_high(), wcc_getpeak(), and wcc_siteamp14() by Rob Graves | ||

| + | |||

| + | <b>Dependencies:</b> [[CyberShake_Code_Base#Getpar | Getpar]], [[CyberShake_Code_Base#UCVM | UCVM]], [[CyberShake_Code_Base#RupGen-api-v3.3.1 | rupture generator]], [[CyberShake_Code_Base#libmemcached | libmemcached]] | ||

| + | |||

| + | <b>Executable chain:</b> | ||

| + | hf_synth_lite | ||

| + | |||

| + | <b>Compile instructions:</b> Run 'make' in src. | ||

| + | |||

| + | <b>Usage:</b> There is no 'help' usage string, but here's a sample invocation: | ||

| + | <pre> | ||

| + | /projects/sciteam/jmz/CyberShake/software/HFSim_mem/bin/hf_synth_lite | ||

| + | stat=OSI slat=34.6145 slon=-118.7235 | ||

| + | rup_geom_file=e36_rv6_121_0.txt source_id=121 rupture_id=0 | ||

| + | num_rup_vars=5 rup_vars=(0,0,0);(1,1,0);(2,2,0);(3,3,0);(4,4,0) | ||

| + | outfile=121/Seismogram_OSI_4331_121_0_hf_t0.grm | ||

| + | dx=2.0 dy=2.0 tlen=300.0 dt=0.025 | ||

| + | do_site_response=1 vs30=359.1 debug=0 vmod=LA_Basin_BBP_14.3.0.local | ||

| + | </pre> | ||

| + | |||

| + | <b>Typical run configuration:</b> Serial; takes from a few seconds to about a minute per rupture variation, depending on the size of the fault surface. | ||

| + | |||

| + | <b>Input files:</b> [[CyberShake_Code_Base#Local_VM_file | Local velocity file]], [[CyberShake_Rupture_Files#UCERF2_Rupture_Geometry_Files | rupture geometry file]] | ||

| + | |||

| + | <b>Output files:</b> High-frequency seismograms, in the general [[Accessing_CyberShake_Seismograms#Reading_Seismogram_Files | seismogram format]]. | ||

| + | |||

| + | === Combine HF Synth === | ||

| + | |||

| + | <b>Purpose:</b> This code combines the seismograms produced by HF Synth so that there is a single seismogram file for each source/rupture combination. | ||

| + | |||

| + | <b>Detailed description:</b> Since we split up work so that each HF Synth job takes a chunk of rupture variations, we may end up with multiple seismogram files per rupture, each containing some of the rupture variations. This script concatenates the files, using cat, into a single file, ready to be worked on later in the workflow. | ||
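Since the combination is plain concatenation, the same effect as cat can be sketched in a few lines of Python. This is an illustrative equivalent, not the contents of combine_seis.py; the function name and the demo file names are made up.

```python
import os
import shutil
import tempfile


def combine_seis(input_paths, output_path):
    """Concatenate the input files, in the order given, into output_path."""
    with open(output_path, "wb") as fp_out:
        for path in input_paths:
            with open(path, "rb") as fp_in:
                shutil.copyfileobj(fp_in, fp_out)


# Demo: two small binary chunks standing in for per-job seismogram files.
with tempfile.TemporaryDirectory() as tmp:
    chunks = [b"\x00\x01\x02", b"\x03\x04"]
    paths = []
    for i, data in enumerate(chunks):
        path = os.path.join(tmp, "seis_%d.grm" % i)
        with open(path, "wb") as fp_out:
            fp_out.write(data)
        paths.append(path)
    combined_path = os.path.join(tmp, "seis_combined.grm")
    combine_seis(paths, combined_path)
    with open(combined_path, "rb") as fp_in:
        combined = fp_in.read()
```

The order of the inputs matters, because the rupture variations end up in the output file in the order the chunks are listed.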

| + | |||

| + | <b>Needs to be changed if:</b> I can't think of a circumstance where we would need to change this. | ||

| + | |||

| + | <b>Source code location:</b> https://github.com/SCECcode/cybershake-core/HFSim_mem/combine_seis.py | ||

| + | |||

| + | <b>Author:</b> Scott Callaghan | ||

| + | |||

| + | <b>Dependencies:</b> None | ||

| + | |||

| + | <b>Executable chain:</b> | ||

| + | combine_seis.py | ||

| + | cat | ||

| + | |||

| + | <b>Compile instructions:</b> None | ||

| + | |||

| + | <b>Usage:</b> | ||

| + | <pre> | ||

| + | Usage: ./combine_seis.py <seis 0> <seis 1> ... <seis N> <output seis name> | ||

| + | </pre> | ||

| + | |||

| + | <b>Typical run configuration:</b> Serial; takes a few seconds. | ||

| + | |||

| + | <b>Input files:</b> High-frequency seismograms, in the general [[Accessing_CyberShake_Seismograms#Reading_Seismogram_Files | seismogram format]]. | ||

| + | |||

| + | <b>Output files:</b> A single high-frequency seismogram, in the general [[Accessing_CyberShake_Seismograms#Reading_Seismogram_Files | seismogram format]]. | ||

| + | |||

| + | === LF Site Response === | ||

| + | |||

| + | <b>Purpose:</b> This code performs site response modifications to the CyberShake low-frequency seismograms. | ||

| + | |||

| + | <b>Detailed description:</b> The LF Site Response code takes a low-frequency seismogram and some velocity parameters, and outputs a seismogram with site response applied. In Study 15.12, this was a necessary step before combining the low and high frequency seismograms together. Since Vs30 is required, if it's not passed as a command-line argument, then UCVM is called to determine it. | ||

| + | |||

| + | The reason we calculate site response for the low-frequency deterministic seismograms is that we want both the low- and high-frequency results to be for the same site-response condition. For the HF, we used Vs30 directly | ||

| + | for the site-response adjustment, but for the LF we had to use an adjusted VsX value since the grid spacing was 100 m (so Vs30 doesn't make sense). | ||

| + | |||

| + | <b>Needs to be changed if:</b> | ||

| + | #We change the site response algorithm. | ||

| + | #We decide to use different velocity parameters for setting site response. | ||

| + | #The format of the seismogram files changes. | ||

| + | |||

| + | <b>Source code location:</b> https://github.com/SCECcode/cybershake-core/LF_Site_Response | ||

| + | |||

| + | <b>Author:</b> wrapper by Scott Callaghan, site response by Rob Graves | ||

| + | |||

| + | <b>Dependencies:</b> [[CyberShake_Code_Base#Getpar | Getpar]], [[CyberShake_Code_Base#UCVM | UCVM]], [[CyberShake_Code_Base#RupGen-api-v3.3.1 | rupture generator]], [[CyberShake_Code_Base#libmemcached | libmemcached]] | ||

| + | |||

| + | <b>Executable chain:</b> | ||

| + | lf_site_response | ||

| + | |||

| + | <b>Compile instructions:</b> Edit the makefile to point to RupGen, libmemcached, and Getpar, then run 'make' in the src directory. | ||

| + | |||

| + | <b>Usage:</b> | ||

| + | Sample invocation: | ||

| + | <pre> | ||

| + | ./lf_site_response | ||

| + | seis_in=Seismogram_OSI_3923_263_3.grm seis_out=263/Seismogram_OSI_3923_263_3_site_response.grm | ||

| + | slat=34.6145 slon=-118.7235 | ||

| + | module=cb2014 | ||

| + | vs30=359.1 vref=344.7 | ||

| + | </pre> | ||

| + | |||

| + | <b>Typical run configuration:</b> Serial; takes less than a second. | ||

| + | |||

| + | <b>Input files:</b> A low-frequency deterministic seismogram, in the general [[Accessing_CyberShake_Seismograms#Reading_Seismogram_Files | seismogram format]]. | ||

| + | |||

| + | <b>Output files:</b> A low-frequency deterministic seismogram with site response, in the general [[Accessing_CyberShake_Seismograms#Reading_Seismogram_Files | seismogram format]]. | ||

| + | |||

| + | === Merge IM === | ||

| + | |||

| + | <b>Purpose:</b> This code combines low-frequency deterministic and high-frequency stochastic seismograms, then processes them to obtain intensity measures. | ||

| + | |||

| + | <b>Detailed description:</b> The Merge IM code takes an LF and HF seismogram and performs the following processing: | ||

| + | #A high-pass filter is applied to the HF seismogram. | ||

| + | #The LF seismogram is resampled to the same dt as the HF seismogram. | ||

| + | #The two seismograms are combined into a single broadband (BB) seismogram. | ||

| + | #The PSA code is run on the resulting seismogram. | ||

| + | #If desired, the RotD and duration codes are also run on the seismogram. | ||
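The first three steps above can be sketched in Python for a single component. This is a simplified stand-in, not the merge_psa implementation: the text does not specify the filter design or the resampling method, so a first-order high-pass filter and linear interpolation are assumed here, and all function names are hypothetical.

```python
import math


def highpass_first_order(samples, dt, cutoff_hz):
    """Simple first-order (RC-style) high-pass filter; an assumed stand-in."""
    if not samples:
        return []
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    alpha = rc / (rc + dt)
    out = [samples[0]]
    for i in range(1, len(samples)):
        out.append(alpha * (out[i - 1] + samples[i] - samples[i - 1]))
    return out


def resample_linear(samples, dt_in, dt_out, n_out):
    """Linearly interpolate samples (spacing dt_in) onto n_out points spaced dt_out."""
    last = len(samples) - 1
    out = []
    for i in range(n_out):
        pos = i * dt_out / dt_in
        lo = min(int(pos), last)
        hi = min(lo + 1, last)
        frac = pos - lo
        out.append(samples[lo] * (1.0 - frac) + samples[hi] * frac)
    return out


def merge_broadband(lf, lf_dt, hf, hf_dt, cutoff_hz):
    """High-pass the HF trace, resample the LF trace to hf_dt, and sum them."""
    hf_filtered = highpass_first_order(hf, hf_dt, cutoff_hz)
    lf_resampled = resample_linear(lf, lf_dt, hf_dt, len(hf))
    return [l + h for l, h in zip(lf_resampled, hf_filtered)]
```

In the sample invocation further down, freq=1.0 presumably plays the role of cutoff_hz and simulation_out_timeskip=0.025 the role of hf_dt, though that mapping is an inference from the parameter names.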

| + | |||

| + | Merge IM works on a seismogram file at the rupture level, so it assumes that the input files contain multiple rupture variations. | ||

| + | |||

| + | <b>Needs to be changed if:</b> | ||

| + | #We change the filter-and-combine algorithm. | ||

| + | #We decide to modify the post-processing and IM types we want to capture. | ||

| + | #The format of the seismogram files changes. | ||

| + | |||

| + | <b>Source code location:</b> https://github.com/SCECcode/cybershake-core/MergeIM | ||

| + | |||

| + | <b>Author:</b> Scott Callaghan, Rob Graves | ||

| + | |||

| + | <b>Dependencies:</b> [[CyberShake_Code_Base#Getpar | Getpar]] | ||

| + | |||

| + | <b>Executable chain:</b> | ||

| + | merge_psa | ||

| + | |||

| + | <b>Compile instructions:</b> Edit the makefile to point to Getpar, then run 'make' in the src directory. | ||

| + | |||

| + | <b>Usage:</b> | ||

| + | Sample invocation: | ||

| + | <pre> | ||

| + | ./merge_psa | ||

| + | lf_seis=182/Seismogram_OSI_3923_182_23_site_response.grm hf_seis=182/Seismogram_OSI_4331_182_23_hf.grm seis_out=182/Seismogram_OSI_4331_182_23_bb.grm | ||

| + | freq=1.0 comps=2 num_rup_vars=16 | ||

| + | simulation_out_pointsX=2 simulation_out_pointsY=1 | ||

| + | simulation_out_timesamples=12000 simulation_out_timeskip=0.025 | ||

| + | surfseis_rspectra_seismogram_units=cmpersec surfseis_rspectra_output_units=cmpersec2 | ||

| + | surfseis_rspectra_output_type=aa surfseis_rspectra_period=all | ||

| + | surfseis_rspectra_apply_filter_highHZ=20.0 surfseis_rspectra_apply_byteswap=no | ||

| + | out=182/PeakVals_OSI_4331_182_23_bb.bsa | ||

| + | run_rotd=1 rotd_out=182/RotD_OSI_4331_182_23_bb.rotd | ||

| + | run_duration=1 duration_out=182/Duration_OSI_4331_182_23_bb.dur | ||

| + | </pre> | ||

| + | |||

| + | <b>Typical run configuration:</b> Serial; takes 5-30 seconds, depending on the number of rupture variations in the files. | ||

| + | |||

| + | <b>Input files:</b> LF deterministic seismogram and HF stochastic seismogram, in the general [[Accessing_CyberShake_Seismograms#Reading_Seismogram_Files | seismogram format]]. | ||

| + | |||

| + | <b>Output files:</b> BB seismogram, in the general [[Accessing_CyberShake_Seismograms#Reading_Seismogram_Files | seismogram format]]; also [[Accessing_CyberShake_Peak_Acceleration_Data#Reading_Peak_Acceleration_Files | PSA files]], [[Accessing_CyberShake_Peak_Acceleration_Data#Reading_Peak_Acceleration_Files | RotD files]], and [[Accessing_CyberShake_Duration_Data | Duration files]] | ||

== File types == | == File types == | ||

| Line 616: | Line 1,427: | ||

Filename convention: v_sgt-<site>.<p, s, or d> | Filename convention: v_sgt-<site>.<p, s, or d> | ||

| − | Format: 3 files, one each for Vp (*.p), Vs (*.s), and rho (*.d). Each is binary, with 4-byte floats, in fast X, Z (surface down), slow Y order. | + | Format: 3 files, one each for Vp (*.p), Vs (*.s), and rho (*.d). Each is binary, with 4-byte floats, in fast X, Z (surface->down), slow Y order. |

Generated by: UCVM | Generated by: UCVM | ||
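To illustrate the "fast X, Z, slow Y" ordering, here is a Python sketch that computes the byte offset of a grid point and reads its value. It assumes little-endian floats and 0-based indices, neither of which is stated above; the function names and the demo file name are hypothetical.

```python
import os
import struct
import tempfile


def mesh_offset(x, y, z, nx, nz):
    """Byte offset of point (x, y, z): X varies fastest, then Z, then Y."""
    return ((y * nz + z) * nx + x) * 4  # 4 bytes per float


def read_mesh_value(path, x, y, z, nx, nz, byte_order="<"):
    """Read one 4-byte float; byte_order '<' (little-endian) is an assumption."""
    with open(path, "rb") as fp_in:
        fp_in.seek(mesh_offset(x, y, z, nx, nz))
        return struct.unpack(byte_order + "f", fp_in.read(4))[0]


# Demo: a tiny 3 x 2 x 4 mesh whose values equal their own linear index.
nx, ny, nz = 3, 2, 4
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "v_sgt-TEST.s")
    with open(path, "wb") as fp_out:
        for i in range(nx * ny * nz):
            fp_out.write(struct.pack("<f", float(i)))
    value = read_mesh_value(path, 1, 1, 2, nx, nz)
```

Because Y is the slowest dimension, all points sharing a Y index form one contiguous X-Z slice in the file.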

| Line 729: | Line 1,540: | ||

=== Impulse source descriptions === | === Impulse source descriptions === | ||

| − | We generate the initial source description for CyberShake, with the required dt, nt, and filtering, using gen_source, in | + | We generate the initial source description for CyberShake, with the required dt, nt, and filtering, using gen_source, in https://github.com/SCECcode/cybershake-core/SimSgt_V3.0.3/src/ (run 'make get_source'). gen_source hard-codes its parameters; if you need to edit them, change only 'nt', 'dt', and 'flo'. We have been setting flo to twice the CyberShake maximum frequency, to reduce filtering effects at the frequency of interest. gen_source wraps Rob Graves's source generator, which we use for consistency. |

| + | |||

| + | To generate a source for a component, run | ||

| + | <pre> | ||

| + | $>./gen_source xsrc=0 ysrc=0 zsrc=0 <fxsrc|fysrc|fzsrc>=1 moment=1e20 | ||

| + | </pre> | ||

Once this RWG source is generated, we then use AWP-GPU-SGT/utils/data/format_source.py to reprocess the RWG source into an AWP-source friendly format. This involves reformatting the file and multiplying all values by 1e15 for unit conversion. Different files must be produced for X and Y coordinates, since in the AWP format different columns are used for different components. | Once this RWG source is generated, we then use AWP-GPU-SGT/utils/data/format_source.py to reprocess the RWG source into an AWP-source friendly format. This involves reformatting the file and multiplying all values by 1e15 for unit conversion. Different files must be produced for X and Y coordinates, since in the AWP format different columns are used for different components. | ||

| Line 807: | Line 1,623: | ||

Generated by: AWP-ODC-SGT CPU and GPU | Generated by: AWP-ODC-SGT CPU and GPU | ||

| − | Used by: PostAWP | + | Used by: PostAWP, NanCheck |

=== RWG SGT === | === RWG SGT === | ||

| Line 834: | Line 1,650: | ||

Generated by: PostAWP | Generated by: PostAWP | ||

| − | Used by: | + | Used by: CheckSgt, DirectSynth |

=== SGT header file === | === SGT header file === | ||

| Line 860: | Line 1,676: | ||

}; | }; | ||

</pre> | </pre> | ||

| − | <li>The sgtindex structures, described below in C. There is one of these for each point in the SGTs, and they're used to determine the X/Y/Z indices of all the SGT points.</li> | + | <li>The sgtindex structures, described below in C. There is one of these for each point in the SGTs, and they're used to determine the X/Y/Z indices of all the SGT points. Note that the current way of packing the X,Y,Z coordinates into the long allows for 6 digits (so maximum 1M grid points) for each component.</li> |

<pre> | <pre> | ||

struct sgtindex /* indices for all 'globnp' SGT locations */ | struct sgtindex /* indices for all 'globnp' SGT locations */ | ||

| Line 925: | Line 1,741: | ||

Generated by: PostAWP | Generated by: PostAWP | ||

| − | Used by: | + | Used by: DirectSynth |

| + | |||

| + | === Velocity Info file === | ||

| + | |||

| + | Purpose: Contains the 3D velocity information needed for stochastic jobs | ||

| + | |||

| + | Filename convention: velocity_info_<site>.txt | ||

| + | |||

| + | Format: Text format, three lines: | ||

| + | |||

| + | <pre> | ||

| + | Vs30 = <Vs30 value> | ||

| + | Vs500 = <Vs500 value> | ||

| + | VsD500 = <VsD500 value> | ||

| + | </pre> | ||

| + | |||

| + | Generated by: Velocity Info job | ||

| + | |||

| + | Used by: Sub Stoch DAX generator, to add these values as command-line arguments to HF Synth and LF Site Response jobs. | ||
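As an illustration of the three-line format above, here is a minimal parsing sketch. The function name is hypothetical, and the numeric values in the sample are made up for demonstration only.

```python
def parse_velocity_info(text):
    """Parse 'key = value' lines into a dict of floats, skipping other lines."""
    values = {}
    for line in text.splitlines():
        if "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = float(value.strip())
    return values


# Illustrative contents only; real values come from the Velocity Info job.
sample = "Vs30 = 302.5\nVs500 = 810.0\nVsD500 = 1200.0\n"
info = parse_velocity_info(sample)
print(info["Vs30"], info["Vs500"], info["VsD500"])
```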

| + | |||

| + | === BBP 1D Velocity file === | ||

| + | |||

| + | Purpose: Contains 1D velocity profile information | ||

| + | |||

| + | Filename convention: The only one currently in use in CyberShake is /home/scec-02/cybershk/runs/genslip_nr_generic1d-gp01.vmod . | ||

| + | |||

| + | Format: Text format: | ||

| + | |||

| + | <pre> | ||

| + | <number of thickness layers L> | ||

| + | <layer 1 thickness in km> <Vp> <Vs> <density> <not used> <not used> | ||

| + | <layer 2 thickness in km> <Vp> <Vs> <density> <not used> <not used> | ||

| + | ... | ||

| + | <layer L thickness in km> <Vp> <Vs> <density> <not used> <not used> | ||

| + | </pre> | ||

| + | |||

| + | Note that the last layer has thickness 999.0. | ||

| + | |||

| + | Generated by: Rob Graves | ||

| + | |||

| + | Used by: Local VM job | ||
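A minimal sketch of reading this layered format follows. The `Layer` type and `parse_vmod` function are hypothetical names, the sample values are invented for illustration, and the two unused trailing columns are simply ignored.

```python
from typing import NamedTuple


class Layer(NamedTuple):
    thickness_km: float
    vp: float
    vs: float
    density: float


def parse_vmod(text):
    """Return the list of layers; the first non-blank line gives the layer count."""
    lines = [ln for ln in text.splitlines() if ln.strip()]
    n_layers = int(lines[0].split()[0])
    layers = []
    for ln in lines[1:1 + n_layers]:
        fields = ln.split()
        # Keep thickness, Vp, Vs, density; drop the two unused columns.
        layers.append(Layer(*(float(v) for v in fields[:4])))
    if len(layers) != n_layers:
        raise ValueError(f"expected {n_layers} layers, found {len(layers)}")
    return layers


# Invented two-layer profile; the last layer uses the 999.0 thickness noted above.
sample = "2\n0.5 1.8 0.6 2.1 0 0\n999.0 6.0 3.5 2.7 0 0\n"
layers = parse_vmod(sample)
```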

| + | |||

| + | === Local VM file === | ||

| + | |||

| + | Purpose: Contains 1D velocity profile information for use with stochastic codes | ||

| + | |||

| + | Filename convention: The only one currently in use in CyberShake is LA_Basin_BBP_14.3.0.local . | ||

| + | |||

| + | Format: Text format: | ||

| + | |||

| + | <pre> | ||

| + | <number of thickness layers L> | ||

| + | <layer 1 thickness in km> <Vp> <Vs> <density> <Qs> <Qs> | ||

| + | <layer 2 thickness in km> <Vp> <Vs> <density> <Qs> <Qs> | ||

| + | ... | ||

| + | <layer L thickness in km> <Vp> <Vs> <density> <Qs> <Qs> | ||

| + | </pre> | ||

| + | |||

| + | Note that the last layer has thickness '0.0', indicating it has no bottom. | ||

| + | |||

| + | Generated by: Local VM Job | ||

| + | |||

| + | Used by: | ||

| + | |||

| + | === Missing variations file === | ||

| + | |||

| + | Purpose: Lists the variations which the Check DB stage has found are missing. | ||

| + | |||

| + | Filename convention: DB_Check_Out_<PSA or RotD or Duration>_<site> | ||

| + | |||

| + | Format: For each source and rupture pair with missing variations, the following record is output in text format: | ||

| + | |||

| + | <pre> | ||

| + | <source ID> <rupture ID> <number N of missing rupture variations> | ||

| + | <ID of first missing rupture variation> | ||

| + | <ID of second missing rupture variation> | ||

| + | ... | ||

| + | <ID of Nth missing rupture variation> | ||

| + | </pre> | ||

| + | |||

| + | Originally, a file in this format could be fed directly back into the DAX generator, but that capability has not been used for many years and may no longer be functional. | ||

| + | |||

| + | Generated by: Check DB Site | ||

| + | |||

| + | Used by: none | ||
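The record layout above can be read with a short token-based parser. This is a sketch only: the function name is hypothetical, and it assumes all IDs are integers and that records follow one another with only whitespace between them.

```python
def parse_missing_variations(text):
    """Map (source ID, rupture ID) to the list of missing rupture variation IDs."""
    tokens = text.split()
    missing = {}
    i = 0
    while i < len(tokens):
        # Record header: source ID, rupture ID, count N of missing variations.
        source_id, rupture_id, count = (int(t) for t in tokens[i:i + 3])
        i += 3
        ids = [int(t) for t in tokens[i:i + count]]
        if len(ids) != count:
            raise ValueError(f"truncated record for source {source_id}, rupture {rupture_id}")
        missing[(source_id, rupture_id)] = ids
        i += count
    return missing


# Invented example: two records with 2 and 1 missing variations.
sample = "12 3 2\n5\n9\n40 0 1\n17\n"
missing = parse_missing_variations(sample)
```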

| + | |||

| + | === DB Report file === | ||

| + | |||

| + | Purpose: Provides PSA data for a run in a text format. | ||

| + | |||

| + | Filename convention: <site>_ERF<erf id>_report_<date>.txt | ||

| + | |||

| + | Format: It's a text file with the following header: | ||

| + | Site_Name ERF_ID Source_ID Rupture_ID Rup_Var_ID Rup_Var_Scenario_ID Mag Prob Grid_Spacing Num_Rows Num_Columns Period Component SA | ||

| + | The file is sorted by fast Rup_Var_ID, Rupture_ID, Source_ID, Period, slow Component. | ||

| + | |||

| + | Generated by: DB Report | ||

| + | |||

| + | Used by: none, output data product | ||
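For downstream processing, the report can be loaded into one dictionary per row keyed by the header names. This sketch assumes the columns are whitespace-delimited and that no field contains embedded whitespace; neither is confirmed above, the function name is hypothetical, and the sample row is invented.

```python
def parse_db_report(text):
    """Return one dict per data row, keyed by the column names in the header line."""
    lines = [ln for ln in text.splitlines() if ln.strip()]
    columns = lines[0].split()
    rows = []
    for ln in lines[1:]:
        fields = ln.split()
        if len(fields) != len(columns):
            raise ValueError(f"expected {len(columns)} fields, got {len(fields)}")
        rows.append(dict(zip(columns, fields)))
    return rows


header = ("Site_Name ERF_ID Source_ID Rupture_ID Rup_Var_ID Rup_Var_Scenario_ID "
          "Mag Prob Grid_Spacing Num_Rows Num_Columns Period Component SA")
# Invented row for illustration; values are kept as strings.
sample = header + "\nUSC 36 10 2 0 5 6.55 1.0e-4 1.0 12 40 3.0 X 0.123\n"
rows = parse_db_report(sample)
```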

| + | |||

| + | === Hazard Curve === | ||

| + | |||

| + | Purpose: Contains a hazard curve in text, PNG, or PDF format. | ||

| + | |||

| + | Filename convention: <site>_ERF<erf id>_Run<run id>_<IM type>_<period>sec_<IM component>_<date run completed>.<pdf|txt|png> | ||

| + | |||

| + | Format: The PNG and PDF formats contain an image of the curve. The PDF format also has an extended legend. The TXT file contains a list of (X,Y) points which describe the curve. | ||

| + | |||

| + | Generated by: Curve Calc | ||

| + | |||

| + | Used by: none, output data product | ||

| + | |||

| + | === Disaggregation file === | ||

| + | |||

| + | Purpose: Contains disaggregation results for a single run in text, PNG, or PDF format. | ||

| + | |||

| + | Filename convention: <site>_ERF<erf id>_Run<run_id>_Disagg<POE|IM>_<disagg level>_<IM type>_<period>sec_<run date>.<txt|png|pdf> | ||

| + | |||

| + | Format: The PNG and PDF formats contain a plot of the disaggregation results, showing magnitude vs distance and color-coding based on epsilon. The PDF and TXT formats contain additional information about individual source contributions, in the following format: | ||

| + | |||

| + | <pre>Summary data | ||

| + | Parameters used to create disaggregation | ||

| + | Disaggregation bin data: | ||

| + | Dist Mag <breakout by epsilon values> | ||

| + | <Breakdown of contribution by distance, magnitude, and epsilon range> | ||

| + | |||

| + | Disaggregation Source List Info: | ||

| + | Source# %Contribution TotExceedRate SourceName DistRup DistX DistSeis DistJB | ||

| + | <list of contributing sources, in decreasing order of % contribution> | ||

| + | </pre> | ||

| + | |||

| + | Generated by: Disaggregation | ||

| + | |||

| + | Used by: none, output data product | ||

== Dependencies == | == Dependencies == | ||

| + | |||

| + | The following are external software dependencies used by CyberShake software modules. | ||

=== Getpar === | === Getpar === | ||

| + | |||

| + | Purpose: A library written in C which enables parsing of key-value command-line parameters, and enforcement of required parameters. Rob Graves uses it in his codes. | ||

| + | |||

| + | How to obtain: Rob supplied a copy; it is in the CyberShake repository at https://github.com/SCECcode/cybershake-core/tree/main/Getpar . | ||

| + | |||

| + | Special installation instructions: Run 'make' in Getpar/getpar/src; this will make the library, libget.a, and install it in the lib directory, where CyberShake codes will expect it. | ||

=== MySQLdb === | === MySQLdb === | ||

| + | |||

| + | This library has been deprecated in favor of pymysql. | ||

| + | |||

| + | === pymysql === | ||

| + | |||

| + | Purpose: MySQL bindings for Python. | ||

| + | |||

| + | How to obtain: pip3 install pymysql . Documentation is at https://pypi.org/project/PyMySQL/ . | ||

| + | |||

| + | Special installation instructions: None; pip3 shouldn't have any issues. | ||

=== UCVM === | === UCVM === | ||

| + | |||

| + | Purpose: Supplies the query tools needed to populate a mesh with velocity information. | ||

| + | |||

| + | How to obtain: The most recent version of UCVM can be found at [[UCVM#Current_UCVM_Software_Releases|Current UCVM Software Releases]]. As of October 2017, we have only integrated the C version of UCVM into CyberShake. | ||

| + | |||

| + | Special installation instructions: Following the standard installation instructions for a cluster should work (running ./ucvm_setup.py). You will want to install CVM-S4, CVM-S426, CVM-S4.M01, CVM-H, CenCal, CCA-06, and CCA 1D velocity models for CyberShake. | ||

=== libcfu === | === libcfu === | ||

| + | |||

| + | Purpose: Provides a hash table library for a variety of CyberShake codes. | ||

| + | |||

| + | How to obtain: https://sourceforge.net/projects/libcfu/ . Documentation is at http://libcfu.sourceforge.net/libcfu.html . | ||

| + | |||

| + | Special installation instructions: Follow the instructions, and install into the utils directory. | ||

| + | |||

| + | === FFTW === | ||

| + | |||