Difference between revisions of "UCVM User Guide"

(Redirected page to UCVM 14.3.0 User Guide) |

|||

| (27 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

| + | #REDIRECT [[UCVM 14.3.0 User Guide]] | ||

| + | |||

= Overview = | = Overview = | ||

| − | The Unified Community Velocity Model Framework [[UCVM]] is a software package | + | The Unified Community Velocity Model Framework [[UCVM]] is a software package which provides a standard interface to several regional community velocity models. Any differences in coordinate projections within each model are hidden so that the researcher queries any of the models by longitude, latitude, and Z (where Z is depth from surface or elevation relative to MSL). UCVM also provides several other unique capabilities and products, including: |

| + | |||

| + | * Seamlessly combine two or more models into a composite model for querying | ||

| + | * Optionally include a California statewide geotechnical layer and interpolate it with the crustal velocity models | ||

| + | * Extract a 3D mesh or CVM Etree (octree) of material properties from any combination of models | ||

| + | * Standard California statewide elevation and Vs30 data map is provided | ||

| + | * Miscellaneous tools are provided for creating 2D etree maps, and optimizing etrees | ||

| + | * Numerically smooth discontinuities at the interfaces between different regional models | ||

| + | * Add support for future velocity models with the extendable interface | ||

| + | |||

| − | The software is MPI-capable, and has been shown to scale to 3300+ cores on NICS Kraken in a mesh generator. It allows application programs to tailor which set of velocity models are linked in to minimize memory footprint. It is also compatible with grid resources that have data stage-in policies for jobs, and light-weight kernels that support static libraries only. This package has been tested on NCCS Jaguar, NICS Kraken, and USC HPCC. | + | Current list of supported velocity models: SCEC CVM-H, SCEC CVM-S, SCEC CVM-SI, SCEC CVM-NCI, Magistrale WFCVM, USGS CenCalVM, Graves Cape Mendocino, Lin-Thurber Statewide, Tape SoCal. |

| + | |||

| + | The API itself is written in the C language. The software is MPI-capable, and has been shown to scale to 3300+ cores on NICS Kraken in a mesh generator. It allows application programs to tailor which set of velocity models are linked in to minimize memory footprint. It is also compatible with grid resources that have data stage-in policies for jobs, and light-weight kernels that support static libraries only. This package has been tested on NCCS Jaguar, NICS Kraken, and USC HPCC. | ||

| Line 9: | Line 22: | ||

The system requirements are as follows: | The system requirements are as follows: | ||

| − | # UNIX operating system (Linux | + | # UNIX operating system (Linux) |

# GNU gcc/gfortran compilers (MPI wrappers such as mpicc are OK) | # GNU gcc/gfortran compilers (MPI wrappers such as mpicc are OK) | ||

# tar for opening the compressed files | # tar for opening the compressed files | ||

| − | # | + | # [http://www.cs.cmu.edu/~euclid/ Euclid Etree library] (recommend [http://hypocenter.usc.edu/research/ucvm/euclid3-1.3.tar.gz euclid3-1.3.tar.gz]) |

| − | # Proj.4 | + | # [http://trac.osgeo.org/proj/ Proj.4 Projection Library] (recommend [http://download.osgeo.org/proj/proj-4.7.0.tar.gz proj-4.7.0.tar.gz]) |

| Line 20: | Line 33: | ||

# Standard community velocity models: SCEC CVM-H, SCEC CVM-S, SCEC CVM-SI, SCEC CVM-NCI, Magistrale WFCVM, USGS CenCalVM, Graves Cape Mendocino, Lin-Thurber Statewide, Tape SoCal | # Standard community velocity models: SCEC CVM-H, SCEC CVM-S, SCEC CVM-SI, SCEC CVM-NCI, Magistrale WFCVM, USGS CenCalVM, Graves Cape Mendocino, Lin-Thurber Statewide, Tape SoCal | ||

# NetCDF (network Common Data Form) library [http://www.unidata.ucar.edu/downloads/netcdf/index.jsp NetCDF] | # NetCDF (network Common Data Form) library [http://www.unidata.ucar.edu/downloads/netcdf/index.jsp NetCDF] | ||

| − | |||

= Installation = | = Installation = | ||

| Line 54: | Line 66: | ||

For the Euclid Etree library: | For the Euclid Etree library: | ||

| − | + | <pre> | |

| − | + | $ tar zxvf euclid3-1.3.tar.gz | |

| − | + | $ cd ./euclid3-1.3/libsrc | |

| + | $ make all | ||

| + | </pre> | ||

| − | If installing the Etree library on a Lustre filesystem (NICS Kraken | + | If installing the Etree library on a Lustre filesystem on a Cray system (NICS Kraken, NCCS Jaguar), you must enable the Cray IOBUF module prior to compiling the library. Also, |

| + | edit the ./libsrc/Makefile to add the compile flags "-DUSE_IOBUF -DUSE_IOBUF_LOCAL_MACROS". | ||

| − | For the Proj.4 library: | + | For the Proj.4 library (version 4.7.0): |

| − | + | <pre> | |

| − | + | $ tar zxvf proj-4.7.0.tar.gz |

| − | + | $ cd proj-4.7.0 | |

| − | + | $ ./configure --prefix=<your desired install directory> | |

| + | $ make; make install | ||

| + | </pre> | ||

Be sure to note where you installed these two packages. You will need this information later in the installation process. | Be sure to note where you installed these two packages. You will need this information later in the installation process. | ||

| Line 72: | Line 89: | ||

For the SCEC CVM-H model: | For the SCEC CVM-H model: | ||

<pre> | <pre> | ||

| − | + | $ tar xvf cvmh-11.9.1.tar.gz | |

| − | + | $ cd cvmh-11.9.1 | |

| − | + | $ ./configure --prefix=<your desired install directory> |

| − | + | $ make; make install | |

</pre> | </pre> | ||

| − | |||

For the SCEC CVM-S model: | For the SCEC CVM-S model: | ||

<pre> | <pre> | ||

| − | + | $ tar xvf cvms-11.11.0.tar.gz | |

| − | + | $ cd cvms/src | |

| − | + | $ make all | |

</pre> | </pre> | ||

| − | |||

Again, be sure to note where you installed the community velocity models. | Again, be sure to note where you installed the community velocity models. | ||

| Line 99: | Line 114: | ||

UCVM_INSTALL_DIR=<desired UCVM install path> | UCVM_INSTALL_DIR=<desired UCVM install path> | ||

</pre> | </pre> | ||

| − | |||

In a bash shell, for example, the ETREE_DIR environment variable can be declared with the command: | In a bash shell, for example, the ETREE_DIR environment variable can be declared with the command: | ||

| − | + | <pre> | |

| − | + | $ ETREE_DIR=/opt/etree;export ETREE_DIR | |

| + | </pre> | ||

In a csh shell: | In a csh shell: | ||

| − | + | <pre> | |

| − | + | $ setenv ETREE_DIR /opt/etree | |

| + | </pre> | ||

Now unpack the UCVM software distribution: | Now unpack the UCVM software distribution: | ||

| − | + | <pre> | |

| + | $ tar zxvf ucvm-12.2.0.tar.gz | ||

| + | </pre> | ||

The build configuration differs slightly depending on where you wish to run UCVM. On a standard Linux system with gcc, run the following | The build configuration differs slightly depending on where you wish to run UCVM. On a standard Linux system with gcc, run the following | ||

| − | commands to | + | commands to configure the software with CVM-H and CVM-S enabled: |

| − | |||

<pre> | <pre> | ||

| − | + | $ cd ucvm-12.2.0 | |

| − | + | $ ./configure --prefix=${UCVM_INSTALL_DIR} --with-etree-include-path="${ETREE_DIR}/libsrc" | |

--with-etree-lib-path="${ETREE_DIR}/libsrc" --with-proj4-include-path="${PROJ4_DIR}/include" | --with-etree-lib-path="${ETREE_DIR}/libsrc" --with-proj4-include-path="${PROJ4_DIR}/include" | ||

--with-proj4-lib-path="${PROJ4_DIR}/lib" --enable-model-cvmh --enable-model-cvms | --with-proj4-lib-path="${PROJ4_DIR}/lib" --enable-model-cvmh --enable-model-cvms | ||

| Line 123: | Line 140: | ||

--with-gctpc-lib-path="${CVMH_DIR}/lib" --with-cvms-include-path="${CVMS_DIR}/include" | --with-gctpc-lib-path="${CVMH_DIR}/lib" --with-cvms-include-path="${CVMS_DIR}/include" | ||

--with-cvms-lib-path="${CVMS_DIR}/lib" --with-cvmh-model-path="${CVMH_DIR}/model" --with-cvms-model-path="${CVMS_DIR}/src" | --with-cvms-lib-path="${CVMS_DIR}/lib" --with-cvmh-model-path="${CVMH_DIR}/model" --with-cvms-model-path="${CVMS_DIR}/src" | ||

| − | |||

</pre> | </pre> | ||

| Line 129: | Line 145: | ||

Note that unless you force static linking with the "--enable-static" command line option, you must update your LD_LIBRARY_PATH environment variable with the paths to the Etree, Proj.4, and model shared libraries. | Note that unless you force static linking with the "--enable-static" command line option, you must update your LD_LIBRARY_PATH environment variable with the paths to the Etree, Proj.4, and model shared libraries. | ||

| − | |||

The configure command is quite long, so an example shell script with this command is located in ./scripts/autoconf/basic_install.sh. It assumes that you previously defined the environment variables described in this installation guide. The script can be executed with the following shell commands: | The configure command is quite long, so an example shell script with this command is located in ./scripts/autoconf/basic_install.sh. It assumes that you previously defined the environment variables described in this installation guide. The script can be executed with the following shell commands: | ||

| + | <pre> | ||

| + | $ cd scripts/autoconf | ||

| + | $ ./basic_install.sh | ||

| + | </pre> | ||

| + | Compile the software by executing the following command in the main directory: | ||

<pre> | <pre> | ||

| − | + | $ make | |

| − | |||

</pre> | </pre> | ||

| + | Check that the package was built correctly by executing the following command in the main directory: | ||

| + | <pre> | ||

| + | $ make check | ||

| + | </pre> | ||

| − | + | Verify that all unit tests and acceptance tests pass. Depending on your system and your UCVM configuration, testing may take up to 15-30 minutes. | |

| − | + | Install the software to the desired target location by executing the following command in the main directory: | |

| − | + | <pre> | |

| − | + | $ make install | |

| − | + | </pre> | |

| − | Install the software to the desired target location | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| + | A full list of supported standard velocity models and options can be listed with the command: | ||

| + | <pre> | ||

| + | $ ./configure --help | ||

| + | </pre> | ||

== Updating the Configuration == | == Updating the Configuration == | ||

| Line 163: | Line 182: | ||

Additional options must be passed to the ./configure script on a more exotic Linux system, such as a host that requires static linking (NICS Kraken), or has a Lustre high-performance filesystem (NCCS Jaguar, NICS Kraken). The following example shows how to enable static linking and Cray IOBUF buffering: | Additional options must be passed to the ./configure script on a more exotic Linux system, such as a host that requires static linking (NICS Kraken), or has a Lustre high-performance filesystem (NCCS Jaguar, NICS Kraken). The following example shows how to enable static linking and Cray IOBUF buffering: | ||

| − | + | <pre> | |

| − | + | $ module add iobuf | |

| − | + | $ ./configure --prefix=${UCVM_INSTALL_DIR} --enable-static --enable-iobuf --enable-model-cvmh --enable-model-cvms | |

| − | |||

--with-etree-include-path="${ETREE_DIR}/libsrc" --with-etree-lib-path="${ETREE_DIR}/libsrc" | --with-etree-include-path="${ETREE_DIR}/libsrc" --with-etree-lib-path="${ETREE_DIR}/libsrc" | ||

--with-proj4-include-path="${PROJ4_DIR}/include" --with-proj4-lib-path="${PROJ4_DIR}/lib" | --with-proj4-include-path="${PROJ4_DIR}/include" --with-proj4-lib-path="${PROJ4_DIR}/lib" | ||

| Line 172: | Line 190: | ||

--with-gctpc-lib-path="${CVMH_DIR}/lib" --with-cvms-include-path="${CVMS_DIR}/include" | --with-gctpc-lib-path="${CVMH_DIR}/lib" --with-cvms-include-path="${CVMS_DIR}/include" | ||

--with-cvms-lib-path="${CVMS_DIR}/lib" --with-cvmh-model-path="${CVMH_DIR}/model" --with-cvms-model-path="${CVMS_DIR}/src" CC=cc | --with-cvms-lib-path="${CVMS_DIR}/lib" --with-cvmh-model-path="${CVMH_DIR}/model" --with-cvms-model-path="${CVMS_DIR}/src" CC=cc | ||

| − | + | $ make; make check; make install | |

| + | </pre> | ||

| + | |||

| + | <p>It is generally advisable to compile all components of UCVM using the same compilation system. NICS Kraken, for example, uses the Cray compiler wrappers (cc not gcc). As such, on this system, you must use CC to compile the Euclid library.</p> | ||

A few notes: | A few notes: | ||

| Line 248: | Line 269: | ||

--with-tape-model-path location of the TAPE model files | --with-tape-model-path location of the TAPE model files | ||

</pre> | </pre> | ||

| − | |||

== Unit and Acceptance Tests == | == Unit and Acceptance Tests == | ||

| − | Both the unit tests and acceptance tests may be executed with the command: | + | Both the unit tests and acceptance tests may be executed with the following command from the main directory: |

| − | + | <pre> | |

| + | $ make check | ||

| + | </pre> | ||

All tests should result in a PASS. | All tests should result in a PASS. | ||

| Line 268: | Line 290: | ||

This indicates that UCVM was linked against one or more shared libraries and the dynamic library loader cannot find the actual .so library at run-time. The solution is to update your LD_LIBRARY_PATH to include the directory containing the library mentioned in the error message. For example, the following command adds a new search directory to LD_LIBRARY_PATH in a csh shell: | This indicates that UCVM was linked against one or more shared libraries and the dynamic library loader cannot find the actual .so library at run-time. The solution is to update your LD_LIBRARY_PATH to include the directory containing the library mentioned in the error message. For example, the following command adds a new search directory to LD_LIBRARY_PATH in a csh shell: | ||

| + | <pre> | ||

| + | $ setenv LD_LIBRARY_PATH /home/USER/opt/somepkg/lib:${LD_LIBRARY_PATH} | ||

| + | </pre> | ||

| + | |||

| + | Alternatively, if you do not want to work with shared libraries, UCVM can be compiled statically with the "--enable-static" configuration option. | ||

| − | + | === Proj. 4 Error: major axis or radius = 0 or not given === | |

| − | + | On systems with home filesystems that are not viewable to compute nodes (such as NICS Kraken), you may encounter errors with Proj.4 when trying to run any component of UCVM on compute nodes. This happens because Proj.4 relies on a file called proj_defs.dat, which is located in the ${MAKE_INSTALL_LOCATION}/share/proj directory. For example, if you had configured Proj.4 with ./configure --prefix=/not_viewable_to_compute_nodes/proj-4.7.0, Proj.4 would search for proj_defs.dat in /not_viewable_to_compute_nodes/proj-4.7.0/share/proj/proj_defs.dat. Because the compute nodes cannot read that file, UCVM throws the error "Proj.4 Error: major axis or radius = 0 or not given" and your job fails. |

| + | The only fix that seems to work is to make sure your --prefix directory is visible to the compute nodes and to run make install there. Documentation suggests that you can set the PROJ_LIB environment variable; however, this does not seem to work correctly without modifications to the Proj.4 source code. | ||
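One way to confirm the diagnosis above before submitting a large job is to test, from a compute node, whether proj_defs.dat is readable under the Proj.4 prefix. The following is a minimal sketch, not part of UCVM: the function name check_proj_defs is hypothetical, and it assumes the share/proj/proj_defs.dat layout described in this section.

```shell
#!/bin/sh
# Return 0 if proj_defs.dat is readable under the given Proj.4 prefix.
#   $1  the directory passed to ./configure --prefix when building Proj.4
# check_proj_defs is a hypothetical helper, not a UCVM or Proj.4 command.
check_proj_defs() {
    defs="$1/share/proj/proj_defs.dat"
    if [ -r "$defs" ]; then
        echo "OK: $defs is readable on $(hostname)"
    else
        echo "MISSING: $defs is not readable on $(hostname)" >&2
        return 1
    fi
}
```

To use it, call check_proj_defs "${PROJ4_DIR}" inside a small batch job so that the test runs on a compute node rather than on the login node. A MISSING result there points to the visibility problem described above.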

=== Building Against CVM-H 11.2.0 === | === Building Against CVM-H 11.2.0 === | ||

| − | UCVM is designed to work with CVM-H 11.9. | + | UCVM is designed to work with CVM-H 11.9.1 by default, but it can optionally be built with CVM-H 11.2.0. Use this modified configure command to configure the package with CVM-H 11.2.0 and CVM-S: |

<pre> | <pre> | ||

| − | + | $ cd ucvm-12.2.0 | |

| − | + | $ ./configure --prefix=${UCVM_INSTALL_DIR} --with-etree-include-path="${ETREE_DIR}/libsrc" | |

--with-etree-lib-path="${ETREE_DIR}/libsrc" --with-proj4-include-path="${PROJ4_DIR}/include" | --with-etree-lib-path="${ETREE_DIR}/libsrc" --with-proj4-include-path="${PROJ4_DIR}/include" | ||

--with-proj4-lib-path="${PROJ4_DIR}/lib" --enable-model-cvmh --enable-model-cvms | --with-proj4-lib-path="${PROJ4_DIR}/lib" --enable-model-cvmh --enable-model-cvms | ||

| Line 290: | Line 318: | ||

Note that the CVM-H model and gctpc paths have changed, and there is now a CFLAGS argument. After configuring, you may compile, check, and install normally. | Note that the CVM-H model and gctpc paths have changed, and there is now a CFLAGS argument. After configuring, you may compile, check, and install normally. | ||

| − | |||

= Framework Configuration = | = Framework Configuration = | ||

| Line 315: | Line 342: | ||

# | # | ||

# SCEC CVM-H | # SCEC CVM-H | ||

| − | cvmh_modelpath=/home/scec-00/USER/opt/aftershock/cvmh-11.9. | + | cvmh_modelpath=/home/scec-00/USER/opt/aftershock/cvmh-11.9.1/model |

# | # | ||

# USGS Bay Area high-rez and extended etrees | # USGS Bay Area high-rez and extended etrees | ||

| Line 657: | Line 684: | ||

User-defined maps can be created with the ./bin/grd2etree utility. | User-defined maps can be created with the ./bin/grd2etree utility. | ||

| − | |||

= Framework Description = | = Framework Description = | ||

| Line 824: | Line 850: | ||

| − | ==== Parallel | + | ==== Parallel ucvm2etree ==== |

The MPI version is useful for large and very large etrees. It involves three programs: ucvm2etree-extract-MPI, ucvm2etree-sort-MPI, and ucvm2etree-merge-MPI. All three take the same configuration file as the one used by the serial version. The ucvm2etree-extract-MPI utility generates the set of points that are to be used in the etree, extracts those points from the specified CVMs, and saves them in a set of N files (where N is the process count). The ucvm2etree-sort-MPI utility sorts the points in each flat file by octant key. The third utility, ucvm2etree-merge-MPI, merges these locally sorted points into the final etree file. | The MPI version is useful for large and very large etrees. It involves three programs: ucvm2etree-extract-MPI, ucvm2etree-sort-MPI, and ucvm2etree-merge-MPI. All three take the same configuration file as the one used by the serial version. The ucvm2etree-extract-MPI utility generates the set of points that are to be used in the etree, extracts those points from the specified CVMs, and saves them in a set of N files (where N is the process count). The ucvm2etree-sort-MPI utility sorts the points in each flat file by octant key. The third utility, ucvm2etree-merge-MPI, merges these locally sorted points into the final etree file. | ||

| Line 859: | Line 885: | ||

The program reads in input files that are in flat file format. It can output a merged Etree in either Etree format or flat file format. Because of space considerations, however, it strips the output flat file format to a pre-order list of octants (16-byte key, 12-byte payload). The missing addr field is redundant and can be regenerated from the key field. | The program reads in input files that are in flat file format. It can output a merged Etree in either Etree format or flat file format. Because of space considerations, however, it strips the output flat file format to a pre-order list of octants (16-byte key, 12-byte payload). The missing addr field is redundant and can be regenerated from the key field. | ||

| + | |||

| + | ===== MPI Version Buffer Values ===== | ||

| + | |||

| + | There are a number of buffer values in the ucvm.conf file that must be accurately set for extraction, sorting, and merging to take place. Please see below for a list of those values and a description of the purpose of each one. | ||

| + | |||

| + | '''buf_etree_cache''' | ||

| + | |||

| + | Units: MB<br /> | ||

| + | Recommended Value: 128 usually works well, you can increase or decrease as need be<br /> | ||

| + | For Process: ucvm2etree-extract-MPI<br /> | ||

| + | Description: Used in the merging process during the etree_open call. According to the documentation of etree_open, this is more precisely defined as the "internal buffer space being allocated in megabytes". The value defined represents the memory buffer for etree traversing, finding, writing, etc. | ||

| + | |||

| + | '''buf_extract_mem_max_oct''' | ||

| + | |||

| + | Units: Number of octants<br /> | ||

| + | Recommended Value: 2097152<br /> | ||

| + | For Process: ucvm2etree-extract-MPI<br /> | ||

| + | Description: Used in the extraction process as the number of octants to buffer before writing to disk. It reserves, in memory, a placeholder for this number of octants. The size of each octant is 52 bytes, so the actual memory allocation is 52 * this value. | ||

| + | |||

| + | '''buf_extract_ffile_max_oct''' | ||

| + | |||

| + | Units: Number of octants<br /> | ||

| + | Recommended Value: Varies. It must be greater than total output file size divided by the number of processes.<br /> | ||

| + | For Process: ucvm2etree-extract-MPI<br /> | ||

| + | Description: This value is ''critical''. It defines the maximum number of octants that each worker process can write to its output file. If it is not large enough, the worker halts and returns a "worker is full" message. To set it, estimate how large your extraction file might be (a relatively detailed Chino Hills etree, for example, is 250GB) and divide that size by 52 * the number of processes. For that etree on 32 processes, this value would need to be at least 162 million. This value also constrains buf_sort_ffile_max_oct below, so '''please read that description carefully as well'''. | ||

| + | |||

| + | '''buf_sort_ffile_max_oct''' | ||

| + | |||

| + | Units: Number of octants<br /> | ||

| + | Recommended Value: Must be at least equal to buf_extract_ffile_max_oct<br /> | ||

| + | For Process: ucvm2etree-sort-MPI<br /> | ||

| + | Description: Used in the sorting process to determine the maximum number of octants to read into memory. Each machine must therefore have (number of processes on that machine) * 52 * buf_sort_ffile_max_oct bytes of memory available. In the example above, running the 32 processes on 16 dual-core machines requires 162 million * 52 * 2 bytes of RAM per machine, or about 16 GB. All sorting is done in memory, not in the file on disk. | ||

| + | |||

| + | '''buf_merge_report_min_oct''' | ||

| + | |||

| + | Units: Number of octants<br /> | ||

| + | Recommended Value: Personal preference - default is 10000000.<br /> | ||

| + | For Process: ucvm2etree-merge-MPI<br /> | ||

| + | Description: This defines the number of octants that must be processed between progress reports in the merge process. These progress reports take the form of "Appended [at least buf_merge_report_min_oct] in N.NNs seconds". If you would like more frequent updates, set this value to be smaller. | ||

| + | |||

| + | '''buf_merge_sendrecv_buf_oct''' | ||

| + | |||

| + | Units: Bytes<br /> | ||

| + | Recommended Value: 4096<br /> | ||

| + | For Process: ucvm2etree-merge-MPI<br /> | ||

| + | Description: The number of bytes to merge per loop during the merge process. It is recommended to keep this value as is. | ||

| + | |||

| + | '''buf_merge_io_buf_oct''' | ||

| + | |||

| + | Units: Number of octants<br /> | ||

| + | Recommended Value: 4194304<br /> | ||

| + | For Process: ucvm2etree-merge-MPI<br /> | ||

| + | Description: Number of octants to read in and store in memory for merging. After being merged, the memory is then flushed to disk and the process repeats. Since this is a value in octants, it will require the machine to have at least buf_merge_io_buf_oct * 52 bytes of memory. | ||
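The sizing rules for buf_extract_ffile_max_oct and buf_sort_ffile_max_oct can be worked through with a few lines of shell arithmetic. This is a sketch under stated assumptions: the input values are the Chino Hills example from the descriptions above, GB is taken to mean 2^30 bytes, and the variable names are illustrative rather than ucvm.conf keys.

```shell
#!/bin/sh
# Inputs taken from the example in the text; GB is assumed to mean 2^30 bytes.
EXTRACT_GB=250        # estimated total size of the extraction output
NPROCS=32             # total number of MPI processes
PROCS_PER_NODE=2      # processes sharing the memory of one machine
OCT_BYTES=52          # size of one octant record, as stated above

total_bytes=$((EXTRACT_GB * 1024 * 1024 * 1024))
per_proc=$((OCT_BYTES * NPROCS))

# Minimum buf_extract_ffile_max_oct, rounded up to a whole octant.
min_ffile_oct=$(( (total_bytes + per_proc - 1) / per_proc ))

# Memory needed per machine during sorting when buf_sort_ffile_max_oct
# is set to the same value.
sort_bytes_per_node=$((min_ffile_oct * OCT_BYTES * PROCS_PER_NODE))

echo "buf_extract_ffile_max_oct >= ${min_ffile_oct}"
echo "buf_sort_ffile_max_oct    >= ${min_ffile_oct}"
echo "sort memory per node      >= ${sort_bytes_per_node} bytes"
```

With these inputs the minimum comes out at roughly 161 million octants, in line with the 162 million quoted above. Because the extraction size is only an estimate, it is probably wise to add some headroom before copying the result into ucvm.conf.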

| + | <br /> | ||

| + | <br /> | ||

==== Etree Summary ==== | ==== Etree Summary ==== | ||

| Line 1,054: | Line 1,135: | ||

</pre> | </pre> | ||

| + | ==== Performance Enhancements for ucvm2mesh on Lustre Filesystems ==== | ||

| + | |||

| + | When running ucvm2mesh on Lustre filesystems (and other systems with similar structures), you may see poor write performance. By default, Lustre uses one OST or disk per file. As such, running ucvm2mesh-mpi with a significant number of cores results in all those processes attempting to seek and write to the same file on the same OST/disk. This can create a disk bottleneck, where it may take up to 10 minutes to write a few hundred MBs. | ||

| + | |||

| + | This can be fixed by increasing the number of OSTs/disks to which the output directory and files are written. This is called striping. Generally, you will want to set the stripes for the output directory to be either the recommended amount for large files or the maximum amount allowed. The command to set the number of stripes on Kraken is lfs setstripe [dir] --count [count]. Count can be a fixed number or it can be -1 for the maximum supported by the operating system. The Kraken documentation suggests that 80 is a reasonable amount for very large files. So, for example, suppose we were generating a large mesh with our stage out directory being /lustre/scratch/username/meshes/outstripe. We would first make that directory and then run: | ||

| + | |||

| + | lfs setstripe /lustre/scratch/username/meshes/outstripe --count 80 | ||

| + | |||

| + | Then we would have our configuration file output to that directory: | ||

| + | |||

| + | meshfile=/lustre/scratch/username/meshes/outstripe/USC_cvms_awp.media | ||

| + | gridfile=/lustre/scratch/username/meshes/outstripe/USC_cvms_awp.media.grid | ||

| + | |||

| + | When we qsub the job, it will automatically take advantage of striping and distribute the load across multiple targets. | ||
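The striping steps above can be wrapped in a short script that creates the output directory, sets the stripe count, and prints the resulting layout. This is a sketch, not part of UCVM: OUT_DIR and STRIPE_COUNT are hypothetical variable names, and it uses the short -c option of lfs setstripe instead of the --count form quoted above, on the assumption that your lfs version accepts it.

```shell
#!/bin/sh
# Hypothetical defaults; override OUT_DIR and STRIPE_COUNT in the environment.
OUT_DIR=${OUT_DIR:-./outstripe}
STRIPE_COUNT=${STRIPE_COUNT:-80}

mkdir -p "$OUT_DIR"

if command -v lfs >/dev/null 2>&1; then
    # Set the stripe count on the directory so new files inherit it,
    # then print the resulting layout for confirmation.
    if lfs setstripe -c "$STRIPE_COUNT" "$OUT_DIR"; then
        lfs getstripe "$OUT_DIR"
    else
        echo "lfs setstripe failed; is $OUT_DIR on a Lustre filesystem?" >&2
    fi
else
    echo "lfs not found; skipping striping for $OUT_DIR" >&2
fi
```

Run it once before submitting the job, with OUT_DIR set to the directory named in meshfile and gridfile. Striping set on a directory applies to files created afterwards, so existing output files keep their old layout.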

| + | |||

| + | It is also important to remember that more cores are not always better. Additional cores can result in increased write requests that may decrease performance. It is important to find the right balance between the number of processes writing to the file and the number of stripes. For example, for a large 600km x 400km x 40km mesh, a core count of 600 and stripe count of 80 on NICS Kraken will result in reasonably good performance. | ||

=== grd_query === | === grd_query === | ||

| Line 1,619: | Line 1,716: | ||

#'''Wald, D. J., and T. I. Allen (2007)''', Topographic slope as a proxy for seismic site conditions and amplification, Bull. Seism. Soc. Am., 97 (5), 1379-1395, doi:10.1785/0120060267. | #'''Wald, D. J., and T. I. Allen (2007)''', Topographic slope as a proxy for seismic site conditions and amplification, Bull. Seism. Soc. Am., 97 (5), 1379-1395, doi:10.1785/0120060267. | ||

#'''Wills, C. J., and K. B. Clahan (2006)''', Developing a map of geologically defined site-condition categories for California, Bull. Seism. Soc. Am., 96 (4A), 1483-1501, doi:10.1785/0120050179. | #'''Wills, C. J., and K. B. Clahan (2006)''', Developing a map of geologically defined site-condition categories for California, Bull. Seism. Soc. Am., 96 (4A), 1483-1501, doi:10.1785/0120050179. | ||

| − | #'''Yong, A., Hough, S.E., Iwahashi, J., and A. Braverman (2012)''', A terrain-based site conditions map of California with implications for the contiguous United States, Bull. Seism. Soc. Am., | + | #'''Yong, A., Hough, S.E., Iwahashi, J., and A. Braverman (2012)''', A terrain-based site conditions map of California with implications for the contiguous United States, Bull. Seism. Soc. Am., Vol. 102, No. 1, pp. 114–128, February 2012, doi: 10.1785/0120100262. |

Latest revision as of 23:52, 9 June 2014

Redirect to: UCVM 14.3.0 User Guide

Contents

- 1 Overview

- 2 Requirements

- 3 Installation

- 4 Framework Configuration

- 5 Framework Description

- 5.1 Concept of Operation

- 5.2 Querying Models Via Native Interface versus Querying UCVM

- 5.3 Utilities

- 5.4 C Application Programming Interface

- 6 Extracting Values from UCVM

- 7 History of UCVM Releases

- 8 Acknowledgements and Contact Info

- 9 Technical Notes

- 10 References

- 11 License and Disclaimer

Overview

The Unified Community Velocity Model Framework UCVM is a software package that provides a standard interface to several regional community velocity models. Any differences in coordinate projections within each model are hidden so that the researcher queries any of the models by longitude, latitude, and Z (where Z is depth from surface or elevation relative to mean sea level, MSL). UCVM also provides several other unique capabilities and products, including:

- Seamlessly combine two or more models into a composite model for querying

- Optionally include a California statewide geotechnical layer and interpolate it with the crustal velocity models

- Extract a 3D mesh or CVM Etree (octree) of material properties from any combination of models

- Standard California statewide elevation and Vs30 data map is provided

- Miscellaneous tools are provided for creating 2D etree maps, and optimizing etrees

- Numerically smooth discontinuities at the interfaces between different regional models

- Add support for future velocity models with the extendable interface

Current list of supported velocity models: SCEC CVM-H, SCEC CVM-S, SCEC CVM-SI, SCEC CVM-NCI, Magistrale WFCVM, USGS CenCalVM, Graves Cape Mendocino, Lin-Thurber Statewide, Tape SoCal.

The API itself is written in the C language. The software is MPI-capable, and has been shown to scale to 3300+ cores on NICS Kraken in a mesh generator. It allows application programs to tailor which set of velocity models are linked in to minimize memory footprint. It is also compatible with grid resources that have data stage-in policies for jobs, and light-weight kernels that support static libraries only. This package has been tested on NCCS Jaguar, NICS Kraken, and USC HPCC.

Requirements

The system requirements are as follows:

- UNIX operating system (Linux)

- GNU gcc/gfortran compilers (MPI wrappers such as mpicc are OK)

- tar for opening the compressed files

- Euclid Etree library (recommend euclid3-1.3.tar.gz)

- Proj.4 Projection Library (recommend proj-4.7.0.tar.gz)

Optional dependencies include any of the following standard velocity models and packages:

- Standard community velocity models: SCEC CVM-H, SCEC CVM-S, SCEC CVM-SI, SCEC CVM-NCI, Magistrale WFCVM, USGS CenCalVM, Graves Cape Mendocino, Lin-Thurber Statewide, Tape SoCal

- NetCDF (network Common Data Form) library NetCDF

Installation

Download

- Start at SCEC website: http://scec.usc.edu/scecpedia/UCVM

- Navigate to the Downloads section of the UCVM web page.

- Click the download link to download the latest source code distribution file and any listed dependencies. This is typically posted as a tar and gzipped file (tgz format). The source code distribution file is large (350 MB), so the download may take a while.

CheckSum Test

Verify that the provided md5 checksum file matches the computed md5 for your downloaded tarball:

$ md5sum -c ucvm-12.2.0.tar.gz.md5

Basic Install

The general steps for installing UCVM are:

- Install Etree and Proj.4 packages

- Install one or more velocity models

- Define environment variables describing where Etree/Proj.4/models are located

- Run the UCVM installer

First, install the Etree and Proj.4 packages which are required by UCVM. The INSTALL/README instructions in each distribution describe how to install them,

but generally:

For the Euclid Etree library:

$ tar zxvf euclid3-1.3.tar.gz
$ cd ./euclid3-1.3/libsrc
$ make all

If installing the Etree library on a Lustre filesystem on a Cray system (NICS Kraken, NCCS Jaguar), you must enable the Cray IOBUF module prior to compiling the library. Also, edit the ./libsrc/Makefile to add the compile flags "-DUSE_IOBUF -DUSE_IOBUF_LOCAL_MACROS".

For the Proj.4 library (version 4.7.0):

$ tar zxvf proj-4.7.0.tar.gz
$ cd proj-4.7.0
$ ./configure --prefix=<your desired install directory>
$ make; make install

Be sure to note where you installed these two packages. You will need this information later in the installation process.

Then, install the velocity models that you wish to use with UCVM according to the documentation provided by those models. Ensure they are compiled with gcc/gfortran.

For the SCEC CVM-H model:

$ tar xvf cvmh-11.9.1.tar.gz

$ cd cvmh-11.9.1

$ ./configure --prefix=<your desired install directory>

$ make; make install

For the SCEC CVM-S model:

$ tar xvf cvms-11.11.0.tar.gz

$ cd cvms/src

$ make all

Again, be sure to note where you installed the community velocity models.

Declare environment variables with the paths to the Etree library, Proj.4 library, and velocity models that you previously installed. Also, update your LD_LIBRARY_PATH environment variable to include the new library directories.

ETREE_DIR=<Etree install path>

PROJ4_DIR=<Proj.4 install path>

CVMH_DIR=<CVM-H install path>

CVMS_DIR=<CVM-S install path>

LD_LIBRARY_PATH=${ETREE_DIR}/libsrc:${PROJ4_DIR}/lib:${CVMH_DIR}/lib:${CVMS_DIR}/lib:${LD_LIBRARY_PATH}

UCVM_INSTALL_DIR=<desired UCVM install path>

In a bash shell, for example, the ETREE_DIR environment variable can be declared with the command:

$ ETREE_DIR=/opt/etree;export ETREE_DIR

In a csh shell:

$ setenv ETREE_DIR /opt/etree
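Before running the installer, it can help to confirm that every variable is set and to see the resulting library search path. The following is a minimal sketch of such a pre-flight check. The function names are illustrative, and the list of required variables assumes the CVM-H plus CVM-S setup used in this guide.

```python
# Variables named in this guide for a CVM-H + CVM-S installation.
REQUIRED_VARS = (
    "ETREE_DIR",
    "PROJ4_DIR",
    "CVMH_DIR",
    "CVMS_DIR",
    "UCVM_INSTALL_DIR",
)


def missing_variables(env):
    """Return the required variable names that are unset or empty."""
    return [name for name in REQUIRED_VARS if not env.get(name)]


def library_path(env):
    """Build the LD_LIBRARY_PATH value shown above from the install paths."""
    parts = [
        f"{env['ETREE_DIR']}/libsrc",
        f"{env['PROJ4_DIR']}/lib",
        f"{env['CVMH_DIR']}/lib",
        f"{env['CVMS_DIR']}/lib",
    ]
    existing = env.get("LD_LIBRARY_PATH", "")
    if existing:
        parts.append(existing)  # keep any directories already on the path
    return ":".join(parts)
```

Calling missing_variables(os.environ) after importing os lists anything that still needs to be declared in your shell.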

Now unpack the UCVM software distribution:

$ tar zxvf ucvm-12.2.0.tar.gz

The build configuration differs slightly depending on where you wish to run UCVM. On a standard Linux system with gcc, run the following commands to configure the software with CVM-H and CVM-S enabled:

$ cd ucvm-12.2.0

    $ ./configure --prefix=${UCVM_INSTALL_DIR} \
        --with-etree-include-path="${ETREE_DIR}/libsrc" \
        --with-etree-lib-path="${ETREE_DIR}/libsrc" \
        --with-proj4-include-path="${PROJ4_DIR}/include" \
        --with-proj4-lib-path="${PROJ4_DIR}/lib" \
        --enable-model-cvmh --enable-model-cvms \
        --with-cvmh-include-path="${CVMH_DIR}/include" \
        --with-cvmh-lib-path="${CVMH_DIR}/lib" \
        --with-gctpc-lib-path="${CVMH_DIR}/lib" \
        --with-cvms-include-path="${CVMS_DIR}/include" \
        --with-cvms-lib-path="${CVMS_DIR}/lib" \
        --with-cvmh-model-path="${CVMH_DIR}/model" \
        --with-cvms-model-path="${CVMS_DIR}/src"

The above example enables CVM-H and CVM-S but any of the following models may be enabled: SCEC CVM-H, SCEC CVM-S, SCEC CVM-SI, USGS CenCalVM, and WFCVM. For each velocity model you want to use, the configure script needs to be told to explicitly use that model (with the --enable-model-* flag) and where to find the velocity model's library files (.a or .so), header files (.h), and model files so that they can be linked into UCVM.
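Because each enabled model contributes the same group of flags, the argument list can be generated rather than typed by hand. The sketch below assembles it from a dictionary of model paths. The function name and the dictionary layout (keys "include", "lib", "model", and an optional "extra" list) are assumptions made for illustration; only the flag names come from this guide.

```python
def configure_args(prefix, etree_dir, proj4_dir, models):
    """Build the ./configure argument list for the enabled models.

    models maps a model label (for example "cvmh") to a dict holding that
    model's "include", "lib", and "model" paths, plus an optional "extra"
    list for model-specific flags such as --with-gctpc-lib-path.
    """
    args = [
        "./configure",
        f"--prefix={prefix}",
        f"--with-etree-include-path={etree_dir}/libsrc",
        f"--with-etree-lib-path={etree_dir}/libsrc",
        f"--with-proj4-include-path={proj4_dir}/include",
        f"--with-proj4-lib-path={proj4_dir}/lib",
    ]
    for label, paths in models.items():
        args.append(f"--enable-model-{label}")
        args.append(f"--with-{label}-include-path={paths['include']}")
        args.append(f"--with-{label}-lib-path={paths['lib']}")
        args.append(f"--with-{label}-model-path={paths['model']}")
        args.extend(paths.get("extra", []))
    return args
```

The returned list can be passed to subprocess.run(args, check=True) from the unpacked source directory, which avoids shell quoting problems with long commands.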

Note that unless you force static linking with the "--enable-static" command line option, you must update your LD_LIBRARY_PATH environment variable with the paths to the Etree, Proj.4, and model shared libraries.

The configure command is quite long, so an example shell script with this command is located in ./scripts/autoconf/basic_install.sh. It assumes that you previously defined the environment variables described in this installation guide. The script can be executed with the following shell commands:

$ cd scripts/autoconf

$ ./basic_install.sh

Compile the software by executing the following command in the main directory:

$ make

Check that the package was built correctly by executing the following command in the main directory:

$ make check

Verify that all unit tests and acceptance tests pass. Depending on your system and your UCVM configuration, testing may take 15 to 30 minutes.

Install the software to the desired target location by executing the following command in the main directory:

$ make install

A full list of supported standard velocity models and options can be listed with the command:

$ ./configure --help

Updating the Configuration

The UCVM installer will automatically create a configuration file in ${UCVM_INSTALL_DIR}/conf/ucvm.conf with entries for all enabled velocity models and some standard settings. However, you may wish to edit this file to toggle model flags or to add new user models in the future. For example, the CVM-H model has a number of flags that may be set in the UCVM configuration file. Please see UCVM_User_Guide#Framework_Configuration for more details.

Advanced Install

Additional options must be passed to the ./configure script on a more exotic Linux system, such as a host that requires static linking (NICS Kraken) or has a Lustre high-performance filesystem (NCCS Jaguar, NICS Kraken). The following example shows how to enable static linking and Cray IOBUF buffering:

$ module add iobuf

    $ ./configure --prefix=${UCVM_INSTALL_DIR} \
        --enable-static --enable-iobuf \
        --enable-model-cvmh --enable-model-cvms \
        --with-etree-include-path="${ETREE_DIR}/libsrc" \
        --with-etree-lib-path="${ETREE_DIR}/libsrc" \
        --with-proj4-include-path="${PROJ4_DIR}/include" \
        --with-proj4-lib-path="${PROJ4_DIR}/lib" \
        --with-cvmh-include-path="${CVMH_DIR}/include" \
        --with-cvmh-lib-path="${CVMH_DIR}/lib" \
        --with-gctpc-lib-path="${CVMH_DIR}/lib" \
        --with-cvms-include-path="${CVMS_DIR}/include" \
        --with-cvms-lib-path="${CVMS_DIR}/lib" \
        --with-cvmh-model-path="${CVMH_DIR}/model" \
        --with-cvms-model-path="${CVMS_DIR}/src" \
        CC=cc

$ make; make check; make install

It is generally advisable to compile all components of UCVM with the same compiler toolchain. NICS Kraken, for example, uses the Cray compiler wrappers (cc, not gcc). On this system you must therefore also compile the Euclid Etree library with cc.

A few notes:

- Some systems require applications to be linked statically (example above).

- Lustre filesystems may require linking with Cray IOBUF module (NICS Kraken example above).

- The configure script needs to be told where to find the library file(s), the header file(s), and the model files for each velocity model that you wish to enable. The configure script will check that the libraries and headers are present, and it will set up UCVM with that model enabled and properly configured.

- The script will attempt to auto-detect a GNU compliant compiler. If an MPI wrapper is found, the MPI version of UCVM is compiled, otherwise the serial version is built. The compiler may be overridden with the option 'CC="mpicc"' for example.

- Additional compiler flags and linker flags may be passed to ./configure with the CFLAGS and LDFLAGS options. Generally, this is not needed but allows the package to be built on platforms with specific compiling/linking requirements.

The full list of options follows:

--enable-static enable static linking

--enable-netcdf enable netCDF module

--enable-iobuf enable IOBUF module

--enable-model-cvmh enable model SCEC CVM-H

--enable-model-cvms enable model SCEC CVM-S

--enable-model-cencal enable model USGS CenCalVM

--enable-model-cvmsi enable model SCEC CVM-SI

--enable-model-cvmnci enable model SCEC CVM-NCI

--enable-model-wfcvm enable model WFCVM

--enable-model-cvmlt enable model Lin-Thurber

--enable-model-cmrg enable model CMRG

--enable-model-tape enable model Tape

--with-etree-include-path location of the Etree headers

--with-etree-lib-path location of the Etree libraries

--with-proj4-include-path location of the Proj.4 headers

--with-proj4-lib-path location of the Proj.4 libraries

--with-netcdf-include-path location of the netCDF headers

--with-netcdf-lib-path location of the netCDF libraries

--with-cencal-include-path location of the USGS CenCalVM headers

--with-cencal-lib-path location of the USGS CenCalVM libraries

--with-cencal-model-path location of the USGS CenCalVM high-rez etree

--with-cencal-extmodel-path location of the USGS CenCalVM extended etree

--with-cvmh-include-path location of the SCEC CVM-H headers

--with-cvmh-lib-path location of the SCEC CVM-H libraries

--with-cvmh-model-path location of the SCEC CVM-H model files

--with-gctpc-lib-path location of the CVM-H GCTPC libraries (CVM-H 11.2.0)

--with-cvms-include-path location of the SCEC CVM-S headers

--with-cvms-lib-path location of the SCEC CVM-S libraries

--with-cvms-model-path location of the SCEC CVM-S model files

--with-cvmsi-include-path location of the SCEC CVM-SI headers

--with-cvmsi-lib-path location of the SCEC CVM-SI libraries

--with-cvmsi-model-path location of the SCEC CVM-SI model files

--with-cvmnci-include-path location of the SCEC CVM-NCI headers

--with-cvmnci-lib-path location of the SCEC CVM-NCI libraries

--with-cvmnci-model-path location of the SCEC CVM-NCI model files

--with-wfcvm-include-path location of the WFCVM headers

--with-wfcvm-lib-path location of the WFCVM libraries

--with-wfcvm-model-path location of the WFCVM model files

--with-cvmlt-include-path location of the CVMLT headers

--with-cvmlt-lib-path location of the CVMLT libraries

--with-cvmlt-model-path location of the CVMLT model files

--with-cmrg-include-path location of the CMRG headers

--with-cmrg-lib-path location of the CMRG libraries

--with-cmrg-model-path location of the CMRG model files

--with-tape-include-path location of the TAPE headers

--with-tape-lib-path location of the TAPE libraries

--with-tape-model-path location of the TAPE model files

Unit and Acceptance Tests

Both the unit tests and acceptance tests may be executed with the following command from the main directory:

$ make check

All tests should result in a PASS.

Trouble-shooting

You may see an error similar to the following while running either the tests or the UCVM programs:

error while loading shared libraries: libsomelib.so: cannot open shared object file: No such file or directory

This indicates that UCVM was linked against one or more shared libraries and the dynamic library loader cannot find the actual .so library at run-time. The solution is to update your LD_LIBRARY_PATH to include the directory containing the library mentioned in the error message. For example, the following command adds a new search directory to LD_LIBRARY_PATH in a csh shell:

$ setenv LD_LIBRARY_PATH /home/USER/opt/somepkg/lib:${LD_LIBRARY_PATH}

Alternatively, if you do not want to work with shared libraries, UCVM can be compiled statically with the "--enable-static" configuration option.

Proj.4 Error: major axis or radius = 0 or not given

On systems whose home filesystems are not visible to compute nodes (such as NICS Kraken), you may encounter Proj.4 errors when running any component of UCVM on compute nodes. Proj.4 relies on a file called proj_defs.dat, which is located in the ${MAKE_INSTALL_LOCATION}/share/proj directory. For example, if you configured Proj.4 with ./configure --prefix=/not_viewable_to_compute_nodes/proj-4.7.0, Proj.4 searches for the file at /not_viewable_to_compute_nodes/proj-4.7.0/share/proj/proj_defs.dat. Because compute nodes cannot read that path, UCVM throws the error "Proj.4 Error: major axis or radius = 0 or not given" and your job fails.

The only known fix is to make sure that your --prefix directory is visible to the compute nodes and to run make install there. Documentation suggests that you can set the PROJ_LIB environment variable instead, but this does not appear to work correctly without modifications to the Proj.4 source code.

Building Against CVM-H 11.2.0

UCVM is designed to work with CVM-H 11.9.1 by default, but it can optionally be built with CVM-H 11.2.0. Use this modified configure command to configure the package with CVM-H 11.2.0 and CVM-S:

$ cd ucvm-12.2.0

    $ ./configure --prefix=${UCVM_INSTALL_DIR} \
        --with-etree-include-path="${ETREE_DIR}/libsrc" \
        --with-etree-lib-path="${ETREE_DIR}/libsrc" \
        --with-proj4-include-path="${PROJ4_DIR}/include" \
        --with-proj4-lib-path="${PROJ4_DIR}/lib" \
        --enable-model-cvmh --enable-model-cvms \
        --with-cvmh-include-path="${CVMH_DIR}/include" \
        --with-cvmh-lib-path="${CVMH_DIR}/lib" \
        --with-gctpc-lib-path="${CVMH_DIR}/gctpc/lib" \
        --with-cvms-include-path="${CVMS_DIR}/include" \
        --with-cvms-lib-path="${CVMS_DIR}/lib" \
        --with-cvmh-model-path="${CVMH_DIR}/bin" \
        --with-cvms-model-path="${CVMS_DIR}/src" \
        CFLAGS="-D_UCVM_MODEL_CVMH_11_2_0"

Note that the CVM-H model and gctpc paths have changed, and there is now a CFLAGS argument. After configuring, you may compile, check, and install normally.

Framework Configuration

The main package configuration file is ${UCVM_INSTALL_DIR}/conf/ucvm.conf. It specifies the paths to all configured models and maps and defines any model flags. The UCVM installer sets up this file automatically, but there are a number of situations in which you will want to modify it, such as adding a new model.

The following is an example configuration:

    # UCVM config file

    # UCVM model path
    #
    # Change to reflect UCVM installation directory.
    #
    ucvm_interface=map_etree
    ucvm_mappath=/home/scec-00/USER/opt/aftershock/ucvm-11.11.0_RC/model/ucvm/ucvm.e

    # Pre-defined models
    #
    # SCEC CVM-S
    cvms_modelpath=/home/scec-00/USER/opt/aftershock/cvms/src
    #
    # SCEC CVM-H
    cvmh_modelpath=/home/scec-00/USER/opt/aftershock/cvmh-11.9.1/model
    #
    # USGS Bay Area high-rez and extended etrees
    cencal_modelpath=/home/scec-00/USER/opt/etree/USGSBayAreaVM-08.3.0.etree
    cencal_extmodelpath=/home/scec-00/USER/opt/etree/USGSBayAreaVMExt-08.3.0.etree
    #
    # SCEC CVM-SI
    cvmsi_modelpath=/home/scec-00/USER/opt/aftershock/cvmsi/model/i8
    #
    # SCEC CVM-NCI
    cvmnci_modelpath=/home/scec-00/USER/opt/aftershock/cvmnci/model/i2
    #
    # Wasatch Front CVM
    wfcvm_modelpath=/home/scec-00/USER/opt/aftershock/wfcvm/src
    #
    # Lin-Thurber Statewide
    cvmlt_modelpath=/home/scec-00/USER/opt/aftershock/cvmlt/model
    #
    # Cape Mendocino RG
    cmrg_modelpath=/home/scec-00/USER/opt/aftershock/cvm-cmrg/model/cmrg.conf
    #
    # Tape SoCal Model
    tape_modelpath=/home/scec-00/USER/opt/aftershock/cvm-tape/model/m16
    #
    # 1D
    1d_modelpath=/home/scec-00/USER/opt/aftershock/ucvm-11.11.0_RC/model/1d/1d.conf
    #
    # 1D GTL
    1dgtl_modelpath=/home/scec-00/USER/opt/aftershock/ucvm-11.11.0_RC/model/1d/1d.conf

    # User-defined models
    #
    # Change to reflect paths to user model files
    #
    # Current supported interfaces: model_etree, model_patch
    #
    #user1_interface=model_etree
    #user1_modelpath=/home/username/model_etree/user1.etree
    #user2_interface=model_patch
    #user2_modelpath=/home/username/model_patch/user2.conf

    # User-defined maps
    #
    # Change to reflect paths to user map files
    #
    # Current supported interfaces: map_etree
    #
    # Optional Yong-Wald vs30 map
    yong_interface=map_etree
    yong_mappath=/home/scec-00/USER/opt/aftershock/ucvm-11.11.0_RC/model/ucvm/ucvm_yong_wald.e

    # Model flags
    #
    cvmh_param=USE_1D_BKG,True
    cvmh_param=USE_GTL,True

The following table describes each configuration item, where "*" indicates the model/map label. Model/map labels can be predefined strings, such as "cvmh" for CVM-H, or any arbitrary string label in the case of a user-defined model.

| Key | Value(s) | Description |

|---|---|---|

| ucvm_interface | map_etree | The interface code needed to parse the UCVM map |

| ucvm_mappath | ${UCVM_INSTALL_DIR}/model/ucvm/ucvm.e | String identifying location of the UCVM map Etree |

| *_modelpath | A directory, URL, or file | String identifying location of model's primary or high-rez data |

| *_extmodelpath | A directory, URL, or file | String identifying location of model's extended data (required for USGS CenCalVM extended Etree). |

| *_interface | Models: model_etree, model_patch; Maps: map_etree | The interface code needed to parse this user-defined model/map. |

| *_mappath | A directory, URL, or file | String identifying location of user-defined map Etree |

| *_param | Flag,Value | Set a model-specific configuration Flag to Value. The defined flags are USE_1D_BKG and USE_GTL for CVM-H, for toggling the 1D background model and GTL. |
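
To inspect a configuration file from a script, the key=value layout shown above is easy to read. The following is a minimal sketch of a reader. It is not the parser UCVM itself uses, and it assumes that "#" starts a comment line and that *_param keys may repeat, as cvmh_param does in the example.

```python
def parse_ucvm_conf(text):
    """Parse key=value lines from a ucvm.conf-style file.

    Keys ending in '_param' may repeat, so their values are collected as a
    list of (flag, value) pairs. Other keys keep the last value seen.
    """
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank line or comment (including commented-out keys)
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not a key=value line; ignored in this sketch
        key, value = key.strip(), value.strip()
        if key.endswith("_param"):
            flag, _, flag_value = value.partition(",")
            settings.setdefault(key, []).append((flag, flag_value))
        else:
            settings[key] = value
    return settings
```

With the example file above, the result maps "cvmh_param" to the two (flag, value) pairs and every *_modelpath key to its path string.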

Supported Standard Crustal Velocity Models

UCVM has built-in support for a number of standard community velocity models (CVMs). These standard models are referenced in ucvm_query and the API by their string labels. The following table lists the labels for these predefined CVMs. These are reserved labels and cannot be used for a user-defined model.

Note that "predefined" does not necessarily mean "installed". These are models that UCVM can query if it is linked with that model's libraries at install time. Some, such as lin-thurber and cmrg, are pre-linked; the rest must be explicitly linked at install time.

| Label | Model | Type | Active Limit |

|---|---|---|---|

| cvmh | SCEC CVM-H | External, installed separately and linked at install | 1 |

| cvms | SCEC CVM-S | External, installed separately and linked at install | 1 |

| cencal | USGS Bay Area CenCalVM | External, installed separately and linked at install | 1 |

| cvmnci | SCEC CVM-NCI | External, installed separately and linked at install | 1 |

| cvmsi | SCEC CVM-SI | External, installed separately and linked at install | 1 |

| wfcvm | Wasatch Front CVM (Utah) | External, installed separately and linked at install | 1 |

| 1d | Hadley-Kanamori 1D | Internal, included in UCVM and automatically linked | 1 |

| lt | Lin-Thurber California Statewide | External, installed separately and linked at install | 1 |

| cmrg | Cape Mendocino Rob Graves | External, installed separately and linked at install | 1 |

| tape | Carl Tape SoCal | External, installed separately and linked at install | 1 |

| cmuetree | CMU CVM Etree | Internal, included in UCVM, user specifies etree path in ./conf/ucvm.conf | 1 |

UCVM also defines two "model interfaces". A model interface is a specific file format convention for representing a velocity model. Any velocity model written to conform to one of these model interfaces may be easily imported and queried from UCVM. In addition, many such models may be active simultaneously, whereas only one version of a predefined model may be active at any given time. The two model interfaces are: SCEC CVM Etrees, and SCEC Patch Models. SCEC CVM Etrees employ a special schema and metadata format. SCEC Patch Models have a special conf file and binary file format. The section UCVM_User_Guide#Adding_a_User-defined_Velocity_Model discusses how to create and import these velocity models.

The currently supported model interfaces are summarized in the following table:

| Interface | Description | Active Limit |

|---|---|---|

| model_etree | SCEC CVM Etree | 100 |

| model_patch | SCEC Patch Model | 100 |

Supported Standard GTL Velocity Models

UCVM has built-in support for two geotechnical layer models. These near-surface models are intended to provide superior velocity information at shallow depths. The following table lists the labels for these predefined GTLs. These are reserved labels and cannot be used for a user-defined GTL.

| Label | GTL |

|---|---|

| elygtl | Ely Vs30-derived GTL (Ely et al., 2010) |

| 1dgtl | Generic 1D, identical to 1d crustal model |

Interpolation functions are used to smooth GTL material properties with the underlying crustal model material properties. This smoothing is performed over an interpolation zone along the Z axis. Interpolation functions can be assigned on a per-GTL basis. Two predefined interpolation functions are provided to the user:

| Label | Interpolation Function |

|---|---|

| linear | Linear interpolation |

| ely | Ely interpolation relation (Ely et al., 2010) |

If the user enables a GTL model but does not specify an interpolation function, linear interpolation is used by default. If no interpolation zone is specified, a depth range of 0 m to 350 m is used.

Supported Standard Maps

UCVM has built-in support for a number of standard maps for California. These standard maps contain elevation data (DEM) and Vs30 data for the region and are referenced in ucvm_query and the API by their string labels. The following table lists the labels for these predefined maps. These are reserved labels and cannot be used for a user-defined map.

| Label | Map |

|---|---|

| ucvm | USGS NED 1 arcsec DEM, and Wills-Wald Vs30 (default) |

| yong | USGS NED 1 arcsec DEM, and Yong-Wald Vs30 |

There is also a special map interface that supports reading SCEC Map Etrees. These are maps that conform to a special Etree schema and metadata format convention. The user may create their own maps in this format and import them into UCVM. Any number of maps may be defined, but only one may be active at any time. Maps are defined by the user in the UCVM configuration file (see UCVM_User_Guide#Adding_a_User-defined_Map).

The map interfaces are summarized in the following table:

| Interface | Description |

|---|---|

| map_etree | SCEC Map Etree |

Supported Model Flags

Underlying models may be configured in one of two ways: with a key/value string in the UCVM configuration file, or by passing configuration parameters with the ucvm_setparam() function. The following model flags are supported:

| Parameter | Value | Models |

|---|---|---|

| USE_1D_BKG | True/False (default False) | CVM-H |

| USE_GTL | True/False (default True) | CVM-H |

Adding New Velocity Models

Enabling Another Standard Velocity Model After Installation

If after installation you wish to link in another standard velocity model, you must re-install UCVM using the process described in UCVM_User_Guide#Installation. Add the configure options appropriate for the new model you wish to link into UCVM.

Upgrading the Version of a Standard Velocity Model After Installation

Generally, UCVM must be recompiled and re-installed using the new model libraries and headers. However, if you are certain that only the model files have changed (and the query interface has NOT changed), you can simply update that model's modelpath configuration in ${UCVM_INSTALL_DIR}/conf/ucvm.conf to reference the new files.

Using Multiple Versions of a Supported Velocity Model Simultaneously

Not supported.

Adding a User-defined Velocity Model

UCVM may be extended to support any user-defined velocity model. The simplest way to add a new model is to store that model as a SCEC Etree or Patch Model, and update ${UCVM_INSTALL_DIR}/conf/ucvm.conf with the model interface and path. For example:

For SCEC Etrees:

user1_interface=model_etree

user1_modelpath=/home/username/model_etree/user1.etree

For Patch models:

user2_interface=model_patch

user2_modelpath=/home/username/model_patch/user2.conf

SCEC Etrees can be created with the ./bin/ucvm2etree utility. Patch models can be created with the ./bin/patchmodel utility.

For other models that either are represented by tables, or have their own native API, UCVM may be extended to query from these models. However, this requires code modifications to the core UCVM library located in ./src/ucvm and recompilation. The general steps are:

- Define new model label UCVM_MODEL_MODELNAME in ucvm_dtypes.h

- Create ucvm_model_modelname.h/.c containing the glue code that links the UCVM query interface to the native model interface

- Modify ./src/ucvm/ucvm.c:

  - Add ucvm_model_modelname.h include at top

  - Add model lookup to ucvm_add_model() function

  - Add model to ucvm_get_resources() function

- Modify ./src/ucvm/Makefile.am:

  - Add ucvm_model_modelname.o to list of libucvm.a dependencies

- Modify ./configure.ac:

  - Define "enable-modelname", "with-modelname-lib-path", and "with-modelname-incl-path" configure options

  - Add library and header checks for your new model

- Modify installer to customize path to your new model in ucvm.conf:

  - Add your new model to ./conf/Makefile.am

  - Add config entry for your new model to ./conf/ucvm_template.conf

- Regenerate the Makefiles with ./scripts/autoconf/reconf.sh

- Reconfigure and recompile UCVM, making sure to pass your new model flags to the configure utility

Adding a User-defined Map

Update ${UCVM_INSTALL_DIR}/conf/ucvm.conf with the map interface and Etree path:

usermap1_interface=map_etree

usermap1_mappath=/home/username/maps/usermap1.e

User-defined maps can be created with the ./bin/grd2etree utility.

Framework Description

Concept of Operation

Combining Velocity Models into a Composite Model

UCVM combines multiple regional velocity models, along with a DEM map and a Vs30 map, into one composite model for the purposes of querying surface elevation, Vs30, Vp, Vs, and density. Individual model setup and query details are abstracted under a uniform interface, and the application sees only the composite model. This simplifies application code and makes it easier for those programs to support new velocity models in the future.

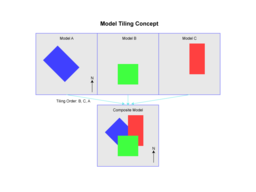

Velocity models are combined into the composite by tiling them on top of one another. When UCVM is initialized, the user selects an ordered list of models to query for data. Query points are then submitted to each velocity model in that list, one model at a time in list order. The first model to return valid velocity data for the point is considered to have fulfilled that data request, and subsequent models are not queried. Generally, no smoothing is performed at the interfaces between models (an exception is interpolation between a GTL and crustal model, as described below). For each query point, the following data is returned: surface elevation, Vs30, Vp, Vs, and density. The figure above illustrates how this tiling is performed.

Regional models may include: CVM-S, CVM-H, USGS Bay Area, 1D, or other user-defined models. Most models and maps have a maximum extent, outside of which no data is available. The exception is the generic 1D model, which has infinite extent. Applications must check the returned velocity and density values to ensure they are valid.
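
The tiling rule described above (the first model in the ordered list that returns valid data wins) can be illustrated with a short sketch. This is not UCVM code: the model callables, the box_model helper, and the property values are hypothetical stand-ins, and a model signals "no data" here by returning None.

```python
def box_model(lon_min, lon_max, lat_min, lat_max, props):
    """Return a toy regional model that is valid only inside a lon/lat box."""
    def query(lon, lat, z):
        if lon_min <= lon <= lon_max and lat_min <= lat <= lat_max:
            return props
        return None  # outside the model extent: no data
    return query


def query_composite(models, lon, lat, z):
    """Query models in list order and return the first valid answer.

    models is an ordered list of (label, callable) pairs. The result is a
    (label, (vp, vs, rho)) pair, or (None, None) if no model covers the point.
    """
    for label, model in models:
        props = model(lon, lat, z)
        if props is not None:
            return label, props  # later models are not queried
    return None, None


# Hypothetical property values, chosen only to tell the models apart.
regional = box_model(-119.0, -117.0, 33.0, 35.0, (1800.0, 600.0, 2000.0))
background_1d = lambda lon, lat, z: (5000.0, 2900.0, 2600.0)  # infinite extent
ordered_models = [("regional", regional), ("1d", background_1d)]
```

A point inside the box is answered by "regional", and a point outside it falls through to "1d". An application would still check for the (None, None) case when the list contains no model of infinite extent.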

Querying with one or more GTLs

The API distinguishes between two types of regional velocity models: GTL models and crustal models. GTL models are shallow near-surface velocity models called geotechnical layers. Crustal models are deeper velocity models such as tomographic models. When a user chooses to query from one or more crustal models only, the API executes the previously described behavior. However, if one or more GTL models are selected, additional processing is performed.

When a GTL model is given in the ordered list of models, the API creates a default interpolation zone along the z-axis over which interpolation between this GTL and the underlying crustal models is performed. It also assigns a default (linear) interpolation function to smooth the velocities of the GTL and underlying crustal models. Both the interpolation zone and interpolation function can be changed by the user.

Querying for a point in this operating mode is now slightly different from the previous crustal-only case. As before, all query points are submitted to the crustal models in the ordered list of models (skipping any GTLs). However, the z coordinate of the points may be shifted down to the lower edge of the interpolation zone if the point falls within it. The crustal models are queried with this possibly modified 3D coordinate and the velocities/density values are saved. The same list of query points is then submitted to the GTL models in the ordered list of models (skipping any crustals) and the z coordinate of those points within the interpolation zone may be shifted up to the top edge. The GTL models are queried with this possibly modified 3D coordinate and the velocity/density values are saved.

The list of query points is then traversed one final time. Those points that fall above the interpolation zone will have their velocities/densities set to those of the GTL as processed by the interpolation function assigned to that GTL. Those points that fall within the interpolation zone and have valid GTL/crustal values will have their GTL/crustal values smoothed according to the assigned interpolation function. Points that fall below the interpolation zone and have valid crustal values will have their velocities/densities set to the crustal values. Note that points with invalid/incompatible velocities in either the GTL or crustal dataset cannot be smoothed and the interpolation results will be marked as invalid.
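
The three-pass procedure above can be condensed into a per-point sketch for the depth-query case. It is a simplified illustration, not the UCVM implementation: the gtl and crust arguments are hypothetical callables that map a depth to a single property value (or None when invalid), the blend is assumed to be a plain distance-weighted average, and points above the zone simply take the GTL value.

```python
def linear_interp(depth, zmin, zmax, gtl_value, crust_value):
    """Blend the GTL and crustal values by relative depth within the zone."""
    ratio = (depth - zmin) / (zmax - zmin)
    return gtl_value * (1.0 - ratio) + crust_value * ratio


def combine(depth, gtl, crust, zmin=0.0, zmax=350.0):
    """Return the combined property value at one depth, or None if invalid."""
    if depth < zmin:
        return gtl(depth)    # above the zone: GTL value
    if depth >= zmax:
        return crust(depth)  # below the zone: crustal value
    gtl_value = gtl(zmin)      # GTL queried at the top edge of the zone
    crust_value = crust(zmax)  # crustal model queried at the lower edge
    if gtl_value is None or crust_value is None:
        return None  # cannot smooth when either side is invalid
    return linear_interp(depth, zmin, zmax, gtl_value, crust_value)
```

With a GTL value of 300 and a crustal value of 1000 over the default 0 m to 350 m zone, combine returns 650.0 at a depth of 175 m, which is halfway between the two.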

Querying Models Via Native Interface versus Querying UCVM

For the most part, querying any model through its native interface will yield the same material properties as UCVM. There are a few exceptions, which are noted here:

- When querying by elevation with CVM-H and any GTL, points that fall above the UCVM free surface and within the CVM-H domain are normalized to be relative to the CVM-H free surface. This is done to eliminate small discrepancies between the UCVM DEM and the CVM-H DEM.

- The same correction is performed when querying by elevation with CenCalVM and any GTL.

- When querying CenCalVM by depth, UCVM does not directly pass that point to the cencalvm interface since the definition of depth differs between UCVM and cencalvm. Instead, UCVM derives the elevation of the free surface (ground/air, ground/water) at the point of interest by drilling down through the model at that point and finding the highest octant with valid material properties. UCVM then converts the query point depth to elevation and queries cencalvm by elevation.
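
The depth-to-elevation conversion in the last item can be sketched as follows. This is an illustration only: is_valid is a hypothetical callable that reports whether the model has valid material properties at a given elevation, and the fixed-step downward scan stands in for the octant search that UCVM performs.

```python
def find_surface_elevation(is_valid, top_elev, bottom_elev, step):
    """Scan downward from top_elev and return the first valid elevation.

    Returns None if no valid elevation is found down to bottom_elev.
    """
    elev = top_elev
    while elev >= bottom_elev:
        if is_valid(elev):
            return elev  # highest sampled elevation with valid properties
        elev -= step
    return None


def depth_to_elevation(depth, surface_elev):
    """Convert a depth below the free surface into an elevation."""
    return surface_elev - depth
```

Once the free-surface elevation is known, a query at depth d becomes a query at elevation surface_elev - d, which is the form passed to the model.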

Utilities

ucvm_query

This is the command-line tool for querying CVMs. Any set of crustal and GTL velocity models may be selected and queried in order of preference. Points may be queried by (lon,lat,dep) or (lon,lat,elev) and the coordinate conversions for a particular model are handled transparently.

Usage: ucvm_query [-m models<:ifunc>] [-p user_map] [-c coordtype] [-f config] [-z zmin,zmax] < file.in

Flags:

-h This help message.

-m Comma delimited list of crustal/GTL models to query in order of preference. GTL models may optionally be suffixed with ':ifunc' to specify interpolation function.

-p User-defined map to use for elevation and vs30 data.

-c Z coordinate mode: geo-depth (gd, default), geo-elev (ge).

-f Configuration file. Default is ./ucvm.conf.

-z Optional depth range for gtl/crust interpolation.

Input format is:

lon lat Z

Output format is:

lon lat Z surf vs30 crustal cr_vp cr_vs cr_rho gtl gtl_vp gtl_vs gtl_rho cmb_algo cmb_vp cmb_vs cmb_rho

Notes:

- If running interactively, type Cntl-D to end input coord list.

Version: 12.2.0

The config file is generally ${UCVM_INSTALL_DIR}/conf/ucvm.conf, but you may pass in any custom configuration file.
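
When ucvm_query is driven from a script, the input and output formats listed above map naturally onto a few helpers. The sketch below is a hedged example: the helper names are illustrative, and it assumes that the crustal, gtl, and cmb_algo columns are text labels while every other column is numeric.

```python
# Column names in the order given by the ucvm_query usage message.
OUTPUT_FIELDS = (
    "lon", "lat", "z", "surf", "vs30",
    "crustal", "cr_vp", "cr_vs", "cr_rho",
    "gtl", "gtl_vp", "gtl_vs", "gtl_rho",
    "cmb_algo", "cmb_vp", "cmb_vs", "cmb_rho",
)
TEXT_FIELDS = {"crustal", "gtl", "cmb_algo"}  # assumed to be labels, not numbers


def format_points(points):
    """Render (lon, lat, z) tuples as the 'lon lat Z' input lines."""
    return "".join(f"{lon} {lat} {z}\n" for lon, lat, z in points)


def build_command(models, config, coord_mode="gd"):
    """Assemble the ucvm_query argument list from the documented flags."""
    return ["ucvm_query", "-f", config, "-m", ",".join(models), "-c", coord_mode]


def parse_output_line(line):
    """Split one output line into a dict keyed by column name."""
    tokens = line.split()
    if len(tokens) != len(OUTPUT_FIELDS):
        raise ValueError(f"expected {len(OUTPUT_FIELDS)} columns, got {len(tokens)}")
    return {
        name: token if name in TEXT_FIELDS else float(token)
        for name, token in zip(OUTPUT_FIELDS, tokens)
    }
```

The pieces combine with subprocess.run(build_command(...), input=format_points(...), capture_output=True, text=True), after which each line of stdout can be passed to parse_output_line.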

ucvm2etree

This utility creates a SCEC-formatted CVM Etree extracted from a set of CVMs. The CVM Etree may then be imported into UCVM and queried like any other velocity model. This confers a number of advantages:

- Several different velocity models can be combined into one composite model and distributed as a single file.

- Any number of CVM Etrees can be easily imported into UCVM without recompilation (just a config change) since Etree support is built in.

- The Etree data structure is efficient and the library provides disk caching and buffering, resulting in very fast query performance.

Two versions of this utility are provided, a serial version and an MPI version.

Serial ucvm2etree

The serial ucvm2etree is suitable for small to medium sized etrees, and is executed as follows:

Usage: ucvm2etree [-h] -f config

Flags:

-f: Configuration file

-h: Help message

Version: 12.2.0

The following is an example ucvm2etree configuration file. It can also be found in ${UCVM_INSTALL_DIR}/conf/example/ucvm2etree_example.conf:

    # Domain corners coordinates (clockwise, degrees):
    proj=geo-bilinear
    lon_0=-119.288842
    lat_0=34.120549
    lon_1=-118.354016
    lat_1=35.061096
    lon_2=-116.846030
    lat_2=34.025873
    lon_3=-117.780976
    lat_3=33.096503

    # Domain dimensions (meters):
    x-size=180000.0000
    y-size=135000.0000
    z-size=61875.0000

    # Blocks partition parameters:
    nx=32
    ny=24

    # Max freq, points per wavelength, Vs min
    max_freq=0.5
    ppwl=4.0
    vs_min=200.0

    # Max allowed size of octants in meters
    max_octsize=10000.0

    # Etree parameters and info
    title=ChinoHills_0.5Hz_200ms
    author=P_Small
    date=05/2011
    outputfile=./cmu_cvmh_chino_0.5hz_200ms.e
    format=etree

    # UCVM parameters
    #ucvmstr=cvms
    ucvmstr=cvmh
    ucvm_interp_zrange=0.0,350.0
    ucvmconf=../../conf/kraken/ucvm.conf

    # Scratch
    scratch=/lustre/scratch/patricks/scratch

    #
    # Buffering parameters used by MPI version only
    #
    # Etree buffer size in MB
    buf_etree_cache=128

    # Max octants to buffer for flat file during extraction
    buf_extract_mem_max_oct=4194304

    # Max octants to save in flat file before reporting full during extraction
    buf_extract_ffile_max_oct=16000000

    # Max octants to read from input flat file during sorting
    buf_sort_ffile_max_oct=20000000

    # Minimum number of octants between reports during merging
    buf_merge_report_min_oct=10000000

    # MPI send/recv octant buffer size during merging
    buf_merge_sendrecv_buf_oct=4096

    # Etree read/write octant buffer size during merging
    buf_merge_io_buf_oct=4194304
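
A quick sanity check of the numbers in such a configuration can catch typing mistakes before a long extraction run. The sketch below rests on two assumptions that this guide does not state explicitly: that nx and ny divide the x and y extents evenly into columns, and that the finest spacing follows the common rule of ppwl points per shortest wavelength, where the shortest wavelength is vs_min / max_freq. The function names are illustrative.

```python
def column_size(x_size, y_size, nx, ny):
    """Return the (x, y) footprint in meters of one column.

    Assumes nx and ny partition the domain extents evenly.
    """
    return x_size / nx, y_size / ny


def wavelength_spacing(vs_min, max_freq, ppwl):
    """Return the spacing in meters that gives ppwl points per shortest wavelength.

    Assumes the shortest wavelength is vs_min / max_freq.
    """
    return vs_min / (max_freq * ppwl)
```

For the example configuration above, column_size(180000.0, 135000.0, 32, 24) gives 5625 m on each side, and wavelength_spacing(200.0, 0.5, 4.0) gives 100 m.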

Parallel ucvm2etree

The MPI version is useful for large and very large etrees. It involves three programs: ucvm2etree-extract-MPI, ucvm2etree-sort-MPI, and ucvm2etree-merge-MPI. All three take the same configuration file as the one used by the serial version. The ucvm2etree-extract-MPI utility generates the set of points that are to be used in the etree, extracts those points from the specified CVMs, and saves them in a set of N flat files (where N is the process count). The ucvm2etree-sort-MPI utility sorts the points in each flat file by octant key. The third utility, ucvm2etree-merge-MPI, merges these locally sorted points into the final etree file.

Import the new CVM Etree into UCVM by adding these lines to ${UCVM_INSTALL_DIR}/conf/ucvm.conf:

newmodel_interface=model_etree
newmodel_modelpath=config.outputfile

where:

newmodel : name for your new model
config.outputfile : outputfile from config passed into ucvm2etree

The following sections describe these three programs in more detail:

ucvm2etree-extract-MPI

Divides the etree region into C columns for extraction. This is an embarrassingly parallel operation. A dispatcher (rank 0) farms out each column to a worker in a pool of N cores for extraction. Each worker queries UCVM for the points in its column and writes a flat-file formatted etree. After program execution, there are N sub-etree files, each locally unsorted. The extractor must be run on 2^Y + 1 cores where Y>0 and (2^Y) < C. The output flat file format is a list of octants (24-byte addr, 16-byte key, 12-byte payload) in arbitrary Z-order.
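The core-count rule and the flat-file record size can be checked with a short Python sketch. The helper name valid_extract_core_counts and the column count of 768 are illustrative assumptions, not values taken from the tool.

```python
# Flat-file octant record: 24-byte addr + 16-byte key + 12-byte payload.
OCTANT_RECORD_BYTES = 24 + 16 + 12


def valid_extract_core_counts(num_columns):
    """Core counts of the form 2^Y + 1 with Y > 0 and 2^Y < num_columns."""
    counts = []
    y = 1
    while 2 ** y < num_columns:
        counts.append(2 ** y + 1)  # 2^Y workers plus one dispatcher (rank 0)
        y += 1
    return counts


# Hypothetical example: a region divided into C = 768 columns.
print(OCTANT_RECORD_BYTES)
print(valid_extract_core_counts(768))
```

The extra core in each count is the dispatcher, which hands out columns but does not extract any itself.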

Since the number of points in a column depends on the minimum Vs values within that column, some columns will have high octant counts and others will have very low octant counts. Having sub-etrees that vary greatly in size is not optimal for the sorting operations that follow, so ucvm2etree-extract-MPI implements a simple octant balancing mechanism. When a worker has extracted more than X octants (16M octants by default), it reports to the dispatcher that it cannot extract any more columns and terminates. This strategy approximately balances the sub-etrees so that they may be loaded into memory by ucvm2etree-sort-MPI. In very large extractions, the dispatcher may report that all workers are full while columns remain to be extracted. In that case, increase the job size by a factor of 2 until there is room for all the columns.

ucvm2etree-sort-MPI

Sorts the sub-etrees produced by ucvm2etree-extract-MPI so that each file is in local pre-order (Z-order). Again, this is an embarrassingly parallel operation. Each rank in the job reads in one of the sub-etrees produced by the previous program, sorts the octants in Z-order, and writes the sorted octants to a new sub-etree. The sorter must be run on 2^Y cores where Y>0. The worker pool must be large enough to allow each worker to load all the octants from its assigned file into memory. By default, this octant limit is 20M octants. If a rank reports that the size of the sub-etree exceeds memory capacity, the 20M buffer size constant may be increased if memory allows, or alternatively, ucvm2etree-extract-MPI may be rerun with a larger job size to reduce the number of octants per file.

ucvm2etree-merge-MPI

Merges N locally sorted etrees in flat file format into a final, compacted etree. This is essentially a merge sort on the keys from the addresses read from the local files. The cores at the lowest level of the merge tree each read in octants from two flat files in pre-order, merge sort the two sets of addresses, then pass the locally sorted list of addresses to a parent node for additional merging. This proceeds until the points rise to rank 1 which has a completely sorted list of etree addresses. Rank 0 takes this sorted list and performs a transactional append on the final Etree. The merger must be run on 2^N cores.

The program reads in input files that are in flat file format. It can output a merged Etree in either Etree format or flat file format. Due to space considerations, however, it strips the output flat file format down to a pre-order list of octants (16-byte key, 12-byte payload). The missing addr field is redundant and can be regenerated from the key field.
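The merge tree described above can be pictured with a small single-process Python sketch. It is only an illustration: plain integers stand in for the 16-byte octant keys, Python lists stand in for the flat files, and no MPI communication is modeled. The function name merge_tree is hypothetical.

```python
import heapq


def merge_tree(sorted_files):
    """Pairwise-merge sorted key lists, level by level, until one list remains."""
    level = [list(f) for f in sorted_files]
    while len(level) > 1:
        next_level = []
        for i in range(0, len(level), 2):
            pair = level[i:i + 2]
            # Each "parent" merges the already-sorted output of its children.
            next_level.append(list(heapq.merge(*pair)))
        level = next_level
    return level[0] if level else []


# Four locally sorted "flat files" of octant keys.
files = [[1, 9, 12], [3, 4, 20], [2, 7], [5, 6, 30]]
merged = merge_tree(files)
print(merged)
```

Because every input is already sorted, each level only performs linear merges, which is why the sort step must complete before the merge step is run.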

MPI Version Buffer Values

There are a number of buffer values in the ucvm2etree configuration file that must be set accurately for extraction, sorting, and merging to take place. The values are listed below, with a description of the purpose of each one.

buf_etree_cache

Units: MB

Recommended Value: 128 usually works well; increase or decrease it as needed.

For Process: ucvm2etree-merge-MPI

Description: Used in the merging process during the etree_open call. According to the documentation of etree_open, this is more precisely defined as the "internal buffer space being allocated in megabytes". The value defined represents the memory buffer for etree traversing, finding, writing, etc.

buf_extract_mem_max_oct

Units: Number of octants

Recommended Value: 2097152

For Process: ucvm2etree-extract-MPI

Description: Used in the extraction process as the number of octants to buffer before writing to disk. It reserves, in memory, a placeholder for this number of octants. The size of each octant is 52 bytes, so the actual memory allocation is 52 * this value, in bytes.

buf_extract_ffile_max_oct

Units: Number of octants

Recommended Value: Varies. It must be greater than total output file size divided by the number of processes.

For Process: ucvm2etree-extract-MPI

Description: This value is critical. It defines the maximum output file size, in octants, that each worker process can write. If it is not large enough, the worker halts and returns a "worker is full" message. The best way to set it is to estimate how large your extraction output might be (a relatively detailed Chino Hills etree, for example, is 250 GB) and divide that number by 52 * the number of processes you have. Building that mesh on 32 processes, for example, would require a value of at least 162 million. This value is also tied to buf_sort_ffile_max_oct below, so please read that description carefully as well.
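The estimate can be reproduced with a few lines of Python. This sketch assumes that 1 GB means 2^30 bytes, which is the reading that gives a figure close to the 162 million quoted above; the function name is hypothetical.

```python
import math

OCTANT_BYTES = 52  # size of one flat-file octant record


def min_extract_ffile_max_oct(total_bytes, num_procs):
    """Smallest per-worker octant limit that fits the whole extraction."""
    return math.ceil(total_bytes / (OCTANT_BYTES * num_procs))


total_bytes = 250 * 1024 ** 3  # 250 GB, assuming 1 GB = 2^30 bytes
limit = min_extract_ffile_max_oct(total_bytes, 32)
print(limit)
```

Since the octant balancing is only approximate, it is reasonable to round the result up with some margin rather than use the exact quotient.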

buf_sort_ffile_max_oct

Units: Number of octants

Recommended Value: Must be at least equal to buf_extract_ffile_max_oct

For Process: ucvm2etree-sort-MPI

Description: Used in the sorting process to determine the maximum number of octants to read into memory. Each machine must therefore have (number of processes running on that machine) * 52 * buf_sort_ffile_max_oct bytes of memory available. In the example above, running those 32 processes on 16 dual-core machines would require 162 million * 52 * 2 bytes of RAM per machine, or about 16 GB. All sorting is done in memory, not using the file on disk.
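The per-machine memory requirement follows directly from that product. A minimal Python sketch, using the numbers from the example (the function name is hypothetical):

```python
OCTANT_BYTES = 52  # size of one flat-file octant record


def sort_memory_bytes(buf_sort_ffile_max_oct, procs_per_machine):
    """Memory one machine needs so every local sort rank can hold its file."""
    return buf_sort_ffile_max_oct * OCTANT_BYTES * procs_per_machine


# 162 million octants per rank, two ranks per dual-core machine.
need = sort_memory_bytes(162_000_000, 2)
print(need, "bytes =", need / 1e9, "GB")
```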

buf_merge_report_min_oct

Units: Number of octants

Recommended Value: Personal preference - default is 10000000.

For Process: ucvm2etree-merge-MPI

Description: This defines the number of octants that must be processed between progress reports in the merge process. These progress reports take the form of "Appended [at least buf_merge_report_min_oct] in N.NNs seconds". If you would like more frequent updates, set this value to be smaller.

buf_merge_sendrecv_buf_oct

Units: Bytes

Recommended Value: 4096

For Process: ucvm2etree-merge-MPI

Description: The number of bytes to merge per loop during the merge process. It is recommended to keep this value as is.

buf_merge_io_buf_oct

Units: Number of octants

Recommended Value: 4194304

For Process: ucvm2etree-merge-MPI

Description: Number of octants to read in and store in memory for merging. After being merged, the memory is then flushed to disk and the process repeats. Since this is a value in octants, it will require the machine to have at least buf_merge_io_buf_oct * 52 bytes of memory.

Etree Summary

In order to understand how ucvm2etree works, some knowledge of the Etree data structure is required. The fundamental unit of measure in an Etree is the tick. This is a distance expressed in meters, and is derived from the length of the longest side of the geographic region:

tick size = (max(len x, len y, len z) / 2^31) m

In essence, the geographic region is divided up into a set of 2^31 ticks.

The fundamental unit of data in an Etree is the octant. An octant contains an address (location code) and a payload (material properties). Octants are organized into a tree structure called an octree, where each node has up to eight children. Each level in this octree represents an octant size (alternatively: edge size, or resolution). Moving from level N to level N+1 in the octree increases resolution by 2x. Moving from level N to level N-1 reduces resolution by 2x. The resolution for a level can be determined with:

res from level N = (max_len / 2^N) m

The number of ticks in an octant of level N is:

ticks for level N = 2^(31-N)

And thus the octant size in level N, which agrees with the resolution relation above, is:

octant size = (2^(31-N) * (max(len x, len y, len z) / 2^31)) m

Given a particular resolution, the appropriate level to represent it in the etree can be determined with:

level N from res = (int)(log(max_len/res)/log(2)) + 1 (rounded up to next integer)

These relations are important in understanding the algorithm described in the following sections.
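The tick size, resolution, and level relations can be tried out with a small Python sketch. The function names are hypothetical; the domain dimensions are those of the example configuration file (180000 m x 135000 m x 61875 m), and 100 m is used as a sample target resolution.

```python
import math


def tick_size(len_x, len_y, len_z):
    """Length of one tick in meters: longest side divided into 2^31 ticks."""
    return max(len_x, len_y, len_z) / 2 ** 31


def res_from_level(max_len, level):
    """Octant edge size in meters at a given octree level."""
    return max_len / 2 ** level


def level_from_res(max_len, res):
    """Level whose octants are at least as fine as the requested resolution."""
    return int(math.log(max_len / res) / math.log(2)) + 1


max_len = max(180000.0, 135000.0, 61875.0)
level = level_from_res(max_len, 100.0)
print(tick_size(180000.0, 135000.0, 61875.0))
print(level, res_from_level(max_len, level))
```

For this domain, a requested resolution of 100 m maps to level 11, whose octants are about 87.9 m on a side. The level is rounded up, so the octants are never coarser than requested.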

Algorithm Description

This program uses several algorithmic approaches to increase extraction speed and minimize the amount of disk space required to store the Etree. These are described in the following sections.

Gridding of Geographic Coordinates

Gridding of the geographic bounding box is performed either using bilinear interpolation of lat/long corners, or by a UCVM projection specification (proj4 projection string, rot angle, lat0, lon0). Grid points are located at cell center.

Optimized Etree Resolution Based on Frequency, PPWL, and Minimum Vs

One of the strengths of the Etree (octree) representation is that resolution can be adjusted so that a particular region is sampled at a finer or coarser interval than surrounding areas. You can achieve high resolution in just those places where it is needed, and the rest of the region can be sampled at a much lower resolution, thereby saving considerable disk space.

With this flexibility comes the difficulty of determining what resolution is needed at all points within a meshing region to support computational seismology simulations. ucvm2etree uses an algorithm to deduce this resolution from three input parameters: the maximum frequency to support, the desired number of points per wavelength, and the minimum Vs to support. The following relation is used to derive the resolution for a region:

res = vs_min / (ppwl * max_freq)

where:

res: resolution for the region with vs_min, in meters
vs_min: Minimum Vs found in region, in meters/sec
ppwl: desired points per wavelength
max_freq: maximum frequency to support, in Hz
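As a worked example of this relation, the Python sketch below plugs in the values from the example configuration file (vs_min=200.0, ppwl=4.0, max_freq=0.5). The second call uses a hypothetical stiffer region with a minimum Vs of 3200 m/s. The function name is an assumption for illustration.

```python
def required_resolution(vs_min, ppwl, max_freq):
    """Grid spacing in meters needed to resolve max_freq at ppwl points per wavelength."""
    return vs_min / (ppwl * max_freq)


soft = required_resolution(200.0, 4.0, 0.5)    # slow near-surface material
stiff = required_resolution(3200.0, 4.0, 0.5)  # hypothetical deep, fast material
print(soft, stiff)
```

The slow material needs 100 m spacing while the fast material can be sampled at 1600 m, which is the source of the disk space savings.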

Using the above relation, the 3D space is decomposed into small columns, and the resolution in each column is tailored to the vs values found at each depth. The algorithm can be summarized as follows:

- Divide the region into a set of x,y columns, where the length of each column equals its width.

- For each column:

- Starting at depth = 0m, extract a 2D grid for the column at the resolution needed to support the max frequency, points/wavelength, and minimum Vs, with a depth that is one-half the current octant edge size (that is, Z axis is cell center as well).

- If the local minimum Vs in this 2D grid allows for a lower resolution, and the current z value is divisible by the lower resolution, requery the CVM at the lower resolution. Otherwise, if the local min Vs in this grid needs a higher resolution, increase the resolution to what is required (up to the maximum resolution dictated by the configured global minimum Vs) and requery the CVM.

- Write this layer to a flat file (a single file when run serially, or a file local to this core when run in parallel)

- Step the depth down by the resolution of this 2D grid and loop to the next layer in the column.

- Loop to the next column
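The per-column loop above can be sketched in Python for a single column. This is a simplified illustration under stated assumptions, not the ucvm2etree implementation: vs_at is a hypothetical stand-in for querying UCVM for the local minimum Vs of the 2D grid, the Vs profile is invented, octant sizes are tracked in units of the finest octant edge, and nothing is written to a flat file.

```python
import math


def level_from_res(max_len, res):
    # Level relation quoted in the Etree summary.
    return int(math.log(max_len / res) / math.log(2)) + 1


def extract_column(vs_at, max_len, z_size, vs_min, ppwl, max_freq, max_octsize):
    """Return (top_depth_m, edge_m) for each layer of one column."""
    finest_level = level_from_res(max_len, vs_min / (ppwl * max_freq))
    unit = max_len / 2 ** finest_level  # finest octant edge, in meters

    def allowed_units(vs):
        # Largest edge, in finest-edge units, that still resolves this Vs.
        level = level_from_res(max_len, vs / (ppwl * max_freq))
        level = min(finest_level, max(0, level))
        return 2 ** (finest_level - level)

    def centre_vs(z, edge):
        # Query at cell center: half an octant edge below the layer top.
        return vs_at((z + edge / 2) * unit)

    layers, z, edge = [], 0, 1  # z and edge are in finest-edge units
    while z * unit < z_size:
        # Refine while the Vs at the layer center needs a smaller octant.
        while edge > 1 and allowed_units(centre_vs(z, edge)) < edge:
            edge //= 2
        # Coarsen while z is aligned, max_octsize is respected, and Vs permits.
        while True:
            coarse = edge * 2
            if coarse * unit > max_octsize or z % coarse != 0:
                break
            if allowed_units(centre_vs(z, coarse)) < coarse:
                break
            edge = coarse
        layers.append((z * unit, edge * unit))
        z += edge  # step down by the resolution of this layer
    return layers


def vs_profile(depth_m):
    # Invented profile: Vs grows linearly with depth and is capped at 4000 m/s.
    return min(200.0 + 2.0 * depth_m, 4000.0)


layers = extract_column(vs_profile, 180000.0, 61875.0, 200.0, 4.0, 0.5, 10000.0)
print(len(layers), layers[:4], layers[-1])
```

With this profile the layers start thin near the surface and thicken with depth as Vs increases, so far fewer octants are stored than a uniform grid at the finest resolution would need.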