CyberShake Study 21.12

Revision as of 00:55, 3 December 2021

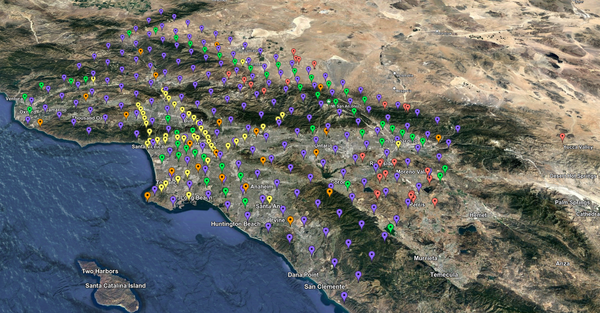

CyberShake 21.12 is a computational study that uses a new ERF, generated from an RSQSim catalog, with CyberShake. We plan to calculate results for 335 sites in Southern California using the RSQSim ERF, a minimum Vs of 500 m/s, and a frequency of 1 Hz. We will use the CVM-S4.26.M01 model and the GPU implementation of AWP-ODC-SGT, enhanced from the BBP verification testing. We will begin by generating all sets of SGTs on Summit, then post-process them on a combination of Summit and Frontera.

Status

This study is in the pre-production phase. Production is scheduled to begin in mid-December 2021.

Data Products

Science Goals

The science goals for this study are:

- Calculate a regional CyberShake model using an alternative, RSQSim-derived ERF.

- Compare results from an RSQSim ERF to results using a UCERF2 ERF (Study 15.4).

Technical Goals

The technical goals for this study are:

- Perform a study using OLCF Summit as a key compute resource.

- Evaluate the performance of the new workflow submission host, shock-carc.

- Use Globus Online for staging of output data products.

ERF

The ERF was generated from an RSQSim catalog, with the following parameters:

- 715kyr catalog (the first 65k years of events were dropped, so that every fault's first event is excluded)

- 220,927 earthquakes with M6.5+

- All events have equal probability, 1/715k

Additional details are available on the catalog's metadata page.
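As a quick arithmetic illustration of the catalog parameters listed above, the sketch below derives the per-event rate and the aggregate rate of M6.5+ events. It assumes the stated probability of 1/715k is an annual probability per event; the function and constant names are hypothetical and are not part of any CyberShake code.

```python
# Figures quoted in the ERF parameter list.
CATALOG_LENGTH_YEARS = 715_000   # 715 kyr catalog
NUM_EVENTS = 220_927             # M6.5+ earthquakes in the catalog


def per_event_annual_probability(catalog_length_years: int) -> float:
    """Equal probability assigned to every event (assumed to be per year)."""
    return 1.0 / catalog_length_years


def aggregate_annual_rate(num_events: int, catalog_length_years: int) -> float:
    """Average number of catalog events per year, summed over all events."""
    return num_events / catalog_length_years


p_event = per_event_annual_probability(CATALOG_LENGTH_YEARS)
rate_all = aggregate_annual_rate(NUM_EVENTS, CATALOG_LENGTH_YEARS)
print(f"per-event probability: {p_event:.3e}")
print(f"aggregate M6.5+ rate:  {rate_all:.3f} events/yr")
```

Under that assumption, the catalog averages roughly one M6.5+ event every three years across the whole fault system.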

Sites

We will run a list of 335 sites, taken from the site list that was used in other Southern California studies.

Velocity Model

We will use CVM-S4.26.M01.

To better represent the near-surface layer, we will populate the velocity parameters for the surface point by querying the velocity model at a depth of (grid spacing)/4. For this study, the grid spacing is 100m, so we will query UCVM at a depth of 25m and use that value to populate the surface grid point. The rationale is that the media parameters at the surface grid point are supposed to represent the material properties for [0, 50m], and this is better represented by using the value at 25m than the value at 0m.
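The depth rule described above can be sketched in a few lines. This is a minimal illustration, not the study's mesh-generation code: the function names are hypothetical, and it assumes that every grid point below the surface is still queried at its nominal depth (index times grid spacing), which the text implies but does not state.

```python
def surface_query_depth_m(grid_spacing_m: float) -> float:
    """Depth at which to query the velocity model for the surface grid point.

    The surface point stands for the half cell [0, spacing/2], so its
    midpoint, spacing/4, is used instead of a depth of 0.
    """
    return grid_spacing_m / 4.0


def query_depths_m(grid_spacing_m: float, num_points: int) -> list:
    """Query depths for the top `num_points` grid points in one column.

    Assumption: only the surface point is shifted; deeper points keep
    their nominal depth of (index * grid spacing).
    """
    depths = [k * grid_spacing_m for k in range(num_points)]
    if depths:
        depths[0] = surface_query_depth_m(grid_spacing_m)
    return depths


print(query_depths_m(100.0, 4))
```

For the 100 m spacing used in this study, the surface point is queried at 25 m, matching the value given in the text.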

Performance Enhancements (over Study 18.8)

- Consider separating SGT and PP workflows in auto-submit tool to better manage the number of each, for improved reservation utilization.

- Create a read-only way to look at the CyberShake Run Manager website.

- Consider reducing levels of the workflow hierarchy, thereby reducing load on shock.

- Establish clear rules and policies about reservation usage.

- Determine advance plan for SGTs for sites which require fewer (200) GPUs.

- Determine advance plan for SGTs for sites which exceed memory on nodes.

- Create new velocity model ID for composite model, capturing metadata.

- Verify all Java processes grab a reasonable amount of memory.

- Clear disk space before study begins to avoid disk contention.

- Add stress test before beginning study, for multiple sites at a time, with cleanup.

- In addition to disk space, check local inode usage.

- If submitting to multiple reservations, make sure enough jobs are eligible to run that no reservation is starved.

- If running primarily SGTs for a while, make sure they don't get deleted due to quota policies.
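Two of the items above, clearing disk space and checking local inode usage, lend themselves to a small pre-flight check. The sketch below is one possible way to do it with Python's standard `os.statvfs` call (Unix only). The function names and the 90% inode threshold are hypothetical choices for illustration, not existing CyberShake tooling.

```python
import os


def filesystem_headroom(path: str) -> dict:
    """Return free bytes and the fraction of inodes in use for `path`."""
    st = os.statvfs(path)
    free_bytes = st.f_bavail * st.f_frsize  # space available to non-root users
    if st.f_files > 0:
        used = 1.0 - st.f_favail / st.f_files
        inode_used_fraction = min(1.0, max(0.0, used))
    else:
        # Some filesystems do not report inode counts; treat as unknown/unused.
        inode_used_fraction = 0.0
    return {"free_bytes": free_bytes, "inode_used_fraction": inode_used_fraction}


def has_headroom(path: str, min_free_bytes: int,
                 max_inode_used_fraction: float = 0.9) -> bool:
    """True if `path` has enough free space and is not close to inode exhaustion."""
    info = filesystem_headroom(path)
    return (info["free_bytes"] >= min_free_bytes
            and info["inode_used_fraction"] <= max_inode_used_fraction)


if __name__ == "__main__":
    # Example: check the current directory for 1 GB of free space.
    print(filesystem_headroom("."))
    print(has_headroom(".", min_free_bytes=10**9))
```

A check like this could be run against the workflow log directory and scratch areas before the stress test and again before production starts.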

Output Data Products

File-based data products

We plan to produce the following data products which will be stored at CARC:

- Seismograms: 2-component seismograms, 6000 timesteps (300 sec) each

- PSA: X and Y spectral acceleration at 44 periods (10, 9.5, 9, 8.5, 8, 7.5, 7, 6.5, 6, 5.5, 5, 4.8, 4.6, 4.4, 4.2, 4, 3.8, 3.6, 3.4, 3.2, 3, 2.8, 2.6, 2.4, 2.2, 2, 1.66667, 1.42857, 1.25, 1.11111, 1, .66667, .5, .4, .33333, .285714, .25, .22222, .2, .16667, .142857, .125, .11111, .1 sec)

- RotD: RotD50, the RotD50 azimuth, and RotD100 at 22 periods (1.0, 1.2, 1.4, 1.5, 1.6, 1.8, 2.0, 2.2, 2.4, 2.6, 2.8, 3.0, 3.5, 4.0, 4.4, 5.0, 5.5, 6.0, 6.5, 7.5, 8.5, 10.0 sec)

- Durations: for X and Y components, energy integral, Arias intensity, cumulative absolute velocity (CAV), and, for both velocity and acceleration, the 5-75%, 5-95%, and 20-80% durations.

Database data products

We plan to store the following data products in the database:

- PSA: none

- RotD: RotD50 and RotD100 at 10, 7.5, 5, 4, 3, and 2 sec.

- Durations: acceleration 5-75% and 5-95% for X and Y components

Computational and Data Estimates

Computational Estimates

We derived these estimates by scaling from site USC (the average site has 3.8% more events and a volume 9.7% larger).

| | UCVM runtime | UCVM nodes | SGT runtime | SGT nodes | Other SGT workflow jobs | Summit Total |
|---|---|---|---|---|---|---|
| USC | 372 sec | 80 | 2628 sec | 67 | 1510 node-sec | 106.5 node-hrs |
| Average (est) | 408 sec | 80 | 2883 sec | 67 | 1550 node-sec | 116.8 node-hrs |

Adding 10% overrun margin gives us an estimate of 43k node-hours for SGT calculation.
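The SGT estimate can be reproduced with the short calculation below. It is a sketch under stated assumptions: the per-site totals in the table only match if the SGT job is counted twice, which we take to mean one run per SGT component (that factor is our inference, not something stated in the text), and the site count of 335 comes from the Sites section. All names are hypothetical.

```python
NUM_SITES = 335          # from the Sites section
OVERRUN_MARGIN = 0.10    # 10% margin quoted in the text
SGT_COMPONENTS = 2       # assumption: SGT job runs once per component


def sgt_workflow_node_hours(ucvm_sec: float, ucvm_nodes: int,
                            sgt_sec: float, sgt_nodes: int,
                            other_node_sec: float,
                            sgt_components: int = SGT_COMPONENTS) -> float:
    """Summit node-hours for one site's SGT workflow."""
    node_sec = (ucvm_sec * ucvm_nodes
                + sgt_components * sgt_sec * sgt_nodes
                + other_node_sec)
    return node_sec / 3600.0


usc_sgt_hours = sgt_workflow_node_hours(372, 80, 2628, 67, 1510)
avg_sgt_hours = sgt_workflow_node_hours(408, 80, 2883, 67, 1550)
study_sgt_hours = avg_sgt_hours * NUM_SITES * (1 + OVERRUN_MARGIN)

print(f"USC:     {usc_sgt_hours:.1f} node-hrs")
print(f"Average: {avg_sgt_hours:.1f} node-hrs")
print(f"Study:   {study_sgt_hours / 1000:.0f}k node-hrs")
```

With these inputs the per-site values round to the table's Summit totals, and the study total rounds to the 43k node-hours quoted above.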

| | DirectSynth runtime | DirectSynth nodes | Summit Total |
|---|---|---|---|
| USC | 1081 sec | 36 | 10.8 node-hrs |
| Average (est) | 1122 sec | 36 | 11.2 node-hrs |

Adding 10% overrun margin gives an estimate of 4.1k node-hours for post-processing.
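The post-processing figures follow from runtime times node count, as sketched below. The units are an assumption inferred from the arithmetic (runtime in seconds, totals in node-hours), the site count of 335 comes from the Sites section, and the names are hypothetical.

```python
NUM_SITES = 335          # from the Sites section
OVERRUN_MARGIN = 0.10    # 10% margin quoted in the text


def node_hours(runtime_sec: float, nodes: int) -> float:
    """Node-hours consumed by one job of `runtime_sec` seconds on `nodes` nodes."""
    return runtime_sec * nodes / 3600.0


usc_pp_hours = node_hours(1081, 36)
avg_pp_hours = node_hours(1122, 36)
study_pp_hours = avg_pp_hours * NUM_SITES * (1 + OVERRUN_MARGIN)

print(f"USC:     {usc_pp_hours:.1f} node-hrs")
print(f"Average: {avg_pp_hours:.1f} node-hrs")
print(f"Study:   {study_pp_hours:.0f} node-hrs")
```

The per-site values round to 10.8 and 11.2 node-hours, and the study total with margin comes to a little over 4,100 node-hours.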

Data Estimates

Summit

| | Velocity mesh | SGTs size | Temp data | Output data |
|---|---|---|---|---|
| USC | 243 GB | 196 GB | 439 GB | 3.4 GB |
| Average | 267 GB | 203 GB | 470 GB | 3.5 GB |
| Total | 87 TB | 66 TB | 153 TB | 1.2 TB |
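The Total row can be approximated by multiplying the Average row by the number of sites. The sketch below assumes 335 sites (from the Sites section) and 1 TB = 1024 GB; the latter is our inference, chosen because it brings the results close to the table. The exact rounding used in the table is not known, so the values agree only to within about 1 TB. All names are hypothetical.

```python
NUM_SITES = 335      # from the Sites section
GB_PER_TB = 1024     # assumption: binary units

# "Average" row of the Summit data table, in GB per site.
AVERAGE_GB = {
    "velocity_mesh": 267,
    "sgts": 203,
    "temp": 470,      # velocity mesh + SGTs
    "output": 3.5,
}


def study_total_tb(per_site_gb: float, num_sites: int = NUM_SITES) -> float:
    """Scale a per-site size in GB to a study-wide size in TB."""
    return per_site_gb * num_sites / GB_PER_TB


totals_tb = {name: study_total_tb(gb) for name, gb in AVERAGE_GB.items()}
for name, tb in totals_tb.items():
    print(f"{name:14s} {tb:7.1f} TB")
```

Temp data is the sum of the velocity mesh and SGT sizes, which is why it dominates the Summit storage requirement.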

CARC

We estimate 1.2 TB in output data, which will be transferred back to CARC.

shock-carc

The study should use approximately 200 GB in workflow log space on /home/shock. This drive has approximately 1.7 TB free.

moment database

Lessons Learned

Performance Metrics

Production Checklist

Science:

- Confirm that ERF 62 test produces results which closely match ERF 61

- Restore improvements to codes since ERF 58, and rerun USC for ERF 62

- Create prioritized site list.

Technical:

- Do we want to approach OLCF for our usual production requests? We've previously asked for 8 jobs ready to run, 5 jobs in bin 5.

- To be able to bundle jobs, fix issue with Summit glideins.

- To run post-processing, resolve issues using GO to transfer data back to /project at CARC.

- Tag code

- Modify job sizes and runtimes.

- Test auto-submit script.

- Prepare pending file.

- Create XML file describing study for web monitoring tool.

- Get usage stats for Summit.

- Check cronjob on Summit for monitoring jobs.

- Call with OLCF staff?

- Activate script for monitoring x509 certificate.

- Modify workflows to not insert or calculate curves for PSA data.