UCERF3-ETAS Documentation

Here's a spreadsheet that extrapolates from tests equivalent to a single experiment (of the 3986 in your proposed simulation window). Here, an "experiment" is a set of simulations of seismicity starting at a particular date; there would be one experiment for each day in the time window (though the simulations could extend beyond that day). We are open to other terminology; this is just a first pass.

https://docs.google.com/spreadsheets/d/1CyecwHhId8itWqUMeVvyFbEOcJxdNzPWTFktsyKTmVM/edit?usp=sharing

The key variables are:

- How many simulations do we do for each experiment? 10k? 20k? 100k? Other? Wall clock time scales fairly linearly with this, and the more we have the better we can resolve the spatial distributions and probabilities of rare events.

- Should the simulated catalogs just be for that single day, or do we let them run longer (a week, month, year)? This has the biggest effect on total output data size. In theory it also affects runtime, but not by much: most of the time is spent on spin-up, so running each simulation longer adds little.

The benchmark ran on our dedicated hardware at USC's CARC. For the shortest case, 10k 1-day simulations per experiment, we should be able to do it in under 30 days (noting that this is just a single benchmark calculation and actual numbers will vary). It produces a pretty negligible amount of output data, so no worries there. In this case, I would basically set it up to run continuously and then just cancel and later resubmit the jobs whenever there are higher-priority jobs that we need to run instead.
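As a rough planning aid, the linear scaling with simulation count can be sketched as below. This is only a back-of-the-envelope sketch: it takes the "under 30 days" figure for 10k simulations per experiment as an upper-bound baseline, assumes strictly linear scaling (the note above only says "fairly linearly"), and the function and constant names are hypothetical, not part of any UCERF3-ETAS tooling.

```python
# Back-of-the-envelope wall clock extrapolation.
# Assumptions (not measured values): strictly linear scaling with simulation
# count, and the "under 30 days" figure used as an upper-bound baseline.

NUM_EXPERIMENTS = 3986          # one experiment per day in the proposed window
BASELINE_SIMS = 10_000          # simulations per experiment in the benchmark case
BASELINE_TOTAL_DAYS = 30.0      # upper bound quoted for the 10k, 1-day case


def total_wall_clock_days(sims_per_experiment,
                          baseline_days=BASELINE_TOTAL_DAYS,
                          baseline_sims=BASELINE_SIMS):
    """Scale the baseline total runtime linearly with simulations per experiment."""
    if sims_per_experiment <= 0 or baseline_sims <= 0:
        raise ValueError("simulation counts must be positive")
    return baseline_days * sims_per_experiment / baseline_sims


def minutes_per_experiment(total_days, num_experiments=NUM_EXPERIMENTS):
    """Average wall clock minutes available per experiment for a given total."""
    return total_days * 24.0 * 60.0 / num_experiments


if __name__ == "__main__":
    for sims in (10_000, 20_000, 100_000):
        days = total_wall_clock_days(sims)
        print(f"{sims:>7} sims/experiment: up to ~{days:.0f} days total, "
              f"~{minutes_per_experiment(days):.1f} min per experiment")
```

Because the baseline is an upper bound from a single benchmark, the outputs are best read as rough ceilings rather than predictions.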

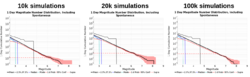

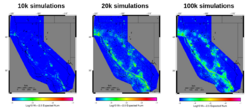

However, we'll probably need more than 10k simulations each, especially if we want to do any spatial tests. Here are reports for the 1 day simulations with various simulation counts so you can get an idea of the statistics that are possible with each (noting that these are for an arbitrary time period: today):

The most useful sections of the report for this discussion are probably "1 Day Magnitude Number Distribution" and "Gridded Nucleation." The former shows rates and sampling uncertainties as a function of magnitude, and the latter shows the spatial component (specifically how sparse it can be). Here are screenshots of those figures organized by simulation count for easier viewing:

[[Image:u3_etas_mags.png]] [[Image:u3_etas_ca_maps.png]]
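To get a feel for how sampling uncertainty shrinks with simulation count, here is a minimal sketch using the standard binomial standard error for an estimated probability. It assumes each catalog is an independent trial that either does or does not contain the event of interest; the probability value used is purely illustrative and is not taken from the reports.

```python
import math


def relative_standard_error(p, num_catalogs):
    """Relative binomial standard error of a probability estimated from
    num_catalogs independent catalogs, each containing the event with
    probability p."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in (0, 1]")
    if num_catalogs <= 0:
        raise ValueError("num_catalogs must be positive")
    return math.sqrt((1.0 - p) / (num_catalogs * p))


if __name__ == "__main__":
    illustrative_p = 1e-3  # hypothetical 1-day probability, for illustration only
    for n in (10_000, 20_000, 100_000):
        rse = relative_standard_error(illustrative_p, n)
        print(f"N = {n:>7}: relative standard error ~ {rse:.1%}")
```

The error falls as one over the square root of the catalog count, so going from 10k to 100k simulations narrows it by a factor of about 3.2 at roughly ten times the runtime.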

In the spatial plots, note that even with 100k simulations many grid cells will have zero events. These plots are also extremely outlier-dominated, as a few catalogs triggering a few large events with thousands of aftershocks drown out the rates of other events. That's why the faults light up here; think long M8's followed by thousands of aftershocks in a few catalogs, not lots of small events happening randomly along the SAF in every catalog.
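Both effects can be illustrated with a small sketch. It assumes catalogs are independent and uses entirely synthetic numbers (a made-up per-catalog probability and a made-up set of event counts), so it shows the arithmetic only and says nothing about actual UCERF3-ETAS output.

```python
def prob_cell_empty(p_per_catalog, num_catalogs):
    """Probability that a grid cell has zero events across all catalogs, if each
    independent catalog puts at least one event in it with probability p_per_catalog."""
    if not 0.0 <= p_per_catalog <= 1.0:
        raise ValueError("p_per_catalog must be in [0, 1]")
    return (1.0 - p_per_catalog) ** num_catalogs


def mean_count(counts):
    """Mean number of events per catalog (sensitive to a few extreme catalogs)."""
    return sum(counts) / len(counts)


def fraction_nonzero(counts):
    """Fraction of catalogs with at least one event (insensitive to outliers)."""
    return sum(1 for c in counts if c > 0) / len(counts)


if __name__ == "__main__":
    # Hypothetical cell hit in 1 of every 100,000 catalogs on average.
    for n in (10_000, 100_000):
        print(f"N = {n:>7}: P(cell empty) ~ {prob_cell_empty(1e-5, n):.2f}")

    # Synthetic cell near a fault: 2 of 10,000 catalogs contain a large
    # sequence with 5,000 events each; all other catalogs have none.
    synthetic_counts = [0] * 9_998 + [5_000, 5_000]
    print("mean events per catalog:", mean_count(synthetic_counts))
    print("fraction of catalogs with any event:", fraction_nonzero(synthetic_counts))
```

In the synthetic case the mean is one event per catalog even though only 0.02% of catalogs have any events in the cell, which is the sense in which a rate map can be dominated by a handful of catalogs.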

If we decide that we need more simulations, we will need either to be really patient or to move to a bigger machine, in which case maybe Phil can chime in on available computing allocations.

Storage doesn't look like it'll be a huge issue unless we decide that we want long catalogs for each daily experiment.