Difference between revisions of "CyberShake Study 14.2"


Revision as of 20:01, 30 January 2014

CyberShake Study 14.2 is a computational study to calculate physics-based probabilistic seismic hazard curves under 4 different conditions: CVM-S4.26 with CPU, CVM-S4.26 with GPU, a 1D model with CPU, and CVM-H without a GTL with GPU. It uses the Graves and Pitarka (2010) rupture variations and the UCERF2 ERF. Both the SGT calculations and the post-processing will be done on Blue Waters. The goal is to calculate hazard curves for the standard Southern California site list (286 sites) used in previous CyberShake studies, so that we can produce comparison curves and maps and understand the impact of the SGT codes and velocity models on the CyberShake seismic hazard.


Computational Status

Study 14.2 is scheduled to begin the week of February 3, 2014.

Data Products

Goals

Science Goals

- Calculate a hazard map using CVM-S4.26.

- Calculate a hazard map using CVM-H without a GTL.

- Calculate a hazard map using a 1D model obtained by averaging.

Technical Goals

- Show that Blue Waters can be used to perform both the SGT and post-processing phases.

- Compare time-to-solution with Study 13.4. We define time-to-solution to be equivalent to the makespan of all of the workflows; that is, the time that elapses between when the first workflow is submitted (to HTCondor for execution) and when all jobs in all workflows have successfully completed execution, which includes calculation of all hazard curves. This metric includes any system downtime or workflow stoppages.

- Compare the performance and queue times when using AWP-ODC-SGT CPU vs AWP-ODC-SGT GPU codes.
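
The time-to-solution definition above can be illustrated with a minimal sketch. The function name and the timestamps are hypothetical and are not taken from the study's tooling; the sketch only assumes that workflow submission times and job completion times are available as datetimes.

```python
from datetime import datetime

def makespan(workflow_submit_times, job_end_times):
    """Elapsed time from the first workflow submission to the last job completion.

    Downtime and workflow stoppages are included automatically, because only
    the two endpoints of the interval are used.
    """
    if not workflow_submit_times or not job_end_times:
        raise ValueError("need at least one submit time and one end time")
    return max(job_end_times) - min(workflow_submit_times)

# Hypothetical timestamps, for illustration only.
submits = [datetime(2014, 2, 3, 9, 0), datetime(2014, 2, 3, 12, 30)]
ends = [datetime(2014, 2, 4, 8, 0), datetime(2014, 2, 5, 17, 45)]

span = makespan(submits, ends)
print(span)
```

Because the metric depends only on the earliest submission and the latest completion, it can be compared across studies without accounting for idle periods in between.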

Verification

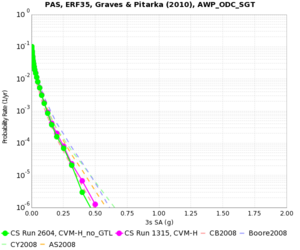

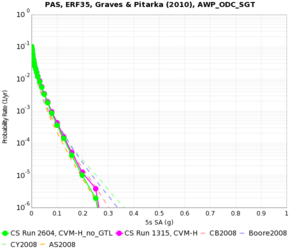

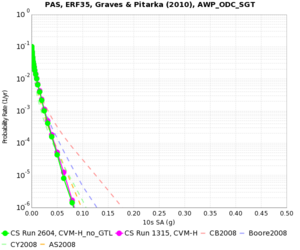

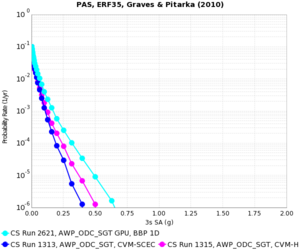

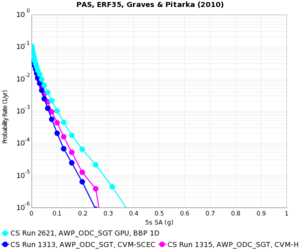

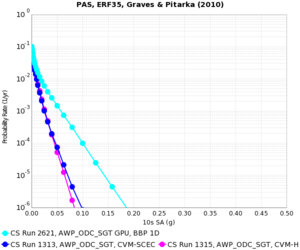

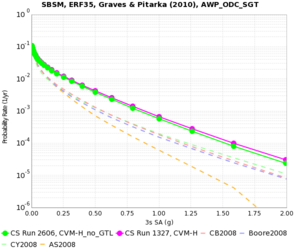

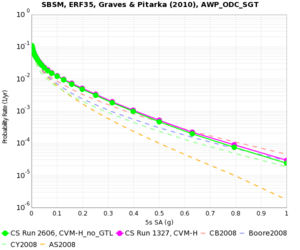

For verification, we will calculate hazard curves for PAS, WNGC, USC, and SBSM under all 4 conditions.

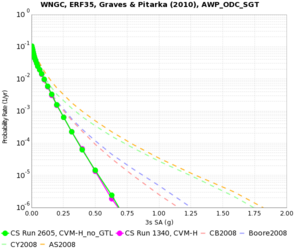

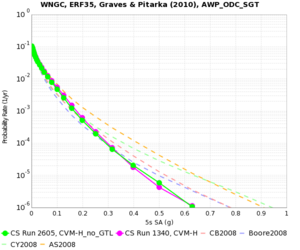

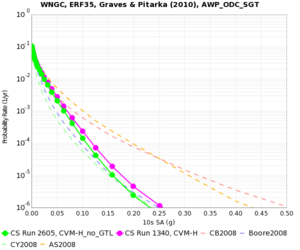

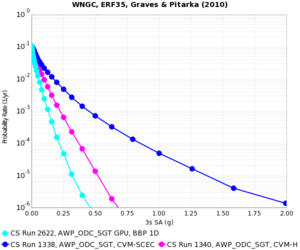

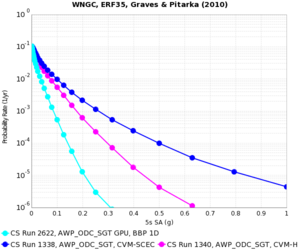

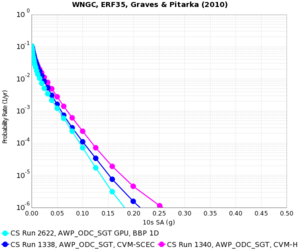

WNGC

| | 3s | 5s | 10s |
|---|---|---|---|
| CVM-H (no GTL) | | | |
| 1D | | | |
| CVM-S4.26 | | | |

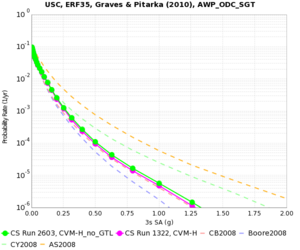

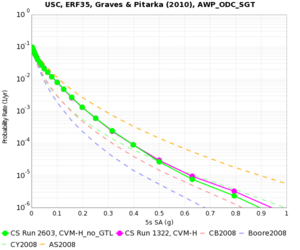

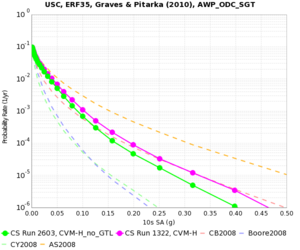

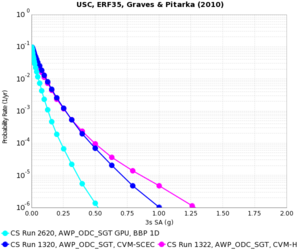

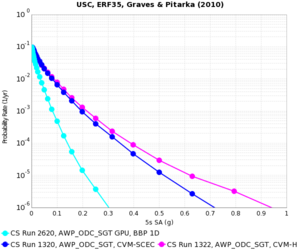

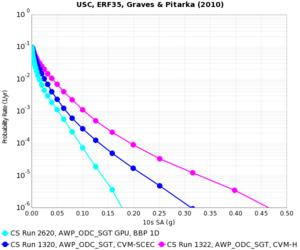

USC

| | 3s | 5s | 10s |
|---|---|---|---|
| CVM-H (no GTL) | | | |
| 1D | | | |
| CVM-S4.26 | | | |

PAS

| | 3s | 5s | 10s |
|---|---|---|---|
| CVM-H (no GTL) | | | |
| 1D | | | |
| CVM-S4.26 | | | |

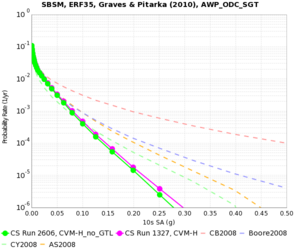

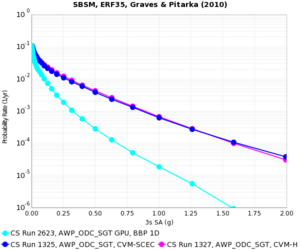

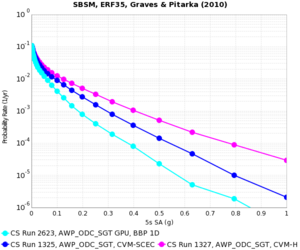

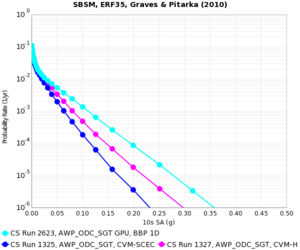

SBSM

| | 3s | 5s | 10s |
|---|---|---|---|
| CVM-H (no GTL) | | | |
| 1D | | | |
| CVM-S4.26 | | | |

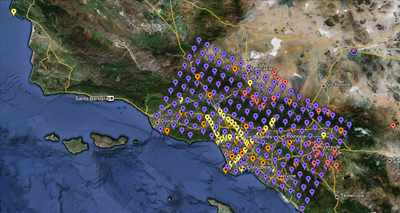

Sites

We are proposing to run 286 sites around Southern California. Those sites include 46 points of interest, 27 precarious rock sites, 23 broadband station locations, 43 sites on a 20 km grid, and 147 sites on a 10 km grid. All of them fall within the Southern California box except for Diablo Canyon and Pioneer Town. You can get a CSV file listing the sites here. A KML file listing the sites is available here.
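
As a quick consistency check, the category counts listed above can be summed to confirm that they account for all 286 sites. The dictionary keys are just labels chosen for this sketch.

```python
# Site counts by category, as listed in the paragraph above.
site_counts = {
    "points of interest": 46,
    "precarious rock sites": 27,
    "broadband station locations": 23,
    "20 km gridded sites": 43,
    "10 km gridded sites": 147,
}

total = sum(site_counts.values())
print(total)
```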

Performance Enhancements (over Study 13.4)

- Added a cron job on shock to monitor the proxy certificates and send email when the certificates have <24 hours remaining.

- Switched to running a single job to generate and write the velocity mesh, as opposed to separate jobs for generating and merging into 1 file.

- Switched to SeisPSA_multi, which synthesizes multiple rupture variations per invocation. We plan to use a factor of 5, so only ~83,000 invocations will be needed. This reduces I/O, since the extracted SGT files no longer have to be read in for each rupture variation.

- Increasing the X and Y mesh dimensions to be multiples of 200, then choosing PX and PY for the GPU code so that each processor has 200x200x200 grid points results in 95% slower runtimes, but 8.6% fewer SUs.

- Increasing the number of processors so that each CPU is responsible for 50x50x50 grid points results in 73% faster runtimes and 31% fewer SUs.
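
The arithmetic behind two of these items can be sketched as follows. This is a minimal illustration, not the study's actual code: the function names and the example mesh dimensions (1350 x 2725) are hypothetical, and the rupture variation count of 415,000 is only inferred from the ~83,000 invocations at a factor of 5 quoted above.

```python
def pad_to_multiple(n, multiple=200):
    """Round n up to the nearest multiple (integer ceiling division)."""
    return -(-n // multiple) * multiple

def gpu_decomposition(nx, ny, block=200):
    """Pad X and Y to multiples of `block`, then pick PX and PY so that
    each processor owns a block x block footprint in the X-Y plane."""
    nx_padded = pad_to_multiple(nx, block)
    ny_padded = pad_to_multiple(ny, block)
    return nx_padded, ny_padded, nx_padded // block, ny_padded // block

def invocations_needed(n_rupture_variations, factor=5):
    """Number of invocations when each one handles `factor` rupture variations."""
    return -(-n_rupture_variations // factor)

# Hypothetical mesh dimensions, for illustration only.
nx_padded, ny_padded, px, py = gpu_decomposition(1350, 2725)
print(nx_padded, ny_padded, px, py)

# 415,000 is an assumption inferred from ~83,000 invocations x 5.
print(invocations_needed(415000, factor=5))
```

The same `block` parameter could be set to 50 to explore the 50x50x50 per-CPU layout, although the text does not say whether the CPU meshes are padded in the same way.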