Difference between revisions of "CyberShake Study 18.8"


| Line 1: | Line 1: | ||

| − | CyberShake 18. | + | CyberShake 18.8 is a computational study to perform CyberShake in a new region, the extended Bay Area. We plan to use a combination of 3D models (USGS Bay Area detailed and regional, CVM-S4.26.M01, CCA-06) with a minimum Vs of 500 m/s and a frequency of 1 Hz. We will use the GPU implementation of AWP-ODC-SGT, the Graves & Pitarka (2014) rupture variations with 200m spacing and uniform hypocenters, and the UCERF2 ERF. The SGT and post-processing calculations will each be run on both NCSA Blue Waters and OLCF Titan. |

== Status == | == Status == | ||

| − | This study is | + | This study is complete. |

| + | |||

| + | The study began on Friday, August 17, 2018 at 20:17:46 PDT. | ||

| + | |||

| + | It was paused on Monday, October 15, 2018 at about 6:53 PDT, then resumed on Thursday, January 17, 2019 at 11:21:26 PST. | ||

| + | |||

| + | The study was completed on Thursday, March 28, 2019, at 9:04:38 PDT. | ||

| + | |||

| + | == Data Products == | ||

| + | |||

| + | Hazard maps from this study are available [http://opensha.usc.edu/ftp/kmilner/markdown/cybershake-analysis/study_18_8/hazard_maps here]. | ||

| + | |||

| + | Statewide maps are available [http://opensha.usc.edu/ftp/kmilner/cybershake/study_18.8/statewide_maps/ here]. | ||

| + | |||

| + | Hazard curves produced from Study 18.8 have the Hazard_Dataset_ID 87. | ||

| + | |||

| + | Individual runs can be identified in the CyberShake database by searching for runs with Study_ID=9. | ||

| + | |||

| + | Selected data products can be viewed at [[CyberShake Study 18.8 Data Products]]. | ||

== Science Goals == | == Science Goals == | ||

| Line 11: | Line 29: | ||

*Expand CyberShake to the Bay Area. | *Expand CyberShake to the Bay Area. | ||

*Calculate CyberShake results with the USGS Bay Area velocity model as the primary model. | *Calculate CyberShake results with the USGS Bay Area velocity model as the primary model. | ||

| + | *Calculate CyberShake results at selected locations with Vs min = 250 m/s. | ||

== Technical Goals == | == Technical Goals == | ||

| Line 18: | Line 37: | ||

== Sites == | == Sites == | ||

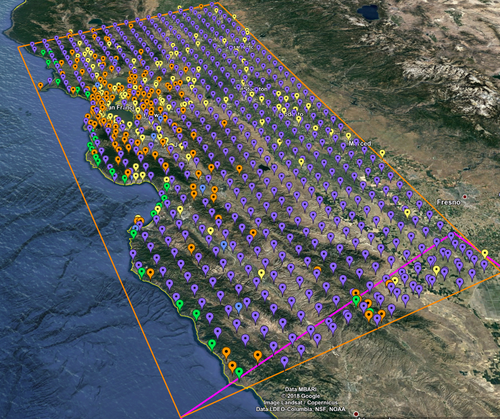

| − | [[File:Study_18_3_sites.png|thumb|500px|Map showing Study 18.3 sites ( | + | [[File:Study_18_3_sites.png|thumb|500px|Map showing Study 18.3 sites (sites of interest=yellow, CISN stations=orange, missions=blue, 10 km grid=purple, 5 km grid=green). The Bay Area box is in orange and the Study 17.3 box is in magenta.]] |

The Study 18.3 box is 180 x 390 km, with the long edge rotated 27 degrees counter-clockwise from vertical. The corners are defined to be: | The Study 18.3 box is 180 x 390 km, with the long edge rotated 27 degrees counter-clockwise from vertical. The corners are defined to be: | ||

| Line 27: | Line 46: | ||

East: (-119.71,36.22) | East: (-119.71,36.22) | ||

| − | We are planning to run 869 sites, | + | We are planning to run 869 sites, 837 of which are new, as part of this study. |

These sites include: | These sites include: | ||

| − | *77 cities (74 new) | + | *77 cities (74 new) + 46 sites of interest |

*10 new missions | *10 new missions | ||

*139 CISN stations (136 new) | *139 CISN stations (136 new) | ||

| − | |||

*597 sites along a 10 km grid (571 new) | *597 sites along a 10 km grid (571 new) | ||

| Line 42: | Line 60: | ||

== Projection Analysis == | == Projection Analysis == | ||

As our simulation region gets larger, we needed to review the impact of the projection we are using for the simulations. An analysis of the impact of various projections by R. Graves is summarized in this posting: | As our simulation region gets larger, we needed to review the impact of the projection we are using for the simulations. An analysis of the impact of various projections by R. Graves is summarized in this posting: | ||

| − | *[https://hypocenter.usc.edu/research/CyberShake/proj_notes20180404.pdf Projection | + | *[https://hypocenter.usc.edu/research/CyberShake/proj_notes20180404.pdf Projection Comparison] |

== Velocity Models == | == Velocity Models == | ||

| − | For Study 18. | + | For Study 18.5, we initially decided to construct the velocity mesh by querying models in the following order (but changed the order later, for production): |

# USGS Bay Area model | # USGS Bay Area model | ||

| − | # CCA-06 | + | # CCA-06 with the Ely GTL applied |

| − | # CVM-S4.26.M01 which | + | # CVM-S4.26.M01 (which includes a 1D background model). |

| − | + | ||

| − | We will | + | A KML file showing the model regions is available [[:File:velocity model regions.kml | here]]. |

| + | |||

| + | We will use a minimum Vs of 500 m/s. Smoothing will be applied 20 km on either side of any velocity model interface. | ||

| + | |||

| + | A thorough investigation was done to determine these parameters; a more detailed discussion is available at [[Study 18.5 Velocity Model Comparisons]]. | ||

| + | |||

| + | === Vs=250m/s experiment === | ||

| + | |||

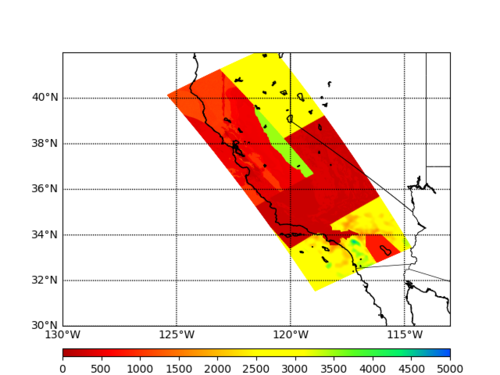

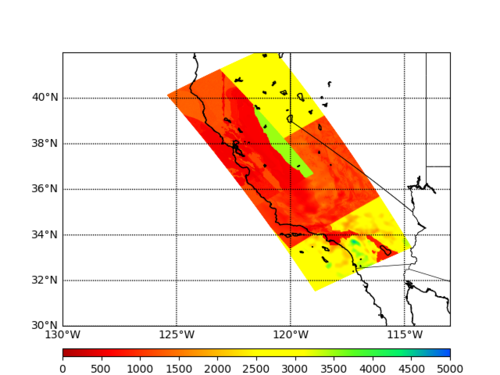

| + | We would like to select a subset of sites and calculate hazard at 1 Hz and minimum Vs=250 m/s. Below are velocity plots with this lower Vs cutoff. | ||

| + | |||

| + | {| | ||

| + | | [[File:horiz_plot_0km.png|thumb|500px|Surface plot with Vs min=250 m/s.]] | ||

| + | | [[File:horiz_plot_0.1km.png|thumb|500px|Plot at 100m depth with Vs min=250 m/s.]] | ||

| + | |} | ||

| + | |||

| + | == Verification == | ||

| + | |||

| + | We have selected the following 4 sites for verification, one from each corner of the box: | ||

| + | |||

| + | *s4518 | ||

| + | *s975 | ||

| + | *s2189 | ||

| + | *s2221 | ||

| + | |||

| + | We will run s975 at both 50 km depth, ND=50 and 80 km depth, ND=80. | ||

| + | |||

| + | We will run s4518 and s2221 on both Blue Waters and Titan. | ||

| + | |||

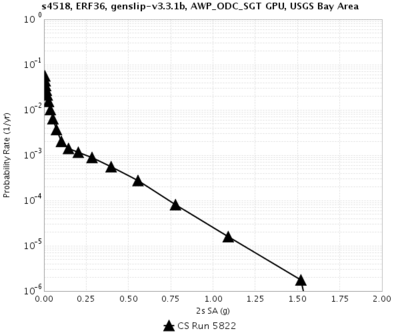

| + | === Hazard Curves === | ||

| + | |||

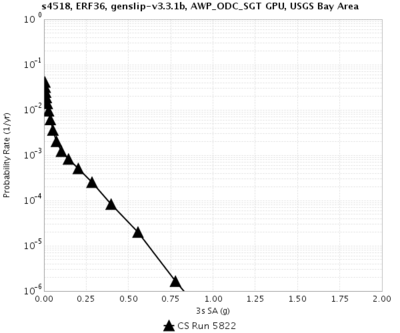

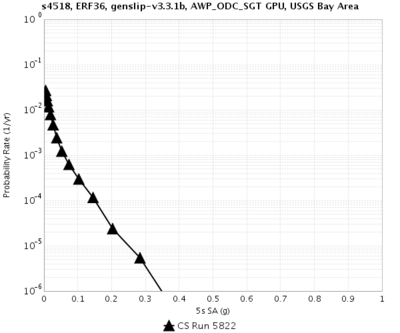

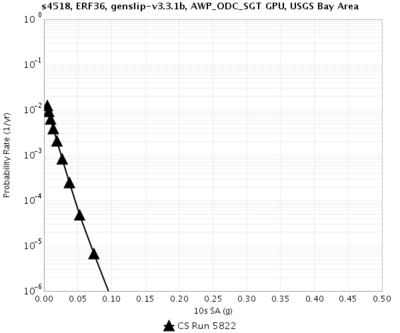

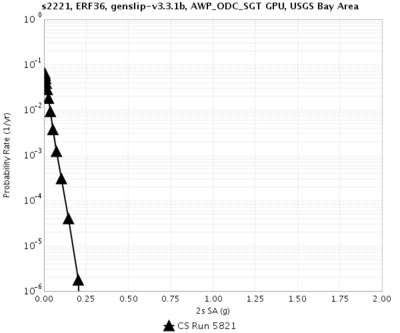

| + | {| | ||

| + | ! Site !! 2 sec RotD50 !! 3 sec RotD50 !! 5 sec RotD50 !! 10 sec RotD50 | ||

| + | |- | ||

| + | ! s4518 (south corner) || [[File:s4518_5822_2secRotD50.png|thumb|400px]] || [[File:s4518_5822_3secRotD50.png|thumb|400px]] || [[File:s4518_5822_5secRotD50.png|thumb|400px]] || [[File:s4518_5822_10secRotD50.png|thumb|400px]] | ||

| + | |- | ||

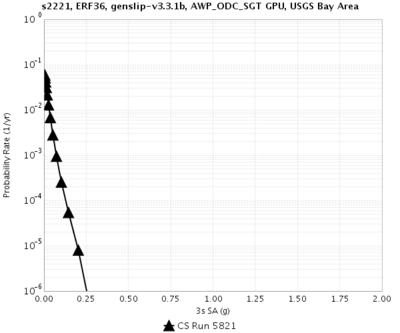

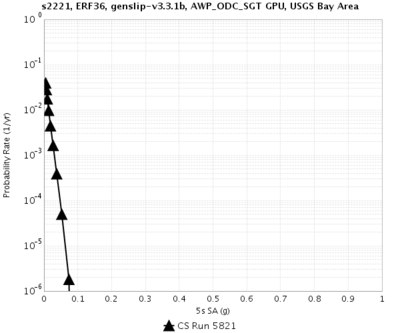

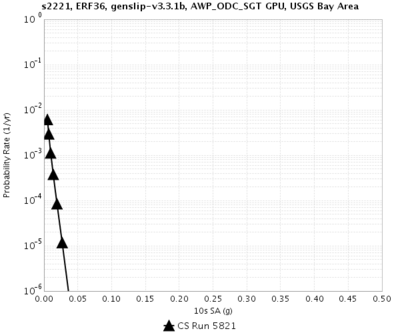

| + | ! s2221 (north corner) || [[File:s2221_5821_2secRotD50.png|thumb|400px]] || [[File:s2221_5821_3secRotD50.png|thumb|400px]] || [[File:s2221_5821_5secRotD50.png|thumb|400px]] || [[File:s2221_5821_10secRotD50.png|thumb|400px]] | ||

| + | |- | ||

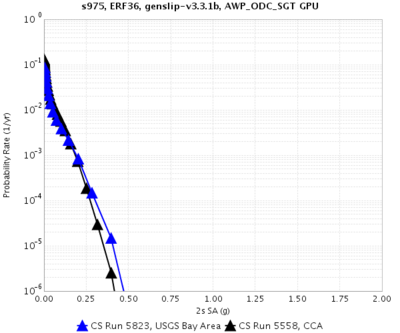

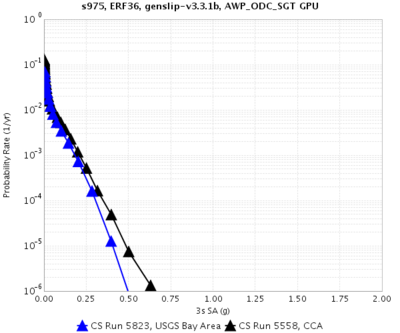

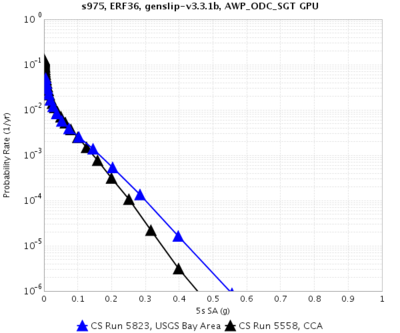

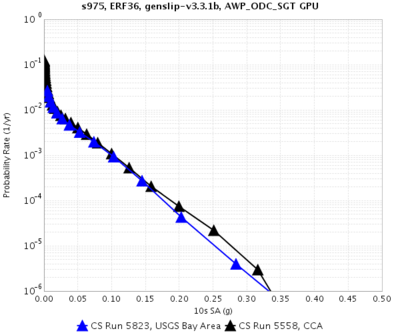

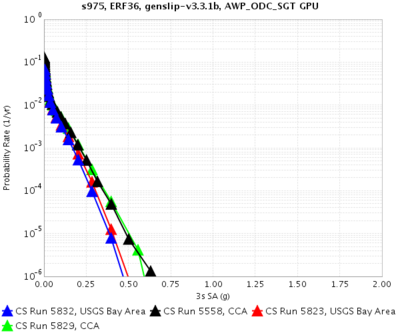

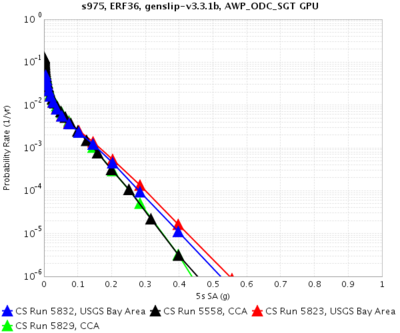

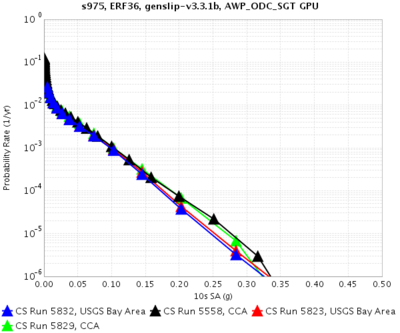

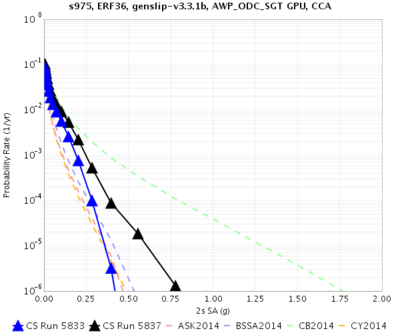

| + | ! s975 (east corner) || [[File:s975_5823_v_5558_2secRotD50.png|thumb|400px|Blue is Bay Area test, black Study 17.3]] || [[File:s975_5823_v_5558_3secRotD50.png|thumb|400px|Blue is Bay Area test, black Study 17.3]] || [[File:s975_5823_v_5558_5secRotD50.png|thumb|400px|Blue is Bay Area test, black Study 17.3]] || [[File:s975_5823_v_5558_10secRotD50.png|thumb|400px|Blue is Bay Area test, black Study 17.3]] | ||

| + | |} | ||

| + | |||

| + | ==== Comparisons with Study 17.3 ==== | ||

| + | |||

| + | {| | ||

| + | ! Site !! 2 sec RotD50 !! 3 sec RotD50 !! 5 sec RotD50 !! 10 sec RotD50 | ||

| + | |- | ||

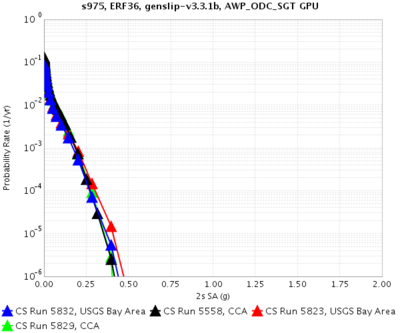

| + | | s975 (east corner) || [[File:s975_5829_5823_5558_2secRotD50.png|thumb|400px|black is Study 17.3; red is Bay Area test; green is Bay Area code with Study 17.3 params; blue is Vsmin=900m/s, USGS/CCA06 no GTL/CVM-S4.26.M01]] || [[File:s975_5829_5823_5558_3secRotD50.png|thumb|400px|black is Study 17.3; red is Bay Area test; green is Bay Area code with Study 17.3 params; blue is Vsmin=900m/s, USGS/CCA06 no GTL/CVM-S4.26.M01]] || [[File:s975_5829_5823_5558_5secRotD50.png|thumb|400px|black is Study 17.3; red is Bay Area test; green is Bay Area code with Study 17.3 params; blue is Vsmin=900m/s, USGS/CCA06 no GTL/CVM-S4.26.M01]] || [[File:s975_5829_5823_5558_10secRotD50.png|thumb|400px|black is Study 17.3; red is Bay Area test; green is Bay Area code with Study 17.3 params; blue is Vsmin=900m/s, USGS/CCA06 no GTL/CVM-S4.26.M01]] | ||

| + | |} | ||

| + | |||

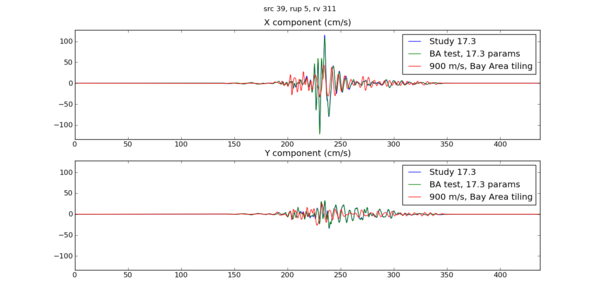

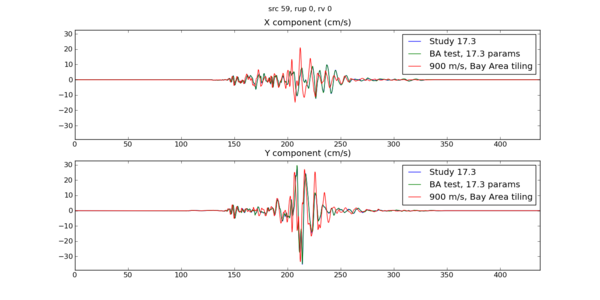

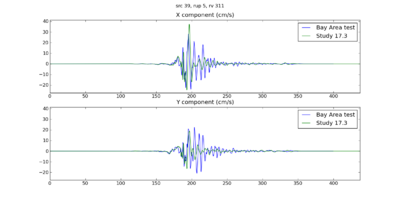

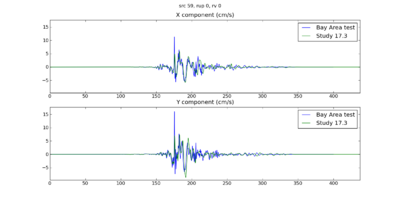

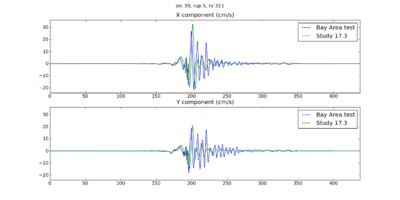

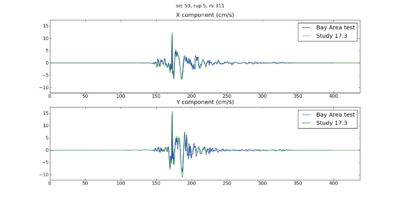

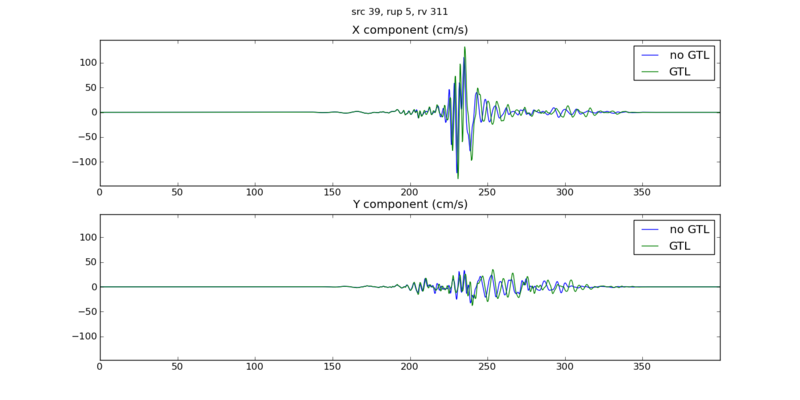

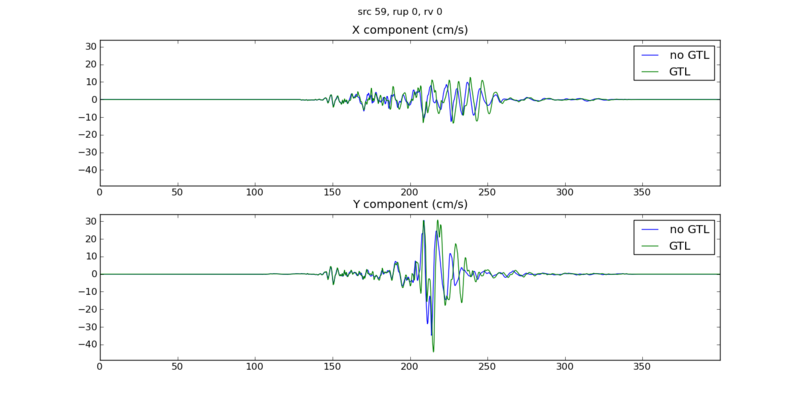

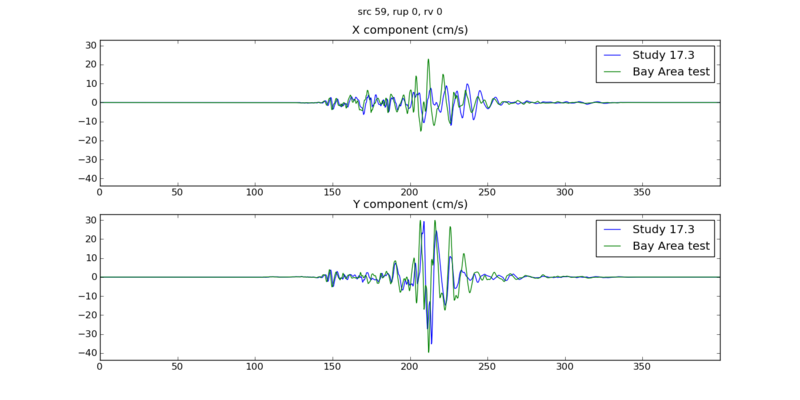

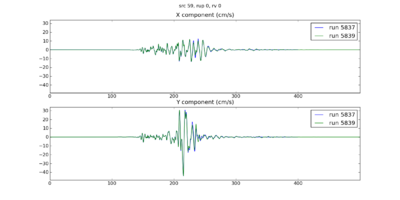

| + | Below are seismograms comparing a northern SAF event (source 39, rupture 5, rupture variation 311, M8.15 with hypocenter just offshore about 60 km south of Eureka) and a southern SAF event (source 59, rupture 0, rupture variation 0, M7.75 with hypocenter near Bombay Beach) between the Bay Area test with Study 17.3 parameters, Study 17.3, and Study 17.3 parameters but Bay Area tiling. The only difference is that the smoothing zone is larger in the Bay Area test + 17.3 parameters (I forgot to change it). Overall, the matches between Study 17.3 and the BA test + 17.3 parameters are excellent. Once the tiling is changed, we start to see differences, especially in the northern CA event. | ||

| + | |||

| + | {| | ||

| + | | [[File:s975_5829_v_5558_s39_r5_rv311.png|thumb|left|600px|N SAF event]] | ||

| + | | [[File:s975_5829_v_5558_s59_r0_rv0.png|thumb|left|600px|S SAF event]] | ||

| + | |} | ||

| + | |||

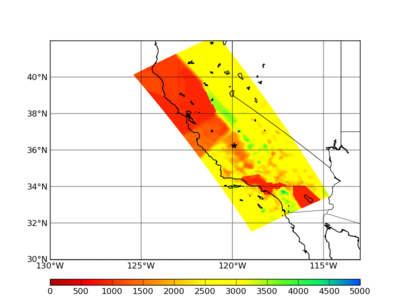

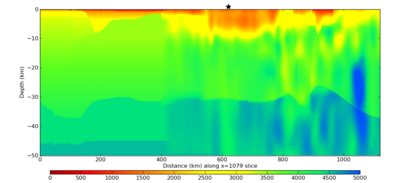

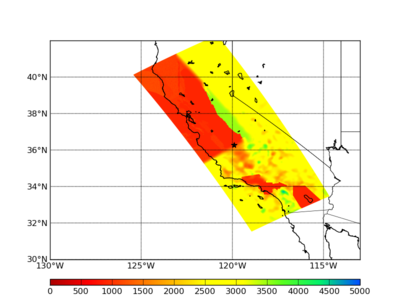

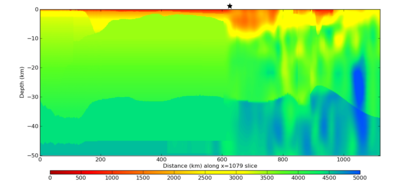

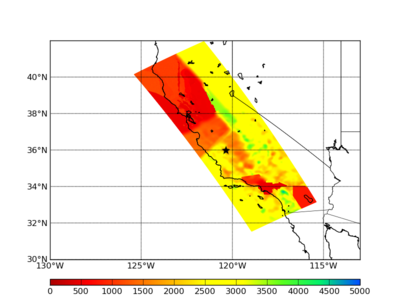

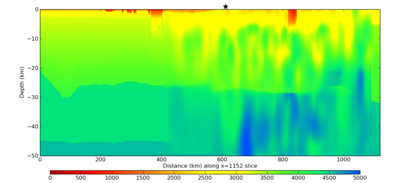

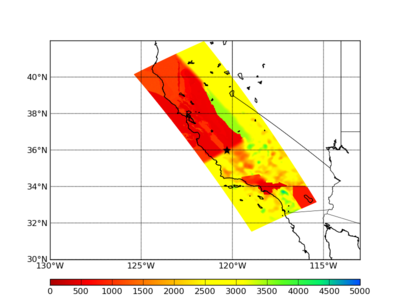

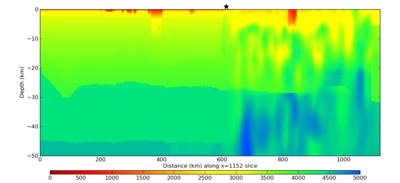

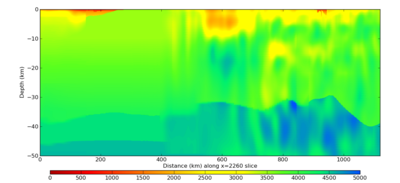

| + | To help illuminate the differences in velocity model, below are surface and vertical plots. The star indicates the location of the s975 site. Both models were created with Vs min = 900 m/s, and no GTL applied to the CCA-06 model. | ||

| + | |||

| + | {| | ||

| + | ! Model !! Horizontal (depth=0) !! Vertical, parallel to box through site | ||

| + | |- | ||

| + | | s975, Study 17.3 tiling:<br/>1)CCA-06, no GTL<br/>2)USGS Bay Area<br/>3)CVM-S4.26.M01 || [[File:horiz_plot_s975_study173.png|thumb|400px]] || [[File:vert_plot_s975_study173.png|thumb|400px|NW is at left, SE at right]] | ||

| + | |- | ||

| + | | s975, Bay Area:<br/>1)USGS Bay Area<br/>2)CCA-06, no GTL<br/>3)CVM-S4.26.M01 || [[File:horiz_plot_s975_bayAreaTiling_1079x.png|thumb|400px]] || [[File:vert_plot_s975_bayAreaTiling_1079x.png|thumb|400px|NW is at left, SE at right]] | ||

| + | |} | ||

| + | |||

| + | |||

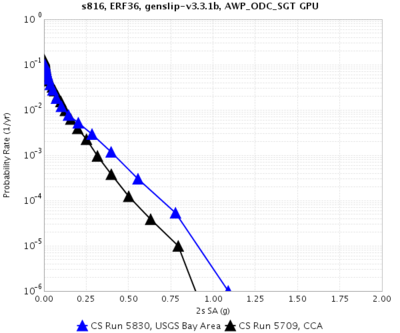

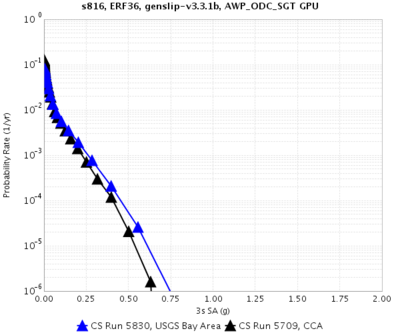

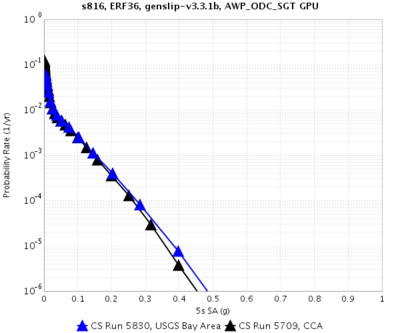

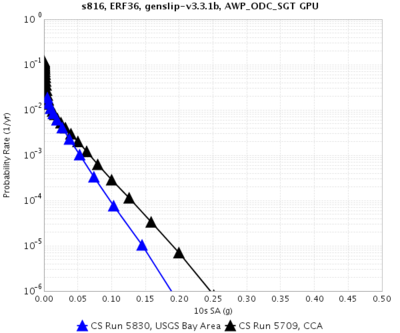

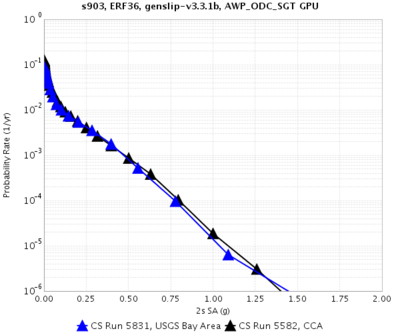

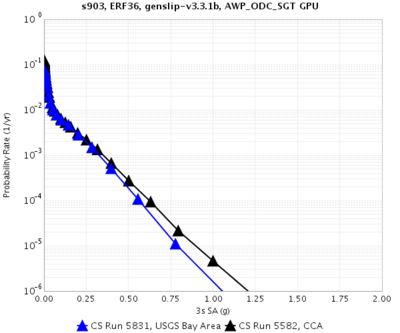

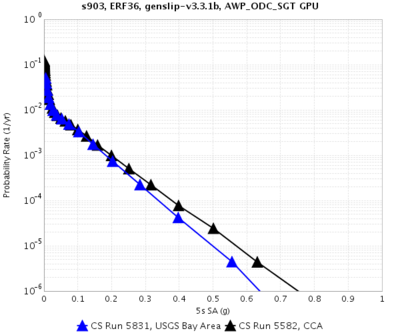

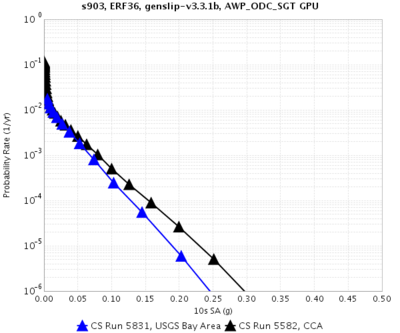

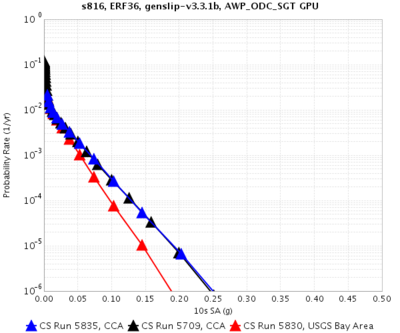

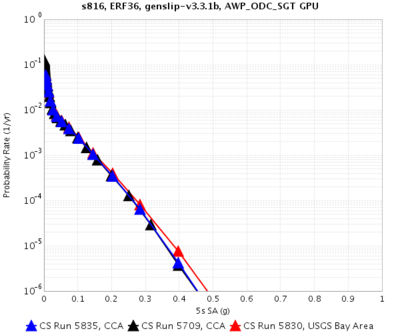

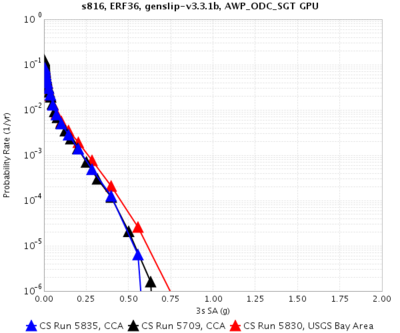

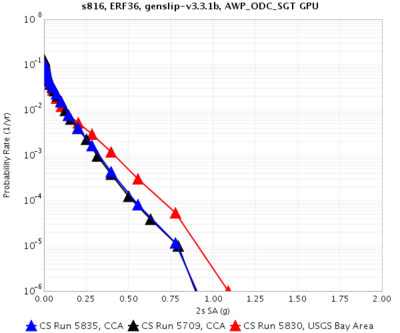

| + | We also calculated curves for 2 overlapping rock sites, s816 and s903. s816 is 22 km from the USGS/CCA model boundary, and outside of the smoothing zone; s903 is 7 km from the boundary. | ||

| + | |||

| + | {| | ||

| + | ! Site !! 2 sec RotD50 !! 3 sec RotD50 !! 5 sec RotD50 !! 10 sec RotD50 | ||

| + | |- | ||

| + | | s816 || [[File:s816_5830_5709_2secRotD50.png|thumb|400px|Blue is Bay Area test, black Study 17.3]] || [[File:s816_5830_5709_3secRotD50.png|thumb|400px|Blue is Bay Area test, black Study 17.3]] || [[File:s816_5830_5709_5secRotD50.png|thumb|400px|Blue is Bay Area test, black Study 17.3]] || [[File:s816_5830_5709_10secRotD50.png|thumb|400px|Blue is Bay Area test, black Study 17.3]] | ||

| + | |- | ||

| + | | s903 || [[File:s903_5831_5582_2secRotD50.png|thumb|400px|Blue is Bay Area test, black Study 17.3]] || [[File:s903_5831_5582_3secRotD50.png|thumb|400px|Blue is Bay Area test, black Study 17.3]] || [[File:s903_5831_5582_5secRotD50.png|thumb|400px|Blue is Bay Area test, black Study 17.3]] || [[File:s903_5831_5582_10secRotD50.png|thumb|400px|Blue is Bay Area test, black Study 17.3]] | ||

| + | |} | ||

| + | |||

| + | Velocity model vertical slices for s816: | ||

| + | |||

| + | {| | ||

| + | ! Model !! Horizontal (depth=0) !! Vertical, parallel to box through site | ||

| + | |- | ||

| + | | s816, Study 17.3 tiling:<br/>1)CCA-06, no GTL<br/>2)USGS Bay Area<br/>3)CVM-S4.26.M01 || [[File:horiz_plot_s816_study173.png|thumb|400px]] || [[File:vert_plot_s816_study173.png|thumb|400px|NW is at left, SE at right]] | ||

| + | |- | ||

| + | | s816, Bay Area:<br/>1)USGS Bay Area<br/>2)CCA-06, no GTL<br/>3)CVM-S4.26.M01 || [[File:horiz_plot_s816_bayAreaTiling.png|thumb|400px]] || [[File:vert_plot_s816_bayAreaTiling.png|thumb|400px|NW is at left, SE at right]] | ||

| + | |} | ||

| + | |||

| + | These are seismograms for the same two events (NSAF, src 39 rup 5 rv 311 and SSAF, src 59 rup 0 rv 0). The southern events fit a good deal better than the northern. | ||

| + | |||

| + | {| | ||

| + | ! Site !! Northern SAF event !! Southern SAF event | ||

| + | |- | ||

| + | | s816 || [[File:s816_5830_v_5709_39_5_311.png|thumb|400px]] || [[File:s816_5830_v_5709_59_0_0.png|thumb|400px]] | ||

| + | |- | ||

| + | | s903 || [[File:s903_5831_v_5582_39_5_311.png|thumb|400px]] || [[File:s903_5831_v_5582_59_0_0.png|thumb|400px]] | ||

| + | |} | ||

| + | |||

| + | ==== Configuration for this study ==== | ||

| + | |||

| + | Based on these results, we've decided to use the Study 17.3 tiling order for the velocity models: | ||

| + | |||

| + | #CCA-06 with Ely GTL | ||

| + | #USGS Bay Area model | ||

| + | #CVM-S4.26.M01 | ||

| + | |||

| + | All models will use minimum Vs=500 m/s. | ||

| + | |||

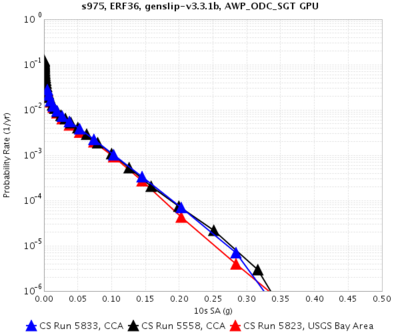

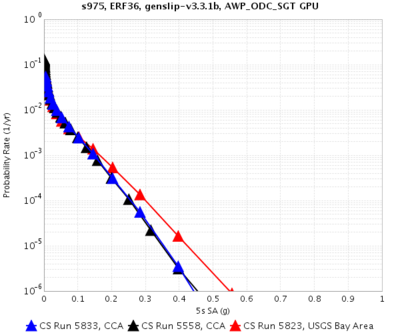

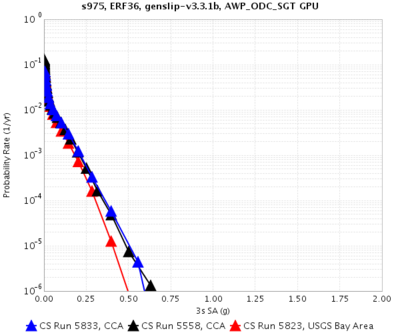

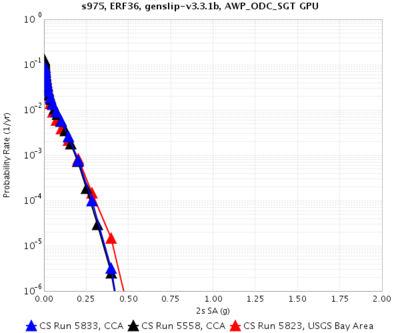

| + | Here are comparison curves *without* the GTL, comparing Study 17.3 results, USGS Bay Area/CCA-06-GTL/CVM-S4.26.M01, and CCA-06-GTL/USGS Bay Area/CVM-S4.26.M01. | ||

| + | |||

| + | {| | ||

| + | ! Site !! 10 sec RotD50 !! 5 sec RotD50 !! 3 sec RotD50 !! 2 sec RotD50 | ||

| + | |- | ||

| + | | s975 || [[File:s975_5833_5558_5823_10sec_RotD50.png|400px|thumb|Black=Study 17.3, Red=USGS/CCA/CVM-S4.26, Blue=CCA/USGS/CVM-S4.26]] || [[File:s975_5833_5558_5823_5sec_RotD50.png|400px|thumb|Black=Study 17.3, Red=USGS/CCA/CVM-S4.26, Blue=CCA/USGS/CVM-S4.26]] || [[File:s975_5833_5558_5823_3sec_RotD50.png|400px|thumb|Black=Study 17.3, Red=USGS/CCA/CVM-S4.26, Blue=CCA/USGS/CVM-S4.26]] || [[File:s975_5833_5558_5823_2sec_RotD50.png|400px|thumb|Black=Study 17.3, Red=USGS/CCA/CVM-S4.26, Blue=CCA/USGS/CVM-S4.26]] | ||

| + | |- | ||

| + | | s816 || [[File:s816_5835_5709_5830_10sec_RotD50.png|400px|thumb|Black=Study 17.3, Red=USGS/CCA/CVM-S4.26, Blue=CCA/USGS/CVM-S4.26]] || [[File:s816_5835_5709_5830_5sec_RotD50.png|400px|thumb|Black=Study 17.3, Red=USGS/CCA/CVM-S4.26, Blue=CCA/USGS/CVM-S4.26]] || [[File:s816_5835_5709_5830_3sec_RotD50.png|400px|thumb|Black=Study 17.3, Red=USGS/CCA/CVM-S4.26, Blue=CCA/USGS/CVM-S4.26]] || [[File:s816_5835_5709_5830_2sec_RotD50.png|400px|thumb|Black=Study 17.3, Red=USGS/CCA/CVM-S4.26, Blue=CCA/USGS/CVM-S4.26]] | ||

| + | |} | ||

| + | |||

| + | ==== GTL ==== | ||

| + | |||

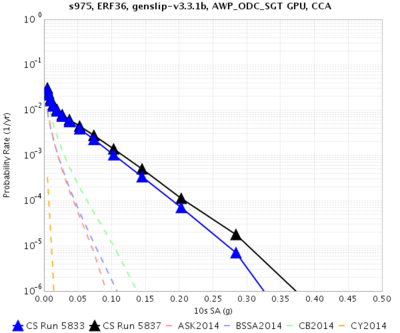

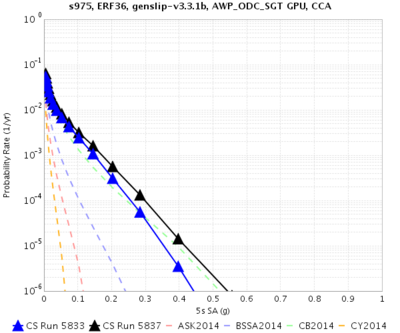

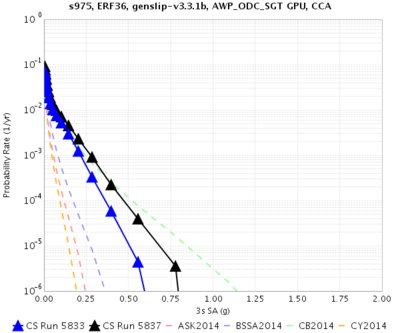

| + | Here are comparison curves between CCA-06-GTL/USGS Bay Area/CVM-S4.26.M01 and CCA-06+GTL/USGS Bay Area/CVM-S4.26.M01, showing the impact of the GTL. | ||

| + | |||

| + | {| | ||

| + | ! Site !! 10 sec RotD50 !! 5 sec RotD50 !! 3 sec RotD50 !! 2 sec RotD50 | ||

| + | |- | ||

| + | | s975 || [[File:s975_5837_5833_10sec_RotD50.png|400px|thumb|Blue=no GTL, Black=GTL]] || [[File:s975_5837_5833_5sec_RotD50.png|400px|thumb|Blue=no GTL, Black=GTL]] || [[File:s975_5837_5833_3sec_RotD50.png|400px|thumb|Blue=no GTL, Black=GTL]] || [[File:s975_5837_5833_2sec_RotD50.png|400px|thumb|Blue=no GTL, Black=GTL]] | ||

| + | |} | ||

| + | |||

| + | Here are seismogram comparisons for the two San Andreas events with and without the GTL. Adding the GTL slightly amplifies and stretches the seismogram, which makes sense. | ||

| + | |||

| + | Northern event | ||

| + | {| | ||

| + | | [[File:s975_5833_5837_39_5_311.png|800px|thumb|Blue=no GTL, Green=GTL]] | ||

| + | |} | ||

| + | Southern event | ||

| + | {| | ||

| + | | [[File:s975_5833_5837_59_0_0.png|800px|thumb|Blue=no GTL, Green=GTL]] | ||

| + | |} | ||

| + | |||

| + | Here are vertical velocity slices through the volume, with NW on the left and SE on the right, both for the entire volume and zoomed in to the top 5 km. | ||

| + | |||

| + | {| | ||

| + | ! No GTL | ||

| + | | [[File:s975_5833_vert_plot_2260y.png|400px|thumb]] || [[File:s975_5833_vert_plot_2260y_zoomed.png|400px|thumb]] | ||

| + | |- | ||

| + | ! GTL | ||

| + | | [[File:s975_5837_vert_plot_2260y.png|400px|thumb]] || [[File:s975_5837_vert_plot_2260y_zoomed.png|400px|thumb]] | ||

| + | |} | ||

| + | |||

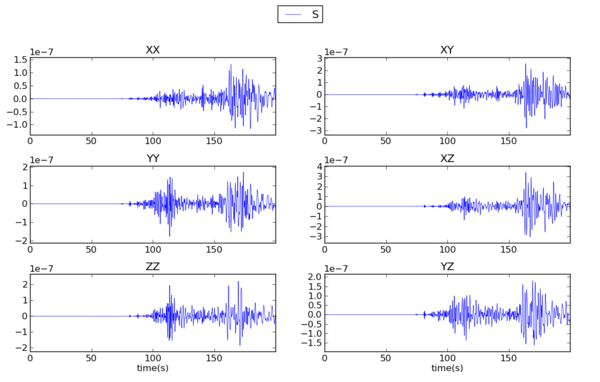

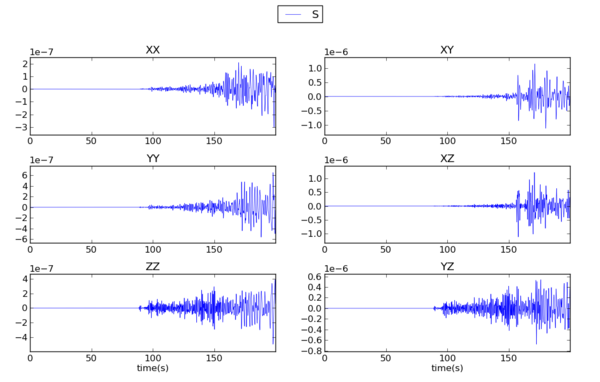

| + | === Seismograms === | ||

| + | |||

| + | We've extracted 2 seismograms for s975, a northern SAF event and a southern, and plotted comparisons of the Bay Area test with the Study 17.3 3D results. Note the following differences between the two runs: | ||

| + | *Minimum Vs: 900 m/s for Study 17.3, 500 m/s for the test | ||

| + | *GTL: no GTL in CCA-06 for Study 17.3, a GTL for CCA-06 for the test | ||

| + | *DT: 0.0875 sec for Study 17.3, 0.05 sec for the test | ||

| + | *Velocity model priority: CCA-06, then USGS Bay Area, then CVM-S4.26.M01 for Study 17.3; USGS Bay Area, then CCA-06+GTL, then CVM-S4.26.M01 for the test | ||

| − | + | ==== Northern Event ==== | |

| − | + | This seismogram is from source 39, rupture 5, rupture variation 311, a M8.15 northern San Andreas event with hypocenter just offshore, about 60 km south of Eureka. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | {| | |

| − | + | | [[File:s975_5823_v_5558_s39_r3_rv311.png|thumb|left|800px]] | |

| + | |} | ||

| − | == | + | ==== Southern Event ==== |

| − | + | This seismogram is from source 59, rupture 0, rupture variation 0, a M7.75 southern San Andreas event with hypocenter near Bombay Beach. | |

{| | {| | ||

| − | | [[File: | + | | [[File:s975_5823_v_5558_s59_r0_rv0.png|thumb|left|800px]] |

| − | |||

| − | |||

| − | |||

|} | |} | ||

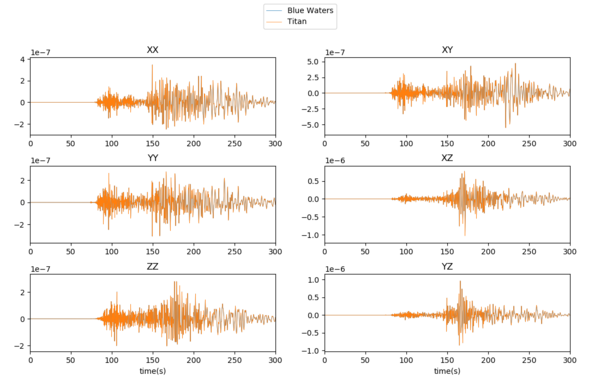

| − | We | + | === Blue Waters and Titan === |

| + | |||

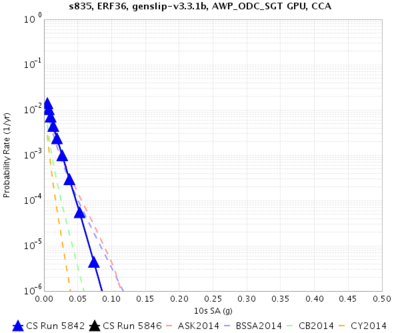

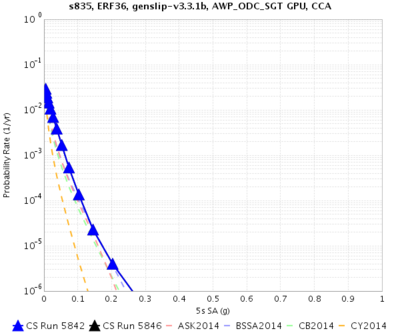

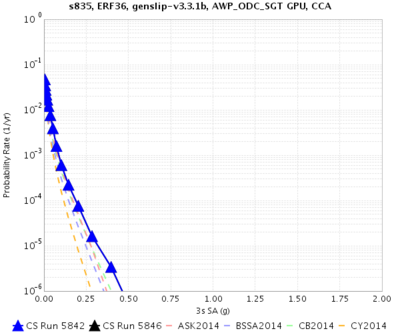

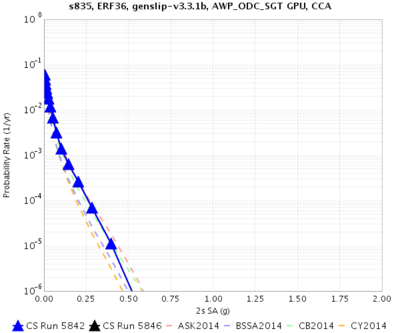

| + | We calculated hazard curves for the same site (s835) on Titan and Blue Waters. The curves overlap. | ||

| + | |||

| + | {| | ||

| + | | [[File:s835_5842_v_5846_10sec.png|thumb|left|400px|blue = Blue Waters, black = Titan]] | ||

| + | | [[File:s835_5842_v_5846_5sec.png|thumb|left|400px|blue = Blue Waters, black = Titan]] | ||

| + | | [[File:s835_5842_v_5846_3sec.png|thumb|left|400px|blue = Blue Waters, black = Titan]] | ||

| + | | [[File:s835_5842_v_5846_2sec.png|thumb|left|400px|blue = Blue Waters, black = Titan]] | ||

| + | |} | ||

| + | |||

| + | == Background Seismicity == | ||

| + | |||

| + | Statewide maps showing the impact of background seismicity are available here: [[CyberShake Background Seismicity]] | ||

| + | |||

| + | == SGT time length == | ||

| + | |||

| + | Below are SGT plots for s975. These SGTs are from the southwest and southeast bottom corners of the region, as far as possible from this site. It appears that 200 sec isn't long enough for these distant points. | ||

| + | |||

| + | Based on this, we will increase the SGT simulation time to 300 sec if any hypocenters are more than 450 km away from the site, and increase the seismogram duration from 400 to 500 sec. | ||

| − | + | {| | |

| − | + | | [[File:s975_pt6000345_fx.png|thumb|600px|Southeast bottom corner]] | |

| − | + | |- | |

| − | + | | [[File:s975_pt9366_fx.png|thumb|600px|Southwest bottom corner]] | |

| − | + | |} | |

| − | |||

{| | {| | ||

| − | | [[File: | + | | [[File:sgt_comparison.png|thumb|600px|Comparison of SGTs generated on Titan and Blue Waters, for s975 at the farthest southeast point on a fault surface. Notice the content after 200 sec, the old simulation cutoff.]] |

| − | |||

| − | |||

| − | |||

| − | |||

|} | |} | ||
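The duration rule stated in this section (300 sec SGTs if any hypocenter is more than 450 km from the site, otherwise 200 sec) can be sketched as below. This is a minimal illustration, not the study's workflow code: the function names, the use of a haversine great-circle distance, and the Earth radius value are assumptions introduced here. Only the 450 km threshold and the 200/300 sec lengths come from the text.

```python
import math

EARTH_RADIUS_KM = 6371.0     # mean Earth radius; an assumption of this sketch
DISTANCE_CUTOFF_KM = 450.0   # threshold from the text
SHORT_SGT_SEC = 200.0        # original SGT simulation length
LONG_SGT_SEC = 300.0         # extended SGT simulation length


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance in km between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def sgt_duration_sec(site, hypocenters):
    """Pick the SGT length for a site.

    site is a (lat, lon) tuple; hypocenters is an iterable of (lat, lon)
    tuples. Returns the long duration if any hypocenter lies beyond the
    cutoff distance, otherwise the short duration.
    """
    for lat, lon in hypocenters:
        if great_circle_km(site[0], site[1], lat, lon) > DISTANCE_CUTOFF_KM:
            return LONG_SGT_SEC
    return SHORT_SGT_SEC
```

A production version would presumably measure distance in the simulation's own projection rather than on a sphere; the spherical distance is used here only to keep the sketch self-contained.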

| − | |||

| − | + | === Longer SGT comparison === | |

| − | |||

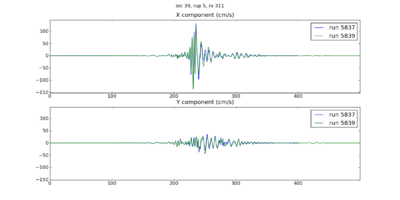

| − | The | + | Below is a comparison of seismograms generated using the original length (200 sec SGTs, 400 sec seismograms, in blue) and the new length (300 sec SGTs, 500 sec seismograms, in green). The slight differences in wiggles are due to the shorter ones being generated with Wills 2015, and the longer with 2006. Notice the additional content after about 350 seconds in the green trace. |

| + | |||

| + | Southern SAF (src 59, rup 0, rv 0): | ||

{| | {| | ||

| − | | [[File: | + | | [[File:5837_v_5839_59_0_0.png|thumb|400px|Blue=original, Green=longer]] |

| − | |||

|} | |} | ||

| − | == | + | Northern SAF (src 39, rup 5, rv 311): |

| + | |||

| + | {| | ||

| + | | [[File:5837_v_5839_39_5_311.png|thumb|400px|Blue=original, Green=longer]] | ||

| + | |} | ||

== Performance Enhancements (over Study 17.3) == | == Performance Enhancements (over Study 17.3) == | ||

| + | |||

| + | === Responses to Study 17.3 Lessons Learned === | ||

| + | |||

| + | *<i>Include plots of velocity models as part of readiness review when moving to new regions.</i> | ||

| + | We have constructed many plots, and will include some in the science readiness review. | ||

| + | |||

| + | *<i>Formalize process of creating impulse. Consider creating it as part of the workflow based on nt and dt.</i> | ||

| + | |||

| + | *<i>Many jobs were not picked up by the reservation, and as a result reservation nodes were idle. Work harder to make sure reservation is kept busy.</i> | ||

| + | |||

| + | *<i>Forgot to turn on monitord during workflow, so had to deal with statistics after the workflow was done. Since we're running far fewer jobs, it's fine to run monitord population during the workflow.</i> | ||

| + | We set pegasus.monitord.events = true in all properties files. | ||

| + | |||

| + | *<i>In Study 17.3b, 2 of the runs (5765 and 5743) had a problem with their output, which left 'holes' of lower hazard on the 1D map. Looking closely, we discovered that the SGT X component of run 5765 was about 30 GB smaller than it should have been, likely causing issues when the seismograms were synthesized. We no longer had the SGTs from 5743, so we couldn't verify that the same problem happened here. Moving forward, include checks on SGT file size as part of the nan check.</i> | ||

| + | We added a file size check as part of the NaN check. | ||
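A combined size and NaN check of the kind described above might look like the following sketch. It is not the check used in the study: the function name, the assumption that an SGT file is a flat array of little-endian 4-byte floats, and the way the expected size is supplied are all hypothetical.

```python
import math
import os
import struct


def check_sgt_file(path, expected_bytes, chunk_floats=1 << 20):
    """Raise ValueError if the file has the wrong size or contains a NaN.

    Assumes (for this sketch only) that the file is a flat array of
    little-endian 4-byte floats with no header.
    """
    actual = os.path.getsize(path)
    if actual != expected_bytes:
        raise ValueError(
            f"{path}: expected {expected_bytes} bytes, found {actual}")
    if actual % 4 != 0:
        raise ValueError(f"{path}: size is not a multiple of 4 bytes")

    # Read in chunks so that very large files are never held in memory.
    with open(path, "rb") as f:
        while True:
            chunk = f.read(4 * chunk_floats)
            if not chunk:
                break
            values = struct.unpack(f"<{len(chunk) // 4}f", chunk)
            if any(math.isnan(v) for v in values):
                raise ValueError(f"{path}: NaN value found")
```

Checking the size first is cheap and would catch a truncated file, such as the undersized X component described above, before any data is scanned.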

| + | |||

| + | == Output Data Products == | ||

| + | |||

| + | Below is a list of what data products we intend to compute and what we plan to put in the database. | ||

== Computational and Data Estimates == | == Computational and Data Estimates == | ||

| Line 120: | Line 321: | ||

We scaled our results based on the Study 17.3 performance of site s975, a site also in Study 18.3, and the Study 15.4 performance of DBCN, which used a very large volume and 100m spacing. | We scaled our results based on the Study 17.3 performance of site s975, a site also in Study 18.3, and the Study 15.4 performance of DBCN, which used a very large volume and 100m spacing. | ||

| + | |||

| + | We also assume that the sites with southern San Andreas events will be run with 300 sec SGTs, and the other sites with 200 sec. All seismograms will be 500 sec long. | ||

{| class="wikitable" | {| class="wikitable" | ||

| Line 126: | Line 329: | ||

|- | |- | ||

! Inside cutoff, per site | ! Inside cutoff, per site | ||

| − | | 23.1 billion || 192 || 0.85 hrs || 800 || | + | | 23.1 billion || 192 || 0.85 hrs || 800 || 2.03 hrs || 102.1k || 3320 |

|- | |- | ||

! Outside cutoff, per site | ! Outside cutoff, per site | ||

| Line 133: | Line 336: | ||

! Total | ! Total | ||

| || || || || | | || || || || | ||

| − | ! | + | ! 53.9M !! 1.75M |

|} | |} | ||

| Line 145: | Line 348: | ||

|- | |- | ||

! Inside cutoff, per site | ! Inside cutoff, per site | ||

| − | | 5.96 billion || 120 || | + | | 5.96 billion || 120 || 14.0 hrs || 1680 || 240 || 15.5 hrs || 111.3k |

|- | |- | ||

! Outside cutoff, per site | ! Outside cutoff, per site | ||

| Line 152: | Line 355: | ||

! Total | ! Total | ||

| || || | | || || | ||

| − | ! | + | ! 850K || || |

| − | ! | + | ! 56.32M |

|} | |} | ||

| − | + | Our computational plan is to split the SGT calculations 75% BW/25% Titan, and split the PP 75% BW, 25% Titan. With a 20% margin, this would require 33.1M SUs on Titan, and 2.34M node-hrs on Blue Waters. | |
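The figures in the plan above can be reproduced with a short calculation. This is a sketch under an assumption: the table header rows are not visible here, so the mapping of each study-wide total to Titan SUs or Blue Waters node-hours is inferred from the magnitudes, and the variable names are illustrative.

```python
MARGIN = 1.20             # 20% margin from the text
TITAN_SHARE = 0.25        # fraction of SGT and PP work on Titan
BLUE_WATERS_SHARE = 0.75  # fraction of SGT and PP work on Blue Waters

# Study-wide totals from the two tables above (assumed column mapping).
sgt_titan_sus = 53.9e6     # SGT total if run entirely on Titan
sgt_bw_node_hrs = 1.75e6   # SGT total if run entirely on Blue Waters
pp_titan_sus = 56.32e6     # post-processing total if run entirely on Titan
pp_bw_node_hrs = 850e3     # post-processing total if run entirely on Blue Waters


def required(total_sgt, total_pp, share, margin=MARGIN):
    """Allocation needed on one system for its share of SGT + PP work."""
    return (total_sgt + total_pp) * share * margin


titan_sus = required(sgt_titan_sus, pp_titan_sus, TITAN_SHARE)
bw_node_hrs = required(sgt_bw_node_hrs, pp_bw_node_hrs, BLUE_WATERS_SHARE)

print(f"Titan: {titan_sus / 1e6:.1f}M SUs")
print(f"Blue Waters: {bw_node_hrs / 1e6:.2f}M node-hrs")
```

With these inputs the results round to 33.1M SUs and 2.34M node-hrs, consistent with the numbers quoted in the plan.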

Currently we have 91.7M SUs available on Titan (expires 12/31/18), and 8.14M node-hrs on Blue Waters (expires 8/31/18). Based on the 2016 PRAC (spread out over 2 years), we budgeted approximately 6.2M node-hours for CyberShake on Blue Waters this year, of which we have used 0.01M. | Currently we have 91.7M SUs available on Titan (expires 12/31/18), and 8.14M node-hrs on Blue Waters (expires 8/31/18). Based on the 2016 PRAC (spread out over 2 years), we budgeted approximately 6.2M node-hours for CyberShake on Blue Waters this year, of which we have used 0.01M. | ||

| Line 169: | Line 372: | ||

|- | |- | ||

! Inside cutoff, per site | ! Inside cutoff, per site | ||

| − | | 23.1 billion || 271 GB || | + | | 23.1 billion || 271 GB || 615 GB || 1500 GB || 23.8 GB |

|- | |- | ||

! Outside cutoff, per site | ! Outside cutoff, per site | ||

| − | | 10.2 billion || 120 GB || 133 GB || 385 GB || | + | | 10.2 billion || 120 GB || 133 GB || 385 GB || 11.6 GB |

|- | |- | ||

! Total | ! Total | ||

| | | | ||

| − | ! 158 TB | + | ! 158 TB !! 292 TB !! 742 TB !! 14.4 TB |

| − | ! | ||

| − | ! | ||

| − | ! | ||

|} | |} | ||

| − | If we | + | If we split the SGTs and the PP 25% Titan, 75% Blue Waters, we will need: |

| − | Titan: | + | Titan: 185 TB temp files + 3.6 TB output files = <b>189 TB</b> |

| − | Blue Waters: | + | Blue Waters: 557 TB temp files + 185 TB Titan SGTs + 10.8 TB output files = <b>753 TB</b> |

| − | SCEC storage: 1 TB workflow logs + | + | SCEC storage: 1 TB workflow logs + 14.4 TB output data files = <b>15.4 TB</b> (24 TB free) |

| − | Database usage: (4 rows PSA [@ 2, 3, 5, 10 sec] + 12 rows RotD [RotD100 and RotD50 @ 2, 3, 4, 5, 7.5, 10 sec])/rupture variation x 225K rupture variations/site x 869 sites = 3. | + | Database usage: (4 rows PSA [@ 2, 3, 5, 10 sec] + 12 rows RotD [RotD100 and RotD50 @ 2, 3, 4, 5, 7.5, 10 sec] + 4 rows Duration [X and Y acceleration @ 5-75%, 5-95%])/rupture variation x 225K rupture variations/site x 869 sites = 3.9 billion rows x 125 bytes/row = <b>455 GB</b> (2.0 TB free on moment.usc.edu disk) |
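The database estimate above is a product of four factors, which the sketch below works through. The constant names are illustrative. Note that the quoted 455 GB appears to correspond to units of 2^30 bytes; that reading is an inference from the arithmetic, not something the text states.

```python
# Rows stored per rupture variation, as itemized in the text.
PSA_ROWS = 4        # PSA at 2, 3, 5, 10 sec
ROTD_ROWS = 12      # RotD100 and RotD50 at 2, 3, 4, 5, 7.5, 10 sec
DURATION_ROWS = 4   # X and Y acceleration at 5-75% and 5-95%

RUPTURE_VARIATIONS_PER_SITE = 225_000
SITES = 869
BYTES_PER_ROW = 125

rows_per_variation = PSA_ROWS + ROTD_ROWS + DURATION_ROWS
total_rows = rows_per_variation * RUPTURE_VARIATIONS_PER_SITE * SITES
total_bytes = total_rows * BYTES_PER_ROW

print(f"rows: {total_rows / 1e9:.1f} billion")
print(f"size: {total_bytes / 1e9:.0f} GB (10^9 bytes)")
print(f"size: {total_bytes / 2**30:.0f} GiB (2^30 bytes)")
```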

| − | == | + | == Lessons Learned == |

| − | + | *Consider separating SGT and PP workflows in auto-submit tool to better manage the number of each, for improved reservation utilization. | |

| + | *Create a read-only way to look at the CyberShake Run Manager website. | ||

| + | *Consider reducing levels of the workflow hierarchy, thereby reducing load on shock. | ||

| + | *Establish clear rules and policies about reservation usage. | ||

| + | *Determine advance plan for SGTs for sites which require fewer (200) GPUs. | ||

| + | *Determine advance plan for SGTs for sites which exceed memory on nodes. | ||

| + | *Create new velocity model ID for composite model, capturing metadata. | ||

| + | *Verify all Java processes grab a reasonable amount of memory. | ||

| + | *Clear disk space before study begins to avoid disk contention. | ||

| + | *Add stress test before beginning study, for multiple sites at a time, with cleanup. | ||

| + | *In addition to disk space, check local inode usage. | ||

| + | *If submitting to multiple reservations, make sure enough jobs are eligible to run that no reservation is starved. | ||

| + | *If running primarily SGTs for a while, make sure they don't get deleted due to quota policies. | ||

| − | {| | + | == Performance Metrics == |

| − | | | + | |

| − | | [ | + | === Usage === |

| − | | | + | |

| − | | | + | Just before starting, we grabbed the following usage numbers from the systems: |

| + | |||

| + | ==== Blue Waters ==== | ||

| + | |||

| + | The 'usage' command gives 2792733.80 node-hours used out of 9620000.00 by project baln, and 1014 jobs run. | ||

| + | |||

| + | User scottcal used 77705.03 node-hours on project baln. | ||

| + | |||

| + | On restart, usage gives 3091276.56 node-hours used out of 9620000.00 by project baln, and 2265884.05 used by user scottcal. | ||

| + | |||

| + | At end, usage gives 4903299.91 node-hours used out of 9620000.00 by project baln, and 3083951.19 used by user scottcal. | ||

| + | |||

| + | ==== Titan ==== | ||

| + | |||

| + | Project usage: 78,304,261 SUs (of 96,000,000) in 2018, 22,784,073 in August 2018. | ||

| + | |||

| + | callag usage: 4,046,501 SUs in 2018, 985,434 in August 2018. | ||

| + | |||

| + | callag usage at hiatus: 50,158,853 SUs in 2018, 5,418,846 in August 2018, 26,119,171 in September, 15,559,769 in October. | ||

| + | |||

| + | callag usage upon resume: 0 in January 2019. | ||

| + | |||

| + | callag usage upon end of running on Titan (1/31): 27,999,371 SUs | ||

| + | |||

| + | === Reservations === | ||

| + | |||

| + | We started with two XK reservations (1252 and 1316 nodes) and six XE reservations (2x160, 3x164, and 1x168 nodes) at noon PDT on 8/18. Because of a misunderstanding regarding the XE node reservations, jobs weren't matched to them and they terminated in 40 minutes. | ||

| + | |||

| + | We lost the XK reservations around 8 am PDT on 8/20, as they were accidentally killed by Blue Waters staff. | ||

| + | |||

| + | We got new XK reservations (1252 and 1316 nodes) at 11 am PDT on 8/22. | ||

| + | |||

| + | Due to underutilization, both XK reservations and all but 1 of the XE reservations were killed between midnight and 8 am PDT on 8/29. | ||

| + | |||

| + | A new set of XK reservations (1252, 1316) started at 7:30 am PDT on 8/31, 4 new XEs (3x164, 1x160) at 9:15 am on 8/31, and 1 more XE (1x168) at 9:45 am on 8/31. They were killed in the morning of 9/4 due to underutilization. | ||

| + | |||

| + | A new set of reservations (same number and sizes) started at 8 am PDT on 9/14. | ||

| + | |||

| + | === Events during Study === | ||

| + | |||

| + | Until we got our second round of reservations on 8/22, it was difficult to make headway on Blue Waters. Then it became difficult to keep the XE reservations full, so we switched to SGTs-only on Titan. | ||

| + | |||

| + | We finally were granted 8 jobs ready to run and 5 jobs in bin 5 on Titan, which took effect on 8/29. | ||

| + | |||

| + | Early in the morning of 8/29, some kind of network or disk issue decoupled shock and the remote systems. This resulted in a variety of problems, including not enough jobs in the queue on Blue Waters, Condor no longer submitting jobs to grid resources, and the same job being submitted multiple times to Titan. On 8/30, these issues seemed to have worked themselves out. | ||

| + | |||

| + | Titan was down for 8/30-8/31. | ||

| + | |||

| + | The SCEC disks crashed sometime during the night of 8/30-8/31. The disks were restored around 8:30 am PDT on 8/31, but shock required a reboot. A controller was swapped around 3 pm PDT, causing the disks to crash again. They were returned to service at 4 pm, but with extensive I/O errors. The reservations were killed on the morning of 9/4. The I/O issues were resolved in the afternoon of 9/4. | ||

| + | |||

| + | On 9/5 we determined that a change made to try to fix the symlink issue (turning x509userproxy back on for Titan jobs) led to submitted jobs that caused trouble for later job submissions. We changed this back and will throw out runs currently in progress on Titan. | ||

| + | |||

| + | On 9/14 we had to restart the rvgahp server on Titan. | ||

| + | |||

| + | On 9/14 at 10 am PDT 2 XK and 6 XE reservations were restarted. | ||

| + | |||

| + | On 9/14 around noon the SCEC disks were unmounted due to an unspecified issue at HPC. | ||

| + | |||

| + | We lost 3 XE reservations on the night of 9/14-9/15. 2 of them were resumed at 10 am on 9/19. | ||

| + | |||

| + | All reservations were terminated in the morning of 9/21 due to a full system job on Blue Waters. | ||

| + | |||

| + | On 9/21 we switched to using bundled SGT jobs on Titan, which increased SGT throughput. | ||

| + | |||

| + | 2 XK reservations were restarted 9/23 at 8 am. 3 XE reservations were restarted 9/25 at 7 am, and 2 more XE reservations were started 9/27 at 10:15 am. | ||

| + | |||

| + | The rvgahp server on Titan needed to be restarted on 9/27. | ||

| + | |||

| + | 2 of the XK reservations were lost around 4 am on 10/1: a disconnect between shock and Blue Waters meant post-processing jobs didn't clear the queue, so new SGT jobs weren't queued. The reservations were restored on 10/4 at 8:30 am. | ||

| + | |||

| + | In the afternoon of 10/1, we exceeded 125% allocation usage on Titan, and our jobs were heavily penalized. After working this out with the OLCF staff, we returned to normal priority levels early in the morning of 10/4. | ||

| + | |||

| + | 2 XE reservations were removed to clear room for additional reservations on 10/4. | ||

| + | |||

| + | 1 XK reservation was removed around 4 pm on 10/5; a second was removed around 5 pm on 10/6. | ||

| + | |||

| + | The rvgahp server on Titan was restarted on 1/24. | ||

| + | |||

| + | On 1/25, we discovered that the host certificate on hpc-transfer no longer had the hostname in it, causing our globus-url-copy transfers to fail. We suspended workflows on Blue Waters. | ||

| + | |||

| + | The rvgahp server on Titan was restarted on 1/28. | ||

| + | |||

| + | On 1/29, we modified pegasus-transfer to explicitly add the hpc-transfer subject using -ds to all hpc-transfer transfers, and restarted workflows on Blue Waters. | ||

| + | |||

| + | On 2/1, we stopped production runs on Titan, since our 13th month expired. | ||

| + | |||

| + | On 2/28 at 11 am PST, we resumed 2x1208 XK reservations on Blue Waters. | ||

| + | |||

| + | We lost 1 XK reservation around 11 am PST on 3/1, and the other at 7 am PST on 3/2. | ||

| + | |||

| + | Both reservations were resumed on 3/6 at noon CST. We lost 1 around 4 am on 3/7, due to more than 5 jobs at the top of the queue being for the same reservation, which means the other jobs are blocked. We returned the other reservation on 3/8 at about 9 pm, since all the 1600-node XK jobs had completed. | ||

| + | |||

| + | === Application-level Metrics === | ||

| + | |||

| + | * Makespan: 3079.3 hrs (DST started during the restart) (does not include hiatus) | ||

| + | ** 1402.6 hrs in 2018 | ||

| + | ** 1676.7 hrs in 2019 | ||

| + | * Uptime: ? hrs (downtime during BW and HPC system maintenance) | ||

| + | |||

| + | Note: uptime is what's used for calculating the averages for jobs, nodes, etc. | ||

| + | |||

| + | * 869 runs | ||

| + | |||

| + | The table below indicates how many SGT and PP were run on Blue Waters and Titan. | ||

| + | |||

| + | {| border=1 cellpadding="2" | ||

| + | ! !! BW SGTs !! Titan SGTs !! Total | ||

| + | |- | ||

| + | ! BW PP | ||

| + | | 444 || 290 || 734 | ||

| + | |- | ||

| + | ! Titan PP | ||

| + | | 0 || 135 || 135 | ||

| + | |- | ||

| + | ! Total | ||

| + | | 444 || 425 || 869 | ||

| + | |} | ||

| + | |||

| + | * 8,091 UCERF2 ruptures considered | ||

| + | * 523,490 rupture variations considered | ||

| + | * 202,820,113 seismogram pairs generated | ||

| + | * 30,423,016,950 intensity measures generated (150 per seismogram pair) | ||

| + | |||

| + | * 1625 workflows run | ||

| + | ** 1329 successful | ||

| + | *** 432 integrated | ||

| + | *** 460 SGT | ||

| + | *** 437 PP | ||

| + | ** 296 failed | ||

| + | *** 250 integrated | ||

| + | *** 23 SGT | ||

| + | *** 23 PP | ||

| + | |||

| + | * 18,065 remote jobs submitted (7757 on Titan, 10,308 on Blue Waters) | ||

| + | * 21,220 local jobs submitted | ||

| + | * 6212844.1 node-hrs used (119,630,290 core-hrs) | ||

| + | * 7149 node-hrs per site (137,664 core-hrs) | ||

| + | * On average, 5 jobs running, with a max of 48 | ||

| + | * Average of 1879 nodes used, with a maximum of 16,219 [1480 BW, 14,739 Titan (79%)] (279,984 cores). | ||

| + | |||

| + | * Total data generated: ? | ||

| + | ** ? TB SGTs generated (? GB per single SGT) | ||

| + | ** ? TB intermediate data generated (? GB per velocity file, duplicate SGTs) | ||

| + | ** 14.98 TB output data (8,684,106 files) | ||

| + | |||

| + | Delay per job (using a 14-day, no-restarts cutoff: 999 workflows, 9697 jobs) was mean: 10776 sec, median: 271, min: 0, max: 744494, sd: 37410 | ||

| + | |||

| + | {| border=1 | ||

| + | ! Bins (sec) | ||

| + | | 0 || || 60 || || 120 || || 180 || || 240 || || 300 || || 600 || || 900 || || 1800 || || 3600 || || 7200 || || 14400 || || 43200 || || 86400 || || 172800 || || 259200 || || 604800 | ||

| + | |- | ||

| + | ! Jobs per bin | ||

| + | | || 2400 || || 875 || || 595 || || 610 || || 677 || || 1833 || || 294 || || 188 || || 180 || || 288 || || 326 || || 667 || || 445 || || 234 || || 43 || || 36 | ||

| + | |} | ||
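The binning behind the table above can be sketched as follows. This is a minimal illustration, not the study's analysis script: the function name `bin_delays` and the half-open interval convention (lower edge included, upper edge excluded) are assumptions. The bin edges and counts are copied from the table.

```python
from bisect import bisect_right

# Bin edges in seconds, copied from the "Bins (sec)" row of the table.
BIN_EDGES = [0, 60, 120, 180, 240, 300, 600, 900, 1800, 3600, 7200,
             14400, 43200, 86400, 172800, 259200, 604800]

# Per-bin job counts, copied from the "Jobs per bin" row of the table.
REPORTED_COUNTS = [2400, 875, 595, 610, 677, 1833, 294, 188, 180, 288,
                   326, 667, 445, 234, 43, 36]


def bin_delays(delays_sec, edges=BIN_EDGES):
    """Return (counts, overflow).

    counts[i] holds delays with edges[i] <= d < edges[i + 1] (assumed
    convention). Delays below the first edge or at/above the last edge
    are tallied in overflow.
    """
    counts = [0] * (len(edges) - 1)
    overflow = 0
    for d in delays_sec:
        i = bisect_right(edges, d) - 1
        if 0 <= i < len(counts):
            counts[i] += 1
        else:
            overflow += 1
    return counts, overflow
```

The reported counts sum to 9691, six fewer than the 9697 jobs considered. One plausible reading, which is an assumption, is that the remaining jobs had delays beyond the last bin edge of 604800 sec (the reported maximum is 744494 sec).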

| + | |||

| + | * Application parallel node speedup (node-hours divided by makespan) was 2018x. (Divided by uptime: ?x) | ||

| + | * Application parallel workflow speedup (number of workflows times average workflow makespan divided by application makespan) was 35.3x. (Divided by uptime: ?x) | ||
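As a worked check of the application-level figures, the sketch below recomputes them from numbers reported on this page. Two inputs are assumptions: the factor of 30 Titan SUs per node-hour (implied by the reported 74,111,723 SUs and 2470390.77 node-hrs) and the use of the 1329 successful workflows with the 294815 sec mean makespan for the workflow speedup, which happens to reproduce the reported 35.3x.

```python
# Values copied from this page.
TITAN_SUS = 74_111_723
BW_NODE_HRS = 3_742_453.33
MAKESPAN_HRS = 3079.3
RUNS = 869
MEAN_WORKFLOW_MAKESPAN_SEC = 294_815

# Assumptions (see the text above).
TITAN_SUS_PER_NODE_HR = 30
WORKFLOWS_COUNTED = 1329


def total_node_hours():
    """Titan node-hours (converted from SUs) plus Blue Waters node-hours."""
    return TITAN_SUS / TITAN_SUS_PER_NODE_HR + BW_NODE_HRS


def node_speedup():
    """Application parallel node speedup: node-hours divided by makespan."""
    return total_node_hours() / MAKESPAN_HRS


def node_hours_per_site():
    """Average node-hours per run."""
    return total_node_hours() / RUNS


def workflow_speedup():
    """Workflow count times mean workflow makespan over application makespan."""
    mean_hrs = MEAN_WORKFLOW_MAKESPAN_SEC / 3600.0
    return WORKFLOWS_COUNTED * mean_hrs / MAKESPAN_HRS


if __name__ == "__main__":
    print(f"total node-hrs:    {total_node_hours():.1f}")
    print(f"node speedup:      {node_speedup():.0f}x")
    print(f"node-hrs per site: {node_hours_per_site():.0f}")
    print(f"workflow speedup:  {workflow_speedup():.1f}x")
```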

| + | |||

| + | ==== Titan ==== | ||

| + | * Wallclock time: ? hrs | ||

| + | * Uptime: ? hrs (all downtime was SCEC) | ||

| + | * SGTs generated for ? sites, post-processing for ? sites | ||

| + | * ? jobs submitted to the Titan queue | ||

| + | * Running jobs: average ?, max of ? | ||

| + | * Idle jobs: average ?, max of ? | ||

| + | * Nodes: average ? (? cores), max ? (? cores, ?% of Titan) | ||

| + | * From Titan portal, 74,111,723 SUs used (2470390.77 node-hrs) | ||

| + | |||

| + | Delay per job (14-day cutoff, no restarts, ? jobs): mean ? sec, median: ?, min: 0, max: ?, sd: ? | ||

| + | |||

| + | {| border=1 | ||

| + | ! Bins (sec) | ||

| + | | 0 || || 60 || || 120 || || 180 || || 240 || || 300 || || 600 || || 900 || || 1800 || || 3600 || || 7200 || || 14400 || || 43200 || || 86400 || || 172800 || || 259200 || || 604800 | ||

| + | |- | ||

| + | ! Jobs per bin | ||

| + | | || ? || || ? || || ? || || ? || || ? || || ? || || ? || || ? || || ? || || ? || || ? || || ? || || ? || || ? || || ? || || ? | ||

| + | |} | ||

| + | |||

| + | ==== Blue Waters ==== | ||

| + | |||

| + | The Blue Waters cronjob which generated the queue information file was down for about 2 days, so we reconstructed its contents from the job history query functionality on the Blue Waters portal. | ||

| + | |||

| + | * Wallclock time: ? hrs | ||

| + | * Uptime: ? hrs (? SCEC downtime including USC, ? BW downtime) | ||

| + | * Post-processing for ? sites | ||

| + | * 10308 jobs run on Blue Waters | ||

| + | * Running jobs: average ? (job history), max of ? | ||

| + | * Idle jobs: average ?, max of ? (this comes from the cronjob) | ||

| + | * Nodes: average ? (? cores), max ? (? cores, ?% of Blue Waters) | ||

| + | * Based on the Blue Waters jobs report, 3742453.33 node-hours used (80,104,038 core-hrs) | ||

| + | ** XK usage: 2478382.51 node-hours | ||

| + | ** XE usage: 1264070.81 node-hours | ||

| + | |||

| + | Delay per job (14-day cutoff, no restarts, ? jobs): mean ? sec, median: ?, min: 0, max: ?, sd: ? | ||

| + | |||

| + | {| border=1 | ||

| + | ! Bins (sec) | ||

| + | | 0 || || 60 || || 120 || || 180 || || 240 || || 300 || || 600 || || 900 || || 1800 || || 3600 || || 7200 || || 14400 || || 43200 || || 86400 || || 172800 || || 259200 || || 604800 | ||

| + | |- | ||

| + | ! Jobs per bin | ||

| + | | || 20 || || 6 || || 68 || || 164 || || 201 || || 677 || || 253 || || 170 || || 69 || || 142 || || 204 || || 166 || || 49 || || 0 || || 0 || || 0 | ||

|} | |} | ||

| + | |||

| + | === Workflow-level Metrics === | ||

| + | |||

| + | * The average makespan of a workflow (14-day cutoff, workflows with retries ignored, so 999/1119 workflows considered) was 294815 sec (81.9 hrs), median: 159643 (44.3 hrs), min: 108, max: 1203152, sd: 271303 | ||

| + | **For workflows with PP on Blue Waters (409 workflows), mean: 258152 sec (71.7 hrs), median: 159643 sec (44.3 hrs), min: 108, max: 1176182, sd: 241003 | ||

| + | **For integrated workflows on Titan (221 workflows), mean: 178534 sec (49.6 hrs), median: 424 sec (this is weird), min: 239, max: 936648, sd: 275103 | ||

| + | ** Integrated workflows on Titan were faster than splitting them up, which is the opposite of Study 17.3. | ||

| + | |||

| + | * Workflow parallel core speedup (520 workflows) was mean: 820, median: 464, min: 0, max: 4326, sd: 931 | ||

| + | * Workflow parallel node speedup (520 workflows) was mean: 25.1, median: 14.0, min: 0.1, max: 135.5, sd: 29.1 | ||

| + | |||

| + | === Job-level Metrics === | ||

| + | |||

| + | The parsing results are below. | ||

| + | |||

| + | Job attempts, over 33563 jobs: | ||

| + | Mean: 1.206835, median: 1.000000, min: 1.000000, max: 36.000000, sd: 1.178194 | ||

| + | |||

| + | Remote job attempts, over 17441 remote jobs: | ||

| + | Mean: 1.244539, median: 1.000000, min: 1.000000, max: 36.000000, sd: 1.273279 | ||

| + | |||

| + | Sub-workflow attempts, over 3739 sub-workflows: | ||

| + | Mean: 1.078898, median: 1.000000, min: 1.000000, max: 6.000000, sd: 0.398488 | ||
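The summary lines in this section all follow the same pattern, which can be sketched as below. This is an illustration, not the parsing script that produced the results; the function name `summarize` is hypothetical. The reported sd values appear to be population standard deviations: AWP_AWP has 2 jobs with runtimes 1063 and 1076 and a reported sd of 6.5, which matches `statistics.pstdev` rather than the sample standard deviation (about 9.19).

```python
import statistics


def summarize(values):
    """Format mean/median/min/max/sd in the style used on this page.

    Uses the population standard deviation (pstdev), an inference from
    the AWP_AWP entry rather than a documented choice.
    """
    return "mean {:f}, median: {:f}, min: {:f}, max: {:f}, sd: {:f}".format(
        statistics.mean(values),
        statistics.median(values),
        min(values),
        max(values),
        statistics.pstdev(values),
    )


if __name__ == "__main__":
    # The two AWP_AWP runtimes implied by the reported min, max, and mean.
    print("Runtime:", summarize([1063, 1076]))
```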

| + | |||

| + | |||

| + | *PreCVM_PreCVM (664 jobs): | ||

| + | Runtime: mean 252.414157, median: 230.000000, min: 11.000000, max: 595.000000, sd: 115.279853 | ||

| + | Attempts: mean 1.477410, median: 1.000000, min: 1.000000, max: 5.000000, sd: 0.935946 | ||

| + | *GenSGTDax_GenSGTDax (664 jobs): | ||

| + | Runtime: mean 5.192771, median: 2.000000, min: 2.000000, max: 168.000000, sd: 14.013619 | ||

| + | Attempts: mean 1.457831, median: 1.000000, min: 1.000000, max: 5.000000, sd: 0.907482 | ||

| + | *UCVMMesh_UCVMMesh_titan (83 jobs): | ||

| + | Runtime: mean 1258.349398, median: 1423.000000, min: 557.000000, max: 2117.000000, sd: 446.440283 | ||

| + | Attempts: mean 2.120482, median: 2.000000, min: 1.000000, max: 4.000000, sd: 1.091010 | ||

| + | *UCVMMesh_UCVMMesh_bluewaters (440 jobs): | ||

| + | Runtime: mean 1439.618182, median: 1555.500000, min: 128.000000, max: 3794.000000, sd: 525.302467 | ||

| + | Attempts: mean 1.565909, median: 1.000000, min: 1.000000, max: 21.000000, sd: 1.911739 | ||

| + | *Smooth_Smooth (520 jobs): | ||

| + | Runtime: mean 3854.613462, median: 3321.000000, min: 63.000000, max: 9009.000000, sd: 2661.493331 | ||

| + | Attempts: mean 1.401923, median: 1.000000, min: 1.000000, max: 8.000000, sd: 0.985470 | ||

| + | *Velocity_Params (520 jobs): | ||

| + | Runtime: mean 18.851923, median: 17.000000, min: 6.000000, max: 205.000000, sd: 15.779587 | ||

| + | Attempts: mean 1.294231, median: 1.000000, min: 1.000000, max: 6.000000, sd: 0.723218 | ||

| + | *PreSGT_PreSGT (524 jobs): | ||

| + | Runtime: mean 261.293893, median: 262.000000, min: 20.000000, max: 490.000000, sd: 106.403495 | ||

| + | Attempts: mean 1.383588, median: 1.000000, min: 1.000000, max: 21.000000, sd: 1.176989 | ||

| + | *PreAWP_PreAWP (1 jobs): | ||

| + | Runtime: mean 14.000000, median: 14.000000, min: 14.000000, max: 14.000000, sd: 0.000000 | ||

| + | Attempts: mean 11.000000, median: 11.000000, min: 11.000000, max: 11.000000, sd: 0.000000 | ||

| + | *PreAWP_GPU_bluewaters (439 jobs): | ||

| + | Runtime: mean 221.653759, median: 221.000000, min: 30.000000, max: 596.000000, sd: 117.454332 | ||

| + | Attempts: mean 1.136674, median: 1.000000, min: 1.000000, max: 4.000000, sd: 0.495537 | ||

| + | *PreAWP_GPU_titan (80 jobs): | ||

| + | Runtime: mean 135.837500, median: 128.500000, min: 65.000000, max: 292.000000, sd: 56.891881 | ||

| + | Attempts: mean 2.062500, median: 2.000000, min: 1.000000, max: 6.000000, sd: 1.099361 | ||

| + | *AWP_AWP (2 jobs): | ||

| + | Runtime: mean 1069.500000, median: 1069.500000, min: 1063.000000, max: 1076.000000, sd: 6.500000 | ||

| + | Attempts: mean 10.000000, median: 10.000000, min: 10.000000, max: 10.000000, sd: 0.000000 | ||

| + | Cores used in job: mean 1700.000000, median: 1700.000000, min: 1700.000000, max: 1700.000000, sd: 0.000000 | ||

| + | Cores, binned: | ||

| + | [ 0 7000 8000 9000 10000 11000 12000 13000 14000 15000 17000 20000 | ||

| + | 24000] | ||

| + | [2 0 0 0 0 0 0 0 0 0 0 0] | ||

| + | *AWP_GPU_bluewaters (849 jobs): | ||

| + | Runtime: mean 3909.983510, median: 5082.000000, min: 0.000000, max: 7184.000000, sd: 1803.826623 | ||

| + | Attempts: mean 2.065960, median: 1.000000, min: 1.000000, max: 26.000000, sd: 2.612443 | ||

| + | *AWP_GPU_titan (146 jobs): | ||

| + | Runtime: mean 3826.595890, median: 4779.500000, min: 1323.000000, max: 6430.000000, sd: 1827.245035 | ||

| + | Attempts: mean 2.253425, median: 2.000000, min: 1.000000, max: 11.000000, sd: 1.886548 | ||

| + | *MD5_MD5 (140 jobs): | ||

| + | Runtime: mean 2161.507143, median: 2343.000000, min: 791.000000, max: 4049.000000, sd: 1152.705981 | ||

| + | Attempts: mean 1.242857, median: 1.000000, min: 1.000000, max: 7.000000, sd: 0.818286 | ||

| + | *AWP_NaN (988 jobs): | ||

| + | Runtime: mean 1527.632591, median: 1766.000000, min: 79.000000, max: 5322.000000, sd: 778.486319 | ||

| + | Attempts: mean 1.121457, median: 1.000000, min: 1.000000, max: 7.000000, sd: 0.526067 | ||

| + | *PostAWP_PostAWP (988 jobs): | ||

| + | Runtime: mean 3462.478745, median: 2482.000000, min: 193.000000, max: 10561.000000, sd: 2134.121786 | ||

| + | Attempts: mean 1.194332, median: 1.000000, min: 1.000000, max: 27.000000, sd: 1.373067 | ||

| + | *cleanup_AWP (38 jobs): | ||

| + | Runtime: mean 2.000000, median: 2.000000, min: 2.000000, max: 2.000000, sd: 0.000000 | ||

| + | Attempts: mean 1.000000, median: 1.000000, min: 1.000000, max: 1.000000, sd: 0.000000 | ||

| + | *SetJobID_SetJobID (483 jobs): | ||

| + | Runtime: mean 0.012422, median: 0.000000, min: 0.000000, max: 2.000000, sd: 0.128097 | ||

| + | Attempts: mean 1.260870, median: 1.000000, min: 1.000000, max: 5.000000, sd: 0.625759 | ||

| + | *SetPPHost (482 jobs): | ||

| + | Runtime: mean 0.016598, median: 0.000000, min: 0.000000, max: 2.000000, sd: 0.143078 | ||

| + | Attempts: mean 1.051867, median: 1.000000, min: 1.000000, max: 4.000000, sd: 0.314594 | ||

| + | *CheckSgt_CheckSgt (1805 jobs): | ||

| + | Runtime: mean 2586.154017, median: 1654.000000, min: 0.000000, max: 5780.000000, sd: 1392.188892 | ||

| + | Attempts: mean 1.142936, median: 1.000000, min: 1.000000, max: 10.000000, sd: 0.630316 | ||

| + | *DirectSynth_titan (40 jobs): | ||

| + | Runtime: mean 22906.000000, median: 14900.500000, min: 1405.000000, max: 43057.000000, sd: 11931.560965 | ||

| + | Attempts: mean 1.850000, median: 1.000000, min: 1.000000, max: 10.000000, sd: 2.080264 | ||

| + | *DirectSynth_titan-pilot (82 jobs): | ||

| + | Runtime: mean 21543.939024, median: 14309.000000, min: 7373.000000, max: 39383.000000, sd: 11160.537613 | ||

| + | Attempts: mean 1.548780, median: 1.000000, min: 1.000000, max: 13.000000, sd: 1.939024 | ||

| + | *DirectSynth_bluewaters (742 jobs): | ||

| + | Runtime: mean 19419.758760, median: 12736.500000, min: 31.000000, max: 54036.000000, sd: 16390.290755 | ||

| + | Attempts: mean 2.222372, median: 1.000000, min: 1.000000, max: 23.000000, sd: 2.157013 | ||

| + | *Load_Amps (2562 jobs): | ||

| + | Runtime: mean 818.136222, median: 689.000000, min: 2.000000, max: 3706.000000, sd: 529.591668 | ||

| + | Attempts: mean 1.191647, median: 1.000000, min: 1.000000, max: 27.000000, sd: 1.535541 | ||

| + | *Check_DB (2558 jobs): | ||

| + | Runtime: mean 1.234949, median: 1.000000, min: 0.000000, max: 142.000000, sd: 4.002335 | ||

| + | Attempts: mean 1.000782, median: 1.000000, min: 1.000000, max: 2.000000, sd: 0.027951 | ||

| + | *Curve_Calc (2559 jobs): | ||

| + | Runtime: mean 78.173505, median: 51.000000, min: 20.000000, max: 928.000000, sd: 89.542157 | ||

| + | Attempts: mean 1.184838, median: 1.000000, min: 1.000000, max: 28.000000, sd: 1.649372 | ||

| + | *Disaggregate_Disaggregate (853 jobs): | ||

| + | Runtime: mean 28.800703, median: 22.000000, min: 14.000000, max: 315.000000, sd: 29.156973 | ||

| + | Attempts: mean 1.117233, median: 1.000000, min: 1.000000, max: 12.000000, sd: 1.006588 | ||

| + | *DB_Report (853 jobs): | ||

| + | Runtime: mean 38.652989, median: 25.000000, min: 1.000000, max: 427.000000, sd: 47.366364 | ||

| + | Attempts: mean 1.361079, median: 1.000000, min: 1.000000, max: 36.000000, sd: 1.888693 | ||

| + | *CyberShakeNotify_CS (849 jobs): | ||

| + | Runtime: mean 0.089517, median: 0.000000, min: 0.000000, max: 35.000000, sd: 1.267142 | ||

| + | Attempts: mean 1.001178, median: 1.000000, min: 1.000000, max: 2.000000, sd: 0.034300 | ||

| + | *UpdateRun_UpdateRun (2916 jobs): | ||

| + | Runtime: mean 0.015432, median: 0.000000, min: 0.000000, max: 7.000000, sd: 0.214612 | ||

| + | Attempts: mean 1.118999, median: 1.000000, min: 1.000000, max: 10.000000, sd: 0.520402 | ||

| + | *create_dir (8703 jobs): | ||

| + | Runtime: mean 3.932782, median: 2.000000, min: 2.000000, max: 1919.000000, sd: 25.361338 | ||

| + | Attempts: mean 1.163392, median: 1.000000, min: 1.000000, max: 21.000000, sd: 0.633586 | ||

| + | *register_bluewaters (1499 jobs): | ||

| + | Runtime: mean 333.757171, median: 44.000000, min: 1.000000, max: 1641.000000, sd: 379.561683 | ||

| + | Attempts: mean 1.171448, median: 1.000000, min: 1.000000, max: 19.000000, sd: 1.330842 | ||

| + | *register_titan (180 jobs): | ||

| + | Runtime: mean 138.122222, median: 1.000000, min: 1.000000, max: 1365.000000, sd: 277.654735 | ||

| + | Attempts: mean 1.611111, median: 1.000000, min: 1.000000, max: 10.000000, sd: 2.158675 | ||

| + | *register_titan-pilot (78 jobs): | ||

| + | Runtime: mean 937.794872, median: 883.500000, min: 346.000000, max: 1681.000000, sd: 363.562620 | ||

| + | Attempts: mean 2.166667, median: 1.000000, min: 1.000000, max: 11.000000, sd: 3.044190 | ||

| + | *stage_in (5212 jobs): | ||

| + | Runtime: mean 162.318304, median: 6.000000, min: 2.000000, max: 26501.000000, sd: 755.009697 | ||

| + | Attempts: mean 1.267076, median: 1.000000, min: 1.000000, max: 21.000000, sd: 1.345969 | ||

| + | *stage_out (1760 jobs): | ||

| + | Runtime: mean 5812.590341, median: 1749.000000, min: 7.000000, max: 175542.000000, sd: 18658.778308 | ||

| + | Attempts: mean 1.239773, median: 1.000000, min: 1.000000, max: 21.000000, sd: 1.233716 | ||

| + | *stage_inter (664 jobs): | ||

| + | Runtime: mean 7.253012, median: 4.000000, min: 3.000000, max: 142.000000, sd: 14.167537 | ||

| + | Attempts: mean 1.451807, median: 1.000000, min: 1.000000, max: 5.000000, sd: 0.905520 | ||

| + | *clean_up (98 jobs): | ||

| + | Runtime: mean 62.367347, median: 12.000000, min: 4.000000, max: 597.000000, sd: 127.331192 | ||

| + | Attempts: mean 1.275510, median: 1.000000, min: 1.000000, max: 3.000000, sd: 0.635363 | ||

| + | *cleanup_CyberShake (42 jobs): | ||

| + | Runtime: mean 2.000000, median: 2.000000, min: 2.000000, max: 2.000000, sd: 0.000000 | ||

| + | Attempts: mean 1.023810, median: 1.000000, min: 1.000000, max: 2.000000, sd: 0.152455 | ||

== Production Checklist == | == Production Checklist == | ||

| + | *<s>Install UCVM 18.5.0 on Titan</s> | ||

| + | *<s>Compute test hazard curves for 4 sites - on both systems for 2 of them.</s> | ||

| + | *<s>Compute test curves for 2 overlapping sites.</s> | ||

| + | *<s>Integrate Vs30 calculation and database population into workflow</s> | ||

| + | *<s>Create Vs min=250 m/s plots.</s> | ||

| + | *<s>Spec Vsmin=250 runs</s> | ||

| + | *<s>Create XML file describing study for web monitoring tool.</s> | ||

| + | *<s>Make decision regarding sites with 1D model.</s> | ||

| + | *<s>Activate Blue Waters and Titan quota cronjobs.</s> | ||

| + | *<s>Activate Blue Waters and Titan usage cronjobs.</s> | ||

| + | *<s>Improve parallelism/scalability of smoothing code.</s> | ||

| + | *<s>Tag code in repository</s> | ||

| + | *<s>Calculate background seismicity impact for most-likely-to-be-impacted sites.</s> (Will defer until after study completion) | ||

| + | *<s>Verify that Titan and Blue Waters codebases are in sync with each other and the repo.</s> | ||

| + | *<s>Prepare pending file</s> | ||

| + | *<s>Get usage stats from Titan and Blue Waters before beginning study.</s> | ||

| + | *<s>Hold science and technical readiness reviews.</s> | ||

| + | *<s>Calls with Blue Waters and Titan staff</s> | ||

| + | *<s>Switch to more recent version of Rob's projection code</s> | ||

== Presentations, Posters, and Papers == | == Presentations, Posters, and Papers == | ||

| + | * [[File:Science_Readiness_Review.pptx]] | ||

| + | * [[File:Technical_Readiness_Review.pptx]] | ||

| + | * [[File:Overview_for_NCSA.pptx]] | ||

| + | * [[File:Overview_for_OLCF.pptx]] | ||

| + | * [http://hypocenter.usc.edu/research/Goulet/Presentations/Goulet_NorCalCVMWorkshop_CyberShake18.8_20190516.pdf Presentation at USGS NorCal Workshop, May 16, 2019] | ||

== Related Entries == | == Related Entries == | ||

Latest revision as of 06:48, 26 January 2022

CyberShake 18.8 is a computational study to perform CyberShake in a new region, the extended Bay Area. We plan to use a combination of 3D models (USGS Bay Area detailed and regional, CVM-S4.26.M01, CCA-06) with a minimum Vs of 500 m/s and a frequency of 1 Hz. We will use the GPU implementation of AWP-ODC-SGT, the Graves & Pitarka (2014) rupture variations with 200m spacing and uniform hypocenters, and the UCERF2 ERF. The SGT and post-processing calculations will both be run on both NCSA Blue Waters and OLCF Titan.

Contents

- 1 Status

- 2 Data Products

- 3 Science Goals

- 4 Technical Goals

- 5 Sites

- 6 Projection Analysis

- 7 Velocity Models

- 8 Verification

- 9 Background Seismicity

- 10 SGT time length

- 11 Performance Enhancements (over Study 17.3)

- 12 Output Data Products

- 13 Computational and Data Estimates

- 14 Lessons Learned

- 15 Performance Metrics

- 16 Production Checklist

- 17 Presentations, Posters, and Papers

- 18 Related Entries

Status

This study is complete.

The study began on Friday, August 17, 2018 at 20:17:46 PDT.

It was paused on Monday, October 15, 2018 at about 6:53 PDT, then resumed on Thursday, January 17, 2019 at 11:21:26 PST.

The study was completed on Thursday, March 28, 2019, at 9:04:38 PDT.

Data Products

Hazard maps from this study are available here.

Statewide maps are available here.

Hazard curves produced from Study 18.8 have the Hazard_Dataset_ID 87.

Individual runs can be identified in the CyberShake database by searching for runs with Study_ID=9.

Selected data products can be viewed at CyberShake Study 18.8 Data Products.

Science Goals

The science goals for this study are:

- Expand CyberShake to the Bay Area.

- Calculate CyberShake results with the USGS Bay Area velocity model as the primary model.

- Calculate CyberShake results at selected locations with Vs min = 250 m/s.

Technical Goals

- Perform the largest CyberShake study to date.

Sites

The Study 18.8 box is 180 x 390 km, with the long edge rotated 27 degrees counter-clockwise from vertical. The corners are defined to be:

South: (-121.51,35.52) West: (-123.48,38.66) North: (-121.62,39.39) East: (-119.71,36.22)
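As a sanity check on the corner coordinates, the side lengths of the box can be recomputed with great-circle distances. The sketch below assumes a spherical Earth of radius 6371 km and treats the corners as (longitude, latitude) pairs; the function names are hypothetical and this is not part of the CyberShake codebase.

```python
import math

EARTH_RADIUS_KM = 6371.0  # spherical-Earth assumption

# Corners as (longitude, latitude) in degrees, copied from the text above.
CORNERS = {
    "South": (-121.51, 35.52),
    "West": (-123.48, 38.66),
    "North": (-121.62, 39.39),
    "East": (-119.71, 36.22),
}


def haversine_km(p, q):
    """Great-circle distance in km between two (lon, lat) points in degrees."""
    lon1, lat1, lon2, lat2 = map(math.radians, (p[0], p[1], q[0], q[1]))
    a = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def side_lengths_km():
    """Lengths of the four box edges, keyed by the corners they join."""
    edges = [("South", "East"), ("West", "North"),
             ("South", "West"), ("East", "North")]
    return {f"{a}-{b}": haversine_km(CORNERS[a], CORNERS[b]) for a, b in edges}


if __name__ == "__main__":
    for edge, km in side_lengths_km().items():
        print(f"{edge}: {km:.1f} km")
```

Under these assumptions the two short edges come out close to 180 km and the two long edges close to 390 km, consistent with the stated box dimensions.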

We are planning to run 869 sites, 837 of which are new, as part of this study.

These sites include:

- 77 cities (74 new) + 46 sites of interest

- 10 new missions

- 139 CISN stations (136 new)

- 597 sites along a 10 km grid (571 new)

Of these sites, 32 overlap with the Study 17.3 region for verification.

A KML file with all these sites is available with names or without names.

Projection Analysis

As our simulation region grew larger, we needed to review the impact of the projection we are using for the simulations. An analysis of the impact of various projections by R. Graves is summarized in this posting:

Velocity Models

For Study 18.8, we initially decided to construct the velocity mesh by querying models in the following order (but changed the order later, for production):

- USGS Bay Area model

- CCA-06 with the Ely GTL applied

- CVM-S4.26.M01 (which includes a 1D background model).

A KML file showing the model regions is available here.

We will use a minimum Vs of 500 m/s. Smoothing will be applied 20 km on either side of any velocity model interface.

A thorough investigation was done to determine these parameters; a more detailed discussion is available at Study 18.5 Velocity Model Comparisons.

Vs=250m/s experiment

We would like to select a subset of sites and calculate hazard at 1 Hz and minimum Vs=250 m/s. Below are velocity plots with this lower Vs cutoff.

Verification

We have selected the following 4 sites for verification, one from each corner of the box:

- s4518

- s975

- s2189

- s2221

We will run s975 at both 50 km depth, ND=50 and 80 km depth, ND=80.

We will run s4518 and s2221 on both Blue Waters and Titan.

Hazard Curves

| Site | 2 sec RotD50 | 3 sec RotD50 | 5 sec RotD50 | 10 sec RotD50 |

|---|---|---|---|---|

| s4518 (south corner) | ||||

| s2221 (north corner) | ||||

| s975 (east corner) |

Comparisons with Study 17.3

| Site | 2 sec RotD50 | 3 sec RotD50 | 5 sec RotD50 | 10 sec RotD50 |

|---|---|---|---|---|

| s975 (east corner) |

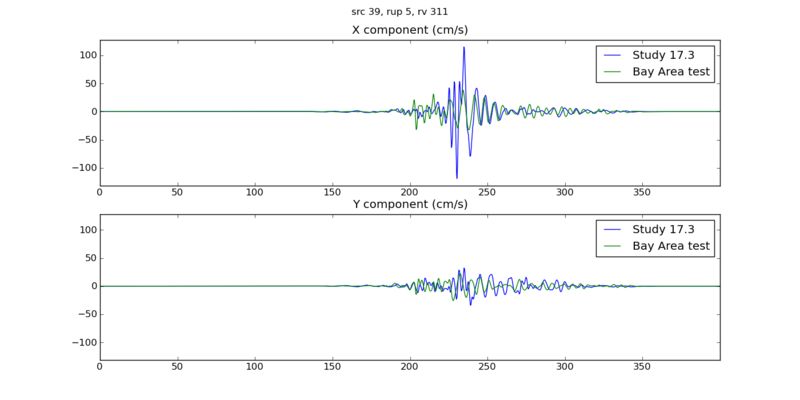

Below are seismograms comparing a northern SAF event (source 39, rupture 5, rupture variation 311, M8.15 with hypocenter just offshore about 60 km south of Eureka) and a southern SAF event (source 59, rupture 0, rupture variation 0, M7.75 with hypocenter near Bombay Beach) between the Bay Area test with Study 17.3 parameters, Study 17.3, and Study 17.3 parameters but Bay Area tiling. The only difference is that the smoothing zone is larger in the Bay Area test + 17.3 parameters (I forgot to change it). Overall, the matches between Study 17.3 and the BA test + 17.3 parameters are excellent. Once the tiling is changed, we start to see differences, especially in the northern CA event.

To help illuminate the differences in velocity model, below are surface and vertical plots. The star indicates the location of the s975 site. Both models were created with Vs min = 900 m/s, and no GTL applied to the CCA-06 model.

| Model | Horizontal (depth=0) | Vertical, parallel to box through site |

|---|---|---|

| s975, Study 17.3 tiling: 1)CCA-06, no GTL 2)USGS Bay Area 3)CVM-S4.26.M01 |

||

| s975, Bay Area: 1)USGS Bay Area 2)CCA-06, no GTL 3)CVM-S4.26.M01 |

We also calculated curves for 2 overlapping rock sites, s816 and s903. s816 is 22 km from the USGS/CCA model boundary, and outside of the smoothing zone; s903 is 7 km from the boundary.

| Site | 2 sec RotD50 | 3 sec RotD50 | 5 sec RotD50 | 10 sec RotD50 |

|---|---|---|---|---|

| s816 | ||||

| s903 |

Velocity model vertical slices for s816:

| Model | Horizontal (depth=0) | Vertical, parallel to box through site | |

|---|---|---|---|

| s816, Study 17.3 tiling: 1)CCA-06, no GTL 2)USGS Bay Area 3)CVM-S4.26.M01 |

|||

| s816, Bay Area: 1)USGS Bay Area 2)CCA-06, no GTL 3)CVM-S4.26.M01 |

These are seismograms for the same two events (NSAF, src 39 rup 5 rv 311 and SSAF, src 59 rup 0 rv 0). The southern events fit a good deal better than the northern.

| Site | Northern SAF event | Southern SAF event |

|---|---|---|

| s816 | ||

| s903 |

Configuration for this study

Based on these results, we've decided to use the Study 17.3 tiling order for the velocity models:

- CCA-06 with Ely GTL

- USGS Bay Area model

- CVM-S4.26.M01

All models will use minimum Vs=500 m/s.
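The tiling order and Vs floor can be illustrated with a small sketch: each mesh point is assigned to the first model in the priority list that covers it, and the resulting Vs is raised to the 500 m/s minimum. The query functions and their one-dimensional coverage ranges below are hypothetical stand-ins, not the UCVM API or the real model extents, and smoothing across model interfaces is not shown.

```python
MIN_VS = 500.0  # m/s, the minimum Vs stated above


def query_tiled(point, models, min_vs=MIN_VS):
    """Return (model_name, vs) from the first model that covers the point.

    models is a list of (name, query_fn) pairs in priority order; each
    query_fn returns Vs in m/s, or None where the model has no coverage.
    """
    for name, query in models:
        vs = query(point)
        if vs is not None:
            return name, max(vs, min_vs)
    raise ValueError(f"no model covers point {point!r}")


# Hypothetical toy models: coverage depends only on a 1D coordinate x (km).
def cca06_gtl(x):
    return 350.0 if 0 <= x < 100 else None


def usgs_bay_area(x):
    return 800.0 if 50 <= x < 200 else None


def cvms426_m01(x):
    return 1200.0  # includes a 1D background model, so it covers everything


# Production tiling order from the list above.
TILING = [
    ("CCA-06 with Ely GTL", cca06_gtl),
    ("USGS Bay Area", usgs_bay_area),
    ("CVM-S4.26.M01", cvms426_m01),
]

if __name__ == "__main__":
    for x in (25, 75, 150, 500):
        print(x, query_tiled(x, TILING))
```

In the toy example, the point at x = 75 falls inside both of the first two models, and the higher-priority one supplies the value.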

Here are comparison curves *without* the GTL, comparing Study 17.3 results, USGS Bay Area/CCA-06-GTL/CVM-S4.26.M01, and CCA-06-GTL/USGS Bay Area/CVM-S4.26.M01.

| Site | 10 sec RotD50 | 5 sec RotD50 | 3 sec RotD50 | 2 sec RotD50 |

|---|---|---|---|---|

| s975 | ||||

| s816 |

GTL

Here are comparison curves between CCA-06-GTL/USGS Bay Area/CVM-S4.26.M01 and CCA-06+GTL/USGS Bay Area/CVM-S4.26.M01, showing the impact of the GTL.

| Site | 10 sec RotD50 | 5 sec RotD50 | 3 sec RotD50 | 2 sec RotD50 |

|---|---|---|---|---|

| s975 |

Here are seismogram comparisons for the two San Andreas events with and without the GTL. Adding the GTL slightly amplifies and stretches the seismogram, which makes sense.

Northern event

Southern event

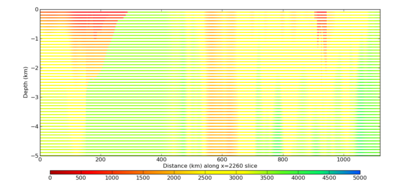

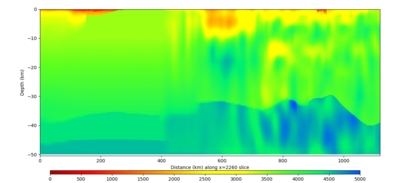

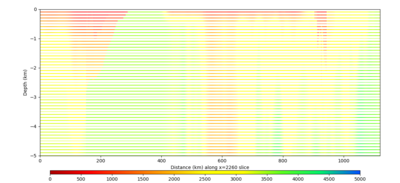

Here are vertical velocity slices through the volume, with NW on the left and SE on the right, both for the entire volume and zoomed in to the top 5 km.

| No GTL | ||

|---|---|---|

| GTL |

Seismograms

We've extracted 2 seismograms for s975, a northern SAF event and a southern, and plotted comparisons of the Bay Area test with the Study 17.3 3D results. Note the following differences between the two runs:

- Minimum Vs: 900 m/s for Study 17.3, 500 m/s for the test

- GTL: no GTL in CCA-06 for Study 17.3, a GTL for CCA-06 for the test

- DT: 0.0875 sec for Study 17.3, 0.05 sec for the test

- Velocity model priority: CCA-06, then USGS Bay Area, then CVM-S4.26.M01 for Study 17.3; USGS Bay Area, then CCA-06+GTL, then CVM-S4.26.M01 for the test

Northern Event

This seismogram is from source 39, rupture 5, rupture variation 311, a M8.15 northern San Andreas event with hypocenter just offshore, about 60 km south of Eureka.

Southern Event

This seismogram is from source 59, rupture 0, rupture variation 0, a M7.75 southern San Andreas event with hypocenter near Bombay Beach.

Blue Waters and Titan

We calculated hazard curves for the same site (s835) on Titan and Blue Waters. The curves overlap.

Background Seismicity

Statewide maps showing the impact of background seismicity are available here: CyberShake Background Seismicity

SGT time length

Below are SGT plots for s945. These SGTs are from the southwest and southeast bottom corners of the region, which are as far as you can get from this site. It looks like 200 sec isn't long enough for these distant points.

Based on this, we will increase the SGT simulation time to 300 sec if any hypocenters are more than 450 km away from the site, and increase the seismogram duration from 400 to 500 sec.
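The rule above can be written as a small function. This is a sketch of the stated decision only; the function name and the way distances are supplied are hypothetical.

```python
SGT_DEFAULT_SEC = 200       # original SGT simulation length
SGT_EXTENDED_SEC = 300      # length used when distant hypocenters are present
DISTANCE_CUTOFF_KM = 450.0  # site-to-hypocenter distance threshold
SEISMOGRAM_SEC = 500        # seismogram duration, increased from 400 sec


def sgt_length_sec(hypocenter_distances_km):
    """Pick the SGT simulation length for a site.

    hypocenter_distances_km: distances (km) from the site to each hypocenter.
    Returns 300 if any hypocenter is more than 450 km away, else 200.
    """
    if any(d > DISTANCE_CUTOFF_KM for d in hypocenter_distances_km):
        return SGT_EXTENDED_SEC
    return SGT_DEFAULT_SEC


if __name__ == "__main__":
    print(sgt_length_sec([120.0, 300.5]))  # all hypocenters within 450 km
    print(sgt_length_sec([120.0, 612.3]))  # one hypocenter beyond 450 km
```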

Longer SGT comparison

Below is a comparison of seismograms generated using the original length (200 sec SGTs, 400 sec seismograms, in blue) and the new length (300 sec SGTs, 500 sec seismograms, in green). The slight differences in wiggles are due to the shorter ones being generated with Wills 2015, and the longer with Wills 2006. Notice the additional content after about 350 seconds in the green trace.

Southern SAF (src 59, rup 0, rv 0):

Northern SAF (src 39, rup 5, rv 311):

Performance Enhancements (over Study 17.3)

Responses to Study 17.3 Lessons Learned

- Include plots of velocity models as part of readiness review when moving to new regions.

We have constructed many plots, and will include some in the science readiness review.

- Formalize process of creating impulse. Consider creating it as part of the workflow based on nt and dt.

- Many jobs were not picked up by the reservation, and as a result reservation nodes were idle. Work harder to make sure reservation is kept busy.

- Forgot to turn on monitord during workflow, so had to deal with statistics after the workflow was done. Since we're running far fewer jobs, it's fine to run monitord population during the workflow.

We set pegasus.monitord.events = true in all properties files.

- In Study 17.3b, 2 of the runs (5765 and 5743) had a problem with their output, which left 'holes' of lower hazard on the 1D map. Looking closely, we discovered that the SGT X component of run 5765 was about 30 GB smaller than it should have been, likely causing issues when the seismograms were synthesized. We no longer had the SGTs from 5743, so we couldn't verify that the same problem happened here. Moving forward, include checks on SGT file size as part of the nan check.

We added a file size check as part of the NaN check.
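A combined size and NaN check of this kind might look like the sketch below. It is an illustration only, not the check used in the study: the function name is hypothetical, the file is assumed to be a flat array of 4-byte floats in native byte order, and the expected size is assumed to be supplied by the caller.

```python
import math
import os
from array import array


def check_sgt_file(path, expected_bytes, chunk_floats=1 << 20):
    """Return a list of problems found in an SGT file; empty means it passed.

    Assumes the file is a flat sequence of native-endian 4-byte floats
    (an assumption made for this sketch).
    """
    problems = []

    # Size check: a truncated file is caught before any data is read.
    actual = os.path.getsize(path)
    if actual != expected_bytes:
        problems.append(
            f"size mismatch: expected {expected_bytes} bytes, found {actual}")

    # NaN check: stream the file in chunks so memory use stays bounded.
    with open(path, "rb") as f:
        while True:
            buf = f.read(chunk_floats * 4)
            if not buf:
                break
            usable = len(buf) - len(buf) % 4  # ignore a trailing partial float
            values = array("f")
            values.frombytes(buf[:usable])
            if any(math.isnan(v) for v in values):
                problems.append("NaN values found")
                break
    return problems
```

Doing the size comparison first means a file that is tens of GB short, as in run 5765, is flagged even if every value it does contain is finite.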

Output Data Products

Below is a list of what data products we intend to compute and what we plan to put in the database.

Computational and Data Estimates

Computational Estimates

In producing the computational estimates, we selected the four N/S/E/W extreme sites in the box which 1) were within the 200 km cutoff for southern SAF events (381 sites) and 2) were outside the cutoff (488 sites). We produced inside and outside averages and scaled these by the number of inside and outside sites.

We also modified the box to be at an angle of 30 degrees counterclockwise of vertical, which makes the boxes about 15% smaller than with the previously used angle of 55 degrees.

We scaled our results based on the Study 17.3 performance of site s975, a site also in Study 18.3, and the Study 15.4 performance of DBCN, which used a very large volume and 100m spacing.

We also assume that the sites with southern San Andreas events will be run with 300 sec SGTs, and the other sites with 200 sec. All seismograms will be 500 sec long.

| | # Grid points | # VMesh gen nodes | Mesh gen runtime | # GPUs | SGT job runtime | Titan SUs | BW node-hrs |
|---|---|---|---|---|---|---|---|
| Inside cutoff, per site | 23.1 billion | 192 | 0.85 hrs | 800 | 2.03 hrs | 102.1k | 3320 |
| Outside cutoff, per site | 10.2 billion | 192 | 0.37 hrs | 800 | 0.60 hrs | 30.8k | 990 |
| Total | | | | | | 53.9M | 1.75M |

For the post-processing, we quantified the amount of work by determining the number of individual rupture points to process (summing, over all ruptures, the number of rupture variations for that rupture times the number of rupture surface points) and multiplying that by the number of timesteps. We then scaled based on performance of s975 from Study 17.3, and DBCN in Study 15.4.
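As a rough illustration of this work measure, the sketch below counts rupture points and scales a reference cost linearly. The function names, the `(num_variations, num_surface_points)` tuple layout, and the linear scaling itself are assumptions made for the example; the rupture numbers are made up.

```python
def rupture_points(ruptures):
    """Sum, over all ruptures, (rupture variations) x (rupture surface points).

    ruptures: iterable of (num_variations, num_surface_points) tuples.
    """
    return sum(n_var * n_pts for n_var, n_pts in ruptures)


def work_units(ruptures, num_timesteps):
    """Total work: rupture points multiplied by the number of timesteps."""
    return rupture_points(ruptures) * num_timesteps


def scaled_cost(work, reference_work, reference_cost):
    """Scale a measured reference cost linearly with the amount of work."""
    return reference_cost * work / reference_work


# Hypothetical site with two ruptures and 2000 timesteps.
ruptures = [(10, 200), (5, 1000)]
work = work_units(ruptures, num_timesteps=2000)
print(rupture_points(ruptures), work)

# Hypothetical reference run: half the work, 100 node-hrs.
print(scaled_cost(work, reference_work=work / 2, reference_cost=100.0))
```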

Below we list the estimates for Blue Waters or Titan.

| | # Points to process | # Nodes (BW) | BW runtime | BW node-hrs | # Nodes (Titan) | Titan runtime | Titan SUs |
|---|---|---|---|---|---|---|---|
| Inside cutoff, per site | 5.96 billion | 120 | 14.0 hrs | 1680 | 240 | 15.5 hrs | 111.3k |
| Outside cutoff, per site | 2.29 billion | 120 | 3.57 hrs | 430 | 240 | 3.95 hrs | 28.5k |
| Total | | | | 850K | | | 56.32M |

Our computational plan is to split the SGT calculations 75% BW/25% Titan, and split the PP 75% BW, 25% Titan. With a 20% margin, this would require 33.1M SUs on Titan, and 2.34M node-hrs on Blue Waters.
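The allocation figures follow from the per-site estimates in the two tables above, the site counts (381 inside the cutoff, 488 outside), the 75%/25% split, and the 20% margin. The sketch below reproduces that arithmetic; the variable and function names are chosen for illustration.

```python
# Site counts and per-site estimates copied from the tables above.
SITES = {"inside": 381, "outside": 488}
SGT_TITAN_SU = {"inside": 102.1e3, "outside": 30.8e3}
SGT_BW_NODE_HRS = {"inside": 3320, "outside": 990}
PP_TITAN_SU = {"inside": 111.3e3, "outside": 28.5e3}
PP_BW_NODE_HRS = {"inside": 1680, "outside": 430}

TITAN_FRACTION = 0.25
BW_FRACTION = 0.75
MARGIN = 1.20  # 20% margin


def study_total(per_site):
    """Scale per-site estimates by the number of inside and outside sites."""
    return sum(per_site[group] * SITES[group] for group in SITES)


# Each total is the cost of running the whole study on that one system.
titan_total = study_total(SGT_TITAN_SU) + study_total(PP_TITAN_SU)
bw_total = study_total(SGT_BW_NODE_HRS) + study_total(PP_BW_NODE_HRS)

titan_needed = MARGIN * TITAN_FRACTION * titan_total
bw_needed = MARGIN * BW_FRACTION * bw_total

print(f"Titan: {titan_needed / 1e6:.1f}M SUs")
print(f"Blue Waters: {bw_needed / 1e6:.2f}M node-hrs")
```

This prints 33.1M SUs for Titan and 2.34M node-hrs for Blue Waters, matching the figures in the plan.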

Currently we have 91.7M SUs available on Titan (expires 12/31/18), and 8.14M node-hrs on Blue Waters (expires 8/31/18). Based on the 2016 PRAC (spread out over 2 years), we budgeted approximately 6.2M node-hours for CyberShake on Blue Waters this year, of which we have used 0.01M.

Data Estimates

SGT size estimates are scaled based on the number of points to process.

| | # Grid points | Velocity mesh | SGTs size | Temp data | Output data |
|---|---|---|---|---|---|
| Inside cutoff, per site | 23.1 billion | 271 GB | 615 GB | 1500 GB | 23.8 GB |
| Outside cutoff, per site | 10.2 billion | 120 GB | 133 GB | 385 GB | 11.6 GB |
| Total | | 158 TB | 292 TB | 742 TB | 14.4 TB |
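The totals row can be reproduced from the per-site sizes and the site counts (381 inside, 488 outside). The sketch below assumes 1024 GB per TB, which is the conversion that matches the table; the names are illustrative.

```python
SITES = {"inside": 381, "outside": 488}

# Per-site sizes in GB, copied from the table above.
PER_SITE_GB = {
    "velocity_mesh": {"inside": 271, "outside": 120},
    "sgts": {"inside": 615, "outside": 133},
    "temp": {"inside": 1500, "outside": 385},
    "output": {"inside": 23.8, "outside": 11.6},
}

GB_PER_TB = 1024  # assumption: binary units


def total_tb(per_site_gb):
    """Scale per-site sizes by site counts and convert GB to TB."""
    total_gb = sum(per_site_gb[group] * SITES[group] for group in SITES)
    return total_gb / GB_PER_TB


totals_tb = {name: total_tb(sizes) for name, sizes in PER_SITE_GB.items()}
for name, tb in totals_tb.items():
    print(f"{name}: {tb:.1f} TB")
```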

If we split the SGTs and the PP 25% Titan, 75% Blue Waters, we will need:

Titan: 185 TB temp files + 3.6 TB output files = 189 TB

Blue Waters: 557 TB temp files + 185 TB Titan SGTs + 10.8 TB output files = 753 TB

SCEC storage: 1 TB workflow logs + 14.4 TB output data files = 15.4 TB (24 TB free)

Database usage: (4 rows PSA [@ 2, 3, 5, 10 sec] + 12 rows RotD [RotD100 and RotD50 @ 2, 3, 4, 5, 7.5, 10 sec] + 4 rows Duration [X and Y acceleration @ 5-75%, 5-95%])/rupture variation x 225K rupture variations/site x 869 sites = 3.9 billion rows x 125 bytes/row = 455 GB (2.0 TB free on moment.usc.edu disk)
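The database estimate can be checked with a few lines of arithmetic. The inputs below come directly from the line above; the 455 GB figure appears to correspond to binary units (bytes divided by 2^30), which is treated here as an assumption.

```python
# Rows written per rupture variation.
PSA_ROWS = 4        # 2, 3, 5, 10 sec
ROTD_ROWS = 12      # RotD100 and RotD50 at 6 periods
DURATION_ROWS = 4   # X and Y acceleration at 5-75% and 5-95%
ROWS_PER_RV = PSA_ROWS + ROTD_ROWS + DURATION_ROWS

RVS_PER_SITE = 225_000
NUM_SITES = 869
BYTES_PER_ROW = 125

total_rows = ROWS_PER_RV * RVS_PER_SITE * NUM_SITES
total_bytes = total_rows * BYTES_PER_ROW
total_gib = total_bytes / 2**30  # assumption: "GB" means 2^30 bytes

print(f"{total_rows / 1e9:.1f} billion rows, {total_gib:.0f} GB")
```

In decimal units (10^9 bytes) the same row count comes to about 489 GB, which still fits within the 2.0 TB free.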

Lessons Learned

- Consider separating SGT and PP workflows in auto-submit tool to better manage the number of each, for improved reservation utilization.

- Create a read-only way to look at the CyberShake Run Manager website.

- Consider reducing levels of the workflow hierarchy, thereby reducing load on shock.

- Establish clear rules and policies about reservation usage.

- Determine advance plan for SGTs for sites which require fewer (200) GPUs.

- Determine advance plan for SGTs for sites which exceed memory on nodes.

- Create new velocity model ID for composite model, capturing metadata.

- Verify all Java processes grab a reasonable amount of memory.

- Clear disk space before study begins to avoid disk contention.

- Add stress test before beginning study, for multiple sites at a time, with cleanup.

- In addition to disk space, check local inode usage.

- If submitting to multiple reservations, make sure enough jobs are eligible to run that no reservation is starved.

- If running primarily SGTs for a while, make sure they don't get deleted due to quota policies.
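For the disk space and inode items in the list above, a pre-study check could be sketched with `os.statvfs` (available on Unix-like systems). The function names and thresholds are hypothetical, and this is not a script used in the study.

```python
import os


def filesystem_headroom(path):
    """Report free space and free inodes for the filesystem holding path."""
    st = os.statvfs(path)
    free_bytes = st.f_bavail * st.f_frsize
    # Some filesystems report 0 total inodes; treat that as "unknown".
    free_inode_fraction = st.f_favail / st.f_files if st.f_files else None
    return {
        "free_bytes": free_bytes,
        "free_inodes": st.f_favail,
        "free_inode_fraction": free_inode_fraction,
    }


def has_headroom(path, min_free_bytes, min_free_inode_fraction):
    """True if both free space and free inodes meet the given thresholds."""
    info = filesystem_headroom(path)
    if info["free_bytes"] < min_free_bytes:
        return False
    frac = info["free_inode_fraction"]
    # If the inode count is unknown, only the space check applies.
    return frac is None or frac >= min_free_inode_fraction


# Hypothetical thresholds: 1 TB free and at least 10% of inodes free.
print(has_headroom(".", min_free_bytes=10**12, min_free_inode_fraction=0.10))
```

Checking inodes separately matters for studies like this one, which wrote millions of small output files: a filesystem can run out of inodes while still reporting free space.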

Performance Metrics

Usage

Just before starting, we grabbed the following usage numbers from the systems:

Blue Waters

The 'usage' command gives 2792733.80 node-hours used out of 9620000.00 by project baln, and 1014 jobs run.

User scottcal used 77705.03 node-hours on project baln.

On restart, usage gives 3091276.56 node-hours used out of 9620000.00 by project baln, and 2265884.05 used by user scottcal.

At end, usage gives 4903299.91 node-hours used out of 9620000.00 by project baln, and 3083951.19 used by user scottcal.

Titan

Project usage: 78,304,261 SUs (of 96,000,000) in 2018, 22,784,073 in August 2018.

callag usage: 4,046,501 SUs in 2018, 985,434 in August 2018.

callag usage at hiatus: 50,158,853 SUs in 2018, 5,418,846 in August 2018, 26,119,171 in September, 15,559,769 in October.

callag usage upon resume: 0 in January 2019.

callag usage upon end of running on Titan (1/31): 27,999,371 SUs

Reservations

We started with one 1252-node XK, one 1316-node XK, two 160-node XE, three 164-node XE, and one 168-node XE reservation at noon PDT on 8/18. Because of a misunderstanding regarding the XE node reservations, jobs weren't matched to them and they terminated after 40 minutes.

We lost the XK reservations around 8 am PDT on 8/20, as they were accidentally killed by Blue Waters staff.

We got new XK reservations (1252 and 1316 nodes) at 11 am PDT on 8/22.

Due to underutilization, both XK reservations and all but 1 of the XE reservations were killed between midnight and 8 am PDT on 8/29.

A new set of XK reservations (1252, 1316) started at 7:30 am PDT on 8/31, 4 new XEs (3x164, 1x160) at 9:15 am on 8/31, and 1 more XE (1x168) at 9:45 am on 8/31. They were killed in the morning of 9/4 due to underutilization.

A new set of reservations (same number and sizes) started at 8 am PDT on 9/14.

Events during Study

Until we got our second round of reservations on 8/22, it was difficult to make headway on Blue Waters. Then it became difficult to keep the XE reservations full, so we switched to SGTs-only on Titan.

We finally were granted 8 jobs ready to run and 5 jobs in bin 5 on Titan, which took effect on 8/29.

Early in the morning of 8/29, some kind of network or disk issue decoupled shock and the remote systems. This resulted in a variety of problems, including not enough jobs in the queue on Blue Waters, Condor no longer submitting jobs to grid resources, and the same job being submitted multiple times to Titan. On 8/30, these issues seemed to have worked themselves out.

Titan was down for 8/30-8/31.

The SCEC disks crashed sometime during the night of 8/30-8/31. The disks were restored around 8:30 am PDT on 8/31, but shock required a reboot. A controller was swapped around 3 pm PDT, causing the disks to crash again. They were returned to service at 4 pm, but with extensive I/O errors. The reservations were killed on the morning of 9/4. The I/O issues were resolved in the afternoon of 9/4.

On 9/5 we determined that a change made to try to fix the symlink issue (which turned x509userproxy back on for Titan jobs) led to submitted jobs that caused trouble for jobs submitted afterward. We reverted the change and will throw out the runs currently in process on Titan.

On 9/14 we had to restart the rvgahp server on Titan.

On 9/14 at 10 am PDT 2 XK and 6 XE reservations were restarted.

On 9/14 around noon the SCEC disks were unmounted due to an unspecified issue at HPC.

We lost 3 XE reservations on the night of 9/14-9/15. 2 of them were resumed at 10 am on 9/19.

All reservations were terminated in the morning of 9/21 due to a full system job on Blue Waters.

On 9/21 we switched to using bundled SGT jobs on Titan, which increased SGT throughput.

2 XK reservations were restarted 9/23 at 8 am. 3 XE reservations were restarted 9/25 at 7 am, and 2 more XE reservations were started 9/27 at 10:15 am.

The rvgahp server on Titan needed to be restarted on 9/27.

2 of the XK reservations were lost around 4 am on 10/1. A disconnect between shock and Blue Waters kept post-processing jobs from clearing the queue, so new SGT jobs weren't queued. The reservations were restored on 10/4 at 8:30 am.

In the afternoon of 10/1, we exceeded 125% allocation usage on Titan, and our jobs were heavily penalized. After working this out with the OLCF staff, we returned to normal priority levels early in the morning of 10/4.

2 XE reservations were removed to clear room for additional reservations on 10/4.

1 XK reservation was removed around 4 pm on 10/5; a second was removed around 5 pm on 10/6.

The rvgahp server on Titan was restarted on 1/24.

On 1/25, we discovered the host certificate on hpc-transfer no longer has the hostname in it, causing our globus-url-copy transfers to fail. We suspended workflows on Blue Waters.

The rvgahp server on Titan was restarted on 1/28.

On 1/29, we modified pegasus-transfer to explicitly add the hpc-transfer subject using -ds to all hpc-transfer transfers, and restarted workflows on Blue Waters.

On 2/1, we stopped production runs on Titan, since our 13th month expired.

On 2/28 at 11 am PST, we resumed 2x1208 XK reservations on Blue Waters.

We lost 1 XK reservation around 11 am PST on 3/1, and the other at 7 am PST on 3/2.

Both reservations were resumed on 3/6 at noon CST. We lost 1 around 4 am on 3/7, due to more than 5 jobs at the top of the queue being for the same reservation, which means the other jobs are blocked. We returned the other reservation on 3/8 at about 9 pm, since all the 1600-node XK jobs had completed.

Application-level Metrics

- Makespan: 3079.3 hrs (DST started during the restart) (does not include hiatus)

  - 1402.6 hrs in 2018

  - 1676.7 hrs in 2019

- Uptime: ? hrs (downtime during BW and HPC system maintenance)

Note: uptime is what's used for calculating the averages for jobs, nodes, etc.

- 869 runs

The table below indicates how many SGT and PP were run on Blue Waters and Titan.

| | BW SGTs | Titan SGTs | Total |
|---|---|---|---|
| BW PP | 444 | 290 | 734 |
| Titan PP | 0 | 135 | 135 |
| Total | 444 | 425 | 869 |

- 8,091 UCERF2 ruptures considered

- 523,490 rupture variations considered

- 202,820,113 seismogram pairs generated

- 30,423,016,950 intensity measures generated (150 per seismogram pair)

- 1625 workflows run

  - 1329 successful

    - 432 integrated

    - 460 SGT

    - 437 PP

  - 296 failed

    - 250 integrated

    - 23 SGT

    - 23 PP

- 18,065 remote jobs submitted (7757 on Titan, 10,308 on Blue Waters)

- 21,220 local jobs submitted

- 6,212,844.1 node-hrs used (119,630,290 core-hrs)

- 7149 node-hrs per site (137,664 core-hrs)

- On average, 5 jobs running, with a max of 48

- Average of 1879 nodes used, with a maximum of 16,219 [1480 BW, 14,739 Titan (79%)] (279,984 cores).

- Total data generated: ?

- ? TB SGTs generated (? GB per single SGT)

- ? TB intermediate data generated (? GB per velocity file, duplicate SGTs)

- 14.98 TB output data (8,684,106 files)
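The makespan and per-site figures in this list can be cross-checked from the timestamps in the Status section and the totals above. The sketch below uses fixed UTC offsets for PDT and PST so that the daylight saving change during the 2019 restart is handled explicitly. The pause time is given only as "about 6:53", so zero seconds is assumed.

```python
from datetime import datetime, timedelta, timezone

PDT = timezone(timedelta(hours=-7), "PDT")
PST = timezone(timedelta(hours=-8), "PST")

# Timestamps from the Status section (pause seconds assumed to be 0).
start_2018 = datetime(2018, 8, 17, 20, 17, 46, tzinfo=PDT)
pause_2018 = datetime(2018, 10, 15, 6, 53, 0, tzinfo=PDT)
resume_2019 = datetime(2019, 1, 17, 11, 21, 26, tzinfo=PST)
end_2019 = datetime(2019, 3, 28, 9, 4, 38, tzinfo=PDT)


def hours_between(begin, end):
    """Elapsed wall-clock hours between two timezone-aware datetimes."""
    return (end - begin).total_seconds() / 3600.0


hours_2018 = hours_between(start_2018, pause_2018)
hours_2019 = hours_between(resume_2019, end_2019)
makespan_hrs = hours_2018 + hours_2019  # hiatus excluded

# Per-site averages from the study totals.
NUM_SITES = 869
node_hrs_per_site = 6_212_844.1 / NUM_SITES
core_hrs_per_site = 119_630_290 / NUM_SITES

print(f"{hours_2018:.1f} + {hours_2019:.1f} = {makespan_hrs:.1f} hrs")
print(f"{node_hrs_per_site:.0f} node-hrs and {core_hrs_per_site:.0f} core-hrs per site")
```

Because the datetimes carry explicit offsets, the subtraction accounts for the one-hour shift between PST and PDT without any manual correction.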

Delay per job (using a 14-day, no-restarts cutoff: 999 workflows, 9697 jobs): mean 10776 sec, median 271 sec, min 0 sec, max 744494 sec, sd 37410 sec.
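Summary statistics of this kind can be computed with the Python standard library. The sketch below is illustrative only: the delay values are made up, the 14-day, no-restarts filtering is not implemented, and whether the reported sd is a sample or population standard deviation is not stated, so the sample form is assumed.

```python
import statistics


def delay_summary(delays_sec):
    """Summarize per-job queue delays given in seconds."""
    return {
        "mean": statistics.mean(delays_sec),
        "median": statistics.median(delays_sec),
        "min": min(delays_sec),
        "max": max(delays_sec),
        "sd": statistics.stdev(delays_sec),  # assumption: sample std dev
    }


# Hypothetical delays: mostly short waits plus one very long one.
print(delay_summary([0, 120, 271, 300, 90000]))
```

A mean far above the median, as in the reported figures, indicates that a small number of jobs with very long waits dominate the average.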