CyberShake Study 21.12

== Status ==

This study is complete.

It began on December 15, 2021 at 8:39:28 am PST and finished on January 13, 2022 at 1:06:24 am PST.

== Data Products ==

In general, the Study 21.12b maps are preferred, except when showing the effect of changing RSQSim frictional parameters.

Hazard maps for Study 21.12 are available [http://opensha.usc.edu/ftp/kmilner/markdown/cybershake-analysis/study_21_12_rsqsim_4983_skip65k_1hz/ here].

Comparisons of Study 21.12 and Study 15.4 are available [http://opensha.usc.edu/ftp/kmilner/markdown/cybershake-analysis/study_21_12_rsqsim_4983_skip65k_1hz/multi_study_hazard_maps/resources/ here].

Results from Study 21.12b are available [http://opensha.usc.edu/ftp/kmilner/markdown/cybershake-analysis/study_21_12_rsqsim_5413/ here].

Comparisons of Study 21.12b and Study 15.4 are available [http://opensha.usc.edu/ftp/kmilner/markdown/cybershake-analysis/study_21_12_rsqsim_5413/multi_study_hazard_maps/resources/ here].

== Science Goals ==

*All events have equal probability, 1/715k

Additional details are available on the [http://opensha.usc.edu/ftp/kmilner/markdown/rsqsim-analysis/catalogs/rundir4983_stitched/#bruce-4983-stitched catalog's metadata page], and the catalog and input fault geometry files can be [https://zenodo.org/record/5542222 downloaded from Zenodo]. This is the catalog used in [https://pubs.geoscienceworld.org/ssa/bssa/article/111/2/898/593757/Toward-Physics-Based-Nonergodic-PSHA-A-Prototype Milner et al., 2021], which used 0.5 Hz CyberShake simulations performed in May 2020.
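Because every event in the catalog has the same probability, a site's hazard curve reduces to counting events. A minimal sketch, assuming that 1/715k is an annual rate per event, that each event contributes one intensity value at the site (one rupture variation per event), and Poisson occurrence when converting a rate into a probability over a time window; the function names and sample values are hypothetical, not CyberShake code:

```python
import math

# Assumption: each catalog event occurs at an annual rate of 1/715,000.
EVENT_RATE = 1.0 / 715_000


def exceedance_rate(site_ims, level, event_rate=EVENT_RATE):
    """Annual rate at which the intensity measure at a site exceeds `level`.

    `site_ims` holds one intensity value per catalog event. With equal event
    rates, the sum over exceeding events reduces to a count times the rate.
    """
    n_exceed = sum(1 for im in site_ims if im > level)
    return n_exceed * event_rate


def poisson_probability(rate, years):
    """Probability of at least one exceedance in `years`, assuming Poisson occurrence."""
    return 1.0 - math.exp(-rate * years)


if __name__ == "__main__":
    ims = [0.05, 0.12, 0.31, 0.08, 0.45]  # hypothetical SA values (g), one per event
    for level in (0.1, 0.2, 0.4):
        rate = exceedance_rate(ims, level)
        print(f"SA > {level} g: {rate:.3e} /yr, P(50 yr) = {poisson_probability(rate, 50):.3e}")
```

Equal weighting is what makes the count-based form valid; a catalog with per-event rates would need a weighted sum instead.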

== Sites ==

*ERF 62: 1 Hz RSQSim production ERF with the same catalog | *ERF 62: 1 Hz RSQSim production ERF with the same catalog | ||

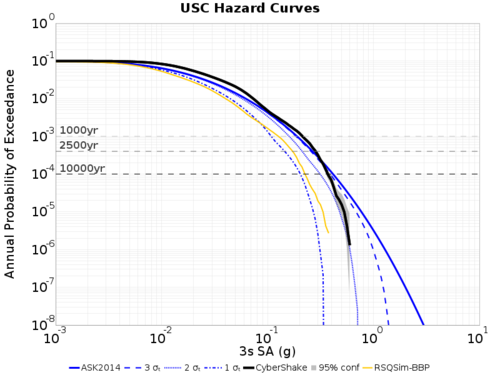

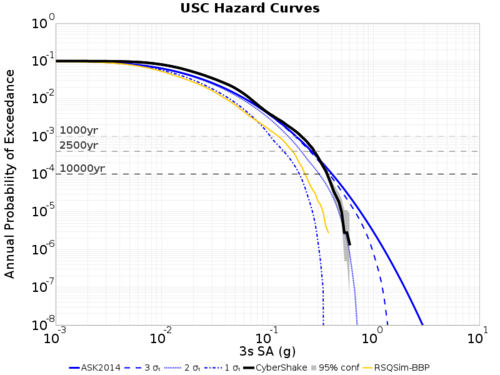

| − | The first test run of ERF 61 used the wrong (older) mesh lower depth of 40 km, which is why the top right plot differs slightly from the top left. The | + | The first test run of ERF 61 used the wrong (older) mesh lower depth of 40 km, which is why the top right plot differs slightly from the top left. The middle left plot agrees perfectly with the top left. |

{| | {| | ||

| Line 82: | Line 94: | ||

| [[File:USC_curves_3s_ERF61.png|thumb|500px]] | | [[File:USC_curves_3s_ERF61.png|thumb|500px]] | ||

| [[File:USC_curves_3s_ERF62_FIRST.png|thumb|500px]] | | [[File:USC_curves_3s_ERF62_FIRST.png|thumb|500px]] | ||

| + | |- | ||

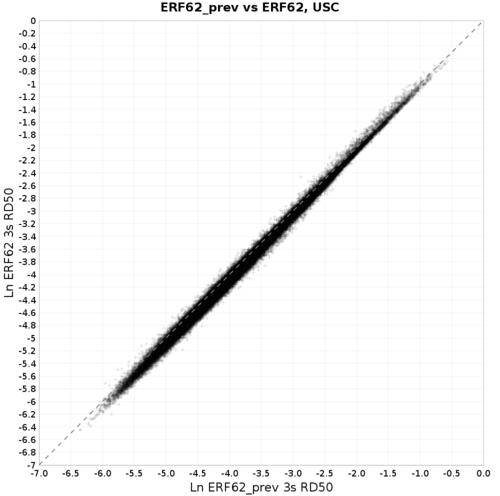

| + | | '''ERF 62 (1 Hz) with proposed (new) simulation parameters''' | ||

| + | | '''ERF 62 ccatter comparing new parameters (y) and old parameters (x)''' | ||

| + | |- | ||

| + | | [[File:USC_curves_3s_ASK2014.png|thumb|500px]] | ||

| + | | [[File:Erf_62prev_62_USC_compare.png|thumb|500px]] | ||

|} | |} | ||

| Line 110: | Line 128: | ||

| [[File:Erf_58_61_USC_compare.png|thumb|500px]] | | [[File:Erf_58_61_USC_compare.png|thumb|500px]] | ||

| [[File:Erf_58_62_USC_compare.png|thumb|500px]] | | [[File:Erf_58_62_USC_compare.png|thumb|500px]] | ||

| + | |} | ||

| + | |||

| + | === 1 Hz vs 0.5 Hz comparisons === | ||

| + | |||

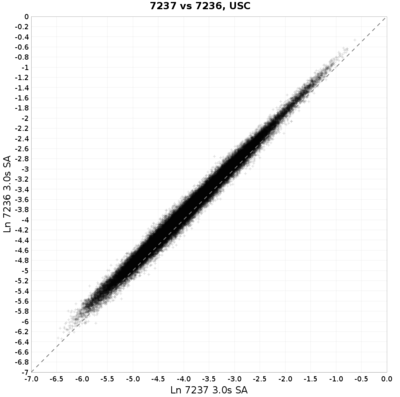

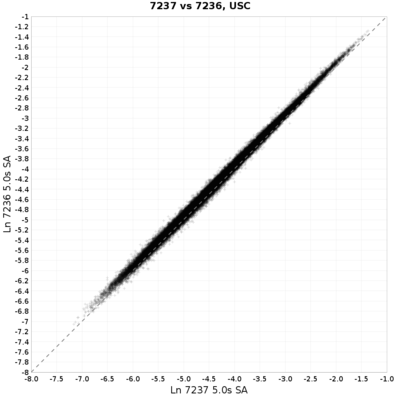

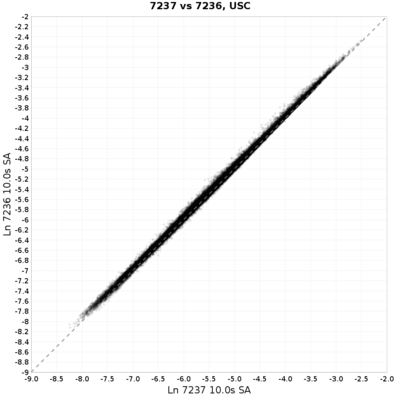

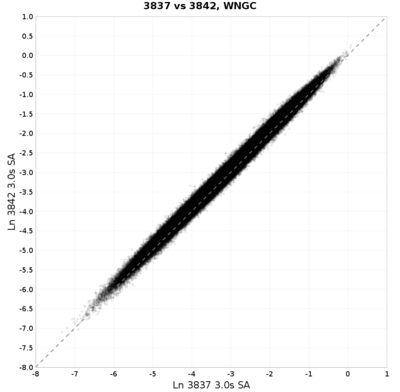

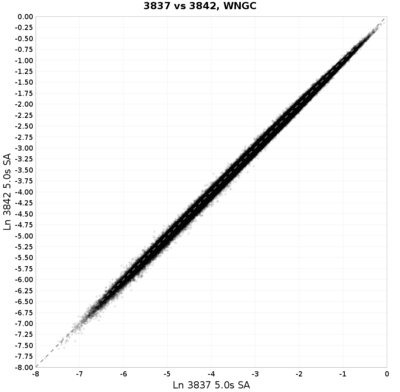

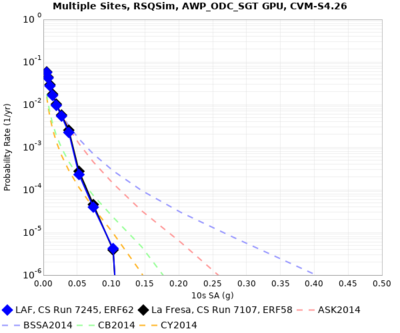

| + | Here is a comparison of 1 Hz runs (y axis) and 0.5 Hz runs (x axis). The top row is a recent USC run with the RSQSim ERF. The bottom row is a previous test with UCERF2-CyberShake and the WNGC site. The columns are 3s, 5s, and 10s SA, respectively, all geometric mean. | ||

| + | |||

| + | {| | ||

| + | | [[File:USC_7236_vs_7237_scatter_3.0s_GEOM_compare.png|thumb|400px]] | ||

| + | | [[File:USC_7236_vs_7237_scatter_5.0s_GEOM_compare.png|thumb|400px]] | ||

| + | | [[File:USC_7236_vs_7237_scatter_10.0s_GEOM_compare.png|thumb|400px]] | ||

| + | |- | ||

| + | | [[File:WNGC_3842_vs_3837_scatter_3.0s_GEOM_compare.png|thumb|400px]] | ||

| + | | [[File:WNGC_3842_vs_3837_scatter_5.0s_GEOM_compare.png|thumb|400px]] | ||

| + | | [[File:WNGC_3842_vs_3837_scatter_10.0s_GEOM_compare.png|thumb|400px]] | ||

|} | |} | ||

*When calculating Qs in the SGT header generation code, a default Qs of 25 was always used. This has been changed to Qs = 0.05 Vs.
*We have turned off the adjustment of mu and lambda.
*FP was increased from 0.5 to 1.0. <b>Note: this change was not picked up correctly by the wrapper, so FP = 0.5 was still used.</b>
*We modified the lambda and mu calculations in AWP to use the original media parameter values from the velocity mesh, rather than the FP-modified ones, when calculating strains, to be consistent with RWG.
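The Qs change can be illustrated with a minimal sketch. It assumes Vs is given in m/s, so the old fixed default of 25 corresponds to Vs = 500 m/s, this study's minimum Vs; the function names are hypothetical and not taken from the SGT header generation code.

```python
OLD_DEFAULT_QS = 25.0  # fixed value used before this study


def qs_old(vs_m_per_s: float) -> float:
    """Previous behavior: the same Qs at every grid point, regardless of Vs."""
    return OLD_DEFAULT_QS


def qs_new(vs_m_per_s: float) -> float:
    """Updated behavior: Qs = 0.05 * Vs, with Vs assumed to be in m/s."""
    return 0.05 * vs_m_per_s


if __name__ == "__main__":
    for vs in (500.0, 1000.0, 3500.0):
        print(f"Vs = {vs:6.0f} m/s: old Qs = {qs_old(vs):5.1f}, new Qs = {qs_new(vs):6.1f}")
```

Under this assumption the two rules agree at the 500 m/s floor, so the change raises Qs (less attenuation) everywhere Vs is higher.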

<ul>
<li>Seismograms: 2-component seismograms, 8000 timesteps (400 sec) each</li>
<li>PSA: X and Y spectral acceleration at 44 periods (10, 9.5, 9, 8.5, 8, 7.5, 7, 6.5, 6, 5.5, 5, 4.8, 4.6, 4.4, 4.2, 4, 3.8, 3.6, 3.4, 3.2, 3, 2.8, 2.6, 2.4, 2.2, 2, 1.66667, 1.42857, 1.25, 1.11111, 1, .66667, .5, .4, .33333, .285714, .25, .22222, .2, .16667, .142857, .125, .11111, .1 sec)</li>
<li>RotD: PGV, plus RotD50, the RotD50 azimuth, and RotD100 at 22 periods (1.0, 1.2, 1.4, 1.5, 1.6, 1.8, 2.0, 2.2, 2.4, 2.6, 2.8, 3.0, 3.5, 4.0, 4.4, 5.0, 5.5, 6.0, 6.5, 7.5, 8.5, 10.0 sec)</li>
<li>Durations: for the X and Y components, energy integral, Arias intensity, cumulative absolute velocity (CAV), and, for both velocity and acceleration, 5-75%, 5-95%, and 20-80% durations.</li>
</ul>
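Arias intensity, CAV, and percentage-based durations are standard ground-motion measures. The sketch below shows one common way to compute them from a uniformly sampled acceleration trace, with the percentage durations measured on the cumulative integral of a² (the normalized Arias intensity). It is not the CyberShake implementation: it uses simple rectangle-rule integration and assumes acceleration in m/s² with the 0.05 s time step implied by 8000 timesteps over 400 sec. Applying `significant_duration` to a velocity trace for the velocity-based durations is also an assumption.

```python
import math

G = 9.80665  # standard gravity, m/s^2


def arias_intensity(acc, dt):
    """Arias intensity (m/s): pi / (2g) times the integral of a(t)^2 dt."""
    return math.pi / (2.0 * G) * sum(a * a for a in acc) * dt


def cav(acc, dt):
    """Cumulative absolute velocity (m/s): the integral of |a(t)| dt."""
    return sum(abs(a) for a in acc) * dt


def significant_duration(acc, dt, lo=0.05, hi=0.95):
    """Time (s) between reaching fractions `lo` and `hi` of the cumulative a^2 integral.

    Use lo=0.05, hi=0.75 for 5-75%, or lo=0.20, hi=0.80 for 20-80%.
    """
    total = sum(a * a for a in acc)
    if total == 0.0:
        return 0.0
    running = 0.0
    t_lo = None
    for i, a in enumerate(acc):
        running += a * a
        frac = running / total
        if t_lo is None and frac >= lo:
            t_lo = i * dt
        if frac >= hi:
            return i * dt - t_lo
    return (len(acc) - 1) * dt - t_lo


if __name__ == "__main__":
    dt = 0.05  # 400 s / 8000 timesteps
    # Hypothetical trace: 1 s of quiet, 2 s of uniform 1 m/s^2 shaking, 1 s of quiet.
    acc = [0.0] * 20 + [1.0] * 40 + [0.0] * 20
    print(f"Arias = {arias_intensity(acc, dt):.4f} m/s")
    print(f"CAV = {cav(acc, dt):.2f} m/s")
    print(f"D5-95 = {significant_duration(acc, dt):.2f} s")
```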

<ul>
<li>PSA: none</li>
<li>RotD: RotD50 and RotD100 at 10, 7.5, 5, 4, 3, and 2 sec, and PGV.</li>
<li>Durations: acceleration 5-75% and 5-95% for X and Y components</li>
</ul>

The PeakAmplitudes table uses approximately 100 bytes per entry.
100 bytes/entry * 17 entries/event * 76786 events/site * 335 sites ≈ 43.7 billion bytes, or about 41 GiB. The drive on moment that holds the MySQL database has 919 GB free.
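The storage estimate can be reproduced with a short calculation. The count of 17 entries per event is consistent with the database-inserted IMs listed above if PGV is treated as a single entry (6 periods × RotD50/RotD100, plus PGV, plus two acceleration durations × two components); that breakdown is an assumption. The result is about 43.7 billion bytes, roughly 41 GiB.

```python
# Database IMs per event, as listed for insertion (PGV assumed to be one entry).
ROTD_PERIODS = [10, 7.5, 5, 4, 3, 2]
entries_per_event = (
    len(ROTD_PERIODS) * 2   # RotD50 and RotD100
    + 1                     # PGV (assumption: a single entry)
    + 2 * 2                 # acceleration 5-75% and 5-95%, X and Y components
)

BYTES_PER_ENTRY = 100
EVENTS_PER_SITE = 76_786
SITES = 335

total_bytes = BYTES_PER_ENTRY * entries_per_event * EVENTS_PER_SITE * SITES
print(entries_per_event)                        # 17
print(f"{total_bytes / 1e9:.1f} GB (decimal)")  # 43.7 GB (decimal)
print(f"{total_bytes / 2**30:.1f} GiB")         # 40.7 GiB
```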

== Lessons Learned ==

== Stress Test ==

The stress test began on 12/10/21 at 23:05 PST. We selected 21 sites for running both SGT and PP.

The stress test concluded on 12/12/21 at 19:41 PST.

=== Issues Discovered ===

*<i>Workflows aren't being recognized as running by the Run Manager and are prematurely moved into an error state.</i>
Fixed the extraction of the Condor ID from the run.
*<i>Output SGTs are being registered in a local RC rather than a global one, so the PP workflow can't find them.</i>
Modified parameters in the properties file.
*<i>Memory requirements for planning the PP Synth workflow are high, so if too many run at once, the Java process aborts.</i>
Set JAVA_HEAPMAX to 10240.
*<i>Too many Globus emails.</i>
Automatically moved them to a different folder.
*<i>Wrong ERF file used in curve calculations.</i>
Fixed the ERF file used in the DAX generator.

=== Comparison Curves ===

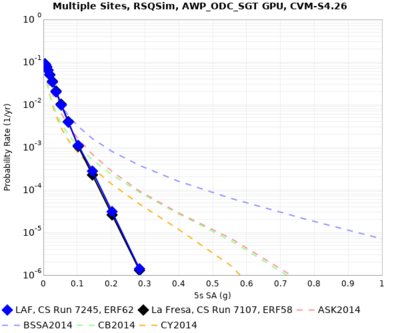

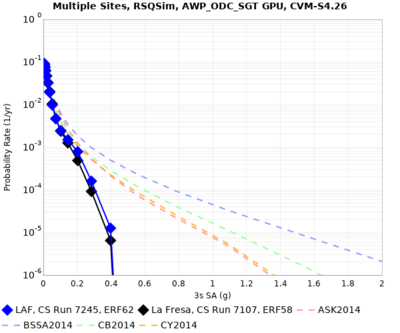

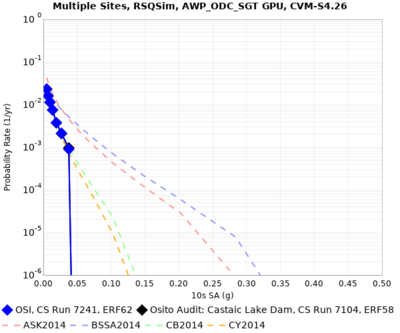

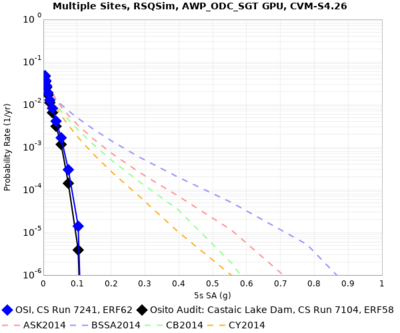

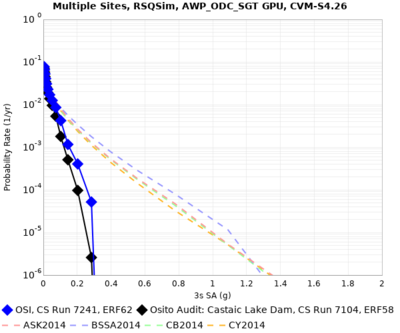

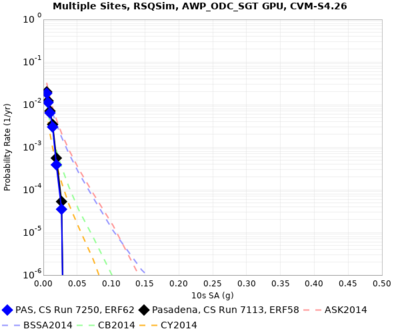

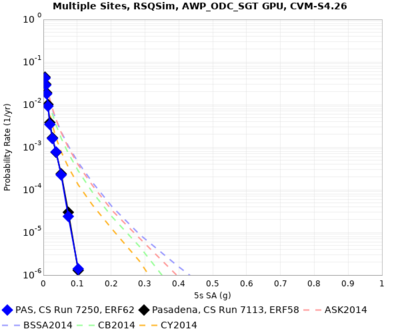

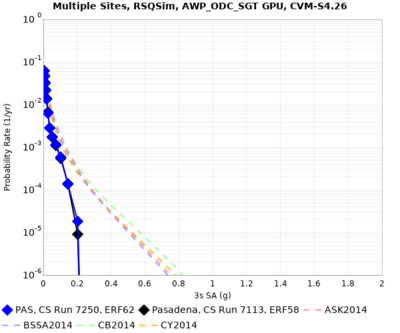

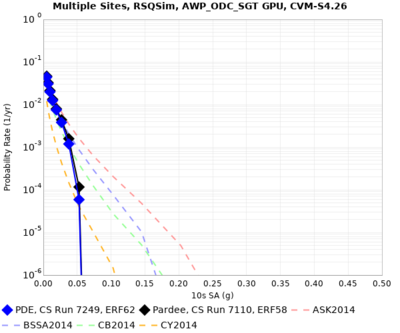

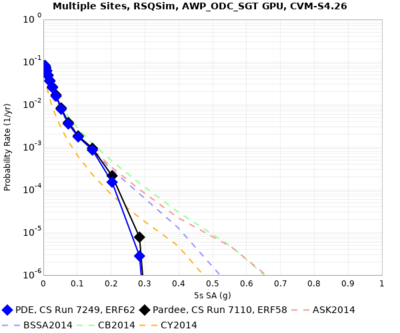

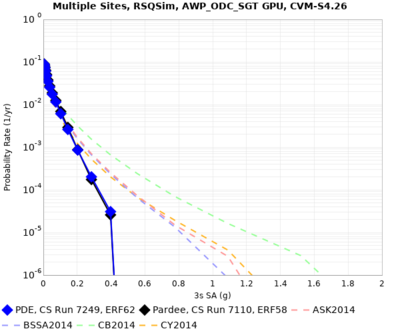

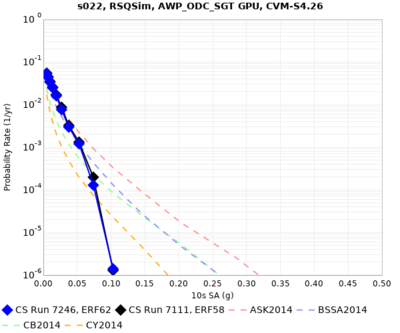

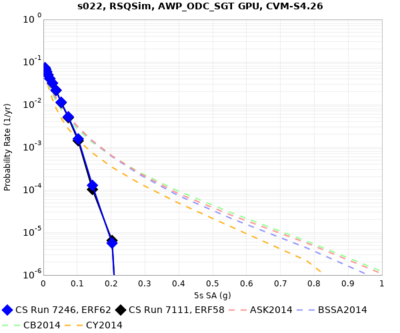

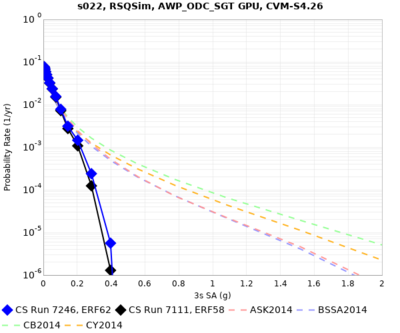

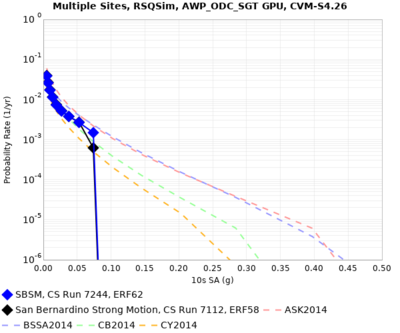

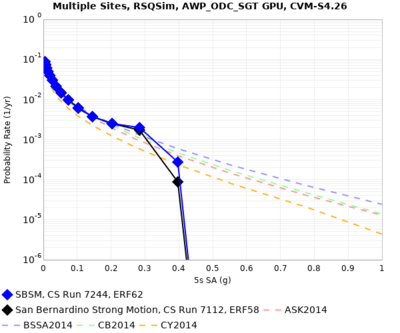

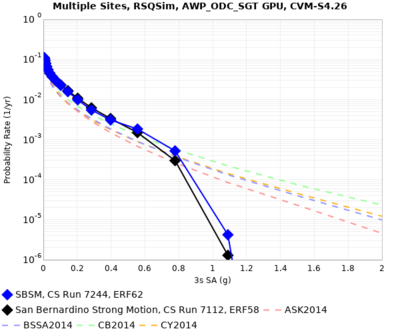

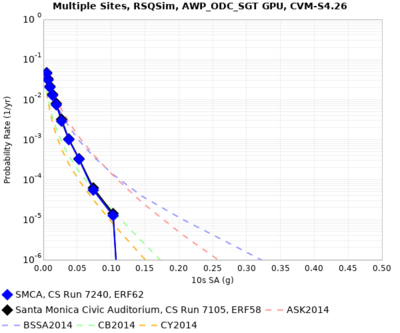

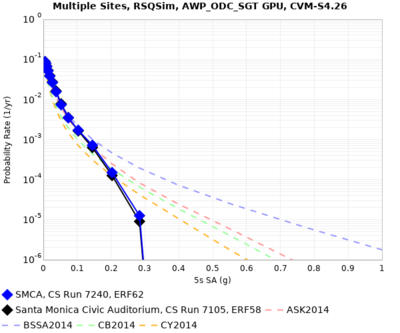

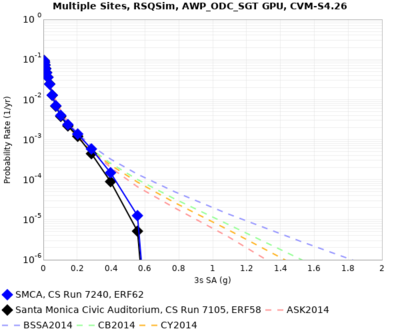

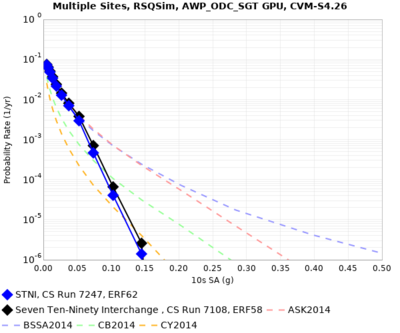

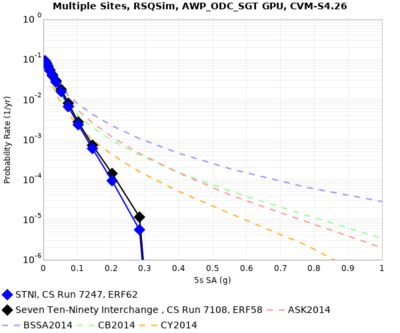

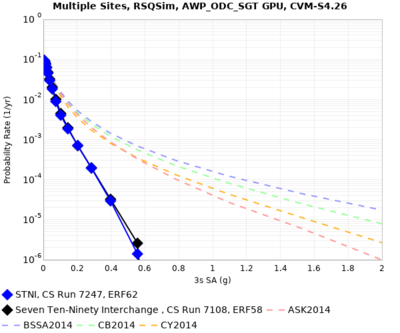

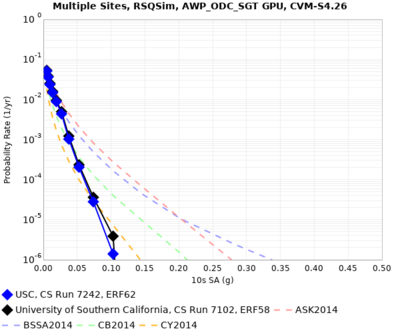

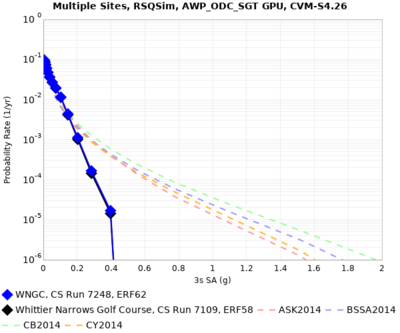

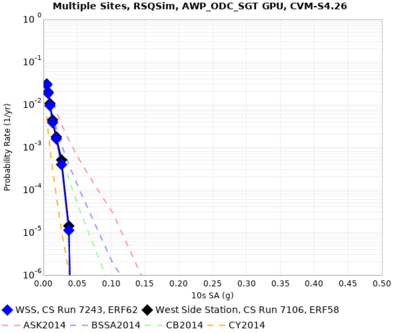

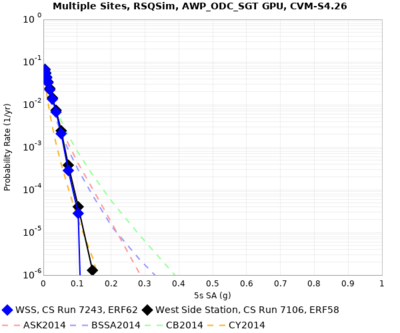

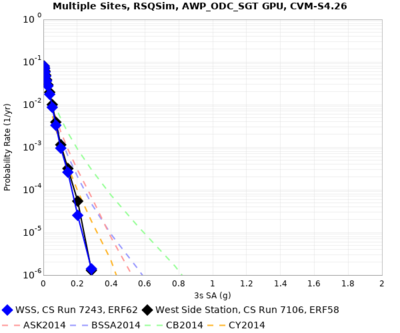

Below are comparison curves between ERF 62 and ERF 58.

{|
! Site !! 10 sec !! 5 sec !! 3 sec
|-
| LAF || [[File:LAF_e62_v_e58_10sec_RotD50.png|thumb|400px]] || [[File:LAF_e62_v_e58_5sec_RotD50.png|thumb|400px]] || [[File:LAF_e62_v_e58_3sec_RotD50.png|thumb|400px]]
|-
| OSI || [[File:OSI_e62_v_e58_10sec_RotD50.png|thumb|400px]] || [[File:OSI_e62_v_e58_5sec_RotD50.png|thumb|400px]] || [[File:OSI_e62_v_e58_3sec_RotD50.png|thumb|400px]]
|-
| PAS || [[File:PAS_e62_v_e58_10sec_RotD50.png|thumb|400px]] || [[File:PAS_e62_v_e58_5sec_RotD50.png|thumb|400px]] || [[File:PAS_e62_v_e58_3sec_RotD50.png|thumb|400px]]
|-
| PDE || [[File:PDE_e62_v_e58_10sec_RotD50.png|thumb|400px]] || [[File:PDE_e62_v_e58_5sec_RotD50.png|thumb|400px]] || [[File:PDE_e62_v_e58_3sec_RotD50.png|thumb|400px]]
|-
| s022 || [[File:s022_e62_v_e58_10sec_RotD50.png|thumb|400px]] || [[File:s022_e62_v_e58_5sec_RotD50.png|thumb|400px]] || [[File:s022_e62_v_e58_3sec_RotD50.png|thumb|400px]]
|-
| SBSM || [[File:SBSM_e62_v_e58_10sec_RotD50.png|thumb|400px]] || [[File:SBSM_e62_v_e58_5sec_RotD50.png|thumb|400px]] || [[File:SBSM_e62_v_e58_3sec_RotD50.png|thumb|400px]]
|-
| SMCA || [[File:SMCA_e62_v_e58_10sec_RotD50.png|thumb|400px]] || [[File:SMCA_e62_v_e58_5sec_RotD50.png|thumb|400px]] || [[File:SMCA_e62_v_e58_3sec_RotD50.png|thumb|400px]]
|-
| STNI || [[File:STNI_e62_v_e58_10sec_RotD50.png|thumb|400px]] || [[File:STNI_e62_v_e58_5sec_RotD50.png|thumb|400px]] || [[File:STNI_e62_v_e58_3sec_RotD50.png|thumb|400px]]
|-
| USC || [[File:USC_e62_v_e58_10sec_RotD50.png|thumb|400px]] || [[File:USC_e62_v_e58_5sec_RotD50.png|thumb|400px]] || [[File:USC_e62_v_e58_3sec_RotD50.png|thumb|400px]]
|-
| WNGC || [[File:WNGC_e62_v_e58_10sec_RotD50.png|thumb|400px]] || [[File:WNGC_e62_v_e58_5sec_RotD50.png|thumb|400px]] || [[File:WNGC_e62_v_e58_3sec_RotD50.png|thumb|400px]]
|-
| WSS || [[File:WSS_e62_v_e58_10sec_RotD50.png|thumb|400px]] || [[File:WSS_e62_v_e58_5sec_RotD50.png|thumb|400px]] || [[File:WSS_e62_v_e58_3sec_RotD50.png|thumb|400px]]
|}

== Events During Study ==

On 12/18, we discovered that some SGT jobs weren't finishing within 60 minutes, so we increased their runtime limit to 90 minutes.

On 12/19, we reduced the runtime limit of the PostAWP job from 2 hours to 20 minutes, and of the NaN_Check job from 1 hour to 15 minutes.

The dtn36 node was restarted on 12/31 at 00:30 EST, and all workflow jobs were moved into a held state. This was not discovered until 1/1 at 22:50 EST, at which point the rvgahp daemon was restarted and the jobs were released.

The dtn36 node was restarted again on 1/2 at 23:05 EST, so the rvgahp daemon was restarted on 1/3 at 22:05.

== Performance Metrics ==

The production runs began on 12/15/21 at 8:39:28 am PST and finished on 1/13/22 at 1:06:24 am PST, for a duration of 688.4 hrs.
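The makespan follows directly from the start and end timestamps. A minimal sketch using Python's `datetime`, treating both times as naive PST timestamps (no daylight-saving transition falls in this window):

```python
from datetime import datetime

# Both timestamps are in PST, so no time zone conversion is needed.
start = datetime(2021, 12, 15, 8, 39, 28)
end = datetime(2022, 1, 13, 1, 6, 24)

makespan_hrs = (end - start).total_seconds() / 3600
print(f"{makespan_hrs:.1f} hrs")  # 688.4 hrs
```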

=== Usage ===

Before starting the stress test, the usage on Summit was 237,103 node-hours for GEO112 and 8127 node-hours for user callag.

After the stress test, the usage on Summit was 240,817 node-hours for GEO112 and 11055 node-hours for user callag. Based on user callag's usage, that's 139 node-hours per site (2928 node-hours over 21 sites).
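The per-site figure comes from user callag's usage. A short sketch of the arithmetic, using the readings above and the 21 stress-test sites; the dictionary layout is just for illustration:

```python
STRESS_TEST_SITES = 21

# Summit usage readings (node-hours) before and after the stress test.
before = {"GEO112": 237_103, "callag": 8_127}
after = {"GEO112": 240_817, "callag": 11_055}

for account in before:
    used = after[account] - before[account]
    print(f"{account}: {used} node-hours, {used / STRESS_TEST_SITES:.0f} per site")
```

The project-wide GEO112 difference works out to about 177 node-hours per site, so it may include usage beyond these stress-test runs; the 139 figure uses only callag's usage.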

Before starting the production runs, the usage on Summit was 244,307 node-hours for GEO112 and 11062.9 node-hours for user callag.

After the production runs, the usage on Summit for user callag was 76532.8 node-hours, for a total production usage of 65469.9 node-hours.

=== Application-level Metrics ===

* Makespan: 688.4 hrs
* Uptime: 631.1 hrs (the rvgahp daemon was down due to dtn36 node restarts)

Note: uptime is what's used for calculating the averages for jobs, nodes, etc.

* 313 runs in production + 22 in testing
* 116,800 unique rupture variations considered (335 sites)
* 25,723,289 seismogram pairs generated (335 sites)
* 3,884,216,639 intensity measures generated (151 per seismogram pair) (335 sites)
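The seismogram and intensity-measure counts are mutually consistent: dividing the seismogram pairs by the 335 sites gives about 76,786 pairs per site, the events/site figure used in the database size estimate, and multiplying by 151 IMs per pair reproduces the total IM count.

```python
SITES = 335
seismogram_pairs = 25_723_289
ims_per_pair = 151

print(f"{seismogram_pairs / SITES:,.0f} seismogram pairs per site")  # 76,786 seismogram pairs per site
print(f"{seismogram_pairs * ims_per_pair:,} intensity measures")    # 3,884,216,639 intensity measures
```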

* 16,415 jobs executed
** Per run: 11 directory create, 13 local, 9 staging, 2 register, 14 remote
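The total job count matches the per-run breakdown applied to all 335 runs (313 production plus 22 testing):

```python
jobs_per_run = {
    "directory create": 11,
    "local": 13,
    "staging": 9,
    "register": 2,
    "remote": 14,
}
runs = 313 + 22  # production + testing

per_run = sum(jobs_per_run.values())
print(per_run, per_run * runs)  # 49 16415
```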

Note: we're including core-hour counts for comparison with previous studies, but since most of the work was done on GPUs, they aren't very meaningful.
* 65469.9 node-hrs used (2749735.8 core-hrs)
* 209.2 node-hrs per site (8785.1 core-hrs). This is 80% more than our original estimate, and 50% more than the stress test showed.
* On average, 2.5 jobs running, with a max of 36
* Average of 102 nodes used, with a maximum of 2128 (89376 cores/12768 GPUs, 46.2% of Summit)
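The node-hour and core-hour figures can be reproduced from the callag usage readings. This sketch divides by the 313 production sites and assumes Summit's configuration of 4,608 nodes with 42 usable CPU cores and 6 GPUs per node; the 42-core value matches the 89376 cores / 2128 nodes ratio above, and 6 GPUs per node gives 12,768 GPUs at the 2128-node peak.

```python
# Summit hardware constants (assumed from OLCF's published Summit configuration).
CORES_PER_NODE = 42   # usable CPU cores per node
GPUS_PER_NODE = 6     # NVIDIA V100 GPUs per node
SUMMIT_NODES = 4608   # compute nodes in the system

PRODUCTION_SITES = 313
callag_before = 11_062.9   # node-hours, before production runs
callag_after = 76_532.8    # node-hours, after production runs

node_hrs = callag_after - callag_before
per_site = node_hrs / PRODUCTION_SITES
print(f"total: {node_hrs:.1f} node-hrs ({node_hrs * CORES_PER_NODE:.1f} core-hrs)")
print(f"per site: {per_site:.1f} node-hrs ({per_site * CORES_PER_NODE:.1f} core-hrs)")

peak_nodes = 2128
print(f"peak: {peak_nodes * CORES_PER_NODE} cores, {peak_nodes * GPUS_PER_NODE} GPUs, "
      f"{100 * peak_nodes / SUMMIT_NODES:.1f}% of Summit")
```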

* 670 workflows run
** ? successful
*** ? SGT
*** ? PP
** ? failed
*** ? SGT
*** ? PP

* Total data generated: ?
** 72 TB SGTs generated (220 GB per single SGT)
** 165 TB intermediate data generated
** 1.9 TB output data (102,893,156 files)

Delay per job (using a 7-day, no-restarts cutoff: ? workflows, ? jobs) was mean: ? sec, median: ?, min: ?, max: ?, sd: ?

{| border=1
! Bins (sec)
| 0 || || 60 || || 120 || || 180 || || 240 || || 300 || || 600 || || 900 || || 1800 || || 3600 || || 7200 || || 14400 || || 43200 || || 86400 || || 172800 || || 259200 || || 604800
|-
! Jobs per bin
| || ? || || ? || || ? || || ? || || ? || || ? || || ? || || ? || || ? || || ? || || ? || || ? || || ? || || ? || || ? || || ?
|}
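The bins appear to be half-open intervals between consecutive edges, with the 7-day (604,800 s) cutoff as the upper bound; that reading of the table is an assumption. A minimal sketch of filling the "Jobs per bin" row from a list of per-job delays, where the delay values are hypothetical:

```python
from bisect import bisect_right

# Bin edges (sec) from the "Bins" row; the last edge is the 7-day cutoff.
EDGES = [0, 60, 120, 180, 240, 300, 600, 900, 1800, 3600, 7200,
         14400, 43200, 86400, 172800, 259200, 604800]


def bin_delays(delays):
    """Count per-job delays into the bins between consecutive edges.

    Bin i covers [EDGES[i], EDGES[i + 1]); delays outside [0, 604800) are
    skipped, mirroring the 7-day cutoff.
    """
    counts = [0] * (len(EDGES) - 1)
    for d in delays:
        if EDGES[0] <= d < EDGES[-1]:
            counts[bisect_right(EDGES, d) - 1] += 1
    return counts


if __name__ == "__main__":
    sample_delays = [5, 59, 60, 250, 4000, 700000]  # hypothetical delays (sec)
    for lo, hi, n in zip(EDGES, EDGES[1:], bin_delays(sample_delays)):
        print(f"[{lo}, {hi}): {n}")
```

`bisect_right` places a delay equal to an edge in the bin that starts at that edge, which matches the half-open interpretation.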

* Application parallel node speedup (node-hours divided by makespan) was ?x. (Divided by uptime: ?x)
* Application parallel workflow speedup (number of workflows times average workflow makespan divided by application makespan) was ?x. (Divided by uptime: ?x)

=== Workflow-level Metrics ===

* The average makespan of a workflow (7-day cutoff, workflows with retries ignored, so ?/? workflows considered) was ? sec (? hrs), median: ? (? hrs), min: ?, max: ?, sd: ?
** For SGT workflows (736 workflows), mean: ? sec (? hrs), median: ? (? hrs), min: ?, max: ?, sd: ?
** For PP workflows (735 workflows), mean: ? (? hrs), median: ? (? hrs), min: ?, max: ?, sd: ?
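The workflow-level statistics apply two filters before summarizing: the 7-day cutoff and exclusion of workflows with retries. A minimal sketch of that filtering and summary, assuming each workflow is represented as a hypothetical (makespan in seconds, had_retries) pair and that "sd" means the sample standard deviation:

```python
import statistics

CUTOFF_SEC = 7 * 24 * 3600  # 7-day cutoff


def makespan_summary(workflows):
    """Summarize workflow makespans after the cutoff and no-retries filters.

    `workflows` is a list of (makespan_sec, had_retries) pairs, a hypothetical
    input format. Workflows with retries or longer than the cutoff are dropped.
    """
    kept = [m for m, retried in workflows if not retried and m <= CUTOFF_SEC]
    if not kept:
        raise ValueError("no workflows left after filtering")
    mean_sec = statistics.mean(kept)
    median_sec = statistics.median(kept)
    return {
        "count": len(kept),
        "mean_sec": mean_sec,
        "mean_hrs": mean_sec / 3600,
        "median_sec": median_sec,
        "median_hrs": median_sec / 3600,
        "min": min(kept),
        "max": max(kept),
        "sd": statistics.stdev(kept) if len(kept) > 1 else 0.0,  # sample SD
    }


if __name__ == "__main__":
    sample = [(3600, False), (7200, False), (10800, True), (700000, False), (5400, False)]
    print(makespan_summary(sample))
```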

* Workflow parallel core speedup (? workflows) was mean: ?, median: ?, min: ?, max: ?, sd: ?
* Workflow parallel node speedup (? workflows) was mean: ?, median: ?, min: ?, max: ?, sd: ?

<!--
=== Job-level Metrics ===

We acquired job-level metrics from two sources: pegasus-statistics, and parsing the workflow logs with a custom script, each of which provides slightly different info, so we include both here. The pegasus-statistics include failed jobs and failed workflows; the parser omits workflows which failed or took longer than the 7-day cutoff time.

Pegasus-statistics results are below. The Min/Max/Mean/Total columns refer to the product of the walltime and the pegasus_cores value. Note that this is not directly comparable to the number of SUs burned, since pegasus_cores does not map directly onto how many nodes were used.

Summary:

{| border="1"
! Type !! Succeeded !! Failed !! Incomplete !! Total !! Retries !! Total+Retries !! Failed %
|-
! Tasks
| 14723 || 0 || 750 || 15473 || 477 || 15200 || 0%
|-
! Jobs
| 12259 || 29 || 18105 || 30393 || 14935 || 27223 || 0.2%
|-
! Sub-Workflows
| 1005 || 10 || 664 || 1679 || 1458 || 2473 || 1%
|}

Breakdown. For some reason, pegasus-statistics only multiplied the numbers by pegasus_cores for some of the jobs (the jobs in integrated workflows), so we had to modify some of the numbers by hand.

{| border="1"
! Transformation !! Count !! Succeeded !! Failed !! Min !! Max !! Mean !! Total !! Total time (sec) !! % of overall time !! % of node-hrs
|-
| condor::dagman || 3059 || 1021 || 2038 || 30.0 || 349858.0 || 10215.932 || 31250537.0
|-
| dagman::post || 43874 || 27244 || 16630 || 0.0 || 9216.0 || 43.668 || 1915908.0
|-
| dagman::pre || 3132 || 3081 || 51 || 1.0 || 28.0 || 5.079 || 15906.0
|-
| pegasus::cleanup || 18416 || 2627 || 15789 || 0.0 || 12143.003 || 172.956 || 3185154.125
|-
| pegasus::dirmanager || 5597 || 5336 || 261 || 2.155 || 1158.816 || 13.294 || 74405.152
|-
| pegasus::rc-client || 1010 || 1010 || 0 || 1.215 || 2158.446 || 238.223 || 240605.526
|-
| pegasus::transfer || 3524 || 3422 || 102 || 0.0 || 170119.463 || 815.476 || 2873736.586
|-
! Supporting Jobs !! !! !! !! !! !! !!
|-
| scec::AWP_GPU:1.0 || 1690 || 1607 || 83 || 0.0 || 953600.0 || 82880.188 || 140067518.097
|-
| scec::AWP_NaN_Check:1.0 || 1616 || 1604 || 12 || 0.0 || 38781.0 || 734.095 || 1186297.189
|-
| scec::CheckSgt:1.0 || 439 || 438 || 1 || 765.915 || 4078.0 || 1475.492 || 647740.898
|-
| scec::Check_DB_Site:1.0 || 437 || 401 || 36 || 0.319 || 18.101 || 1.509 || 659.587
|-
| scec::Curve_Calc:1.0 || 516 || 441 || 75 || 5.652 || 213.02 || 61.387 || 31675.755
|-
| scec::CyberShakeNotify:1.0 || 147 || 147 || 0 || 0.084 || 2.871 || 0.172 || 25.316
|-
| scec::DB_Report:1.0 || 147 || 147 || 0 || 22.688 || 64.503 || 33.349 || 4902.333
|-
| scec::DirectSynth:1.0 || 227 || 218 || 9 || 0.0 || 30760.296 || 19847.048 || 4505279.89
|-
| scec::Disaggregate:1.0 || 147 || 147 || 0 || 24.781 || 92.014 || 31.597 || 4644.807
|-
| scec::GenSGTDax:1.0 || 804 || 803 || 1 || 2.656 || 7.909 || 3.141 || 2525.575
|-
| scec::Handoff:1.0 || 660 || 657 || 3 || 0.06 || 1617.433 || 3.659 || 2414.951
|-
| scec::Load_Amps:1.0 || 564 || 408 || 156 || 47.135 || 1854.35 || 687.071 || 387508.218
|-
| scec::PostAWP:1.0 || 1619 || 1604 || 15 || 0.0 || 38836.0 || 3833.309 || 6206127.486
|-
| scec::PreAWP_GPU:1.0 || 808 || 802 || 6 || 0.0 || 4314.0 || 298.634 || 241296.569
|-
| scec::PreCVM:1.0 || 833 || 803 || 30 || 0.0 || 274.18 || 95.378 || 79450.199
|-
| scec::PreSGT:1.0 || 811 || 803 || 8 || 0.0 || 19744.0 || 1418.928 || 1150750.713
|-
| scec::SetJobID:1.0 || 145 || 145 || 0 || 0.078 || 2.362 || 0.187 || 27.087
|-
| scec::SetPPHost:1.0 || 144 || 144 || 0 || 0.068 || 2.075 || 0.166 || 23.846
|-
| scec::Smooth:1.0 || 827 || 802 || 25 || 0.0 || 287104.0 || 13608.192 || 11253974.731
|-
| scec::UCVMMesh:1.0 || 814 || 802 || 12 || 0.0 || 3065260.032 || 170091.123 || 138454174.009
|-
| scec::UpdateRun:1.0 || 2005 || 2003 || 2 || 0.062 || 51.149 || 0.223 || 447.354
|}

The parsing results are below. We have DirectSynth cumulative, and also broken out by system. We used a 7-day cutoff, for a total of 44,908 jobs.

Job attempts, over 44908 jobs: Mean: 1.388038, median: 1.000000, min: 1.000000, max: 30.000000, sd: 1.713763

Remote job attempts, over 25973 remote jobs: Mean: 1.648789, median: 1.000000, min: 1.000000, max: 30.000000, sd: 2.189993

* AWP_GPU (1771 jobs), 200 nodes:
Runtime: mean 1923.634105, median: 1754.000000, min: 1385.000000, max: 4512.000000, sd: 450.148598
Attempts: mean 1.122530, median: 1.000000, min: 1.000000, max: 28.000000, sd: 1.121227
* AWP_NaN (1771 jobs):
Runtime: mean 722.352908, median: 671.000000, min: 2.000000, max: 1819.000000, sd: 175.328731
Attempts: mean 1.053642, median: 1.000000, min: 1.000000, max: 18.000000, sd: 0.735448
* Check_DB (1752 jobs):
Runtime: mean 1.063356, median: 1.000000, min: 0.000000, max: 20.000000, sd: 1.664148
Attempts: mean 1.021689, median: 1.000000, min: 1.000000, max: 10.000000, sd: 0.430816
* CheckSgt_CheckSgt (1754 jobs):
Runtime: mean 1607.771380, median: 1512.500000, min: 765.000000, max: 7363.000000, sd: 532.358322
Attempts: mean 1.017104, median: 1.000000, min: 1.000000, max: 3.000000, sd: 0.161038
* Curve_Calc (2628 jobs):
Runtime: mean 73.477549, median: 66.000000, min: 40.000000, max: 400.000000, sd: 31.115240
Attempts: mean 1.074201, median: 1.000000, min: 1.000000, max: 10.000000, sd: 0.388442
* CyberShakeNotify_CS (876 jobs):
Runtime: mean 0.097032, median: 0.000000, min: 0.000000, max: 8.000000, sd: 0.595601
Attempts: mean 1.001142, median: 1.000000, min: 1.000000, max: 2.000000, sd: 0.033768
* DB_Report (876 jobs):
Runtime: mean 34.850457, median: 32.000000, min: 22.000000, max: 274.000000, sd: 15.243062
Attempts: mean 1.001142, median: 1.000000, min: 1.000000, max: 2.000000, sd: 0.033768
* DirectSynth (876 jobs), 3840 cores:
Runtime: mean 18050.928082, median: 17687.000000, min: 689.000000, max: 30760.000000, sd: 3571.608554
Attempts: mean 1.081050, median: 1.000000, min: 1.000000, max: 6.000000, sd: 0.343308

DirectSynth_titan (145 jobs), 240 nodes:
Runtime: mean 19616.213793, median: 19408.000000, min: 4682.000000, max: 30760.000000, sd: 3587.637235
Attempts: mean 1.082759, median: 1.000000, min: 1.000000, max: 2.000000, sd: 0.275517
DirectSynth_bluewaters (731 jobs), 120 nodes:
Runtime: mean 17740.440492, median: 17434.000000, min: 689.000000, max: 25058.000000, sd: 3485.860160
Attempts: mean 1.080711, median: 1.000000, min: 1.000000, max: 6.000000, sd: 0.355219
* Disaggregate_Disaggregate (876 jobs):
Runtime: mean 32.528539, median: 30.000000, min: 23.000000, max: 172.000000, sd: 12.949334
Attempts: mean 1.001142, median: 1.000000, min: 1.000000, max: 2.000000, sd: 0.033768
* Handoff (736 jobs):
Runtime: mean 3.086957, median: 0.000000, min: 0.000000, max: 1617.000000, sd: 59.976223
Attempts: mean 1.008152, median: 1.000000, min: 1.000000, max: 4.000000, sd: 0.127428
* GenSGTDax_GenSGTDax (887 jobs):
Runtime: mean 2.535513, median: 2.000000, min: 2.000000, max: 7.000000, sd: 0.821520
Attempts: mean 1.030440, median: 1.000000, min: 1.000000, max: 2.000000, sd: 0.171794
* Load_Amps (1752 jobs):
Runtime: mean 866.063927, median: 889.000000, min: 315.000000, max: 1854.000000, sd: 417.499023
Attempts: mean 1.101598, median: 1.000000, min: 1.000000, max: 19.000000, sd: 1.149229
* PostAWP_PostAWP (1771 jobs), 2 nodes:
Runtime: mean 1650.480519, median: 1555.000000, min: 482.000000, max: 3573.000000, sd: 352.539321
Attempts: mean 1.039526, median: 1.000000, min: 1.000000, max: 14.000000, sd: 0.483046
* PreAWP_GPU (886 jobs):
Runtime: mean 310.959368, median: 276.000000, min: 158.000000, max: 763.000000, sd: 105.501674
Attempts: mean 1.022573, median: 1.000000, min: 1.000000, max: 4.000000, sd: 0.169811
* PreCVM_PreCVM (887 jobs):
Runtime: mean 97.491545, median: 90.000000, min: 72.000000, max: 274.000000, sd: 20.336395
Attempts: mean 1.069899, median: 1.000000, min: 1.000000, max: 5.000000, sd: 0.367303
* PreSGT_PreSGT (886 jobs), 8 nodes:
Runtime: mean 207.305869, median: 196.000000, min: 158.000000, max: 576.000000, sd: 45.213291
Attempts: mean 1.033860, median: 1.000000, min: 1.000000, max: 5.000000, sd: 0.220261
* SetJobID_SetJobID (146 jobs):
Runtime: mean 0.034247, median: 0.000000, min: 0.000000, max: 2.000000, sd: 0.216269
Attempts: mean 1.027397, median: 1.000000, min: 1.000000, max: 2.000000, sd: 0.163238
* SetPPHost (144 jobs):
Runtime: mean 0.027778, median: 0.000000, min: 0.000000, max: 2.000000, sd: 0.202225
Attempts: mean 1.000000, median: 1.000000, min: 1.000000, max: 1.000000, sd: 0.000000
* Smooth_Smooth (886 jobs), 4 nodes:
Runtime: mean 981.109481, median: 843.000000, min: 321.000000, max: 3236.000000, sd: 615.348535
Attempts: mean 1.069977, median: 1.000000, min: 1.000000, max: 10.000000, sd: 0.630007
* UCVMMesh_UCVMMesh (886 jobs), 96 nodes:
Runtime: mean 557.007901, median: 671.500000, min: 57.000000, max: 2829.000000, sd: 544.539803
Attempts: mean 1.038375, median: 1.000000, min: 1.000000, max: 5.000000, sd: 0.257386
* UpdateRun_UpdateRun (3526 jobs):
Runtime: mean 0.031764, median: 0.000000, min: 0.000000, max: 38.000000, sd: 0.684162
Attempts: mean 1.011628, median: 1.000000, min: 1.000000, max: 3.000000, sd: 0.109818

* create_dir (10440 jobs):
Runtime: mean 3.776724, median: 2.000000, min: 2.000000, max: 1158.000000, sd: 18.502978
Attempts: mean 1.044540, median: 1.000000, min: 1.000000, max: 4.000000, sd: 0.305174
* cleanup_AWP (1472 jobs):
Runtime: mean 2.000000, median: 2.000000, min: 2.000000, max: 2.000000, sd: 0.000000
Attempts: mean 1.004076, median: 1.000000, min: 1.000000, max: 2.000000, sd: 0.063714
* clean_up (3452 jobs):
Runtime: mean 116.827057, median: 182.000000, min: 4.000000, max: 570.000000, sd: 94.090323
Attempts: mean 5.653824, median: 9.000000, min: 1.000000, max: 42.000000, sd: 4.153044
* register_bluewaters (731 jobs):
Runtime: mean 1015.307798, median: 970.000000, min: 443.000000, max: 2158.000000, sd: 389.140327
Attempts: mean 1.222982, median: 1.000000, min: 1.000000, max: 19.000000, sd: 1.660952
* register_titan (1018 jobs):
Runtime: mean 144.419450, median: 1.000000, min: 1.000000, max: 2085.000000, sd: 385.777688
Attempts: mean 1.001965, median: 1.000000, min: 1.000000, max: 2.000000, sd: 0.044281
* stage_inter (887 jobs):
Runtime: mean 4.908681, median: 4.000000, min: 4.000000, max: 662.000000, sd: 22.117336
Attempts: mean 1.029312, median: 1.000000, min: 1.000000, max: 2.000000, sd: 0.168680
* stage_in (4988 jobs):
Runtime: mean 14.967322, median: 5.000000, min: 4.000000, max: 187.000000, sd: 17.389459
Attempts: mean 1.033681, median: 1.000000, min: 1.000000, max: 9.000000, sd: 0.289603
* stage_out (1759 jobs):
Runtime: mean 1244.735645, median: 1061.000000, min: 210.000000, max: 34206.000000, sd: 1652.158490
Attempts: mean 1.085844, median: 1.000000, min: 1.000000, max: 22.000000, sd: 0.758517
//-->

== Production Checklist ==

<b>Science:</b>
*<s>Confirm that ERF 62 test produces results which closely match ERF 61</s>
*<s>Restore improvements to codes since ERF 58, and rerun USC for ERF 62</s>
*<s>Fix h/4 issue and rerun USC test.</s>
*<s>Create prioritized site list.</s>
*<s>Hold science readiness review.</s>
*<s>Add link to fault geometry on Zenodo, either on the wiki or the fault metadata page.</s>
*<s>Add copy of science readiness review slides to wiki.</s>
*<s>Generate 0.5 Hz v 1 Hz scatterplot from UCERF2 ERF run.</s>
*<s>Go through config updates as a pair to confirm they have been correctly applied.</s>

<b>Technical:</b>
*<s>Approach OLCF for the following requests:</s>
**<s>Quota increase to 300 TB</s>
**<s>8 jobs ready to run</s>
**<s>5 jobs in bin 5.</s>
*To be able to bundle jobs, fix issue with Summit glideins.
*To run post-processing, resolve issues using GO to transfer data back to /project at CARC.
*<s>Tag code</s>
*<s>Modify job sizes and runtimes.</s>
*<s>Test auto-submit script.</s>
*<s>Prepare pending file.</s>
*<s>Create XML file describing study for web monitoring tool.</s>
*Get usage stats for Summit.
*<s>Prepare cronjob on Summit for monitoring jobs.</s>
*<s>Activate script for monitoring x509 certificate.</s>
*<s>Modify workflows to not insert or calculate curves for PSA data.</s>
*<s>Test that workflows don't insert or calculate curves for PSA data.</s>
*<s>Modify dax-generator to use h/4 as default for surface point.</s>
*<s>Modify dax-generator to use ERF62 parameter file for generating GMPE comparison curves.</s>
*<s>Hold technical readiness review.</s>
*<s>Modify nt to 8000 (400 sec).</s>
*<s>Add calculation of PGV.</s>
*<s>Test calculation and insertion of PGV.</s>
*<s>Test SGT-only create/plan/run scripts.</s>
*<s>Test PP-only create/plan/run scripts.</s>
*<s>Fix issue with moving runs into Verified state</s>
*<s>Write and test pegasus-transfer wrapper to handle GO transfers to CARC.</s>

== Presentations, Posters, and Papers == | == Presentations, Posters, and Papers == | ||

| + | |||

| + | Science Readiness Review: [[Media:CyberShake_Study_21.12_Readiness_Review.odp | ODP]], [[Media:CyberShake_Study_21.12_Readiness_Review.pdf | PDF]] | ||

Latest revision as of 19:29, 17 August 2022

CyberShake 21.12 is a computational study that uses a new ERF with CyberShake, generated from an RSQSim catalog. We plan to calculate results for 335 sites in Southern California using the RSQSim ERF, a minimum Vs of 500 m/s, and a frequency of 1 Hz. We will use the CVM-S4.26.M01 model, and the GPU implementation of AWP-ODC-SGT enhanced from the BBP verification testing. We will begin by generating all sets of SGTs on Summit, then post-process them on a combination of Summit and Frontera.

Contents

- 1 Status

- 2 Data Products

- 3 Science Goals

- 4 Technical Goals

- 5 ERF

- 6 Sites

- 7 Velocity Model

- 8 Verification Tests

- 9 Technical and Scientific Updates

- 10 Output Data Products

- 11 Computational and Data Estimates

- 12 Lessons Learned

- 13 Stress Test

- 14 Events During Study

- 15 Performance Metrics

- 16 Production Checklist

- 17 Presentations, Posters, and Papers

Status

This study is complete.

It began on December 15, 2021 at 8:39:28 am PST and finished on January 13, 2022 at 1:06:24 am PST.

Data Products

In general, the Study 21.12b maps are preferred, unless showing the effect of changing RSQSim frictional parameters.

Hazard maps for this study are available here.

Comparisons of Study 21.12 and Study 15.4 are available here.

Results from Study 21.12b are available here.

Comparisons of Study 21.12b and Study 15.4 are available here.

Science Goals

The science goals for this study are:

- Calculate a regional CyberShake model using an alternative, RSQSim-derived ERF.

- Compare results from an RSQSim ERF to results using a UCERF2 ERF (Study 15.4).

- Quantify effects of source model non-ergodicity.

- Compare spatial distribution of ground motions (including directivity) to empirical and kinematic models.

Technical Goals

The technical goals for this study are:

- Perform a study using OLCF Summit as a key compute resource.

- Evaluate the performance of the new workflow submission host, shock-carc.

- Use Globus Online for staging of output data products.

ERF

The ERF was generated from an RSQSim catalog, with the following parameters:

- 715kyr catalog (the first 65k years of events were dropped, so that every fault's first event is excluded)

- 220,927 earthquakes with M6.5+

- All events have equal probability, 1/715k
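Because every event carries the same rate, hazard quantities for this ERF reduce to counting events. The Python sketch below assumes that "equal probability, 1/715k" means each event is assigned an annual rate of 1/715,000 per year, and that annual probabilities follow a Poisson model. `exceedance_rate` and its list of per-event intensity measures are hypothetical illustrations, not part of the CyberShake codebase.

```python
import math

CATALOG_YEARS = 715_000   # catalog duration after dropping the first 65 kyr
NUM_EVENTS = 220_927      # M6.5+ events in the ERF
# Assumption: every event is assigned the same annual rate, 1/715,000 per year.
RATE_PER_EVENT = 1.0 / CATALOG_YEARS


def exceedance_rate(event_ims, threshold):
    """Annual rate at which an intensity measure exceeds `threshold`.

    `event_ims` is a hypothetical list holding one IM value per catalog event.
    """
    return sum(RATE_PER_EVENT for im in event_ims if im > threshold)


def poisson_probability(rate, years=1.0):
    """Probability of at least one exceedance in `years`, assuming a Poisson process."""
    return 1.0 - math.exp(-rate * years)


total_rate = NUM_EVENTS * RATE_PER_EVENT
print(f"Total M6.5+ rate: {total_rate:.3f} per year")
print(f"P(at least one M6.5+ in 1 yr): {poisson_probability(total_rate):.3f}")
```

With these numbers the total M6.5+ rate is about 0.309 events per year; a site's hazard curve is built the same way, summing the common per-event rate over the events whose simulated ground motion exceeds each threshold.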

Additional details are available on the catalog's metadata page, and the catalog and input fault geometry files can be downloaded from Zenodo. This is the catalog used in Milner et al., 2021, which used 0.5 Hz CyberShake simulations performed in May, 2020.

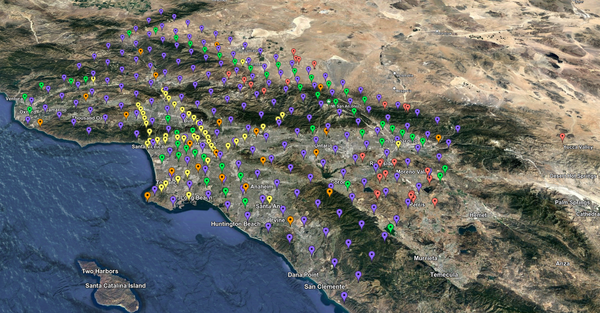

Sites

We will run a list of 335 sites, taken from the site list that was used in other Southern California studies. The order of execution will be:

- 10 sites used in Milner et al. (2021), each with top mesh point Vs at the 500 m/s floor: USC, SMCA, OSI, WSS, SBSM, LAF, s022, STNI, WNGC, PDE

- PAS hard rock site

- 20 km site grid

- 10 km site grid

- Remaining POIs, plus selected 5 km grid sites also used in Study 15.4

Velocity Model

We will use CVM-S4.26.M01.

To better represent the near-surface layer, we will populate the velocity parameters for the surface point by querying the velocity model at a depth of (grid spacing)/4. For this study, the grid spacing is 100m, so we will query UCVM at a depth of 25m and use that value to populate the surface grid point. The rationale is that the media parameters at the surface grid point are supposed to represent the material properties for [0, 50m], and this is better represented by using the value at 25m than the value at 0m.
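The h/4 rule follows from treating the surface grid point as representing the interval [0, h/2] and sampling at that interval's midpoint. A minimal Python sketch, with a hypothetical function name; the actual logic lives in the CyberShake mesh-generation tools and may differ in detail:

```python
def surface_query_depth(grid_spacing_m: float) -> float:
    """Depth (m) at which to query the velocity model for the surface grid point.

    Assumes the surface point represents material from the free surface down to
    half a grid spacing, i.e. [0, h/2], and samples the midpoint of that interval.
    """
    top = 0.0
    bottom = grid_spacing_m / 2.0
    return (top + bottom) / 2.0  # equals h/4


# For this study h = 100 m, so UCVM is queried at 25 m rather than at 0 m.
print(surface_query_depth(100.0))
```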

Verification Tests

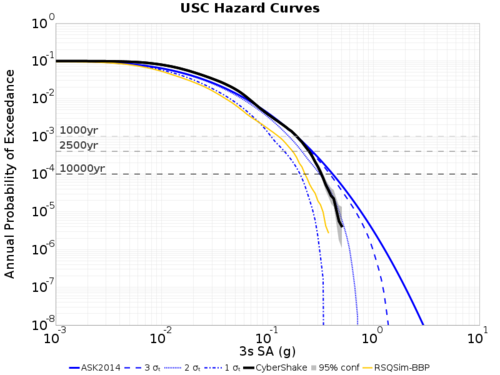

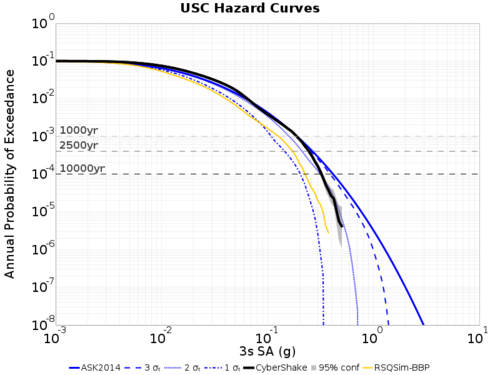

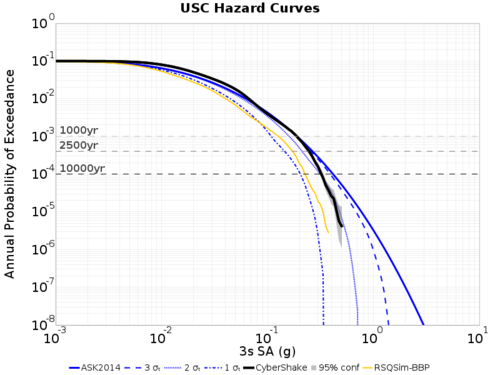

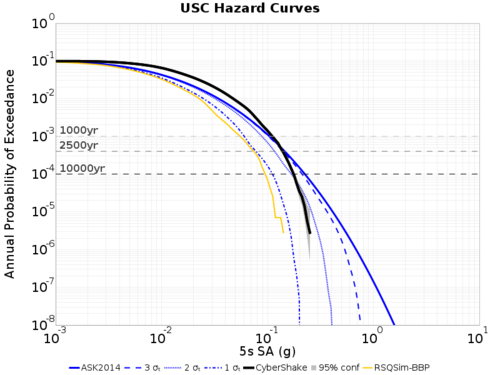

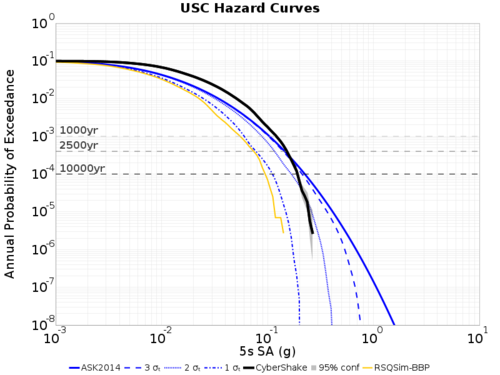

USC Hazard Curves

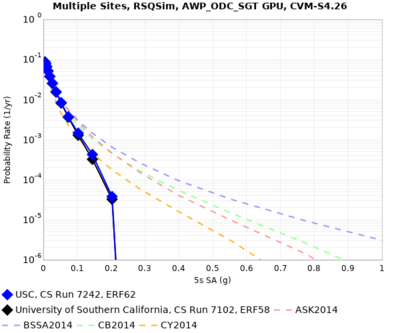

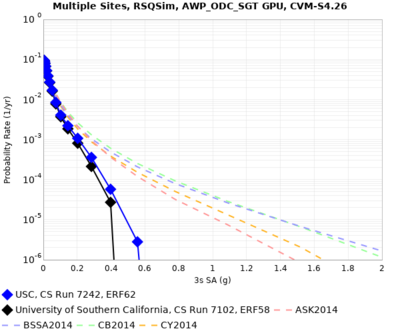

Hazard curve comparisons for site USC, between:

- ERF 58: 0.5 Hz RSQSim ERF used for Milner et al. (2021) calculations, May 2020

- ERF 61: 0.5 Hz RSQSim production ERF with the same catalog

- ERF 62: 1 Hz RSQSim production ERF with the same catalog

The first test run of ERF 61 used the wrong (older) mesh lower depth of 40 km, which is why the top right plot differs slightly from the top left. The middle left plot agrees perfectly with the top left.

| ERF 58, Original | ERF 61 w/ wrong depth |

| ERF 61 w/ corrected depth | ERF 62 (1 Hz) with old simulation parameters |

| ERF 62 (1 Hz) with proposed (new) simulation parameters | ERF 62 scatter comparing new parameters (y) and old parameters (x) |

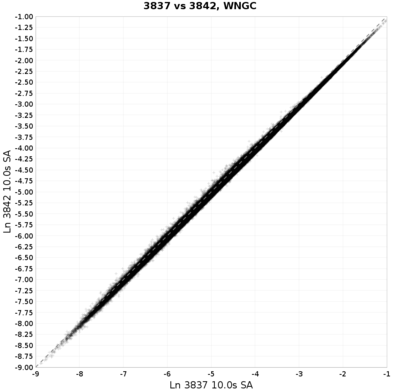

The first 1 Hz test run (bottom right above) uses the same simulation parameters as ERF 58 and 61, changing only the frequency. Some differences are apparent, and they also persist in the longer-period (5 s) curves:

| ERF 61, 5s Sa, 0.5 Hz, old simulation parameters | ERF 62, 5s Sa, 1 Hz, old simulation parameters |

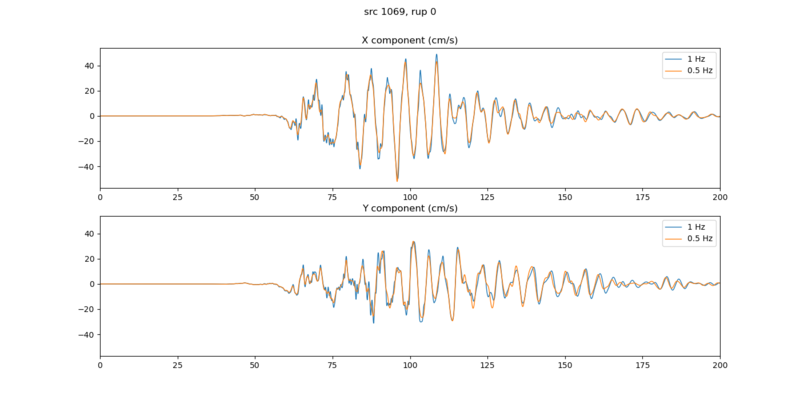

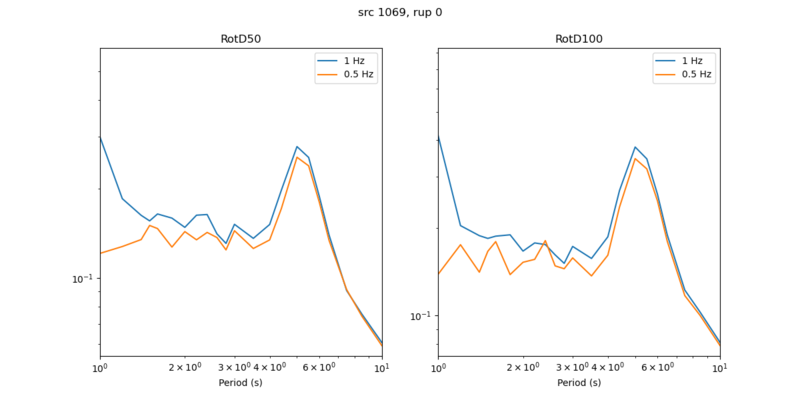

Here are seismograms and RotDs for the largest-amplitude rupture in this 0.5 Hz vs 1 Hz test:

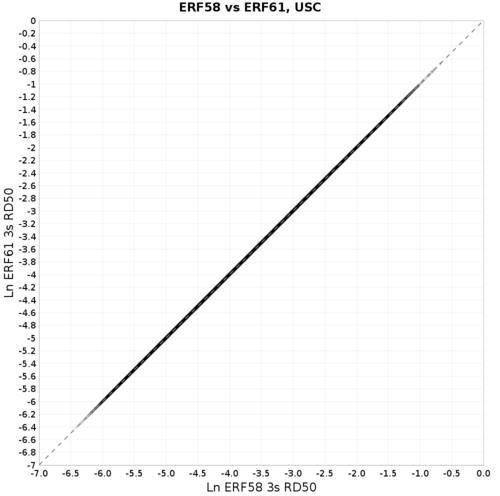

3s Amplitude Scatter plots

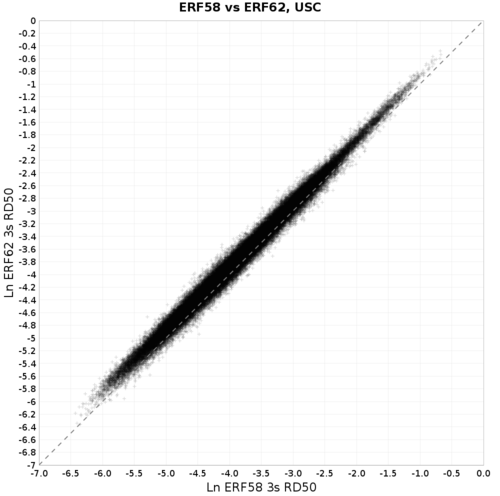

These plots show (left) that ERF 61 exactly reproduces ERF 58, and (right) that there are indeed differences going from 0.5 Hz to 1 Hz:

1 Hz vs 0.5 Hz comparisons

Here is a comparison of 1 Hz runs (y axis) and 0.5 Hz runs (x axis). The top row is a recent USC run with the RSQSim ERF. The bottom row is a previous test with UCERF2-CyberShake and the WNGC site. The columns are 3s, 5s, and 10s SA, respectively, all geometric mean.

Technical and Scientific Updates

Since our last study we have made a number of scientific updates to the platform, many as a result of the BBP verification effort.

- Several bugs were found and fixed in the AWP code.

- We have switched from stress insertion to velocity insertion of the impulse when generating SGTs.

- The sponge zone used in the absorbing boundary condition was increased from 50 to 80 points.

- By default, we use a depth of h/4 when querying UCVM to populate the surface grid point.

- The padding between the nearest fault or site and the edge of the volume was increased from 30 to 50 km.

- We fixed a bug in the coordinate conversion between RWG and AWP: previously we were adding 1 to the RWG z-coordinate to produce the AWP z-coordinate, but both codes use z=1 to represent the surface and therefore no increment should be applied.

- When calculating Qs in the SGT header generation code, a default Qs of 25 was always used. This has been changed to Qs=0.05Vs.

- We have turned off the adjustment of mu and lambda.

- FP was increased from 0.5 to 1.0. Note: this change was not picked up correctly by the wrapper, so 0.5 was still used.

- We modified the lambda and mu calculations in AWP to use the original media parameter values from the velocity mesh rather than the FP-modified ones when calculating strains, to be consistent with RWG.
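Two of these changes are simple enough to state as code. The Python sketch below is illustrative only: the function names are hypothetical, and it assumes Vs is expressed in m/s, so that Qs = 0.05Vs gives Qs = 25 at the 500 m/s Vs floor used in this study. It also shows the corrected RWG-to-AWP z-index conversion, in which both codes use z = 1 for the surface.

```python
def qs_old(vs_m_per_s: float) -> float:
    """Previous SGT header behaviour: a constant Qs, independent of Vs."""
    return 25.0


def qs_new(vs_m_per_s: float) -> float:
    """Current behaviour: Qs proportional to Vs (Vs assumed to be in m/s)."""
    return 0.05 * vs_m_per_s


def rwg_to_awp_z_old(z_rwg: int) -> int:
    """Buggy conversion: added 1 even though both codes index the surface as z = 1."""
    return z_rwg + 1


def rwg_to_awp_z(z_rwg: int) -> int:
    """Corrected conversion: identical indexing, so no offset is applied."""
    return z_rwg


for vs in (500.0, 1000.0, 3500.0):
    print(f"Vs={vs:.0f} m/s  old Qs={qs_old(vs):.1f}  new Qs={qs_new(vs):.1f}")

print(rwg_to_awp_z_old(1), rwg_to_awp_z(1))  # surface point: 2 (old, wrong) vs 1
```

Under the m/s assumption the two Qs formulas agree only at the Vs floor; in stiffer material the new Qs is larger, meaning less attenuation than the old constant value implied.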

Study 18.8 Lessons Learned

- Consider separating SGT and PP workflows in auto-submit tool to better manage the number of each, for improved reservation utilization.

- Create a read-only way to look at the CyberShake Run Manager website.

- Consider reducing levels of the workflow hierarchy, thereby reducing load on shock.

- Determine advance plan for SGTs for sites which require fewer GPUs.

- Determine advance plan for SGTs for sites which exceed memory on nodes.

- Create new velocity model ID for composite model, capturing metadata.

We modified the database to enable composite models, but for this study we are just using a single model.

- Verify all Java processes grab a reasonable amount of memory.

- Clear disk space before study begins to avoid disk contention.

- Add stress test before beginning study, for multiple sites at a time, with cleanup.

- In addition to disk space, check local inode usage.

Only 1% of the inodes are used on shock-carc; we will assume /project has sufficient inodes, as we can't check them.

- Establish clear rules and policies about reservation usage.

- If submitting to multiple reservations, make sure enough jobs are eligible to run that no reservation is starved.

We are not planning to run this study with reservations.

- If running primarily SGTs for a while, make sure they don't get deleted due to quota policies.

We will stage the SGTs to HPSS if there is a delay in post-processing them. Summit has a 90-day purge policy, so we will have some time.

Output Data Products

File-based data products

We plan to produce the following data products which will be stored at CARC:

- Seismograms: 2-component seismograms, 8000 timesteps (400 sec) each

- PSA: X and Y spectral acceleration at 44 periods (10, 9.5, 9, 8.5, 8, 7.5, 7, 6.5, 6, 5.5, 5, 4.8, 4.6, 4.4, 4.2, 4, 3.8, 3.6, 3.4, 3.2, 3, 2.8, 2.6, 2.4, 2.2, 2, 1.66667, 1.42857, 1.25, 1.11111, 1, .66667, .5, .4, .33333, .285714, .25, .22222, .2, .16667, .142857, .125, .11111, .1 sec)

- RotD: PGV, and RotD50, the RotD50 azimuth, and RotD100 at 22 periods (1.0, 1.2, 1.4, 1.5, 1.6, 1.8, 2.0, 2.2, 2.4, 2.6, 2.8, 3.0, 3.5, 4.0, 4.4, 5.0, 5.5, 6.0, 6.5, 7.5, 8.5, 10.0 sec)

- Durations: for X and Y components, energy integral, Arias intensity, cumulative absolute velocity (CAV), and for both velocity and acceleration, 5-75%, 5-95%, and 20-80%.
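The seismogram time step and the number of intensity measures per seismogram pair follow directly from the list above. In the Python tally below, the breakdown (in particular, treating the RotD50 azimuth as stored but not counted as an intensity measure) is our reading, chosen because it reproduces the 151 IMs per seismogram pair and the 3,884,216,639 total reported under Performance Metrics.

```python
NT = 8000               # timesteps per seismogram
DURATION_S = 400.0      # seismogram length in seconds
dt = DURATION_S / NT    # time step in seconds

PSA_PERIODS = 44
ROTD_PERIODS = 22

psa = 2 * PSA_PERIODS              # X and Y components
rotd = 1 + 2 * ROTD_PERIODS        # PGV, plus RotD50 and RotD100 at each period
# Per component: energy integral, Arias intensity, CAV, plus
# (velocity, acceleration) x (5-75%, 5-95%, 20-80%) significant durations.
durations = 2 * (3 + 2 * 3)

ims_per_pair = psa + rotd + durations
seismogram_pairs = 25_723_289      # reported total for 335 sites

print(f"dt = {dt} s")
print(f"IMs per seismogram pair: {ims_per_pair}")
print(f"Total IMs: {ims_per_pair * seismogram_pairs:,}")
```

The 0.05 s time step gives a Nyquist frequency of 10 Hz, comfortably above the 1 Hz simulation frequency.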

Database data products

We plan to store the following data products in the database:

- PSA: none

- RotD: RotD50 and RotD100 at 10, 7.5, 5, 4, 3, and 2 sec, and PGV.

- Durations: acceleration 5-75% and 5-95% for X and Y components

Computational and Data Estimates

Computational Estimates

We based these estimates on scaling from site USC (the average site has 3.8% more events and a volume 9.7% larger).

| Site | UCVM runtime | UCVM nodes | SGT runtime | SGT nodes | Other SGT workflow jobs | Summit total |

|---|---|---|---|---|---|---|

| USC | 372 sec | 80 | 2628 sec | 67 | 1510 node-sec | 106.5 node-hrs |

| Average (est) | 408 sec | 80 | 2883 sec | 67 | 1550 node-sec | 116.8 node-hrs |

Adding 10% overrun margin gives us an estimate of 43k node-hours for SGT calculation.
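The Summit totals in the table can be reproduced if each site needs one UCVM mesh-generation job, two SGT runs (one per horizontal component), and the other SGT workflow jobs. The two-component factor is our assumption, inferred because it matches the 106.5 and 116.8 node-hour figures; the helper below is a hypothetical Python sketch, not part of the workflow tools.

```python
SITES = 335
MARGIN = 1.10  # 10% overrun margin


def sgt_node_hours(ucvm_sec, ucvm_nodes, sgt_sec, sgt_nodes, other_node_sec,
                   sgt_components=2):
    """Node-hours for one site's SGT workflow.

    Assumes one UCVM job, `sgt_components` SGT runs (X and Y), and a lump sum of
    node-seconds for the remaining SGT workflow jobs.
    """
    node_sec = (ucvm_sec * ucvm_nodes
                + sgt_components * sgt_sec * sgt_nodes
                + other_node_sec)
    return node_sec / 3600.0


usc = sgt_node_hours(372, 80, 2628, 67, 1510)
average = sgt_node_hours(408, 80, 2883, 67, 1550)
study_total = average * SITES * MARGIN

print(f"USC: {usc:.1f} node-hrs, average: {average:.1f} node-hrs")
print(f"Study SGT estimate with margin: {study_total / 1000:.1f}k node-hrs")
```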

| Site | DirectSynth runtime (sec) | DirectSynth nodes | Summit total (node-hrs) |

|---|---|---|---|

| USC | 1081 | 36 | 10.8 |

| Average (est) | 1122 | 36 | 11.2 |

Adding 10% overrun margin gives an estimate of 4.2k node-hours for post-processing.

Data Estimates

Summit

| Site | Velocity mesh | SGTs size | Temp data | Output data |

|---|---|---|---|---|

| USC | 243 GB | 196 GB | 439 GB | 4.5 GB |

| Average | 267 GB | 203 GB | 470 GB | 4.7 GB |

| Total | 87 TB | 66 TB | 153 TB | 1.5 TB |

This is a total of 307 TB, which we could reach if we calculate all the SGTs first and don't delete anything. The default quota on Summit is 50 TB, so I suggest we request a quota increase to at least 300 TB so we don't need to rely on cleanup.

If we need to keep the SGTs for a while before performing post-processing, the quota on HPSS is 100 TB, so we could store them there.

CARC

We estimate 1.5 TB in output data, which will be transferred back to CARC.

shock-carc

The study should use approximately 200 GB in workflow log space on /home/shock. This drive has approximately 1.7 TB free.

moment database

The PeakAmplitudes table uses approximately 100 bytes per entry.

100 bytes/entry * 17 entries/event * 76786 events/site * 335 sites = 41 GB. The drive on moment with the mysql database has 919 GB free.
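The 17 entries per event appear to correspond to the database products listed earlier: RotD50 and RotD100 at six periods, PGV, and two acceleration durations for each of two components. Reproducing the arithmetic in Python shows that the 41 GB figure is in binary units (GiB); in decimal gigabytes the same estimate is about 43.7 GB.

```python
BYTES_PER_ENTRY = 100
EVENTS_PER_SITE = 76_786
SITES = 335

rotd_entries = 2 * 6       # RotD50 and RotD100 at 10, 7.5, 5, 4, 3, 2 sec
pgv_entries = 1
duration_entries = 2 * 2   # acceleration 5-75% and 5-95%, X and Y components
entries_per_event = rotd_entries + pgv_entries + duration_entries

total_bytes = BYTES_PER_ENTRY * entries_per_event * EVENTS_PER_SITE * SITES
print(f"entries/event: {entries_per_event}")
print(f"{total_bytes / 1e9:.1f} GB (decimal), {total_bytes / 2**30:.1f} GiB")
```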

Lessons Learned

Stress Test

The stress test began on 12/10/21 at 23:05 PST. We selected 21 sites for running both SGT and PP.

The stress test concluded on 12/12/21 at 19:41 PST.

Issues Discovered

- Workflows aren't being recognized as running by the Run Manager and are prematurely moved into an error state.

Fixed issue with correctly extracting Condor ID from run.

- Output SGTs are being registered in a local RC rather than a global one, so the PP workflow can't find them.

Modified parameters in properties file.

- Memory requirements for planning the PP Synth workflow are high, so if too many run at once the Java process aborts.

Set JAVA_HEAPMAX to 10240.

- Too many Globus emails.

Auto-moved them to a different folder.

- Wrong ERF file used in curve calculations.

Fixed ERF file used in DAX generator.

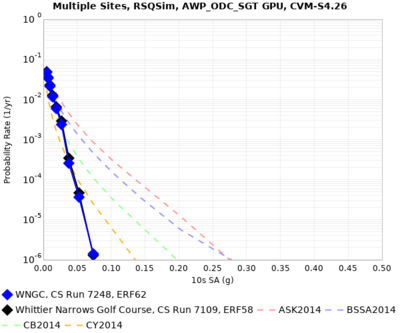

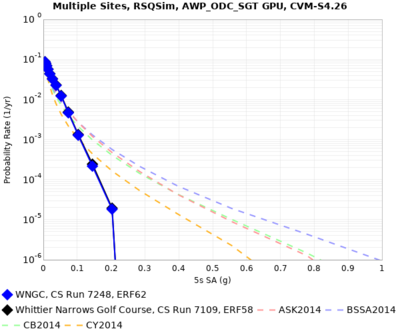

Comparison Curves

Below are comparison curves between ERF 62 and ERF 58.

Comparison curve plots at 10 sec, 5 sec, and 3 sec for sites LAF, OSI, PAS, PDE, s022, SBSM, SMCA, STNI, USC, WNGC, and WSS.

Events During Study

On 12/18, we discovered that some SGT jobs weren't finishing in 60 minutes. We increased the runtime to 90 minutes.

On 12/19, we reduced the runtime of the PostAWP job from 2 hours to 20 minutes, and of the NaN_Check job from 1 hour to 15 minutes.

The dtn36 node was restarted on 12/31 at 00:30 EST, which moved all workflow jobs into a held state; this was not discovered until 1/1 at 22:50 EST. The rvgahp daemon was then restarted and the jobs were released.

The dtn36 node was restarted again on 1/2 at 23:05 EST, so the rvgahp daemon was restarted on 1/3 at 22:05.

Performance Metrics

The production runs began on 12/15/21 at 8:39:28 am PST and finished on 1/13/22 at 1:06:24 am PST, for a duration of 688.4 hrs.
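The makespan can be checked with Python's standard `datetime` module. Both timestamps are in PST and no daylight-saving transition falls between them, so naive datetimes are sufficient here.

```python
from datetime import datetime

# Production start and end, both in PST.
start = datetime(2021, 12, 15, 8, 39, 28)
end = datetime(2022, 1, 13, 1, 6, 24)

makespan_hrs = (end - start).total_seconds() / 3600.0
print(f"Makespan: {makespan_hrs:.1f} hrs")  # Makespan: 688.4 hrs
```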

Usage

Before starting the stress test, the usage on Summit was 237,103 for GEO112 and 8127 for user callag.

After the stress test, the usage on Summit was 240,817 for GEO112 and 11055 for user callag. That's 139 node-hours per site.

Before starting the production runs, the usage on Summit was 244,307 for GEO112 and 11062.9 for user callag.

After the production run, the usage on Summit for user callag was 76532.8. Total usage was 65469.9 node-hours.
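The per-site figures quoted here and under Application-level Metrics follow from differences in the user callag usage, assuming those usage numbers are in node-hours. The factor of 42 cores per node used for the core-hour conversion is Summit's count of user-available CPU cores per node, the same factor implied by the reported 2,749,735.8 core-hours; the helper name is hypothetical.

```python
SUMMIT_CORES_PER_NODE = 42


def node_hours_per_site(usage_before, usage_after, sites):
    """Average node-hours per site from before/after usage snapshots."""
    return (usage_after - usage_before) / sites


stress_per_site = node_hours_per_site(8127, 11055, 21)  # 21 stress-test sites
production_total = 76532.8 - 11062.9                     # node-hours in production
production_per_site = production_total / 313             # 313 production runs

print(f"Stress test: {stress_per_site:.0f} node-hrs/site")
print(f"Production: {production_total:.1f} node-hrs total, "
      f"{production_per_site:.1f} node-hrs/site, "
      f"{production_total * SUMMIT_CORES_PER_NODE:.1f} core-hrs")
```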

Application-level Metrics

- Makespan: 688.4 hrs

- Uptime: 631.1 hrs (rvgahp down due to dtn36 node restarts)

Note: uptime is what's used for calculating the averages for jobs, nodes, etc.

- 313 runs in production + 22 in testing

- 116,800 unique rupture variations considered (335 sites)

- 25,723,289 seismogram pairs generated (335 sites)

- 3,884,216,639 intensity measures generated (151 per seismogram pair) (335 sites)

- 16,415 jobs executed

- Per run: 11 directory create, 13 local, 9 staging, 2 register, 14 remote

Note: we're including core-hour counts for backward comparisons, but since most of the work was done on GPUs this isn't very meaningful.

- 65469.9 node-hrs used (2749735.8 core-hrs)

- 209.2 node-hrs per site (8785.1 core-hrs). This is 80% more than our original estimate, and 50% more than the stress test showed.

- On average, 2.5 jobs running, with a max of 36

- Average of 102 nodes used, with a maximum of 2128 (89376 cores/12462 GPUs, 46.2% of Summit)

- 670 workflows run

- ? successful

- ? SGT

- ? PP

- ? failed

- ? SGT

- ? PP

- ? successful

- Total data generated: ?

- 72 TB SGTs generated (220 GB per single SGT)

- 165 TB intermediate data generated

- 1.9 TB output data (102,893,156 files)

Delay per job (using a 7-day, no-restarts cutoff: ? workflows, ? jobs) was mean: ? sec, median: ?, min: ?, max: ?, sd: ?

| Bins (sec) | 0 | 60 | 120 | 180 | 240 | 300 | 600 | 900 | 1800 | 3600 | 7200 | 14400 | 43200 | 86400 | 172800 | 259200 | 604800 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Jobs per bin | ? | ? | ? | ? | ? | ? | ? | ? | ? | ? | ? | ? | ? | ? | ? | ? |

- Application parallel node speedup (node-hours divided by makespan) was ?x. (Divided by uptime: ?x)

- Application parallel workflow speedup (number of workflows times average workflow makespan divided by application makespan) was ?x. (Divided by uptime: ?x)

Workflow-level Metrics

- The average makespan of a workflow (7-day cutoff, workflows with retries ignored, so ?/? workflows considered) was ? sec (? hrs), median: ? (? hrs), min: ?, max: ?, sd: ?

- For SGT workflows (736 workflows), mean: ? sec (? hrs), median: ? (? hrs), min: ?, max: ?, sd: ?

- For PP workflows (735 workflows), mean: ? (? hrs), median: ? (? hrs), min: ?, max: ?, sd: ?

- Workflow parallel core speedup (? workflows) was mean: ?, median: ?, min: ?, max: ?, sd: ?

- Workflow parallel node speedup (? workflows) was mean: ?, median: ?, min: ?, max: ?, sd: ?

Production Checklist

Science:

- ~~Confirm that ERF 62 test produces results which closely match ERF 61~~

- ~~Restore improvements to codes since ERF 58, and rerun USC for ERF 62~~

- ~~Fix h/4 issue and rerun USC test.~~

- ~~Create prioritized site list.~~

- ~~Hold science readiness review.~~

- ~~Add link to fault geometry on Zenodo, either on the wiki or the fault metadata page.~~

- ~~Add copy of science readiness review slides to wiki.~~

- ~~Generate 0.5 Hz v 1 Hz scatterplot from UCERF2 ERF run.~~

- ~~Go through config updates as a pair to confirm they have been correctly applied.~~

Technical:

- ~~Approach OLCF for the following requests:~~

  - ~~Quota increase to 300 TB~~

  - ~~8 jobs ready to run~~

  - ~~5 jobs in bin 5.~~

- To be able to bundle jobs, fix issue with Summit glideins.

- To run post-processing, resolve issues using GO to transfer data back to /project at CARC.

- ~~Tag code~~

- ~~Modify job sizes and runtimes.~~

- ~~Test auto-submit script.~~

- ~~Prepare pending file.~~

- ~~Create XML file describing study for web monitoring tool.~~

- Get usage stats for Summit.

- ~~Prepare cronjob on Summit for monitoring jobs.~~

- ~~Activate script for monitoring x509 certificate.~~

- ~~Modify workflows to not insert or calculate curves for PSA data.~~

- ~~Test that workflows don't insert or calculate curves for PSA data.~~

- ~~Modify dax-generator to use h/4 as default for surface point.~~

- ~~Modify dax-generator to use ERF62 parameter file for generating GMPE comparison curves.~~

- ~~Hold technical readiness review.~~

- ~~Modify nt to 8000 (400 sec).~~

- ~~Add calculation of PGV.~~

- ~~Test calculation and insertion of PGV.~~

- ~~Test SGT-only create/plan/run scripts.~~

- ~~Test PP-only create/plan/run scripts.~~

- ~~Fix issue with moving runs into Verified state.~~

- ~~Wrote and tested pegasus-transfer wrapper to handle GO transfers to CARC.~~