CyberShake Workflow Framework

Revision as of 23:17, 26 February 2018

This page provides documentation on the workflow framework that we use to execute CyberShake.

As of 2018, we use shock.usc.edu as our workflow submit host for CyberShake, but this setup could be replicated on any machine.

Overview

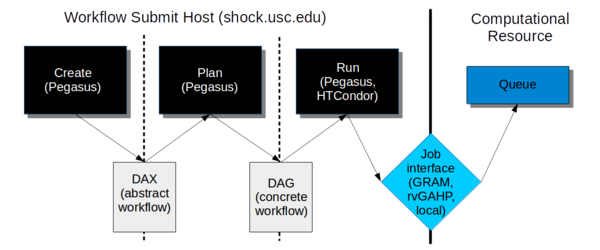

The workflow framework consists of three phases:

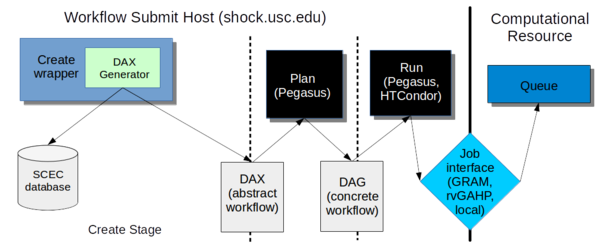

- Create. In this phase, we use the Pegasus API to create an "abstract description" of the workflow, so named because it does not have the specific paths or runtime configuration information for any system. It is a general description of the tasks to execute in the workflow, the input and output files, and the dependencies between them. This description is called a DAX and is in XML format.

- Plan. In this phase, we use the Pegasus planner to take our abstract description and convert it into a "concrete description" for execution on one or more specific computational resources. At this stage, paths to executables and specific configuration information (such as the correct certificate to use and what account to charge) are added to the workflow. This description is called a DAG and consists of multiple files in a format expected by HTCondor.

- Run. In this phase, we use Pegasus to hand our DAG off to HTCondor, which supervises the execution of the workflow. HTCondor maintains a queue of jobs. Jobs with all their dependencies satisfied are eligible to run and are sent to their execution system.
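To illustrate the Run phase, here is a minimal Python sketch of how a set of jobs and their dependencies determines which jobs are eligible to run at any point. It is not how HTCondor or DAGMan is implemented; the job names are hypothetical, and real CyberShake workflows contain far more jobs.

```python
# Hypothetical jobs mapped to the jobs they depend on (their parents).
dependencies = {
    "PreSGT": [],
    "GenSGT": ["PreSGT"],
    "PostSGT": ["GenSGT"],
    "CheckSGT": ["GenSGT"],
}


def ready_jobs(dependencies, completed):
    """Return unfinished jobs whose parents have all completed."""
    return sorted(
        job
        for job, parents in dependencies.items()
        if job not in completed and all(p in completed for p in parents)
    )


def run_order(dependencies):
    """Group jobs into successive waves of jobs that could run together."""
    completed = set()
    waves = []
    while len(completed) < len(dependencies):
        wave = ready_jobs(dependencies, completed)
        if not wave:
            raise ValueError("dependency cycle: no job is eligible to run")
        waves.append(wave)
        completed.update(wave)
    return waves


if __name__ == "__main__":
    for number, wave in enumerate(run_order(dependencies), start=1):
        print(f"wave {number}: {', '.join(wave)}")
```

In this sketch, the two jobs that depend only on GenSGT become eligible at the same time, which is the kind of parallelism the queue can exploit.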

We will go into each phase in more detail below.

Software Dependencies

Before attempting to create and execute a workflow, the following software dependencies must be satisfied.

Workflow Submit Host

In addition to Java 1.7+, we require the following software packages on the workflow submission host:

Pegasus-WMS

Purpose: We use Pegasus to create our workflow description and plan it for execution with HTCondor. Pegasus also provides a number of other features, such as automatically adding transfer jobs, wrapping jobs in kickstart for runtime statistics, using monitord to populate a database with metrics, and registering output files in a catalog.

How to obtain: You can download the latest version (binaries) from https://pegasus.isi.edu/downloads/, or clone the git repository at https://github.com/pegasus-isi/pegasus . We usually clone the repository, because we regularly need new features implemented and tested. We are currently running 4.7.3dev on shock, but you should install the latest version.

Special installation instructions: Follow the instructions to build from source - it's straightforward. On shock, we install Pegasus into /opt/pegasus, and update the default link to point to our preferred version.

HTCondor

Purpose: HTCondor performs the actual execution of the workflow. DAGMan, a component of HTCondor, maintains a queue and keeps track of job dependencies, submitting jobs to the appropriate resource when it's time for them to run.

How to obtain: HTCondor is available at https://research.cs.wisc.edu/htcondor/downloads/, but installing from a repo is the preferred approach. We are currently running 8.4.8 on shock. You should install the 'Current Stable Release'.

Special installation instructions: We've been asking the system administrator to install it in shared space.

HTCondor has some configuration information which must be set up correctly. On shock, the two configuration files are stored at /etc/condor/condor_config and /etc/condor/condor_config.local.

In condor_config, make the following changes:

- LOCAL_CONFIG_FILE = /path/to/condor_config.local

- ALLOW_READ = *

- ALLOW_WRITE should be set to IPs or hostnames of systems which are allowed to add nodes to the Condor pool. For example, on shock it is currently ALLOW_WRITE = *.ccs.ornl.gov, *.usc.edu

- CONDOR_IDS = uid.gid of 'Condor' user

- All the MAX_*_LOG entries should be set to something fairly large. On shock we use 100000000.

- DAEMON_LIST = MASTER, SCHEDD, COLLECTOR, NEGOTIATOR

- HIGHPORT and LOWPORT should be set to define the port range Condor is allowed to use

- DELEGATE_JOB_GSI_CREDENTIALS=False ; when set to True, Condor sometimes picked up the wrong proxy for file transfers.

In condor_config.local, make the following changes:

- NETWORK_INTERFACE = <machine ip>

- DAGMAN_MAX_SUBMITS_PER_INTERVAL = 80

- DAGMAN_SUBMIT_DELAY = 1

- DAGMAN_LOG_ON_NFS_IS_ERROR = False

- START_LOCAL_UNIVERSE = TotalLocalJobsRunning<80

- START_SCHEDULER_UNIVERSE = TotalSchedulerJobsRunning<200

- JOB_START_COUNT = 50

- JOB_START_DELAY = 1

- MAX_PENDING_STARTD_CONTACTS = 80

- SCHEDD_INTERVAL = 120

- RESERVED_SWAP = 500

- CONDOR_HOST = <workflow management host>

- LOG_ON_NFS_IS_ERROR = False

- DAEMON_LIST = $(DAEMON_LIST) STARTD

- NUM_CPUS = 50

- START = True

- SUSPEND = False

- CONTINUE = True

- PREEMPT = False

- KILL = False

- REMOTE_GAHP = /path/to/rvgahp_client

- GRIDMANAGER_MAX_SUBMITTED_JOBS_PER_RESOURCE = 100, /tmp/cybershk.titan.sock, 30

- GRIDMANAGER_MAX_PENDING_SUBMITS_PER_RESOURCE = 20

- GRIDMANAGER_MAX_JOBMANAGERS_PER_RESOURCE = 30
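When replicating this setup on another machine, it can help to check a configuration file against the values listed above. The following is a rough Python sketch, not an HTCondor tool: it only understands plain KEY = VALUE lines, ignores macros such as $(DAEMON_LIST), includes, and line continuations, and the function names are made up for this example. On a live system, condor_config_val reports the value HTCondor is actually using.

```python
def parse_condor_config(text):
    """Parse simple KEY = VALUE lines, skipping blank lines and # comments."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        # Split on the first '=' only, so values may contain '=' or '<'.
        key, sep, value = line.partition("=")
        if not sep:
            continue
        # Keys are upper-cased here purely to make comparison easy.
        settings[key.strip().upper()] = value.strip()
    return settings


# A few of the condor_config.local values listed above.
EXPECTED = {
    "DAGMAN_MAX_SUBMITS_PER_INTERVAL": "80",
    "DAGMAN_SUBMIT_DELAY": "1",
    "JOB_START_COUNT": "50",
    "SCHEDD_INTERVAL": "120",
}


def find_mismatches(settings, expected=EXPECTED):
    """Return {key: (expected, actual)} for missing or differing settings."""
    return {
        key: (want, settings.get(key))
        for key, want in expected.items()
        if settings.get(key) != want
    }


if __name__ == "__main__":
    sample = "DAGMAN_SUBMIT_DELAY = 1\nJOB_START_COUNT = 40\n"
    for key, (want, got) in find_mismatches(parse_condor_config(sample)).items():
        print(f"{key}: expected {want}, found {got}")
```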

Globus Toolkit

Purpose: The Globus Toolkit provides tools for job submission, file transfer, and management of certificates and proxies.

How to obtain: You can download it from http://toolkit.globus.org/toolkit/downloads/latest-stable/ .

Special installation instructions: Configuration files are located in install_dir/etc/*.conf. You should review them, especially the certificate-related configuration, and make sure that they are correct.

The Globus Toolkit reached end-of-life in January 2018, so it's not clear that it will be needed going forward. Currently, it is installed on shock at /usr/local/globus .

rvGAHP

To enable rvGAHP submission for systems which don't support remote authentication using proxies (currently Titan), you should configure rvGAHP on the submission host as described here: https://github.com/juve/rvgahp .

Remote Resource

Pegasus-WMS

On the remote resource, only the Pegasus-worker packages are technically required, but I recommend installing all of Pegasus anyway. This may also require installing Ant. Typically, we install Pegasus to <CyberShake home>/utils/pegasus.

GRAM submission

To support GRAM submission, the Globus Toolkit (http://toolkit.globus.org/toolkit/) needs to be installed on the remote system. It's already installed at Titan and Blue Waters. Since the toolkit reached end-of-life in January 2018, there is some uncertainty as to what we will move to.

rvGAHP submission

For rvGAHP submission - our approach for systems which do not permit the use of proxies for remote authentication - you should follow the instructions at https://github.com/juve/rvgahp to set up rvGAHP correctly.

Create

In the creation stage, we invoke a wrapper script which calls the DAX Generator, which creates the DAX file for a CyberShake workflow.

At creation, we decide which workflow we want to run: SGT only, post-processing only, or both ('integrated'). We offer this choice because we often want to run the SGT and post-processing workflows in different places. For example, we might want to run the SGT workflow on Titan because of the large number of GPUs, and then the post-processing on Blue Waters because of its XE nodes. If you create an integrated workflow, both the SGT and post-processing parts will run on the same machine. (This is because of the way that we've set up the planning scripts; this could be changed in the future if needed.)

Wrapper Script

Four wrapper scripts are available, depending on which kind of workflow you are creating.

create_sgt_dax.sh

Purpose: To create the DAX files for an SGT-only workflow.

Detailed description: create_sgt_dax.sh takes the command-line arguments and, by querying the database, determines a Run ID to assign to the run. The entry corresponding to this run in the database is either updated or created. This run ID is then added to the file <site short name>_SGT_dax/run_table.txt. It then compiles the DAX Generator (in case any changes have been made) and runs it with the correct command-line arguments, creating the DAXes. Next, a directory, <site short name>_SGT_dax/run_<run id>, is created, and the DAX files are moved into that directory. Finally, the run in the database is edited to reflect that creation is complete.
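The file bookkeeping in this description can be sketched in Python as follows. This is only an illustration of the directory layout, not the wrapper itself (which is a shell script): the database queries and the DAX Generator call are left out, the function name is made up, and writing just the run ID to run_table.txt is a simplifying assumption, since the real file also records science parameters.

```python
import shutil
from pathlib import Path


def file_run(base_dir, site, run_id, dax_files, workflow="SGT"):
    """Record a run ID and move its DAX files into run_<run id>.

    Hypothetical helper mirroring the layout described above:
    <site>_<workflow>_dax/run_table.txt and <site>_<workflow>_dax/run_<run id>/.
    """
    dax_dir = Path(base_dir) / f"{site}_{workflow}_dax"
    dax_dir.mkdir(parents=True, exist_ok=True)

    # Append the run ID to the run table (assumption: one ID per line).
    with open(dax_dir / "run_table.txt", "a") as table:
        table.write(f"{run_id}\n")

    # Create the per-run directory and move the generated DAX files into it.
    run_dir = dax_dir / f"run_{run_id}"
    run_dir.mkdir(exist_ok=True)
    for dax in dax_files:
        shutil.move(str(dax), str(run_dir / Path(dax).name))
    return run_dir
```

For a site with short name USC and run ID 1234 (both chosen only for illustration), this would leave the DAX files in USC_SGT_dax/run_1234.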

Needs to be changed if:

- New velocity models are added. The wrapper encodes a mapping between a string and the velocity model ID in lines 129-152, and a new entry must be added for a new model.

- New science parameters need to be tracked. Currently the site name, ERF id, SGT variation id, rupture variation scenario id, velocity model id, frequency, and source frequency are used. If new parameters are important, they would need to be captured, included in the run_table file, and passed to the Run Manager (which would also need to be edited to include it).

- Arguments to the DAX Generator change.
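The velocity model mapping mentioned in the first item is essentially a lookup table. A minimal Python sketch of the idea is shown here, using the flags and model names from the script's usage text. The real wrapper is a shell script that maps each string to a velocity model ID in the database; those IDs are not listed on this page, so the sketch maps to descriptive names instead, and the function name is made up.

```python
# Flags and model names as given in the wrapper's usage text.
VELOCITY_MODELS = {
    "v4": "CVM-S4",
    "vh": "CVM-H",
    "vsi": "CVM-S4.26",
    "vs1": "SCEC 1D model",
    "vhng": "CVM-H, no GTL",
    "vbbp": "BBP 1D model",
}


def lookup_velocity_model(flag):
    """Return the model for a -v flag, or raise ValueError if it is unknown."""
    try:
        return VELOCITY_MODELS[flag]
    except KeyError:
        valid = ", ".join(sorted(VELOCITY_MODELS))
        raise ValueError(
            f"unknown velocity model {flag!r}; expected one of: {valid}"
        ) from None
```

With this structure, supporting a new model means adding one entry to the table, which corresponds to the edit described above.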

Source code location: http://source.usc.edu/svn/cybershake/import/trunk/runs/create_sgt_dax.sh

Author: Scott Callaghan

Dependencies: Run Manager

Executable chain:

create_sgt_dax.sh CyberShake_SGT_DAXGen

Compile instructions: None

Usage:

Usage: ./create_sgt_dax.sh [-h] <-v VELOCITY_MODEL> <-e ERF_ID> <-r RV_ID> <-g SGT_ID> <-f FREQ> <-s SITE> [-q SRC_FREQ] [SGT dax generator args]

-h display this help and exit

-v VELOCITY_MODEL select velocity model, one of v4 (CVM-S4), vh (CVM-H), vsi (CVM-S4.26), vs1 (SCEC 1D model), vhng (CVM-H, no GTL), or vbbp (BBP 1D model).

-e ERF_ID ERF ID

-r RUP_VAR_ID Rupture Variation ID

-g SGT_ID SGT ID

-f FREQ Simulation frequency (0.5 or 1.0 supported)

-q SRC_FREQ Optional: SGT source filter frequency

-s SITE Site short name

Can be followed by optional SGT arguments:

usage: CyberShake_SGT_DAXGen <output filename> <destination directory> [options] [-f <runID file, one per line> | -r <runID1> <runID2> ... ]

-d,--handoff Run handoff job, which puts SGT into pending file on shock when completed.

-f <runID_file> File containing list of Run IDs to use.

-h,--help Print help for CyberShake_SGT_DAXGen

-mc <max_cores> Maximum number of cores to use for AWP SGT code.

-mv <minvs> Override the minimum Vs value

-ns,--no-smoothing Turn off smoothing (default is to smooth)

-r <runID_list> List of Run IDs to use.

-sm,--separate-md5 Run md5 jobs separately from PostAWP jobs (default is to combine).

-sp <spacing> Override the default grid spacing, in km.

-sr,--server <server> Server to use for site parameters and to insert PSA values into

-ss <sgt_site> Site to run SGT workflows on (optional)

-sv,--split-velocity Use separate velocity generation and merge jobs (default is to use combined job)

Input files: None

Output files: DAX files (schema is defined here: https://pegasus.isi.edu/documentation/schemas/dax-3.6/dax-3.6.html). Specifically, CyberShake_SGT_<site>.dax.

create_stoch_dax.sh

Purpose: To create the DAX files for a stochastic-only post-processing workflow.

Detailed description: create_stoch_dax.sh takes the command-line arguments and, by querying the database, determines a Run ID to assign to the run. The entry corresponding to this run in the database is either updated or created. This run ID is then added to the file <site short name>_Stoch_dax/run_table.txt. It then compiles the DAX Generator (in case any changes have been made) and runs it with the correct command-line arguments, including a low-frequency run ID to be augmented, and the DAXes are created. Next, a directory, <site short name>_Stoch_dax/run_<run id>, is created, and the DAX files are moved into that directory. Finally, the run in the database is edited to reflect that creation is complete.

Needs to be changed if:

- Arguments to the DAX Generator change.

Source code location: http://source.usc.edu/svn/cybershake/import/trunk/runs/create_stoch_dax.sh

Author: Scott Callaghan

Dependencies: Run Manager

Executable chain:

create_stoch_dax.sh CyberShake_Stochastic_DAXGen

Compile instructions: None

Usage:

Usage: ./create_stoch_wf.sh [-h] <-l LF_RUN_ID> <-e ERF_ID> <-r RV_ID> <-f MERGE_FREQ> <-s SITE> [--daxargs DAX generator args]

-h display this help and exit

-l LF_RUN_ID Low-frequency run ID to combine

-e ERF_ID ERF ID

-r RUP_VAR_ID Rupture Variation ID

-f MERGE_FREQ Frequency to merge LF and stochastic results

-s SITE Site short name

Can be followed by DAX arguments:

usage: CyberShake_Stochastic_DAXGen <runID> <directory>

-d,--debug Debug flag.

-h,--help Print help for CyberShake_HF_DAXGen

-mf <merge_frequency> Frequency at which to merge the LF and HF seismograms.

-nd,--no-duration Omit duration calculations.

-nls,--no-low-site-response Omit site response calculation for low-frequency seismograms.

-nr,--no-rotd Omit RotD calculations.

-nsr,--no-site-response Omit site response calculation.

Input files: None

Output files: DAX files (schema is defined here: https://pegasus.isi.edu/documentation/schemas/dax-3.6/dax-3.6.html). Specifically, CyberShake_Stoch_<site>_top.dax and CyberShake_Stoch_<site>_DB_Products.dax.

create_pp_wf.sh

Purpose: To create the DAX files for a deterministic-only post-processing workflow.

Detailed description: create_pp_wf.sh takes the command-line arguments and, by querying the database, determines a Run ID to assign to the run. The entry corresponding to this run in the database is either updated or created. This run ID is then added to the file <site short name>_PP_dax/run_table.txt. It then compiles the DAX Generator (in case any changes have been made) and runs it with the correct command-line arguments, creating the DAXes. Next, a directory, <site short name>_PP_dax/run_<run id>, is created, and the DAX files are moved into that directory. Finally, the run in the database is edited to reflect that creation is complete.

Needs to be changed if:

- New velocity models are added. The wrapper encodes a mapping between a string and the velocity model ID in lines 110-130, and a new entry must be added for a new model.

- New science parameters need to be tracked. Currently the site name, ERF id, SGT variation id, rupture variation scenario id, velocity model id, frequency, and source frequency are used. If new parameters are important, they would need to be captured, included in the run_table file, and passed to the Run Manager (which would also need to be edited to include it).

- Arguments to the DAX Generator change.

Source code location: http://source.usc.edu/svn/cybershake/import/trunk/runs/create_pp_wf.sh

Author: Scott Callaghan

Dependencies: Run Manager

Executable chain:

create_pp_wf.sh CyberShake_PP_DAXGen

Compile instructions: None

Usage:

Usage: ./create_pp_wf.sh [-h] <-v VELOCITY MODEL> <-e ERF_ID> <-r RV_ID> <-g SGT_ID> <-f FREQ> <-s SITE> [-q SRC_FREQ] [--ppargs DAX generator args]

-h display this help and exit

-v VELOCITY_MODEL select velocity model, one of v4 (CVM-S4), vh (CVM-H), vsi (CVM-S4.26), vs1 (SCEC 1D model), vhng (CVM-H, no GTL), or vbbp (BBP 1D model).

-e ERF_ID ERF ID

-r RUP_VAR_ID Rupture Variation ID

-g SGT_ID SGT ID

-f FREQ Simulation frequency (0.5 or 1.0 supported)

-s SITE Site short name

-q SRC_FREQ Optional: SGT source filter frequency

Can be followed by optional PP arguments:

usage: CyberShake_PP_DAXGen <runID> <directory>

-d,--debug Debug flag.

-ds,--direct-synth Use DirectSynth code instead of extract_sgt and SeisPSA to perform post-processing.

-du,--duration Calculate duration metrics and insert them into the database.

-ff,--file-forward Use file-forwarding option. Requires PMC.

-ge,--global-extract-mpi Use 1 extract SGT MPI job, run as part of pre workflow.

-h,--help Print help for CyberShake_PP_DAXGen

-hf <high-frequency> Lower-bound frequency cutoff for stochastic high-frequency seismograms (default 1.0), required for high frequency run

-k,--skip-md5 Skip md5 checksum step. This option should only be used when debugging.

-lm,--large-mem Use version of SeisPSA which can handle ruptures with large numbers of points.

-mr <factor> Use SeisPSA version which supports multiple synthesis tasks per invocation; number of seis_psa jobs per invocation.

-nb,--nonblocking-md5 Move md5 checksum step out of the critical path. Entire workflow will still abort on error.

-nc,--no-mpi-cluster Do not use pegasus-mpi-cluster

-nf,--no-forward Use no forwarding.

-nh,--no-hierarchy Use directory hierarchy on compute resource for seismograms and PSA files.

-nh,--no-hf-synth Use separate executables for high-frequency srf2stoch and hfsim, rather than hfsynth

-ni,--no-insert Don't insert ruptures into database (used for testing)

-nm,--no-mergepsa Use separate executables for merging broadband seismograms and PSA, rather than mergePSA

-ns,--no-seispsa Use separate executables for both synthesis and PSA

-p <partitions> Number of partitions to create.

-ps <pp_site> Site to run PP workflows on (optional)

-q,--sql Create sqlite file containing (source, rupture, rv) to sub workflow mapping

-r,--rotd Calculate RotD50, the RotD50 angle, and RotD100 for rupture variations and insert them into the database.

-s,--separate-zip Run zip jobs as separate seismogram and PSA zip jobs.

-se,--serial-extract Use serial version of extraction code rather than extract SGT MPI.

-sf,--source-forward Aggregate files at the source level instead of the default rupture level.

-sp <spacing> Override the default grid spacing, in km.

-sr,--server <server> Server to use for site parameters and to insert PSA values into

-z,--zip Zip seismogram and PSA files before transferring.

Input files: None

Output files: DAX files (schema is defined here: https://pegasus.isi.edu/documentation/schemas/dax-3.6/dax-3.6.html). Specifically, CyberShake_<site>.dax, CyberShake_<site>_pre.dax, CyberShake_<site>_Synth.dax, CyberShake_<site>_DB_Products.dax, and CyberShake_<site>_post.dax.

create_full_wf.sh

Purpose: To create the DAX files for a deterministic-only full (SGT + post-processing) workflow.

Detailed description: create_full_wf.sh takes the command-line arguments and, by querying the database, determines a Run ID to assign to the run. The entry corresponding to this run in the database is either updated or created. This run ID is then added to the file <site short name>_Integrated_dax/run_table.txt. It then compiles the DAX Generator (in case any changes have been made) and runs it with the correct command-line arguments, creating the DAXes. Next, a directory, <site short name>_Integrated_dax/run_<run id>, is created, and the DAX files are moved into that directory. Finally, the run in the database is edited to reflect that creation is complete.

Needs to be changed if:

- New velocity models are added. The wrapper encodes a mapping between a string and the velocity model ID in lines 107-129, and a new entry must be added for a new model.

- New science parameters need to be tracked. Currently the site name, ERF id, SGT variation id, rupture variation scenario id, velocity model id, frequency, and source frequency are used. If new parameters are important, they would need to be captured, included in the run_table file, and passed to the Run Manager (which would also need to be edited to include it).

- Arguments to the DAX Generator change.

Source code location: http://source.usc.edu/svn/cybershake/import/trunk/runs/create_full_wf.sh

Author: Scott Callaghan

Dependencies: Run Manager

Executable chain:

create_full_wf.sh CyberShake_Integrated_DAXGen

Compile instructions: None

Usage:

Usage: ./create_full_wf.sh [-h] <-v VELOCITY_MODEL> <-e ERF_ID> <-r RUP_VAR_ID> <-g SGT_ID> <-f FREQ> <-s SITE> [-q SRC_FREQ] [--sgtargs <SGT dax generator args>] [--ppargs <post-processing args>]

-h display this help and exit

-v VELOCITY_MODEL select velocity model, one of v4 (CVM-S4), vh (CVM-H), vsi (CVM-S4.26), vs1 (SCEC 1D model), vhng (CVM-H, no GTL), vbbp (BBP 1D model), vcca1d (CCA 1D model), or vcca (CCA-06 3D model).

-e ERF_ID ERF ID

-r RUP_VAR_ID Rupture Variation ID

-g SGT_ID SGT ID

-f FREQ Simulation frequency (0.5 or 1.0 supported)

-s SITE Site short name

-q SRC_FREQ Optional: SGT source filter frequency

Can be followed by optional arguments:

usage: CyberShake_Integrated_DAXGen <output filename> <destination directory> [options] [-f <runID file, one per line> | -r <runID1> <runID2> ... ]

-h,--help Print help for CyberShake_Integrated_DAXGen

--ppargs <ppargs> Arguments to pass through to post-processing.

-rf <runID_file> File containing list of Run IDs to use.

-rl <runID_list> List of Run IDs to use.

--server <server> Server to use for site parameters and to insert PSA values into

--sgtargs <sgtargs> Arguments to pass through to SGT workflow.

SGT DAX Generator arguments:

usage: CyberShake_SGT_DAXGen <output filename> <destination directory> [options] [-f <runID file, one per line> | -r <runID1> <runID2> ... ]

-d,--handoff Run handoff job, which puts SGT into pending file on shock when completed.

-f <runID_file> File containing list of Run IDs to use.

-h,--help Print help for CyberShake_SGT_DAXGen

-mc <max_cores> Maximum number of cores to use for AWP SGT code.

-mv <minvs> Override the minimum Vs value

-ns,--no-smoothing Turn off smoothing (default is to smooth)

-r <runID_list> List of Run IDs to use.

-sm,--separate-md5 Run md5 jobs separately from PostAWP jobs (default is to combine).

-sp <spacing> Override the default grid spacing, in km.

-sr,--server <server> Server to use for site parameters and to insert PSA values into

-ss <sgt_site> Site to run SGT workflows on (optional)

-sv,--split-velocity Use separate velocity generation and merge jobs (default is to use combined job)

PP DAX Generator arguments:

usage: CyberShake_PP_DAXGen <runID> <directory>

-d,--debug Debug flag.

-ds,--direct-synth Use DirectSynth code instead of extract_sgt and SeisPSA to perform post-processing.

-du,--duration Calculate duration metrics and insert them into the database.

-ff,--file-forward Use file-forwarding option. Requires PMC.

-ge,--global-extract-mpi Use 1 extract SGT MPI job, run as part of pre workflow.

-h,--help Print help for CyberShake_PP_DAXGen

-hf <high-frequency> Lower-bound frequency cutoff for stochastic high-frequency seismograms (default 1.0), required for high frequency run

-k,--skip-md5 Skip md5 checksum step. This option should only be used when debugging.

-lm,--large-mem Use version of SeisPSA which can handle ruptures with large numbers of points.

-mr <factor> Use SeisPSA version which supports multiple synthesis tasks per invocation; number of seis_psa jobs per invocation.

-nb,--nonblocking-md5 Move md5 checksum step out of the critical path. Entire workflow will still abort on error.

-nc,--no-mpi-cluster Do not use pegasus-mpi-cluster

-nf,--no-forward Use no forwarding.

-nh,--no-hierarchy Use directory hierarchy on compute resource for seismograms and PSA files.

-nh,--no-hf-synth Use separate executables for high-frequency srf2stoch and hfsim, rather than hfsynth

-ni,--no-insert Don't insert ruptures into database (used for testing)

-nm,--no-mergepsa Use separate executables for merging broadband seismograms and PSA, rather than mergePSA

-ns,--no-seispsa Use separate executables for both synthesis and PSA

-p <partitions> Number of partitions to create.

-ps <pp_site> Site to run PP workflows on (optional)

-q,--sql Create sqlite file containing (source, rupture, rv) to sub workflow mapping

-r,--rotd Calculate RotD50, the RotD50 angle, and RotD100 for rupture variations and insert them into the database.

-s,--separate-zip Run zip jobs as separate seismogram and PSA zip jobs.

-se,--serial-extract Use serial version of extraction code rather than extract SGT MPI.

-sf,--source-forward Aggregate files at the source level instead of the default rupture level.

-sp <spacing> Override the default grid spacing, in km.

-sr,--server <server> Server to use for site parameters and to insert PSA values into

-z,--zip Zip seismogram and PSA files before transferring.

Input files: None

Output files: DAX files (schema is defined here: https://pegasus.isi.edu/documentation/schemas/dax-3.6/dax-3.6.html). Specifically, CyberShake_Integrated_<site>.dax, CyberShake_<site>_pre.dax, CyberShake_<site>_Synth.dax, CyberShake_<site>_DB_Products.dax, and CyberShake_<site>_post.dax.
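If you drive create_full_wf.sh from another script, assembling the command line programmatically avoids quoting mistakes. The Python sketch below builds an argument list from the options in the usage text above. The function name and all parameter values are hypothetical, and passing the --sgtargs and --ppargs contents as separate tokens (rather than as one quoted string) is an assumption that should be checked against the wrapper before use.

```python
import shlex


def build_full_wf_command(velocity_model, erf_id, rup_var_id, sgt_id, freq,
                          site, src_freq=None, sgt_args=None, pp_args=None):
    """Build the argument list for create_full_wf.sh (hypothetical helper)."""
    cmd = [
        "./create_full_wf.sh",
        "-v", velocity_model,
        "-e", str(erf_id),
        "-r", str(rup_var_id),
        "-g", str(sgt_id),
        "-f", str(freq),
        "-s", site,
    ]
    if src_freq is not None:
        cmd += ["-q", str(src_freq)]
    # Assumption: pass-through arguments follow their flag as separate tokens.
    if sgt_args:
        cmd += ["--sgtargs", *sgt_args]
    if pp_args:
        cmd += ["--ppargs", *pp_args]
    return cmd


if __name__ == "__main__":
    # All values below are placeholders, not real run parameters.
    command = build_full_wf_command(
        "v4", 1, 2, 3, 1.0, "SITE",
        sgt_args=["-ss", "titan"],
        pp_args=["-ds", "-r"],
    )
    print(shlex.join(command))
```

The resulting list can be handed to subprocess.run without going through a shell, so site names or arguments containing spaces do not need manual escaping.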

DAX Generator

The DAX Generator consists of multiple complex Java classes. It supports a large number of arguments, enabling the user to select the CyberShake science parameters (site, erf, velocity model, rupture variation scenario id, frequency, spacing, minimum vs cutoff) and the technical parameters (which post-processing code to select, which SGT code, etc.) to perform the run with. Different entry classes support creating just an SGT workflow, just a post-processing (+ data product creation) workflow, or both combined into an integrated workflow.

A detailed overview of the DAX Generator is available here: CyberShake DAX Generator.

Plan

Transformation Catalog

Site Catalog

Replica Location Service (RLS)

Run

X509 certificates

Run Manager

The Run Manager is a collection of Python scripts which wrap the database commands used to query or modify the status of a CyberShake run. The scripts are rarely called directly; instead, they are used extensively by the create/plan/run scripts and by the Run Manager website. The Run Manager also enforces a state transition table, documented here. The scripts include:

- create_run.py: Create a new run in the database given a series of science parameters.

- create_stoch_run.py: Similar, but creates a new run with stochastic parameters, which requires tracking of different things.

- edit_run.py: Modify the status or comment field for an existing run.

- find_run.py: Search the database for a preexisting run, given a series of science parameters.

- find_stoch_run.py: Finds a preexisting run with stochastic components.

- valid_run.py: Checks to see if a run is in a valid state before applying a state transition.
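The idea of checking a run's state before applying a transition can be sketched in a few lines of Python. This is not the Run Manager code: the state names and allowed transitions below are invented for illustration, and the real state transition table is the one referenced above.

```python
# Hypothetical states and transitions, for illustration only.
TRANSITIONS = {
    "created": {"planned", "error"},
    "planned": {"running", "error"},
    "running": {"done", "error"},
    "error": {"created"},
    "done": set(),
}


def is_valid_transition(current, new, table=TRANSITIONS):
    """Return True if moving from `current` to `new` is allowed by the table."""
    return new in table.get(current, set())


def apply_transition(run, new_status, table=TRANSITIONS):
    """Return a copy of `run` with the new status, or raise if not allowed."""
    if not is_valid_transition(run["status"], new_status, table):
        raise ValueError(
            f"run {run['id']}: cannot go from {run['status']!r} to {new_status!r}"
        )
    return {**run, "status": new_status}
```

Keeping the allowed transitions in a single table means a wrapper script only has to call one check before editing a run, and an unexpected state (for example, planning a run that already finished) is rejected in one place.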

The RunManager helper scripts are at http://source.usc.edu/svn/cybershake/import/trunk/runs/runmanager , with the shared core at http://source.usc.edu/svn/cybershake/import/trunk/RunManager/ .

The RunManager scripts would need to be edited if:

- The database parameters change (Config.py, line 6)

- A new compute site is added (Config.py, add to HOST_LIST in line 15)

- A new user is added (Config.py, add to USER_LIST in line 20)

- New science parameters are added to the database, which are used to differentiate between runs. Changes would need to be made to create_run.py, find_run.py, and RunManager.py.

On shock, these scripts are installed in /home/scec-02/cybershk/runs/runmanager .