Fling Study

Revision as of 22:56, 10 May 2012

Overview

The Fling study is a set of Broadband Platform simulations defined by PG&E and PEER researchers and run by the SCEC Broadband Platform group. The full specification of the Fling study will be posted when available.

Certification of USC HPCC Cluster for Broadband Calculations

The verification and validation of the currently released Broadband Platform is based on results generated on a SCEC server called broadband.usc.edu. When we move the Broadband Platform software, rebuild it, and rerun it in a different computing environment, the results can differ slightly from those produced on the SCEC server. Differences can come from the computing hardware, operating system characteristics, compiler version, and other sources.

Before accepting results generated in a new computing environment, we must first certify that it produces results equivalent to those from the server where the platform was originally developed and tested.

To speed up execution of the Fling study, we plan to run it on the USC HPCC cluster, so we must certify that USC HPCC cluster results are valid and comparable to those generated on broadband.usc.edu.

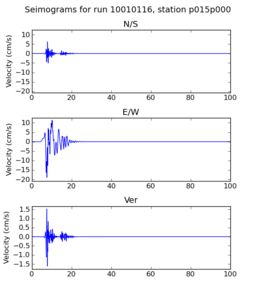

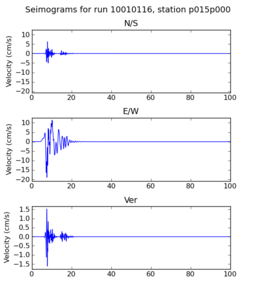

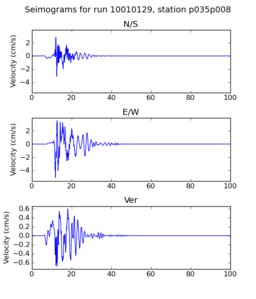

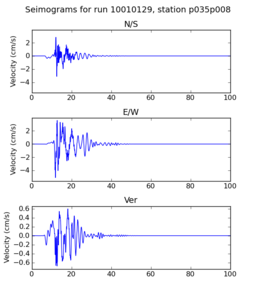

Here are results from our initial certification tests. A researcher ran a small subset of the Fling study on the SCEC broadband server. Then, we ran the same subset on the USC HPCC cluster. Below we compare the output seismograms from both runs, showing that the two results are very similar.

In our discussions, we decided that the certification criteria for this study will include a number of small-magnitude ruptures and a number of large-magnitude ruptures, which we will post when they are available.
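As an illustration of what an equivalence check between a server run and a cluster run could look like, the sketch below compares two seismogram traces sample by sample. It is not the certification criterion for this study, which has not been posted yet. The function names, the plain-list input, and the 1% default tolerance are all assumptions made for the example.

```python
def max_relative_difference(reference, candidate):
    """Largest sample-by-sample difference between two traces,
    normalized by the peak absolute amplitude of the reference trace."""
    if len(reference) != len(candidate):
        raise ValueError("traces must have the same number of samples")
    peak = max(abs(value) for value in reference)
    if peak == 0.0:
        raise ValueError("reference trace is all zeros")
    largest = max(abs(r - c) for r, c in zip(reference, candidate))
    return largest / peak


def traces_equivalent(reference, candidate, tolerance=0.01):
    """True if the traces agree to within `tolerance` of the reference peak.

    The 1% default is a hypothetical value, not a study requirement.
    """
    return max_relative_difference(reference, candidate) <= tolerance


# Made-up samples standing in for one component of a seismogram.
server_trace = [0.0, 1.0, -2.0, 0.5]
cluster_trace = [0.0, 1.01, -1.99, 0.5]
print(traces_equivalent(server_trace, cluster_trace))
```

Normalizing by the peak amplitude keeps the tolerance independent of the units of the trace. A real check would also need to read the platform's seismogram files and cover every component and station.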

Comparison of Seismograms from Server and Cluster

| Simulation | broadband.usc.edu | USC HPCC cluster |
|---|---|---|
| 10010116 | | |
| 10010129 | | |

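| scenario_id | magnitude | dip | rake | Ztor | fault_length | fault_width | Priority |
|---|---|---|---|---|---|---|---|
| 101 | 6 | 90 | 180 | 0 | 14 | 8 | 1 |
| 102 | 6.5 | 90 | 180 | 0 | 24 | 13 | |
| 103 | 7 | 90 | 180 | 0 | 68 | 15 | |
| 104 | 7.5 | 90 | 180 | 0 | 210 | 15 | |
| 105 | 7.8 | 90 | 180 | 0 | 420 | 15 | |
| 106 | 8.2 | 90 | 180 | 0 | 470 | 15 | |
| 107 | 6 | 70 | 180 | 0 | 14 | 8 | 2 |
| 108 | 6.5 | 70 | 180 | 0 | 24 | 13 | |
| 109 | 7 | 70 | 180 | 0 | 68 | 15 | |
| 110 | 7.5 | 70 | 180 | 0 | 210 | 15 | |
| 111 | 7.8 | 70 | 180 | 0 | 420 | 15 | |
| 112 | 8.2 | 70 | 180 | 0 | 470 | 15 | |
| 113 | 6 | 70 | 180 | 0 | 14 | 8 | 3 |
| 114 | 6.5 | 70 | 180 | 0 | 24 | 13 | |
| 115 | 7 | 70 | 180 | 0 | 40 | 25 | |
| 116 | 7.5 | 70 | 180 | 0 | 100 | 32 | |
| 117 | 7.8 | 70 | 180 | 0 | 160 | 40 | |
| 118 | 8.2 | 70 | 180 | 0 | 400 | 40 | |
| 119 | 6 | 90 | 180 | 0 | 14 | 8 | 4 |
| 120 | 6.5 | 90 | 180 | 0 | 24 | 13 | |
| 121 | 7 | 90 | 180 | 0 | 40 | 25 | |
| 122 | 7.5 | 90 | 180 | 0 | 100 | 32 | |
| 123 | 7.8 | 90 | 180 | 0 | 160 | 40 | |
| 124 | 8.2 | 90 | 180 | 0 | 400 | 40 | |
| 125 | 6 | 45 | 90 | 0 | 10 | 10 | 5 |
| 126 | 6 | 45 | 90 | 5 | 10 | 10 | |
| 127 | 6.5 | 45 | 90 | 0 | 18 | 18 | |
| 128 | 6.5 | 45 | 90 | 5 | 18 | 18 | |
| 129 | 7 | 45 | 90 | 0 | 44 | 23 | |
| 130 | 7.5 | 45 | 90 | 0 | 126 | 25 | |
| 131 | 7.8 | 45 | 90 | 0 | 180 | 25 | |
| 132 | 6 | 60 | 90 | 0 | 10 | 10 | |
| 133 | 6 | 60 | 90 | 5 | 10 | 10 | |
| 134 | 6.5 | 60 | 90 | 0 | 18 | 18 | |
| 135 | 6.5 | 60 | 90 | 5 | 18 | 18 | |
| 136 | 7 | 60 | 90 | 0 | 50 | 20 | |
| 137 | 7.5 | 60 | 90 | 0 | 150 | 20 | |
| 138 | 7.8 | 60 | 90 | 0 | 200 | 20 | |
| 139 | 7.0 | 45 | 90 | 0 | 18 | 18 | |
| 140 | 6.5 | 45 | 90 | 0 | 18 | 18 | |
| 141 | 6.5 | 45 | 90 | 5 | 18 | 18 | |
| 142 | 7.0 | 45 | 90 | 0 | 44 | 23 | |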

Notes

Ronnie and I have put together a subset of fling simulations to submit to the BB Platform. Attached, please find a list containing all of the scenarios that we will eventually be providing. However, Scenarios 140, 141 and 142 were identified as high priority simulations at our NGA-West2 meeting last Friday. If possible, we would like to get them running right away.

Scenarios 140 – 142 can be found in my directory:

/home/scec-01/Wooddell/broadband/fling

I have created a separate folder for each of the Scenarios.

I would like to submit the remainder of the fling simulations all at once, but I have not yet had the opportunity to finalize them all. I will send you a message when they are completed, probably sometime tomorrow or Thursday.

Running on USC HPCC

A sample set of simulations from the Fling study was run on USC HPCC. The original fling generation scripts, source descriptions, station lists, and batch scripts were copied from broadband.usc.edu to /home/rcf-104. Small modifications were then made to update paths and to block direct execution of the platform, since the platform is run in a PBS job instead.

Sample scripts can be found at the following locations:

| Script | Location | Description | Modified |
|---|---|---|---|
| build_xml.py | /auto/rcf-104/patrices/bbp/batch_tools | Builds XML workflows for a simulation | No |
| batch_run_bbp.py | /auto/rcf-104/patrices/bbp/batch_tools | Executes BBP workflow | Modified to only write BBP command-lines for simulations to a log for later execution by run_parallel.py |
| run_parallel.py | /auto/rcf-104/patrices/bbp/batch_tools | Helper script to run N programs on a set of M cores | New script |
| gen_source_input.csh | /auto/rcf-104/patrices/bbp/fling | Generate full study inputs | No |
| run_bbp-parallel.csh | /auto/rcf-104/patrices/bbp/fling | Execute the study with the platform | Some paths changed, also added ${ROOT_PATH} to some relative path locations to make them absolute paths |

Example PBS script running the sample Fling simulations on 16 cores:

    #!/bin/bash
    #PBS -q nbns
    #PBS -l arch=x86_64,pmem=2000mb,pvmem=3000mb,walltime=6:00:00,nodes=4:ppn=4
    #PBS -V
    #PBS -e /home/rcf-104/patrices/bbp/fling/Xml1/Set1/run_set1.err
    #PBS -o /home/rcf-104/patrices/bbp/fling/Xml1/Set1/run_set1.out

    PYTHONPATH=/home/rcf-104/patrices/bbp/11.2.2/bbp_2g/comps
    HOME=/home/rcf-104/patrices/bbp/fling

    echo "Jobs start"
    date

    cd $HOME

    python $HOME/Xml1/Set1/run_parallel.py /home/rcf-104/patrices/bbp/11.2.2/setup_bbp_env.sh $HOME /Xml1/Set1/batch_run_bbp_sims.log $PBS_NODEFILE 1
    python $HOME/Xml1/Set1/run_parallel.py /home/rcf-104/patrices/bbp/11.2.2/setup_bbp_env.sh $HOME /Xml1/Set1/batch_run_bbp_moves.log $PBS_NODEFILE 1

    echo "Jobs end"
    date

- gen_source_input.csh

- run_bbp-parallel.csh
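The run_parallel.py helper is described above only as a script that runs N programs on a set of M cores, and its source is not reproduced on this page. The following is a minimal sketch of that general idea, not the actual script: it runs a list of shell commands while keeping at most a fixed number active at once. The function names are hypothetical, and it assumes the commands are stored one per line and run on the local machine, whereas the real helper takes a node file and may dispatch work to other nodes.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor


def read_commands(path):
    """Return the non-empty, non-comment lines of a command log file."""
    with open(path) as handle:
        lines = [line.strip() for line in handle]
    return [line for line in lines if line and not line.startswith("#")]


def run_command(command):
    """Run one shell command and return (command, exit status)."""
    completed = subprocess.run(command, shell=True)
    return command, completed.returncode


def run_parallel(commands, max_workers):
    """Run every command, with at most `max_workers` running at a time.

    Results come back in the same order as `commands`.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(run_command, commands))


# Three trivial commands on two workers, standing in for BBP command lines.
results = run_parallel(["exit 0", "exit 1", "exit 3"], max_workers=2)
for command, status in results:
    print(status, command)
```

Threads are sufficient here because each worker only waits on a child process. For a request such as nodes=4:ppn=4, the number of lines in $PBS_NODEFILE would be a natural choice for the worker count, although how the real helper uses that file is not documented here.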