Difference between revisions of "CyberShake Study 17.3"

| (207 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

| − | CyberShake Study | + | CyberShake Study 17.3 is a computational study to calculate 2 CyberShake hazard models - one with a 1D velocity model, one with a 3D model - at 1 Hz in a new region, [[CyberShake Central California]]. We will use the GPU implementation of AWP-ODC-SGT, the Graves & Pitarka (2014) rupture variations with 200 m spacing and uniform hypocenters, and the UCERF2 ERF. Both the SGT and post-processing calculations will be run on NCSA Blue Waters and OLCF Titan. |

== Status == | == Status == | ||

| − | + | Study 17.3 began on March 6, 2017 at 12:14:10 PST. | |

| + | |||

| + | Study 17.3 completed on April 6, 2017 at 7:37:16 PDT. | ||

| + | |||

| + | == Data Products == | ||

| + | |||

| + | Hazard maps from Study 17.3 are available here: [[Study 17.3 Data Products]] | ||

| + | |||

| + | Hazard curves produced from Study 17.3 using the CCA 1D model have dataset ID=80; those using the CCA-06 3D model have dataset ID=81. | ||

| + | |||

| + | Individual runs can be identified in the CyberShake database by searching for runs with Study_ID=8. | ||

| + | |||

| + | == Study 17.3b == | ||

| + | |||

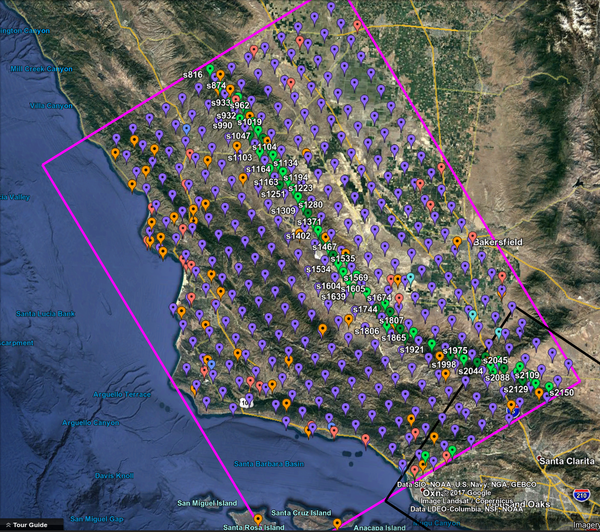

| + | After the conclusion of Study 17.3, we had additional resources on Blue Waters, so we selected 58 additional sites to perform 1D and 3D calculations on. A KML file with these sites is available [[:File:study_17_3b_sites.kml | with names]] or [[:File:study_17_3b_sites_no_names.kml | without names]]. The site locations are shown in green on the image below, along with the 438 Study 17.3 sites. | ||

| + | |||

| + | [[File:Study_17_3b_sites.png|thumb|600px|Additional 58 sites in Study 17.3b, shown with the original Study 17.3 sites]] | ||

== Science Goals == | == Science Goals == | ||

| − | The science goals for Study | + | The science goals for Study 17.3 are: |

*Expand CyberShake to include Central California sites. | *Expand CyberShake to include Central California sites. | ||

*Create CyberShake models using both a Central California 1D velocity model and a 3D model (CCA-06). | *Create CyberShake models using both a Central California 1D velocity model and a 3D model (CCA-06). | ||

| − | *Calculate hazard curves for | + | *Calculate hazard curves for population centers, seismic network sites, and electrical and water infrastructure sites. |

== Technical Goals == | == Technical Goals == | ||

| − | The technical goals for Study | + | The technical goals for Study 17.3 are: |

*Run end-to-end CyberShake workflows on Titan, including post-processing. | *Run end-to-end CyberShake workflows on Titan, including post-processing. | ||

| Line 22: | Line 38: | ||

== Sites == | == Sites == | ||

| − | We will run a total of | + | We will run a total of 438 sites as part of Study 17.3. A KML file of these sites, along with the Central and Southern California boxes, is available [[:File:study_17_3_sites.kml | here (with names)]] or [[:File:study_17_3_sites_no_names.kml | here (without names)]]. |

We created a Central California CyberShake box, defined [[CyberShake Central California | here]]. | We created a Central California CyberShake box, defined [[CyberShake Central California | here]]. | ||

| − | We have identified a list of | + | We have identified a list of 408 sites which fall within the box and outside of the CyberShake Southern California box. These include: |

* 310 sites on a 10 km grid | * 310 sites on a 10 km grid | ||

| − | * | + | * 54 CISN broadband or PG&E stations, decimated so they are at least 5 km apart, and no closer than 2 km from another station. |

| − | * | + | * 30 cities used by the USGS in locating earthquakes |

* 4 PG&E pumping stations | * 4 PG&E pumping stations | ||

* 6 historic Spanish missions | * 6 historic Spanish missions | ||

| − | * | + | * 4 OBS stations |

| − | In addition, we will include | + | In addition, we will include 30 sites which overlap with the Southern California box (24 on the 10 km grid, 5 on the 5 km grid, and 1 SCSN station), enabling direct comparison of results. |

We will prioritize the pumping stations and the overlapping sites. | We will prioritize the pumping stations and the overlapping sites. | ||

| Line 41: | Line 57: | ||

== Velocity Models == | == Velocity Models == | ||

| − | We are planning to use 2 velocity models in Study | + | We are planning to use 2 velocity models in Study 17.3. We will enforce a Vs minimum of 900 m/s, a minimum Vp of 1600 m/s, and a minimum rho of 1600 kg/m^3. |

| − | # [[CCA]]-06, a 3D model created via tomographic inversion by En-Jui Lee. This model has | + | # [[CCA]]-06, a 3D model created via tomographic inversion by En-Jui Lee. This model has no GTL. Our order of preference will be: |

## CCA-06 | ## CCA-06 | ||

## CVM-S4.26 | ## CVM-S4.26 | ||

## SCEC background 1D model | ## SCEC background 1D model | ||

| − | # CCA-1D, a 1D model created by averaging CCA-06 throughout the Central California region. | + | # [[CyberShake_Central_California_1D_Model | CCA-1D]], a 1D model created by averaging CCA-06 throughout the Central California region. |

We will run the 1D and 3D model concurrently. | We will run the 1D and 3D model concurrently. | ||
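The minimum values listed above can be illustrated with a minimal sketch. It assumes each floor is applied independently to every grid point; the production mesh code may couple the properties differently, and `apply_floors` is a hypothetical helper name.

```python
# Minimum values quoted in the text.
VS_MIN = 900.0    # m/s
VP_MIN = 1600.0   # m/s
RHO_MIN = 1600.0  # kg/m^3


def apply_floors(vp, vs, rho):
    """Return (vp, vs, rho) with each value raised to its minimum if needed.

    Assumption: each floor is applied independently; the production code
    may couple them (for example by preserving a Vp/Vs ratio).
    """
    return max(vp, VP_MIN), max(vs, VS_MIN), max(rho, RHO_MIN)


# Hypothetical near-surface grid point with soft material: all three are raised.
print(apply_floors(vp=1400.0, vs=500.0, rho=1500.0))
# A stiffer hypothetical point passes through unchanged.
print(apply_floors(vp=5200.0, vs=3000.0, rho=2600.0))
```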

| Line 53: | Line 69: | ||

== Verification == | == Verification == | ||

| − | As part of our verification work, we plan to | + | Since we are moving to a new region, we calculated GMPE maps for this region, available here: [[Central California GMPE Maps]] |

| + | |||

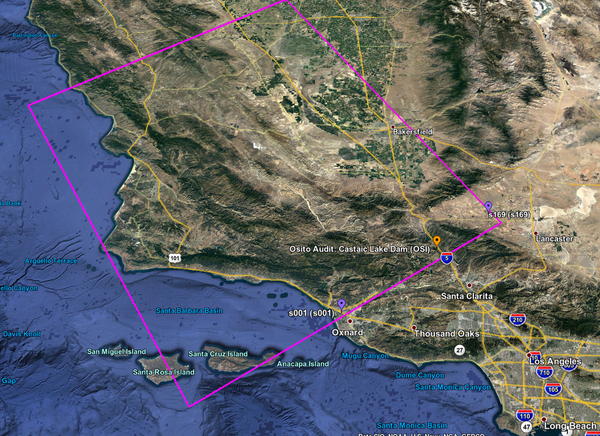

| + | As part of our verification work, we plan to do runs using both the 1D and 3D model for the following 3 sites in the overlapping region: | ||

*s001 | *s001 | ||

| Line 59: | Line 77: | ||

*s169 | *s169 | ||

| − | + | [[File:3 sites locations.png|600px]] | |

| − | + | === Blue Waters/Titan Verification === | |

| − | + | ||

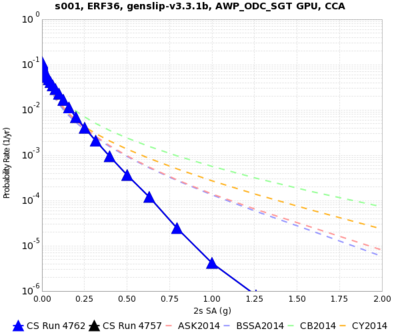

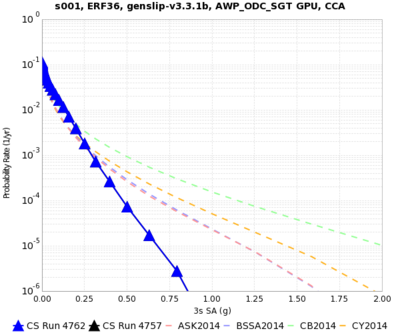

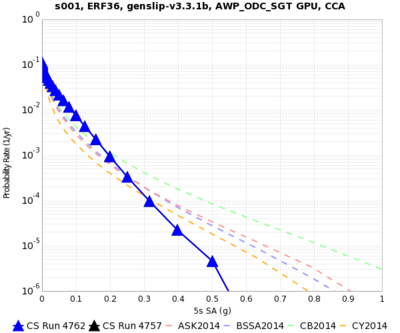

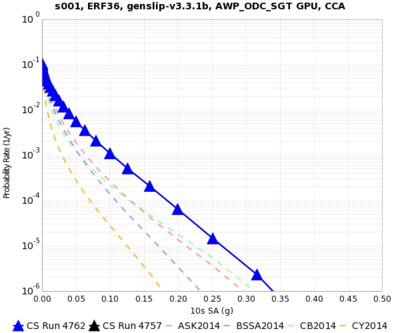

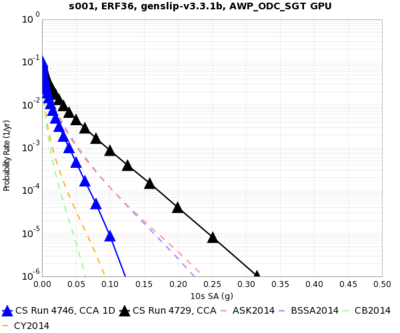

| − | + | We ran s001 on both Blue Waters and Titan. Hazard curves are below, and very closely match: | |

| + | |||

| + | {| | ||

| + | | [[File:s001_4762_4757_2sec.png|thumb|400px|2 sec SA]] | ||

| + | | [[File:s001_4762_4757_3sec.png|thumb|400px|3 sec SA]] | ||

| + | | [[File:s001_4762_4757_5sec.png|thumb|400px|5 sec SA]] | ||

| + | | [[File:s001_4762_4757_10sec.png|thumb|400px|10 sec SA]] | ||

| + | |} | ||

| + | |||

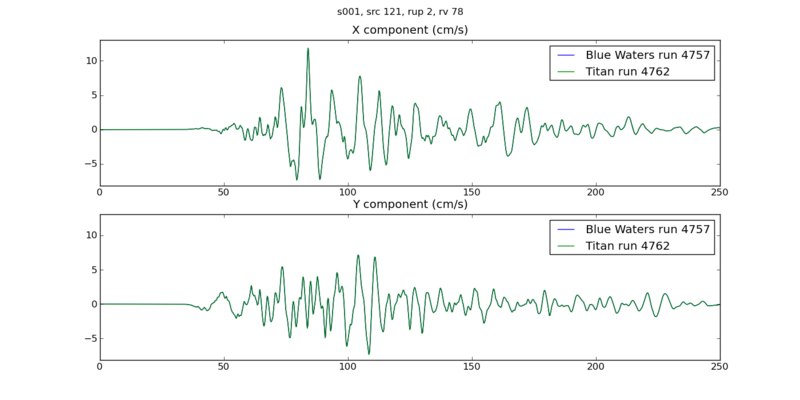

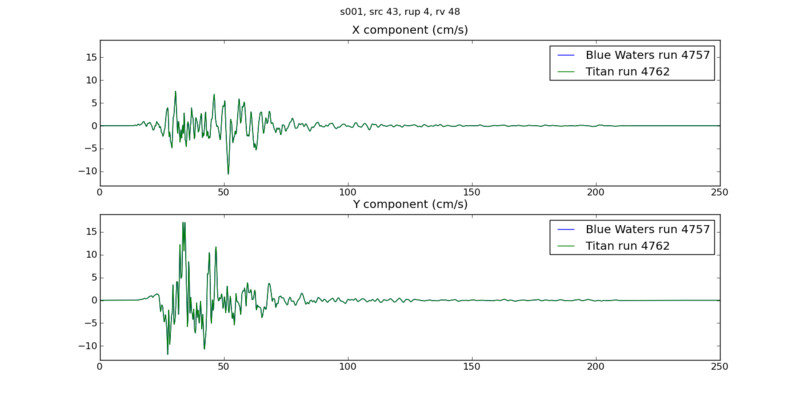

| + | Below are two seismograms, which also closely match: | ||

| + | |||

| + | {| | ||

| + | | [[File:s001_4762_4757_s121_r2_rv78.png|thumb|800px|src 121, rup 2, rv 78]] | ||

| + | |- | ||

| + | | [[File:s001_4762_4757_s43_r4_rv48.png|thumb|800px|src 43, rup 4, rv 48]] | ||

| + | |} | ||

| + | |||

| + | === NT check === | ||

| + | |||

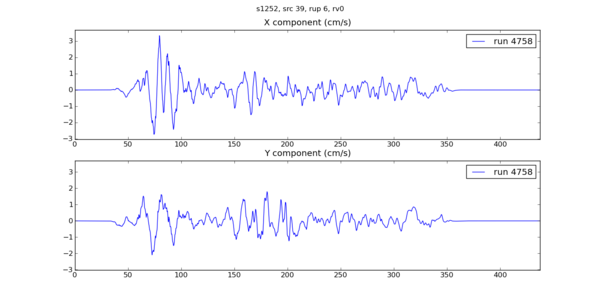

| + | To verify that NT for the study (5000 timesteps = 437.5 sec) is long enough, I extracted a northern SAF seismogram for s1252, one of the southernmost sites to include northern SAF events. The seismogram is below; it tapers off around 350 seconds. | ||

| + | |||

| + | {| | ||

| + | | [[File:s1252_4758_s39_r6_rv0.png|thumb|600px|src 39, rup 6, rv0]] | ||

| + | |} | ||
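The NT check above is simple arithmetic, sketched here for reference. The time step is the seismogram-synthesis dt quoted under Computational Time, and the 350 sec taper time is the approximate value read off the plot.

```python
# Seismogram duration implied by the study's NT and the synthesis time step.
NT = 5000          # timesteps, from the text
DT_SEIS = 0.0875   # sec, seismogram-synthesis dt

duration_sec = NT * DT_SEIS

# Approximate time at which the s1252 northern SAF seismogram tapers off.
taper_sec = 350.0
margin_sec = duration_sec - taper_sec

print(f"duration = {duration_sec:.1f} sec, margin after taper = {margin_sec:.1f} sec")
```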

| + | |||

| + | === Study 15.4 Verification === | ||

| + | |||

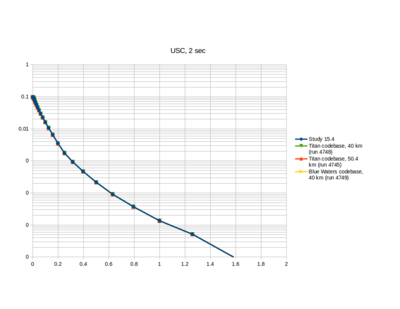

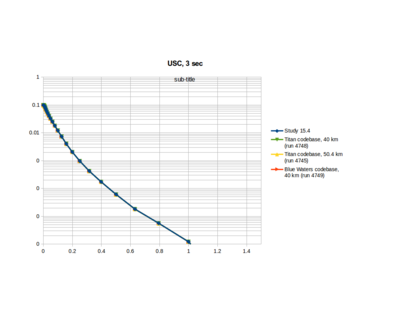

| + | We ran the USC site through the Study 16.9 code base on both Blue Waters and Titan with the Study 15.4 parameters. Hazard curves are below, and very closely match: | ||

| + | |||

| + | {| | ||

| + | | [[File:USC_3790_4745_4748_4749_2sec.png|thumb|400px|2 sec SA]] | ||

| + | | [[File:USC_3790_4745_4748_4749_3sec.png|thumb|400px|3 sec SA]] | ||

| + | |} | ||

| + | |||

| + | Plots of the seismograms show excellent agreement: | ||

| + | |||

| + | [[File:USC_3790_4748_4749_s121_r2_rv78.png]] | ||

| + | |||

| + | [[File:USC_3790_4748_4749_s43_r4_rv48.png]] | ||

| + | |||

| + | We accidentally ran with a depth of 50.4 km first. Here are seismogram plots illustrating the difference between running SGTs with depth of 40 km vs 50.4 km. | ||

| + | |||

| + | [[File:USC_4745_4748_s121_r2_rv78.png]] | ||

| + | |||

| + | [[File:USC_4745_4748_s43_r4_rv48.png]] | ||

| + | |||

| + | === Velocity Model Verification === | ||

| + | |||

| + | Cross-section plots of the velocity models are available [[Study 16.9 Velocity Plots | here]]. | ||

| + | |||

| + | |||

| + | === 200 km cutoff effects === | ||

| + | |||

| + | We are investigating the impact of the 200 km cutoff as it pertains to including/excluding northern SAF events. This is documented here: [[CCA N SAF Tests]]. | ||

| + | |||

| + | |||

| + | === Impulse difference === | ||

| + | |||

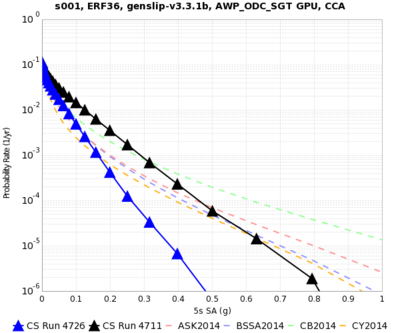

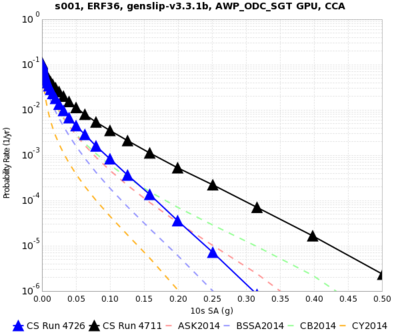

| + | Here's a curve comparison showing the impact of fixing the impulse for s001 at 3, 5, and 10 sec. | ||

| + | |||

| + | {| | ||

| + | | [[File:s001_4726_v_4711_3secSA.png|thumb|400px|3 sec SA, black is wrong impulse, blue is correct]] | ||

| + | | [[File:s001_4726_v_4711_5secSA.png|thumb|400px|5 sec SA, black is wrong impulse, blue is correct]] | ||

| + | | [[File:s001_4726_v_4711_10secSA.png|thumb|400px|10 sec SA, black is wrong impulse, blue is correct]] | ||

| + | |} | ||

| + | |||

| + | |||

| + | === Curve Results === | ||

| + | |||

| + | {| border="1" | ||

| + | ! site | ||

| + | ! velocity model | ||

| + | ! 3 sec SA | ||

| + | ! 5 sec SA | ||

| + | ! 10 sec SA | ||

| + | |- | ||

| + | ! rowspan="2" | s001 | ||

| + | | 1D | ||

| + | | [[File:s001_4746_v_4729_3secSA.png|thumb|400px|blue=CCA 1D, black=CCA 3D (+USGS Bay Area, CVM-S4.26)]] | ||

| + | | [[File:s001_4746_v_4729_5secSA.png|thumb|400px|blue=CCA 1D, black=CCA 3D (+USGS Bay Area, CVM-S4.26)]] | ||

| + | | [[File:s001_4746_v_4729_10secSA.png|thumb|400px|blue=CCA 1D, black=CCA 3D (+USGS Bay Area, CVM-S4.26)]] | ||

| + | |- | ||

| + | | 3D | ||

| + | | [[File:s001_4729_3secSA.png|thumb|400px|black=CCA 3D (+USGS Bay Area, CVM-S4.26)]] | ||

| + | | [[File:s001_4729_5secSA.png|thumb|400px|black=CCA 3D (+USGS Bay Area, CVM-S4.26)]] | ||

| + | | [[File:s001_4729_10secSA.png|thumb|400px|black=CCA 3D (+USGS Bay Area, CVM-S4.26)]] | ||

| + | |- | ||

| + | ! rowspan="2" | OSI | ||

| + | | 1D | ||

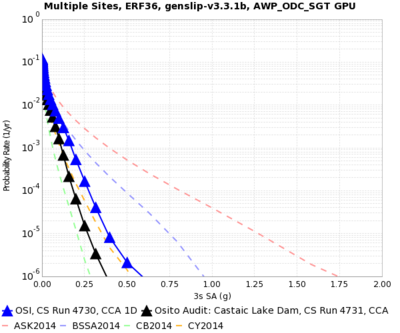

| + | | [[File:OSI_4730_v_4731_3secSA.png|thumb|400px|blue=CCA 1D, black=CCA 3D (+USGS Bay Area, CVM-S4.26)]] | ||

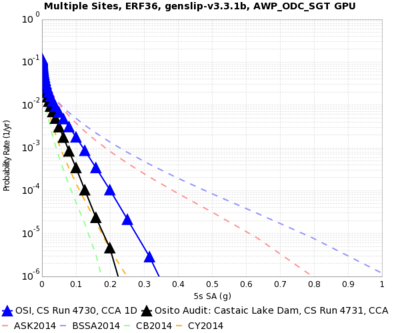

| + | | [[File:OSI_4730_v_4731_5secSA.png|thumb|400px|blue=CCA 1D, black=CCA 3D (+USGS Bay Area, CVM-S4.26)]] | ||

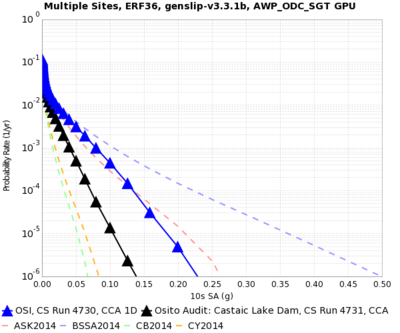

| + | | [[File:OSI_4730_v_4731_10secSA.png|thumb|400px|blue=CCA 1D, black=CCA 3D (+USGS Bay Area, CVM-S4.26)]] | ||

| + | |- | ||

| + | | 3D | ||

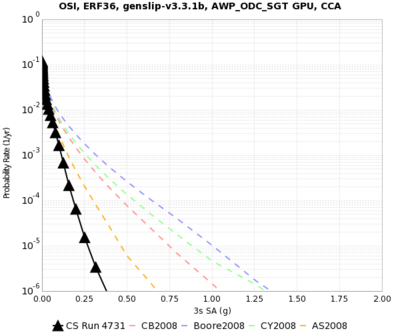

| + | | [[File:OSI_4731_3secSA.png|thumb|400px|black=CCA 3D (+USGS Bay Area, CVM-S4.26)]] | ||

| + | | [[File:OSI_4731_5secSA.png|thumb|400px|black=CCA 3D (+USGS Bay Area, CVM-S4.26)]] | ||

| + | | [[File:OSI_4731_10secSA.png|thumb|400px|black=CCA 3D (+USGS Bay Area, CVM-S4.26)]] | ||

| + | |- | ||

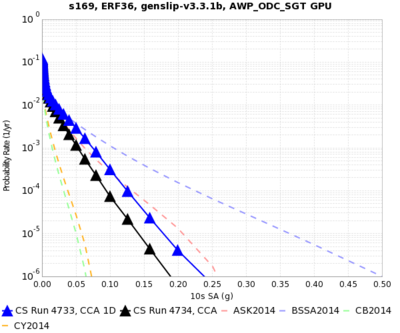

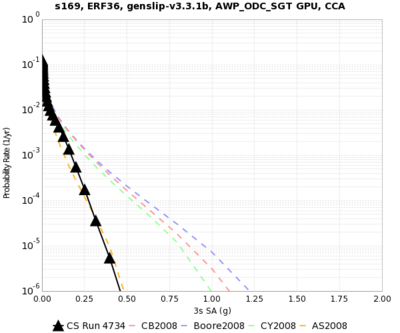

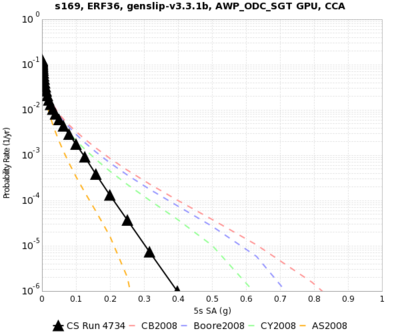

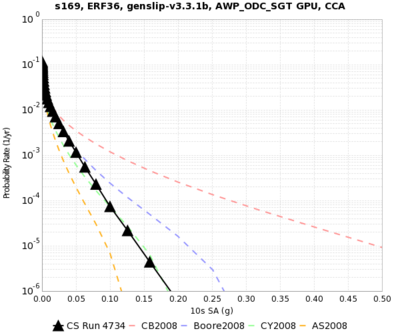

| + | ! rowspan="2" | s169 | ||

| + | | 1D | ||

| + | | [[File:s169_4733_v_4734_3secSA.png|thumb|400px|blue=CCA 1D, black=CCA 3D (+USGS Bay Area, CVM-S4.26)]] | ||

| + | | [[File:s169_4733_v_4734_5secSA.png|thumb|400px|blue=CCA 1D, black=CCA 3D (+USGS Bay Area, CVM-S4.26)]] | ||

| + | | [[File:s169_4733_v_4734_10secSA.png|thumb|400px|blue=CCA 1D, black=CCA 3D (+USGS Bay Area, CVM-S4.26)]] | ||

| + | |- | ||

| + | | 3D | ||

| + | | [[File:s169_4734_3secSA.png|thumb|400px|black=CCA 3D (+USGS Bay Area, CVM-S4.26)]] | ||

| + | | [[File:s169_4734_5secSA.png|thumb|400px|black=CCA 3D (+USGS Bay Area, CVM-S4.26)]] | ||

| + | | [[File:s169_4734_10secSA.png|thumb|400px|black=CCA 3D (+USGS Bay Area, CVM-S4.26)]] | ||

| + | |} | ||

| + | |||

| + | These results were calculated with the incorrect impulse. | ||

| + | |||

| + | {| border="1" | ||

| + | ! site | ||

| + | ! velocity model | ||

| + | ! 3 sec SA | ||

| + | ! 5 sec SA | ||

| + | ! 10 sec SA | ||

| + | |- | ||

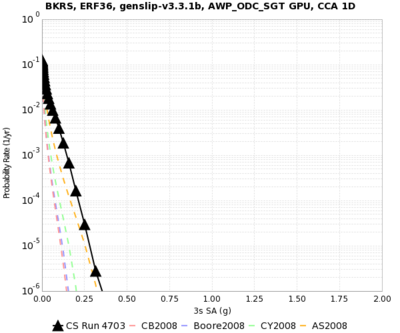

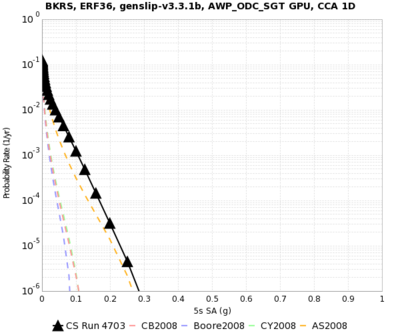

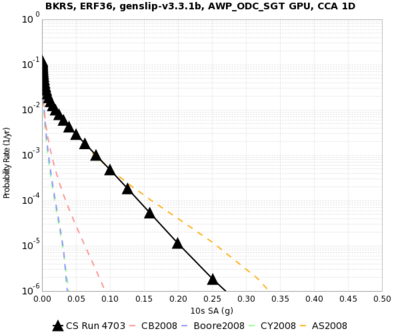

| + | ! rowspan="2" | BKRS | ||

| + | | 1D | ||

| + | | [[File:BKRS_4703_3secSA.png|400px]] | ||

| + | | [[File:BKRS_4703_5secSA.png|400px]] | ||

| + | | [[File:BKRS_4703_10secSA.png|400px]] | ||

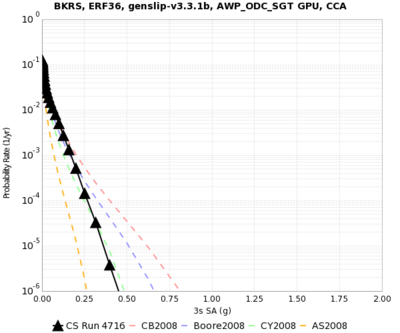

| + | |- | ||

| + | | 3D | ||

| + | | [[File:BKRS_4716_3secSA.png|400px]] | ||

| + | | [[File:BKRS_4716_5secSA.png|400px]] | ||

| + | | [[File:BKRS_4716_10secSA.png|400px]] | ||

| + | |- | ||

| + | ! rowspan="2" | SBR | ||

| + | | 1D | ||

| + | | | ||

| + | | | ||

| + | | | ||

| + | |- | ||

| + | | 3D | ||

| + | | | ||

| + | | | ||

| + | | | ||

| + | |- | ||

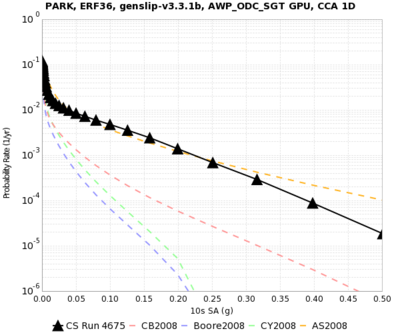

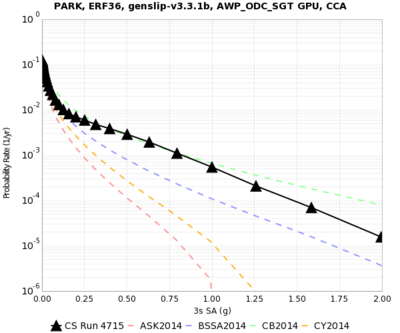

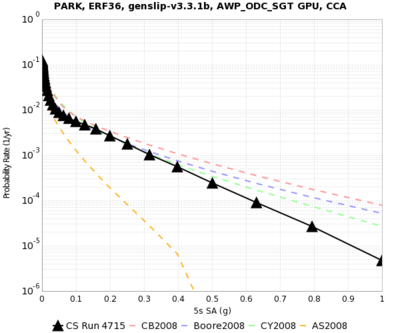

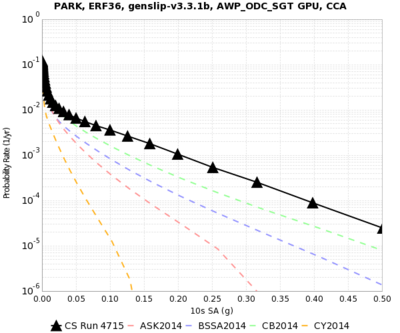

| + | ! rowspan="2" | PARK | ||

| + | | 1D | ||

| + | | [[File:PARK_4675_3secSA.png|400px]] | ||

| + | | [[File:PARK_4675_5secSA.png|400px]] | ||

| + | | [[File:PARK_4675_10secSA.png|400px]] | ||

| + | |- | ||

| + | | 3D | ||

| + | | [[File:PARK_4715_3secSA.png|400px]] | ||

| + | | [[File:PARK_4715_5secSA.png|400px]] | ||

| + | | [[File:PARK_4715_10secSA.png|400px]] | ||

| + | |} | ||

| + | |||

| + | === Velocity Profiles === | ||

| + | |||

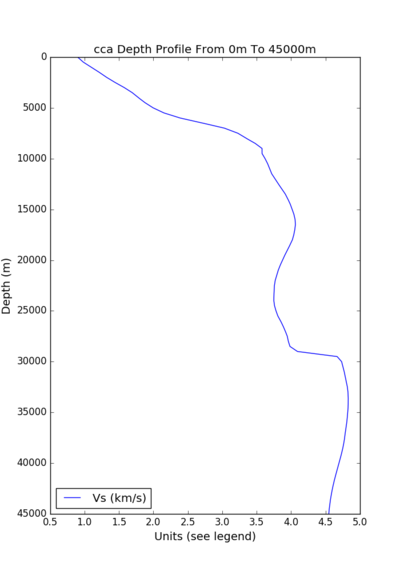

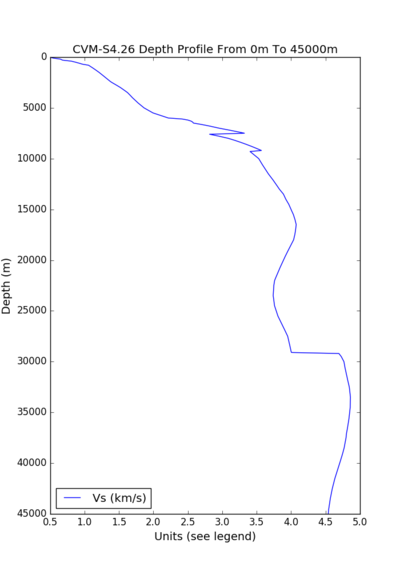

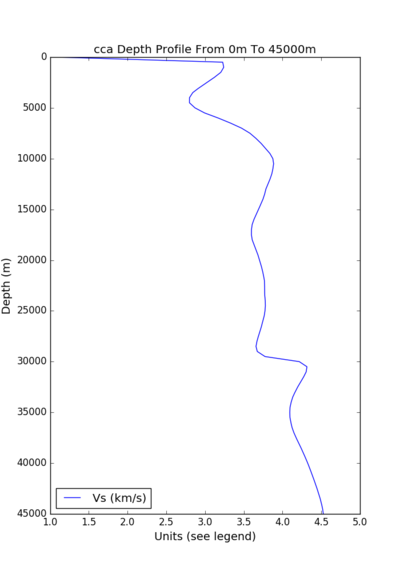

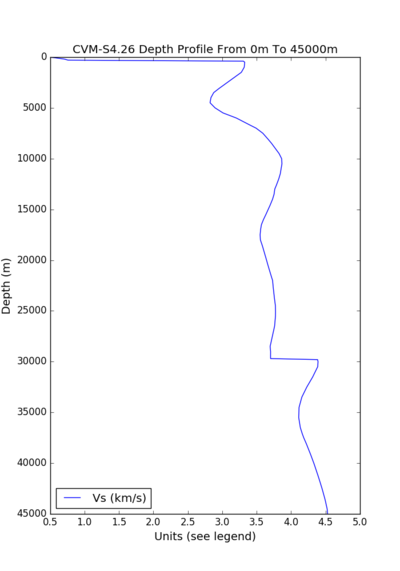

| + | {| border="1" | ||

| + | ! site | ||

| + | ! CCA profile (min Vs=900 m/s) | ||

| + | ! CVM-S4.26 profile (min Vs=500 m/s) | ||

| + | |- | ||

| + | ! s001 | ||

| + | | [[File:s001_cca.png|400px]] | ||

| + | | [[File:s001_cvmsi.png|400px]] | ||

| + | |- | ||

| + | ! OSI | ||

| + | | [[File:OSI_cca.png|400px]] | ||

| + | | [[File:OSI_cvmsi.png|400px]] | ||

| + | |- | ||

| + | ! s169 | ||

| + | | [[File:s169_cca.png|400px]] | ||

| + | | [[File:s169_cvmsi.png|400px]] | ||

| + | |} | ||

| + | |||

| + | === Seismogram plots === | ||

| + | |||

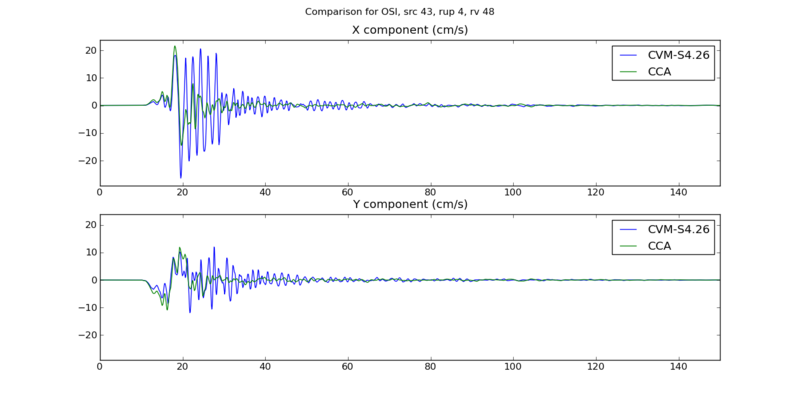

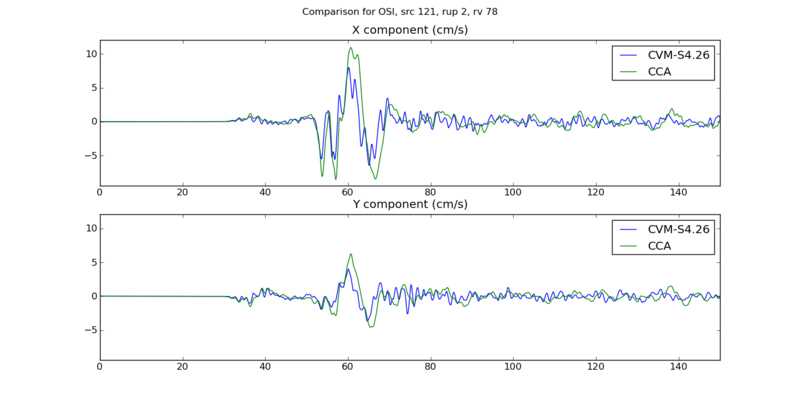

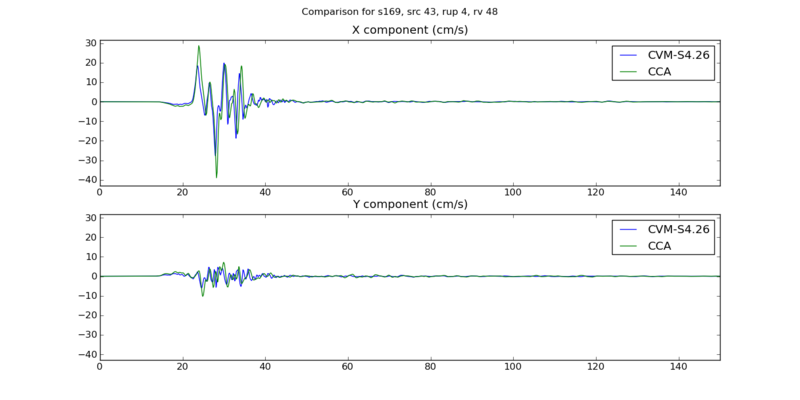

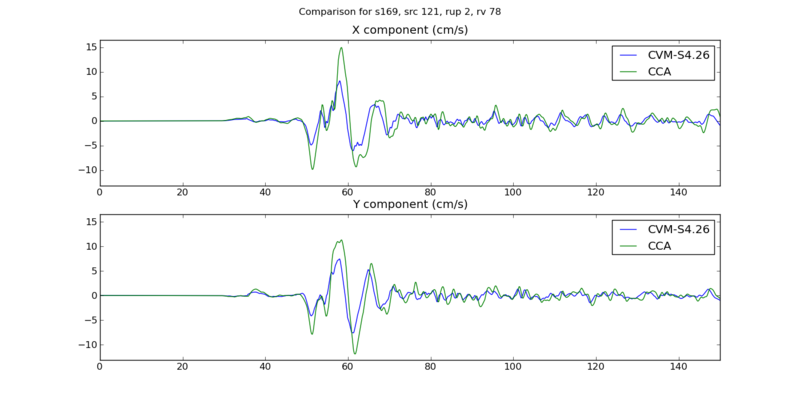

| + | Below are plots comparing results from Study 15.4 to test results, which differ in velocity model, Vs min cutoff, dt, and grid spacing. We've selected two events: source 43, rupture 4, rupture variation 48, a M7.05 southern SAF event, and source 121, rupture 2, rupture variation 78, a M7.65 San Jacinto event. | ||

| + | |||

| + | {| border="1" | ||

| + | ! site | ||

| + | ! San Andreas event | ||

| + | ! San Jacinto event | ||

| + | |- | ||

| + | ! s001 | ||

| + | | [[File:Seismogram_s001_3972v4711_43_4_48.png|800px]] | ||

| + | | [[File:Seismogram_s001_3972v4711_121_2_78.png|800px]] | ||

| + | |- | ||

| + | ! OSI | ||

| + | | [[File:Seismogram_OSI_3923v4712_43_4_48.png|800px]] | ||

| + | | [[File:Seismogram_OSI_3923v4712_121_2_78.png|800px]] | ||

| + | |- | ||

| + | ! s169 | ||

| + | | [[File:Seismogram_s169_4022v4713_43_4_48.png|800px]] | ||

| + | | [[File:Seismogram_s169_4022v4713_121_2_78.png|800px]] | ||

| + | |} | ||

| + | |||

| + | === Rupture Variation Generator v5.2.3 (Graves & Pitarka 2015) === | ||

| + | |||

| + | Plots related to verifying the rupture variation generator intended for use in this study are available here: [[Rupture Variation Generator v5.2.3 Verification]] . | ||

| + | |||

| + | After review, we decided to stick with v3.3.1 for this study. | ||

== Performance Enhancements (over Study 15.4) == | == Performance Enhancements (over Study 15.4) == | ||

| Line 83: | Line 300: | ||

<i>* On Titan, a few of the PostSGT and MD5 jobs didn't finish in the 2 hours, so they had to be run on Rhea by hand, which has a longer permitted wallclock time. We should think about moving these kinds of processing jobs to Rhea in the future.</i> | <i>* On Titan, a few of the PostSGT and MD5 jobs didn't finish in the 2 hours, so they had to be run on Rhea by hand, which has a longer permitted wallclock time. We should think about moving these kinds of processing jobs to Rhea in the future.</i> | ||

| − | The SGTs for Study 16. | + | The SGTs for Study 16.9 will be smaller, so these jobs should finish faster. PostSGT has two components, reformatting the SGTs and generating the headers. By increasing from 2 nodes to 4, we can decrease the SGT reformatting time to about 15 minutes, and the header generation also takes about 15 minutes. We investigated setting up the workflow to run the PostSGT and MD5 jobs only on Rhea, but had difficulty getting the rvgahp server working there. Reducing the runtime of the SGT reformatting and separating out the MD5 sum should fix this issue for this study. |

<i>* When we went back to do CyberShake Study 15.12, we discovered that it was common for a small number of seismogram files in many of the runs to have an issue wherein some rupture variation records were repeated. We should more carefully check the code in DirectSynth responsible for writing and confirming correctness of output files, and possibly add a way to delete and recreate RVs with issues.</i> | <i>* When we went back to do CyberShake Study 15.12, we discovered that it was common for a small number of seismogram files in many of the runs to have an issue wherein some rupture variation records were repeated. We should more carefully check the code in DirectSynth responsible for writing and confirming correctness of output files, and possibly add a way to delete and recreate RVs with issues.</i> | ||

| Line 98: | Line 315: | ||

* We have migrated data from past studies off the production database, which will hopefully improve database performance from Study 15.12. | * We have migrated data from past studies off the production database, which will hopefully improve database performance from Study 15.12. | ||

| + | |||

| + | == Lessons Learned == | ||

| + | |||

| + | * Include plots of velocity models as part of readiness review when moving to new regions. | ||

| + | * Formalize process of creating impulse. Consider creating it as part of the workflow based on nt and dt. | ||

| + | * Many jobs were not picked up by the reservation, and as a result reservation nodes were idle. Work harder to make sure reservation is kept busy. | ||

| + | * Forgot to turn on monitord during workflow, so had to deal with statistics after the workflow was done. Since we're running far fewer jobs, it's fine to run monitord population during the workflow. | ||

| + | * In Study 17.3b, 2 of the runs (5765 and 5743) had a problem with their output, which left 'holes' of lower hazard on the 1D map. Looking closely, we discovered that the SGT X component of run 5765 was about 30 GB smaller than it should have been, likely causing issues when the seismograms were synthesized. We no longer had the SGTs from 5743, so we couldn't verify that the same problem happened here. Moving forward, include checks on SGT file size as part of the nan check. | ||

== Codes == | == Codes == | ||

| + | |||

| + | == Output Data Products == | ||

| + | |||

| + | Below is a table of planned output products, both what we plan to compute and what we plan to put in the database. | ||

| + | |||

| + | {| border="1" | ||

| + | ! Type of product | ||

| + | ! Periods/subtypes computed and saved in files | ||

| + | ! Periods/subtypes inserted in database | ||

| + | |- | ||

| + | ! PSA | ||

| + | | 88 values<br/>X and Y components at 44 periods:<br/>10, 9.5, 9, 8.5, 8, 7.5, 7, 6.5, 6, 5.5, 5, 4.8, 4.6, 4.4, 4.2, 4, 3.8, 3.6, 3.4, 3.2, 3, 2.8, 2.6, 2.4, 2.2, 2, 1.66667, 1.42857, 1.25, 1.11111, 1, .66667, .5, .4, .33333, .285714, .25, .22222, .2, .16667, .142857, .125, .11111, .1 sec | ||

| + | | 4 values<br/>Geometric mean for 4 periods:<br/>10, 5, 3, 2 sec | ||

| + | |- | ||

| + | ! RotD | ||

| + | | 44 values<br/>RotD100, RotD50, and RotD50 angle for 22 periods:<br/>1.0, 1.2, 1.4, 1.5, 1.6, 1.8, 2.0, 2.2, 2.4, 2.6, 2.8, 3.0, 3.5, 4.0, 4.4, 5.0, 5.5, 6.0, 6.5, 7.5, 8.5, 10.0 sec | ||

| + | | 12 values<br/>RotD100, RotD50, and RotD50 angle for 6 periods:<br/>10, 7.5, 5, 4, 3, 2 sec | ||

| + | |- | ||

| + | ! Durations | ||

| + | | 18 values<br/>For X and Y components, energy integral, Arias intensity, cumulative absolute velocity (CAV), and for both velocity and acceleration, 5-75%, 5-95%, and 20-80%. | ||

| + | | None | ||

| + | |- | ||

| + | ! Hazard Curves | ||

| + | | N/A | ||

| + | | 16 curves<br/>Geometric mean: 10, 5, 3, 2 sec<br/>RotD100: 10, 7.5, 5, 4, 3, 2 sec<br/>RotD50: 10, 7.5, 5, 4, 3, 2 sec | ||

| + | |} | ||

== Computational and Data Estimates == | == Computational and Data Estimates == | ||

| Line 105: | Line 356: | ||

=== Computational Time === | === Computational Time === | ||

| − | Since we are using a min Vs=900 m/s, we will use a grid spacing of 175 m, and dt=0.00875 in the SGT simulation (and 0.0875 in the seismogram synthesis). | + | Since we are using a min Vs=900 m/s, we will use a grid spacing of 175 m, and dt=0.00875, nt=23000 in the SGT simulation (and 0.0875 in the seismogram synthesis). |

| − | For computing these estimates, we are using a volume of 420 km x 1160 km x 50 km, or 2400 x 6630 x 286 grid points. This is about 4.5 billion grid points, approximately half the size of the Study 15.4 typical volume. | + | For computing these estimates, we are using a volume of 420 km x 1160 km x 50 km, or 2400 x 6630 x 286 grid points. This is about 4.5 billion grid points, approximately half the size of the Study 15.4 typical volume. We will run the SGTs on 160-200 GPUs. |
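As a quick check on the volume size, the sketch below multiplies out the quoted grid dimensions and compares them with the box dimensions divided by the 175 m spacing. The rounding to the nearest grid point is an assumption; the quoted 6630 differs from the rounded value by one point.

```python
SPACING_M = 175
volume_m = (420_000, 1_160_000, 50_000)   # 420 km x 1160 km x 50 km
grid_quoted = (2400, 6630, 286)           # dimensions quoted in the text

# Nearest whole number of grid points along each axis.
grid_rounded = [round(length / SPACING_M) for length in volume_m]

total_points = grid_quoted[0] * grid_quoted[1] * grid_quoted[2]

print("rounded dims:", grid_rounded)
print(f"total grid points: {total_points:,} (~{total_points / 1e9:.2f} billion)")
```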

We estimate that we will run 75% of the sites from each model on Blue Waters, and 25% on Titan. This is because we are charged less for Blue Waters sites (we are charged for the Titan GPUs even if we don't use them), and we have more time available on Blue Waters. However, we will use a dynamic approach during runtime, so the resulting numbers may differ. | We estimate that we will run 75% of the sites from each model on Blue Waters, and 25% on Titan. This is because we are charged less for Blue Waters sites (we are charged for the Titan GPUs even if we don't use them), and we have more time available on Blue Waters. However, we will use a dynamic approach during runtime, so the resulting numbers may differ. | ||

| + | |||

| + | Study 15.4 SGTs took 740 node-hours per component. From this, we assume: | ||

| + | |||

| + | 750 node-hours x (4.5 billion grid points in 16.9 / 10 billion grid points in 15.4) x ( 23k timesteps in 16.9 / 40k timesteps in 15.4 ) ~ 200 node-hours per component for Study 16.9. | ||

| + | |||

| + | Study 15.4 post-processing took 40k core-hrs. From this, we assume: | ||

| + | |||

| + | 40k core-hrs x ( 2.3k timesteps in 16.9 / 4k timesteps in 15.4 ) = 23k core-hrs = 720 node-hrs on Blue Waters, 1440 node-hrs on Titan. | ||
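The two scaling estimates above can be reproduced with a short sketch. It follows the formulas as written (750 node-hours for the Study 15.4 SGT baseline) and assumes 32 cores per Blue Waters node and 16 per Titan node, which is consistent with the node and core counts reported in the performance metrics on this page.

```python
# Study 15.4 baseline values as used in the scaling formulas above.
SGT_NODE_HRS_15_4 = 750.0     # node-hrs per component (value used in the formula)
PP_CORE_HRS_15_4 = 40_000.0   # core-hrs per site

# Scale SGT cost by grid-point count and by timestep count.
sgt_node_hrs = SGT_NODE_HRS_15_4 * (4.5e9 / 10e9) * (23_000 / 40_000)

# Scale post-processing cost by seismogram timestep count.
pp_core_hrs = PP_CORE_HRS_15_4 * (2_300 / 4_000)

# Assumed cores per node on each machine.
CORES_PER_NODE = {"Blue Waters": 32, "Titan": 16}
pp_node_hrs = {name: pp_core_hrs / cores for name, cores in CORES_PER_NODE.items()}

print(f"SGT: {sgt_node_hrs:.1f} node-hrs per component")
print(f"Post-processing: {pp_core_hrs:.0f} core-hrs per site")
for name, hrs in pp_node_hrs.items():
    print(f"  {name}: {hrs:.2f} node-hrs per site")
```

The results (about 194, 719, and 1438) round to the 200, 720, and 1440 node-hour figures used in the budgets.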

| + | |||

| + | |||

| + | Because we're limited on Blue Waters node-hours, we will burn no more than 600K node-hrs. This should be enough to do 75% of the post-processing on Blue Waters. All the SGTs and the other post-processing will be done on Titan. | ||

| + | |||

==== Titan ==== | ==== Titan ==== | ||

| − | SGTs (GPU): | + | Pre-processing (CPU): 100 node-hrs/site x 876 sites = 87,600 node-hours. |

| + | |||

| + | SGTs (GPU): 400 node-hrs per site x 876 sites = 350,400 node-hours. | ||

| − | Post-processing (CPU): | + | Post-processing (CPU): 1440 node-hrs per site x 219 sites = 315,360 node-hours. |

| − | Total: ''' | + | Total: '''28.3M SUs''' ((87,600 + 350,400 + 315,360) x 30 SUs/node-hr + 25% margin) |

| − | We have | + | We have 91.7M SUs available on Titan. |

==== Blue Waters ==== | ==== Blue Waters ==== | ||

| − | + | Post-processing (CPU): 720 node-hrs per site x 657 sites = 473,040 node-hours. | |

| − | |||

| − | |||

| − | |||

| − | Post-processing (CPU): | ||

| − | Total: ''' | + | Total: '''591.3K node-hrs''' ((473,040) + 25% margin) |

| − | We have | + | We have 989K node-hrs available on Blue Waters. |
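The Titan and Blue Waters budget totals can be recomputed from the per-site figures. This is a sketch of the arithmetic only; the variable names are ours, and the 876 figure is taken as 438 sites times 2 velocity models.

```python
RUNS = 876            # 438 sites x 2 velocity models
TITAN_PP_RUNS = 219   # 25% of post-processing
BW_PP_RUNS = 657      # 75% of post-processing
MARGIN = 1.25         # 25% margin
SUS_PER_NODE_HR = 30  # Titan charging rate quoted above

# Titan: pre-processing, SGTs, and its share of post-processing.
titan_node_hrs = 100 * RUNS + 400 * RUNS + 1440 * TITAN_PP_RUNS
titan_sus = titan_node_hrs * SUS_PER_NODE_HR * MARGIN

# Blue Waters: post-processing only.
bw_node_hrs = 720 * BW_PP_RUNS
bw_node_hrs_with_margin = bw_node_hrs * MARGIN

print(f"Titan: {titan_node_hrs:,} node-hrs -> {titan_sus / 1e6:.1f}M SUs (91.7M available)")
print(f"Blue Waters: {bw_node_hrs:,} node-hrs -> {bw_node_hrs_with_margin / 1e3:.1f}K with margin (989K available)")
```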

=== Storage Requirements === | === Storage Requirements === | ||

| Line 139: | Line 400: | ||

==== Titan ==== | ==== Titan ==== | ||

| − | Purged space to store intermediate data products: (900 GB SGTs + 60 GB mesh + 900 GB reformatted SGTs)/site x | + | Purged space to store intermediate data products: (900 GB SGTs + 60 GB mesh + 900 GB reformatted SGTs)/site x 876 sites = 1591 TB |

| − | Purged space to store output data: (15 GB seismograms + 0.2 GB PSA + 0.2 GB RotD + 0.2 GB duration) x | + | Purged space to store output data: (15 GB seismograms + 0.2 GB PSA + 0.2 GB RotD + 0.2 GB duration) x 219 sites = 3.3 TB |

==== Blue Waters ==== | ==== Blue Waters ==== | ||

| − | Purged space to store intermediate data products: (900 GB | + | Purged space to store intermediate data products: (900 GB SGTs)/site x 657 sites = 577 TB |

| − | Purged space to store output data: (15 GB seismograms + 0.2 GB PSA + 0.2 GB RotD + 0.2 GB duration) x | + | Purged space to store output data: (15 GB seismograms + 0.2 GB PSA + 0.2 GB RotD + 0.2 GB duration) x 657 sites = 10.0 TB |
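The output-data and Blue Waters storage figures can be checked with the sketch below. It assumes 1 TB = 1024 GB, which is the conversion that reproduces the quoted totals.

```python
GB_PER_TB = 1024  # assumption: binary conversion, which matches the quoted totals

# Per-site output: seismograms + PSA + RotD + duration files.
OUTPUT_GB_PER_SITE = 15 + 0.2 + 0.2 + 0.2


def to_tb(gigabytes):
    """Convert GB to TB using the assumed binary conversion."""
    return gigabytes / GB_PER_TB


titan_output_tb = to_tb(OUTPUT_GB_PER_SITE * 219)
bw_intermediate_tb = to_tb(900 * 657)
bw_output_tb = to_tb(OUTPUT_GB_PER_SITE * 657)

print(f"Titan output:             {titan_output_tb:.1f} TB")
print(f"Blue Waters intermediate: {bw_intermediate_tb:.0f} TB")
print(f"Blue Waters output:       {bw_output_tb:.1f} TB")
```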

==== SCEC ==== | ==== SCEC ==== | ||

| − | Archival disk usage: | + | Archival disk usage: 13.3 TB seismograms + 0.1 TB PSA files + 0.1 TB RotD files + 0.1 TB duration files on scec-02 (has 109 TB free) & 24 GB curves, disaggregations, reports, etc. on scec-00 (109 TB free) |

| + | |||

| + | Database usage: (4 rows PSA [@ 2, 3, 5, 10 sec] + 12 rows RotD [RotD100 and RotD50 @ 2, 3, 4, 5, 7.5, 10 sec])/rupture variation x 500K rupture variations/site x 876 sites = 7 billion rows x 125 bytes/row = 816 GB (3.2 TB free on moment.usc.edu disk) | ||
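The database estimate works out as follows. The sketch assumes the 816 GB figure uses 1 GB = 1024^3 bytes, since that is the conversion that matches the quoted value.

```python
ROWS_PER_RV = 4 + 12        # PSA rows + RotD rows per rupture variation
RVS_PER_SITE = 500_000
RUNS = 876
BYTES_PER_ROW = 125

total_rows = ROWS_PER_RV * RVS_PER_SITE * RUNS
total_bytes = total_rows * BYTES_PER_ROW
total_gb = total_bytes / 1024**3   # assumed binary gigabytes

print(f"{total_rows / 1e9:.1f} billion rows, {total_gb:.0f} GB")
```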

| + | |||

| + | Temporary disk usage: 1 TB workflow logs. scec-02 has 94 TB free. | ||

| − | + | == Performance Metrics == | |

| − | + | === Usage === | |

| + | |||

| + | Just before starting, we grabbed the current project usage for Blue Waters and Titan to get accurate measures of the SUs burned. | ||

| + | |||

| + | ==== Starting usage, Titan ==== | ||

| + | |||

| + | Project usage = 4,313,662 SUs in 2017 (153,289 in March) | ||

| + | |||

| + | User callag used 1,089,050 SUs in 2017 (98,606 in March) | ||

| + | |||

| + | ==== Starting usage, Blue Waters ==== | ||

| + | |||

| + | 'usage' command reports project PRAC_bahm used 11,571.4 node-hrs and ran 321 jobs. | ||

| + | |||

| + | 'usage' command reports scottcal used 11,320.49 node-hrs on PRAC_bahm. | ||

| + | |||

| + | ==== Ending usage, Titan ==== | ||

| + | |||

| + | Project usage = 22,178,513 SUs in 2017 (12,586,176 in March, 5,431,964 in April) | ||

| + | |||

| + | User callag used 14,579,559 SUs in 2017 (10,128,109 in March, 3,461,006 in April) | ||

| + | |||

| + | ==== Ending usage, Blue Waters ==== | ||

| + | |||

| + | usage command reports 366773.73 node-hrs used by PRAC_bahm. | ||

| + | |||

| + | usage command reports 366504.84 node-hrs used by scottcal. | ||

| + | |||

| + | ==== Reservations ==== | ||

| + | |||

| + | 4 reservations on Blue Waters (3 for 128 nodes, 1 for 124) began on 3/9/17 at 11:00:00 CST. These reservations expired after a week. | ||

| + | |||

| + | A 2nd set of reservations (same configuration) for 1 week started again on 3/16/17 at 22:00:00 CDT. We gave these reservations back on 3/20/17 at 11:14 CDT because shock went down and we couldn't keep them busy. | ||

| + | |||

| + | Another set of reservations started at 2:27 CDT on 3/29/17, but were revoked around 16:30 CDT on 3/30/17. | ||

| + | |||

| + | ==== Events during Study ==== | ||

| + | |||

| + | We requested and received a 5-day priority boost, 5 jobs running in bin 5, and 8 jobs eligible to run on Titan starting sometime in the morning of Sunday, March 12. This greatly increased our throughput. | ||

| + | |||

| + | In the evening of Wednesday, March 15, we increased GRIDMANAGER_MAX_JOBMANAGERS_PER_RESOURCE from 10 to 20 to increase the number of jobs in the Blue Waters queues. | ||

| + | |||

| + | Condor on shock was killed on 3/20/17 at 3:45 CDT. We started resubmitting workflows at 13:44 CDT. | ||

| + | |||

| + | In preparation for the USC downtime, we ran condor_off at 22:00 CDT on 3/27/17. We turned condor back on (condor_on) at 21:01 CDT on 3/29/17. | ||

| + | |||

| + | Blue Waters deleted the cron job, so we have no job statistics on Blue Waters from 3/25/17 at 17:00 to 3/28/17 at 00:00 (when Blue Waters went down), then again from 3/29/17 at 2:27 (when Blue Waters came back) until 3/29/17 at 21:19. | ||

| + | |||

| + | === Application-level Metrics === | ||

| + | |||

| + | * Makespan: 738.4 hrs (DST started during the run) | ||

| + | * Uptime: 691.4 hrs (downtime during BW and HPC system maintenance) | ||

| + | |||

| + | Note: uptime is what's used for calculating the averages for jobs, nodes, etc. | ||
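The makespan can be derived from the start and end timestamps given in the Status section. The sketch below uses fixed UTC offsets for PST and PDT so that the daylight saving change during the run is handled explicitly.

```python
from datetime import datetime, timedelta, timezone

# Fixed offsets: the study started in standard time and ended in daylight time.
PST = timezone(timedelta(hours=-8))
PDT = timezone(timedelta(hours=-7))

start = datetime(2017, 3, 6, 12, 14, 10, tzinfo=PST)
end = datetime(2017, 4, 6, 7, 37, 16, tzinfo=PDT)

makespan_hrs = (end - start).total_seconds() / 3600
print(f"makespan = {makespan_hrs:.1f} hrs")
```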

| + | |||

| + | * 876 runs | ||

| + | * 876 pairs of SGTs generated on Titan | ||

| + | * 876 post-processing runs | ||

| + | ** 145 performed on Titan (16.6%), 731 performed on Blue Waters (83.4%) | ||

| + | * 284,839,014 seismogram pairs generated | ||

| + | * 42,725,852,100 intensity measures generated (150 per seismogram pair) | ||

| + | |||

| + | * 15,581 jobs submitted | ||

| + | * 898,805.3 node-hrs used (21,566,832 core-hrs) | ||

| + | * 1026 node-hrs per site (24,620 core-hrs) | ||

| + | * On average, 12.1 jobs running, with a max of 41 | ||

| + | * Average of 1295 nodes used, with a maximum of 5374 (101,472 cores). | ||
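The aggregate figures above are consistent with the per-machine numbers reported in the Titan and Blue Waters subsections. A sketch of the cross-check, assuming 16 cores per Titan node and 32 per Blue Waters node:

```python
RUNS = 876

# Per-machine usage as reported in the Titan and Blue Waters subsections.
titan_sus = 13_490_509
titan_node_hrs = 449_683.6
bw_node_hrs = 449_121.7

# Titan charges 30 SUs per node-hour.
titan_node_hrs_from_sus = titan_sus / 30

total_node_hrs = titan_node_hrs + bw_node_hrs
total_core_hrs = titan_node_hrs * 16 + bw_node_hrs * 32   # assumed cores per node
node_hrs_per_site = total_node_hrs / RUNS
core_hrs_per_site = total_core_hrs / RUNS

# 150 intensity measures per seismogram pair.
intensity_measures = 284_839_014 * 150

print(f"total: {total_node_hrs:,.1f} node-hrs, {total_core_hrs:,.0f} core-hrs")
print(f"per site: {node_hrs_per_site:.0f} node-hrs, {core_hrs_per_site:,.0f} core-hrs")
print(f"intensity measures: {intensity_measures:,}")
```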

| + | |||

| + | * Total data generated: ? | ||

| + | ** 370 TB SGTs generated (216 GB per single SGT) | ||

| + | ** 777 TB intermediate data generated (44 GB per velocity file, duplicate SGTs) | ||

| + | ** 10.7 TB output data (15,202,088 files) | ||

| + | |||

| + | Delay per job (using a 7-day, no-restarts cutoff: 1618 workflows, 19143 jobs) was mean: 1836 sec, median: 467, min: 0, max: 91350, sd: 38209 | ||

| + | |||

| + | {| border=1 | ||

| + | ! Bins (sec) | ||

| + | | 0 || || 60 || || 120 || || 180 || || 240 || || 300 || || 600 || || 900 || || 1800 || || 3600 || || 7200 || || 14400 || || 43200 || || 86400 || || 172800 || || 259200 || || 604800 | ||

| + | |- | ||

| + | ! Jobs per bin | ||

| + | | || 5511 || || 1435 || || 401 || || 469 || || 503 || || 2082 || || 1428 || || 2612 || || 2373 || || 1390 || || 567 || || 302 || || 68 || || 2 || || 0 || || 0 | ||

| + | |} | ||
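For reference, a minimal sketch of how per-job delays could be assigned to the bins in the table above. It assumes half-open bins (lower edge inclusive, upper edge exclusive); `bin_delays` is a hypothetical helper, not part of the CyberShake tooling, and the sample delays are illustrative only.

```python
from bisect import bisect_right

# Bin edges in seconds, as listed in the table.
BIN_EDGES = [0, 60, 120, 180, 240, 300, 600, 900, 1800, 3600,
             7200, 14400, 43200, 86400, 172800, 259200, 604800]

# Jobs per bin as reported in the table.
REPORTED_COUNTS = [5511, 1435, 401, 469, 503, 2082, 1428, 2612,
                   2373, 1390, 567, 302, 68, 2, 0, 0]


def bin_delays(delays_sec):
    """Count delays per bin, treating each bin as [lower, upper)."""
    counts = [0] * (len(BIN_EDGES) - 1)
    for delay in delays_sec:
        if 0 <= delay < BIN_EDGES[-1]:  # drop anything past the 7-day cutoff
            counts[bisect_right(BIN_EDGES, delay) - 1] += 1
    return counts


# The reported counts add up to the number of jobs considered.
print(sum(REPORTED_COUNTS))

# Illustrative delays, including the reported median (467) and max (91350).
sample_counts = bin_delays([0, 59, 60, 467, 91350])
print(sample_counts)
```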

| + | |||

| + | * Application parallel node speedup (node-hours divided by makespan) was 1217x. (Divided by uptime: 1300x) | ||

| + | * Application parallel workflow speedup (number of workflows times average workflow makespan divided by application makespan) was 16.0x. (Divided by uptime: 17.1x) | ||
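Both speedup figures follow directly from numbers on this page. In the sketch below, the workflow count is assumed to be the 876 runs, since that value reproduces the quoted 16.0x; the average workflow makespan is the 48567 sec reported under Workflow-level Metrics.

```python
NODE_HRS = 898_805.3
MAKESPAN_HRS = 738.4
UPTIME_HRS = 691.4

# Assumption: "number of workflows" is the 876 runs.
N_WORKFLOWS = 876
AVG_WORKFLOW_MAKESPAN_HRS = 48_567 / 3600

node_speedup = NODE_HRS / MAKESPAN_HRS
node_speedup_uptime = NODE_HRS / UPTIME_HRS

workflow_hrs = N_WORKFLOWS * AVG_WORKFLOW_MAKESPAN_HRS
workflow_speedup = workflow_hrs / MAKESPAN_HRS
workflow_speedup_uptime = workflow_hrs / UPTIME_HRS

print(f"node speedup: {node_speedup:.0f}x (uptime: {node_speedup_uptime:.0f}x)")
print(f"workflow speedup: {workflow_speedup:.1f}x (uptime: {workflow_speedup_uptime:.1f}x)")
```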

| + | |||

| + | ==== Titan ==== | ||

| + | * Wallclock time: 720.3 hrs | ||

| + | * Uptime: 628.0 hrs (all downtime was SCEC) | ||

| + | * SGTs generated for 876 sites, post-processing for 145 sites | ||

| + | * 13,334 jobs submitted to the Titan queue | ||

| + | * Running jobs: average 5.7, max of 25 | ||

| + | * Idle jobs: average 11.1, max of 38 | ||

| + | * Nodes: average 669 (10,704 cores), max 4406 (70,496 cores, 23.6% of Titan) | ||

| + | * Titan portal and Titan internal reporting agree: 13,490,509 SUs used (449,683.6 node-hrs) | ||

| + | |||

| + | Delay per job (7-day cutoff, no restarts, 11603 jobs): mean 2109 sec, median: 1106, min: 0, max: 91350, sd: 3818 | ||

| + | |||

| + | {| border=1 | ||

| + | ! Bins (sec) | ||

| + | | 0 || || 60 || || 120 || || 180 || || 240 || || 300 || || 600 || || 900 || || 1800 || || 3600 || || 7200 || || 14400 || || 43200 || || 86400 || || 172800 || || 259200 || || 604800 | ||

| + | |- | ||

| + | ! Jobs per bin | ||

| + | | || 149 || || 1420 || || 333 || || 305 || || 302 || || 1405 || || 1175 || || 2442 || || 2304 || || 1248 || || 363 || || 136 || || 19 || || 2 || || 0 || || 0 | ||

| + | |} | ||

| + | ==== Blue Waters ==== | ||

| + | |||

| + | The Blue Waters cronjob which generated the queue information file was down for about 2 days, so we reconstructed its contents from the job history query functionality on the Blue Waters portal. | ||

| + | |||

| + | * Wallclock time: 668.1 hrs | ||

| + | * Uptime: 601.3 hrs (34.5 SCEC downtime including USC, 32.3 BW downtime) | ||

| + | * Post-processing for 731 sites | ||

| + | * 2,248 jobs submitted to the Blue Waters queue | ||

| + | * Running jobs: average 7.4 (job history), max of 30 | ||

| + | * Idle jobs: average 5.1, max of 30 (this comes from the cronjob) | ||

| + | * Nodes: average 749 (23,968 cores), max 2047 (65504 cores, 9.1% of Blue Waters) | ||

| + | * Based on the Blue Waters jobs report, 449,121.7 node-hours used (14,371,894 core-hrs), but 355,184 hours (11,365,888 core-hrs) charged (25% reductions granted for backfill and wallclock accuracy) | ||

| + | ** 7.2% of jobs got accuracy discount | ||

| + | ** 62.1% of jobs got backfill discount | ||

| + | ** 21.1% of 120-node (DirectSynth) jobs got accuracy discount | ||

| + | ** 62.2% of 120-node jobs got backfill discount | ||

| + | ** 18.7% of 120-node jobs got both | ||

| + | |||

| + | |||

| + | Delay per job (7-day cutoff, no restarts, 2189 jobs): mean 4860 sec, median: 573, min: 0, max: 63482, sd: 10219 | ||

| + | |||

| + | {| border=1 | ||

| + | ! Bins (sec) | ||

| + | | 0 || || 60 || || 120 || || 180 || || 240 || || 300 || || 600 || || 900 || || 1800 || || 3600 || || 7200 || || 14400 || || 43200 || || 86400 || || 172800 || || 259200 || || 604800 | ||

| + | |- | ||

| + | ! Jobs per bin | ||

| + | | || 20 || || 6 || || 68 || || 164 || || 201 || || 677 || || 253 || || 170 || || 69 || || 142 || || 204 || || 166 || || 49 || || 0 || || 0 || || 0 | ||

| + | |} | ||

| + | |||

| + | === Workflow-level Metrics === | ||

| + | |||

| + | * The average makespan of a workflow (7-day cutoff, workflows with retries ignored, so 1618/1625 workflows considered) was 48567 sec (13.5 hrs), median: 32330 (9.0 hrs), min: 251, max: 427416, sd: 48648 | ||

| + | ** For integrated workflows on Titan (147 workflows), mean: 91931 sec (25.5 hrs), median: 64992 (18.1 hrs), min: 316, max: 427216, sd: 74671 | ||

| + | ** For SGT workflows on Titan (736 workflows), mean: 37358 sec (10.4 hrs), median: 23172 (6.4 hrs), min: 8584, max: 241659, sd: 38209 | ||

| + | ** For PP workflows on Blue Waters (735 workflows), mean: 51120 (14.2 hrs), median: 35016 (9.7 hrs), min: 251, max: 393699, sd: 46090 | ||

| + | ** It was 4% slower on means, and 12% slower on medians, to run integrated workflows on Titan than to split them up. This is probably because the median delay per job on Blue Waters is only about half of what it is on Titan, which may have hit DirectSynth jobs particularly hard. Additionally, DirectSynth jobs ran about 10% faster on Blue Waters. | ||

| + | |||

| + | * Workflow parallel core speedup (690 workflows) was mean: 1709, median: 1983, min: 0, max: 3205 sd: 993 | ||

| + | * Workflow parallel node speedup (690 workflows) was mean: 53.7, median: 62.2 min: 0.1, max: 100.4, sd: 31.0 | ||

| + | |||

| + | === Job-level Metrics === | ||

| + | |||

| + | We acquired job-level metrics from two sources: pegasus-statistics, and parsing the workflow logs with a custom script, each of which provides slightly different info, so we include both here. The pegasus-statistics include failed jobs and failed workflows; the parser omits workflows which failed or took longer than the 7-day cutoff time. | ||

| + | |||

| + | Pegasus-statistics results are below. The Min/Max/Mean/Total columns refer to the product of the walltime and the pegasus_cores value. Note that this is not directly comparable to the number of SUs burned, since pegasus_cores does not map directly onto how many nodes were used. | ||

| + | |||

| + | Summary: | ||

| + | |||

| + | {| border="1" | ||

| + | ! Type !! Succeeded !! Failed !! Incomplete !! Total !! Retries !! Total+Retries !! Failed % | ||

| + | |- | ||

| + | ! Tasks | ||

| + | | 14723 || 0 || 750 || 15473 || 477 || 15200 || 0% | ||

| + | |- | ||

| + | ! Jobs | ||

| + | | 12259 || 29 || 18105 || 30393 || 14935 || 27223 || 0.2% | ||

| + | |- | ||

| + | ! Sub-Workflows | ||

| + | | 1005 || 10 || 664 || 1679 || 1458 || 2473 || 1% | ||

| + | |} | ||

| + | |||

| + | Breakdown. For some reason, pegasus-statistics only multiplied the numbers by pegasus_cores for some of the jobs (the jobs in integrated workflows), so we had to modify some of the numbers by hand. | ||

| + | |||

| + | {| border="1" | ||

| + | ! Transformation !! Count !! Succeeded !! Failed !! Min !! Max !! Mean !! Total !! Total time (sec) !! % of overall time !! % of node-hrs | ||

| + | |- | ||

| + | | condor::dagman || 3059 || 1021 || 2038 || 30.0 || 349858.0 || 10215.932 || 31250537.0 | ||

| + | |- | ||

| + | | dagman::post || 43874 || 27244 || 16630 || 0.0 || 9216.0 || 43.668 || 1915908.0 | ||

| + | |- | ||

| + | | dagman::pre || 3132 || 3081 || 51 || 1.0 || 28.0 || 5.079 || 15906.0 | ||

| + | |- | ||

| + | | pegasus::cleanup || 18416 || 2627 || 15789 || 0.0 || 12143.003 || 172.956 || 3185154.125 | ||

| + | |- | ||

| + | | pegasus::dirmanager || 5597 || 5336 || 261 || 2.155 || 1158.816 || 13.294 || 74405.152 | ||

| + | |- | ||

| + | | pegasus::rc-client || 1010 || 1010 || 0 || 1.215 || 2158.446 || 238.223 || 240605.526 | ||

| + | |- | ||

| + | | pegasus::transfer || 3524 || 3422 || 102 || 0.0 || 170119.463 || 815.476 || 2873736.586 | ||

| + | |- | ||

| + | ! Supporting Jobs !! !! !! !! !! !! !! | ||

| + | |- | ||

| + | | scec::AWP_GPU:1.0 || 1690 || 1607 || 83 || 0.0 || 953600.0 || 82880.188 || 140067518.097 | ||

| + | |- | ||

| + | | scec::AWP_NaN_Check:1.0 || 1616 || 1604 || 12 || 0.0 || 38781.0 || 734.095 || 1186297.189 | ||

| + | |- | ||

| + | | scec::CheckSgt:1.0 || 439 || 438 || 1 || 765.915 || 4078.0 || 1475.492 || 647740.898 | ||

| + | |- | ||

| + | | scec::Check_DB_Site:1.0 || 437 || 401 || 36 || 0.319 || 18.101 || 1.509 || 659.587 | ||

| + | |- | ||

| + | | scec::Curve_Calc:1.0 || 516 || 441 || 75 || 5.652 || 213.02 || 61.387 || 31675.755 | ||

| + | |- | ||

| + | | scec::CyberShakeNotify:1.0 || 147 || 147 || 0 || 0.084 || 2.871 || 0.172 || 25.316 | ||

| + | |- | ||

| + | | scec::DB_Report:1.0 || 147 || 147 || 0 || 22.688 || 64.503 || 33.349 || 4902.333 | ||

| + | |- | ||

| + | | scec::DirectSynth:1.0 || 227 || 218 || 9 || 0.0 || 30760.296 || 19847.048 || 4505279.89 | ||

| + | |- | ||

| + | | scec::Disaggregate:1.0 || 147 || 147 || 0 || 24.781 || 92.014 || 31.597 || 4644.807 | ||

| + | |- | ||

| + | | scec::GenSGTDax:1.0 || 804 || 803 || 1 || 2.656 || 7.909 || 3.141 || 2525.575 | ||

| + | |- | ||

| + | | scec::Handoff:1.0 || 660 || 657 || 3 || 0.06 || 1617.433 || 3.659 || 2414.951 | ||

| + | |- | ||

| + | | scec::Load_Amps:1.0 || 564 || 408 || 156 || 47.135 || 1854.35 || 687.071 || 387508.218 | ||

| + | |- | ||

| + | | scec::PostAWP:1.0 || 1619 || 1604 || 15 || 0.0 || 38836.0 || 3833.309 || 6206127.486 | ||

| + | |- | ||

| + | | scec::PreAWP_GPU:1.0 || 808 || 802 || 6 || 0.0 || 4314.0 || 298.634 || 241296.569 | ||

| + | |- | ||

| + | | scec::PreCVM:1.0 || 833 || 803 || 30 || 0.0 || 274.18 || 95.378 || 79450.199 | ||

| + | |- | ||

| + | | scec::PreSGT:1.0 || 811 || 803 || 8 || 0.0 || 19744.0 || 1418.928 || 1150750.713 | ||

| + | |- | ||

| + | | scec::SetJobID:1.0 || 145 || 145 || 0 || 0.078 || 2.362 || 0.187 || 27.087 | ||

| + | |- | ||

| + | | scec::SetPPHost:1.0 || 144 || 144 || 0 || 0.068 || 2.075 || 0.166 || 23.846 | ||

| + | |- | ||

| + | | scec::Smooth:1.0 || 827 || 802 || 25 || 0.0 || 287104.0 || 13608.192 || 11253974.731 | ||

| + | |- | ||

| + | | scec::UCVMMesh:1.0 || 814 || 802 || 12 || 0.0 || 3065260.032 || 170091.123 || 138454174.009 | ||

| + | |- | ||

| + | | scec::UpdateRun:1.0 || 2005 || 2003 || 2 || 0.062 || 51.149 || 0.223 || 447.354 | ||

| + | |} | ||

| + | |||

| + | The parsing results are below. We have DirectSynth cumulative, and also broken out by system. We used a 7-day cutoff, for a total of 44,908 jobs. | ||

| + | |||

| + | Job attempts, over 44908 jobs: Mean: 1.388038, median: 1.000000, min: 1.000000, max: 30.000000, sd: 1.713763 | ||

| + | |||

| + | Remote job attempts, over 25973 remote jobs: Mean: 1.648789, median: 1.000000, min: 1.000000, max: 30.000000, sd: 2.189993 | ||

| + | |||

| + | |||

| + | * AWP_GPU (1771 jobs), 200 nodes: | ||

| + | Runtime: mean 1923.634105, median: 1754.000000, min: 1385.000000, max: 4512.000000, sd: 450.148598 | ||

| + | Attempts: mean 1.122530, median: 1.000000, min: 1.000000, max: 28.000000, sd: 1.121227 | ||

| + | * AWP_NaN (1771 jobs): | ||

| + | Runtime: mean 722.352908, median: 671.000000, min: 2.000000, max: 1819.000000, sd: 175.328731 | ||

| + | Attempts: mean 1.053642, median: 1.000000, min: 1.000000, max: 18.000000, sd: 0.735448 | ||

| + | * Check_DB (1752 jobs): | ||

| + | Runtime: mean 1.063356, median: 1.000000, min: 0.000000, max: 20.000000, sd: 1.664148 | ||

| + | Attempts: mean 1.021689, median: 1.000000, min: 1.000000, max: 10.000000, sd: 0.430816 | ||

| + | * CheckSgt_CheckSgt (1754 jobs): | ||

| + | Runtime: mean 1607.771380, median: 1512.500000, min: 765.000000, max: 7363.000000, sd: 532.358322 | ||

| + | Attempts: mean 1.017104, median: 1.000000, min: 1.000000, max: 3.000000, sd: 0.161038 | ||

| + | * Curve_Calc (2628 jobs): | ||

| + | Runtime: mean 73.477549, median: 66.000000, min: 40.000000, max: 400.000000, sd: 31.115240 | ||

| + | Attempts: mean 1.074201, median: 1.000000, min: 1.000000, max: 10.000000, sd: 0.388442 | ||

| + | * CyberShakeNotify_CS (876 jobs): | ||

| + | Runtime: mean 0.097032, median: 0.000000, min: 0.000000, max: 8.000000, sd: 0.595601 | ||

| + | Attempts: mean 1.001142, median: 1.000000, min: 1.000000, max: 2.000000, sd: 0.033768 | ||

| + | * DB_Report (876 jobs): | ||

| + | Runtime: mean 34.850457, median: 32.000000, min: 22.000000, max: 274.000000, sd: 15.243062 | ||

| + | Attempts: mean 1.001142, median: 1.000000, min: 1.000000, max: 2.000000, sd: 0.033768 | ||

| + | * DirectSynth (876 jobs), 3840 cores: | ||

| + | Runtime: mean 18050.928082, median: 17687.000000, min: 689.000000, max: 30760.000000, sd: 3571.608554 | ||

| + | Attempts: mean 1.081050, median: 1.000000, min: 1.000000, max: 6.000000, sd: 0.343308 | ||

| + | |||

| + | DirectSynth_titan (145 jobs), 240 nodes: | ||

| + | Runtime: mean 19616.213793, median: 19408.000000, min: 4682.000000, max: 30760.000000, sd: 3587.637235 | ||

| + | Attempts: mean 1.082759, median: 1.000000, min: 1.000000, max: 2.000000, sd: 0.275517 | ||

| + | DirectSynth_bluewaters (731 jobs), 120 nodes: | ||

| + | Runtime: mean 17740.440492, median: 17434.000000, min: 689.000000, max: 25058.000000, sd: 3485.860160 | ||

| + | Attempts: mean 1.080711, median: 1.000000, min: 1.000000, max: 6.000000, sd: 0.355219 | ||

| + | * Disaggregate_Disaggregate (876 jobs): | ||

| + | Runtime: mean 32.528539, median: 30.000000, min: 23.000000, max: 172.000000, sd: 12.949334 | ||

| + | Attempts: mean 1.001142, median: 1.000000, min: 1.000000, max: 2.000000, sd: 0.033768 | ||

| + | * Handoff (736 jobs): | ||

| + | Runtime: mean 3.086957, median: 0.000000, min: 0.000000, max: 1617.000000, sd: 59.976223 | ||

| + | Attempts: mean 1.008152, median: 1.000000, min: 1.000000, max: 4.000000, sd: 0.127428 | ||

| + | * GenSGTDax_GenSGTDax (887 jobs): | ||

| + | Runtime: mean 2.535513, median: 2.000000, min: 2.000000, max: 7.000000, sd: 0.821520 | ||

| + | Attempts: mean 1.030440, median: 1.000000, min: 1.000000, max: 2.000000, sd: 0.171794 | ||

| + | * Load_Amps (1752 jobs): | ||

| + | Runtime: mean 866.063927, median: 889.000000, min: 315.000000, max: 1854.000000, sd: 417.499023 | ||

| + | Attempts: mean 1.101598, median: 1.000000, min: 1.000000, max: 19.000000, sd: 1.149229 | ||

| + | * PostAWP_PostAWP (1771 jobs), 2 nodes: | ||

| + | Runtime: mean 1650.480519, median: 1555.000000, min: 482.000000, max: 3573.000000, sd: 352.539321 | ||

| + | Attempts: mean 1.039526, median: 1.000000, min: 1.000000, max: 14.000000, sd: 0.483046 | ||

| + | * PreAWP_GPU (886 jobs): | ||

| + | Runtime: mean 310.959368, median: 276.000000, min: 158.000000, max: 763.000000, sd: 105.501674 | ||

| + | Attempts: mean 1.022573, median: 1.000000, min: 1.000000, max: 4.000000, sd: 0.169811 | ||

| + | * PreCVM_PreCVM (887 jobs): | ||

| + | Runtime: mean 97.491545, median: 90.000000, min: 72.000000, max: 274.000000, sd: 20.336395 | ||

| + | Attempts: mean 1.069899, median: 1.000000, min: 1.000000, max: 5.000000, sd: 0.367303 | ||

| + | * PreSGT_PreSGT (886 jobs), 8 nodes: | ||

| + | Runtime: mean 207.305869, median: 196.000000, min: 158.000000, max: 576.000000, sd: 45.213291 | ||

| + | Attempts: mean 1.033860, median: 1.000000, min: 1.000000, max: 5.000000, sd: 0.220261 | ||

| + | * SetJobID_SetJobID (146 jobs): | ||

| + | Runtime: mean 0.034247, median: 0.000000, min: 0.000000, max: 2.000000, sd: 0.216269 | ||

| + | Attempts: mean 1.027397, median: 1.000000, min: 1.000000, max: 2.000000, sd: 0.163238 | ||

| + | * SetPPHost (144 jobs): | ||

| + | Runtime: mean 0.027778, median: 0.000000, min: 0.000000, max: 2.000000, sd: 0.202225 | ||

| + | Attempts: mean 1.000000, median: 1.000000, min: 1.000000, max: 1.000000, sd: 0.000000 | ||

| + | * Smooth_Smooth (886 jobs), 4 nodes: | ||

| + | Runtime: mean 981.109481, median: 843.000000, min: 321.000000, max: 3236.000000, sd: 615.348535 | ||

| + | Attempts: mean 1.069977, median: 1.000000, min: 1.000000, max: 10.000000, sd: 0.630007 | ||

| + | * UCVMMesh_UCVMMesh (886 jobs), 96 nodes: | ||

| + | Runtime: mean 557.007901, median: 671.500000, min: 57.000000, max: 2829.000000, sd: 544.539803 | ||

| + | Attempts: mean 1.038375, median: 1.000000, min: 1.000000, max: 5.000000, sd: 0.257386 | ||

| + | * UpdateRun_UpdateRun (3526 jobs): | ||

| + | Runtime: mean 0.031764, median: 0.000000, min: 0.000000, max: 38.000000, sd: 0.684162 | ||

| + | Attempts: mean 1.011628, median: 1.000000, min: 1.000000, max: 3.000000, sd: 0.109818 | ||

| + | |||

| + | * create_dir (10440 jobs): | ||

| + | Runtime: mean 3.776724, median: 2.000000, min: 2.000000, max: 1158.000000, sd: 18.502978 | ||

| + | Attempts: mean 1.044540, median: 1.000000, min: 1.000000, max: 4.000000, sd: 0.305174 | ||

| + | * cleanup_AWP (1472 jobs): | ||

| + | Runtime: mean 2.000000, median: 2.000000, min: 2.000000, max: 2.000000, sd: 0.000000 | ||

| + | Attempts: mean 1.004076, median: 1.000000, min: 1.000000, max: 2.000000, sd: 0.063714 | ||

| + | * clean_up (3452 jobs): | ||

| + | Runtime: mean 116.827057, median: 182.000000, min: 4.000000, max: 570.000000, sd: 94.090323 | ||

| + | Attempts: mean 5.653824, median: 9.000000, min: 1.000000, max: 42.000000, sd: 4.153044 | ||

| + | * register_bluewaters (731 jobs): | ||

| + | Runtime: mean 1015.307798, median: 970.000000, min: 443.000000, max: 2158.000000, sd: 389.140327 | ||

| + | Attempts: mean 1.222982, median: 1.000000, min: 1.000000, max: 19.000000, sd: 1.660952 | ||

| + | * register_titan (1018 jobs): | ||

| + | Runtime: mean 144.419450, median: 1.000000, min: 1.000000, max: 2085.000000, sd: 385.777688 | ||

| + | Attempts: mean 1.001965, median: 1.000000, min: 1.000000, max: 2.000000, sd: 0.044281 | ||

| + | * stage_inter (887 jobs): | ||

| + | Runtime: mean 4.908681, median: 4.000000, min: 4.000000, max: 662.000000, sd: 22.117336 | ||

| + | Attempts: mean 1.029312, median: 1.000000, min: 1.000000, max: 2.000000, sd: 0.168680 | ||

| + | * stage_in (4988 jobs): | ||

| + | Runtime: mean 14.967322, median: 5.000000, min: 4.000000, max: 187.000000, sd: 17.389459 | ||

| + | Attempts: mean 1.033681, median: 1.000000, min: 1.000000, max: 9.000000, sd: 0.289603 | ||

| + | * stage_out (1759 jobs): | ||

| + | Runtime: mean 1244.735645, median: 1061.000000, min: 210.000000, max: 34206.000000, sd: 1652.158490 | ||

| + | Attempts: mean 1.085844, median: 1.000000, min: 1.000000, max: 22.000000, sd: 0.758517 | ||

| + | |||

| + | |||

| + | === Study 17.3b === | ||

| + | |||

| + | Study 17.3b began at 13:27:11 PDT on May 16, 2017 and finished at 6:32:25 on June 8, 2017. | ||

| + | |||

| + | Since this is just a sub-study, we will only calculate a few metrics. | ||

| + | |||

| + | *Wallclock time: 281.1 hrs | ||

| + | *Resources used: 796,696 SUs on Titan + 79,117.6 node-hrs on Blue Waters (72,467.8 XE, 6,649.8 XK) | ||

| + | *116 sites | ||

| + | *14 sites with SGTs on Blue Waters, 102 on Titan | ||

== Production Checklist == | == Production Checklist == | ||

| Line 161: | Line 769: | ||

*<s>Check CISN stations; preference for broadband and PG&E stations</s> | *<s>Check CISN stations; preference for broadband and PG&E stations</s> | ||

*<s>Add DBCN to station list.</s> | *<s>Add DBCN to station list.</s> | ||

| − | |||

*<s>Install 1D model in UCVM 15.10.0 on Blue Waters and Titan</s>. | *<s>Install 1D model in UCVM 15.10.0 on Blue Waters and Titan</s>. | ||

*<s>Decide on 3D velocity model to use.</s> | *<s>Decide on 3D velocity model to use.</s> | ||

| − | *Upgrade Condor on shock to v8.4.8. | + | *<s>Upgrade Condor on shock to v8.4.8.</s> |

| − | * | + | *<s>Generate test hazard curves for 1D and 3D velocity models for 3 overlapping box sites</s> |

| − | + | *<s>Run the same site on both Blue Waters and Titan.</s> | |

| − | *Generate test hazard curves for 1D and 3D velocity models for 3 central CA sites with and without | + | *<s>Generate test hazard curves for 1D and 3D velocity models for 3 central CA sites.</s> |

| − | *Confirm test results with science group. | + | *<s>Calculate hazard curves for sites which include northern SAF events inside 200 km cutoff, with and without those events.</s> |

| − | *Determine CyberShake volume for corner points in Central CA region, and if we need to modify the 200 km cutoff. | + | *<s>Confirm test results with science group.</s> |

| − | *Modify submit job on shock to distribute end-to-end workflows between Blue Waters and Titan. | + | *<s>Determine CyberShake volume for corner points in Central CA region, and if we need to modify the 200 km cutoff.</s> |

| − | *Add new velocity models into CyberShake database. | + | *<s>Modify submit job on shock to distribute end-to-end workflows between Blue Waters and Titan.</s> |

| − | *Create XML file describing study for web monitoring tool | + | *<s>Add new velocity models into CyberShake database.</s> |

| − | *Add new sites to database. | + | *<s>Create XML file describing study for web monitoring tool</s> |

| − | *Determine size of | + | *<s>Add new sites to database.</s> |

| − | *Create Blue Waters and Titan daily cronjobs to monitor quota. | + | *<s>Determine size and length of jobs.</s> |

| − | *Check scalability of reformat-awp-mpi code. | + | *<s>Create Blue Waters and Titan daily cronjobs to monitor quota.</s> |

| − | *Test rvgahp server on Rhea for PostSGT and MD5 jobs. | + | *<s>Check scalability of reformat-awp-mpi code.</s> |

| − | *Edit submission script to disable MD5 checks if we are submitting an integrated workflow to 1 system. | + | *<s>Test rvgahp server on Rhea for PostSGT and MD5 jobs.</s> |

| − | *Upgrade Pegasus on shock to 4.6.2. | + | *<s>Edit submission script to disable MD5 checks if we are submitting an integrated workflow to 1 system.</s> |

| + | *<s>Upgrade Pegasus on shock to 4.6.2.</s> | ||

| + | *<s>Add duration calculation to DirectSynth.</s> | ||

| + | *<s>Verify DirectSynth duration calculation.</s> | ||

| + | *<s>Reduce DirectSynth output.</s> | ||

| + | *<s>Generate hazard maps for region using GMPEs with and without 200 km cutoff.</s> | ||

| + | *Tag code in repository. | ||

| + | *<s>Integrate version of rupture variation generator in the BBP into CyberShake.</s> | ||

| + | *<s>Add new rupture variation generator to DB.</s> | ||

| + | *<s>Populate DB with new rupture variations.</s> | ||

| + | *<s>Check that monitord is being populated to sqlite databases.</s> | ||

| + | *<s>Change curve calc script to use NGA-2s.</s> | ||

| + | *<s>Add database argument to all OpenSHA jobs.</s> | ||

| + | *<s>Test running rvgahp daemon on dtn nodes.</s> | ||

| + | *<s>Verify with Rob that we should be using multi-segment version of rupture variation generator.</s> | ||

| + | *<s>Look at applying concurrency limits to transfer jobs.</s> | ||

| + | *<s>Ask HPC staff if we should use hpc-transfer instead of hpc-scec.</s> | ||

| + | *<s>Switch workflow to using hpc-transfer.</s> | ||

| + | *<s>Added check in case NZ is not a multiple of BLOCK_SIZE_Z</s> | ||

| + | *<s>Check using northern SAF seismogram that study NT is large enough.</s> | ||

| + | *<s>Verify that Titan and Blue Waters codebases are in sync with each other and the repo.</s> | ||

| + | *<s>Test running rvgahp server on DTN node</s> | ||

| + | *<s>Prepare pending file</s> | ||

| + | *<s>Grab usage stats from Titan and Blue Waters</s> | ||

| + | *Update compute estimates | ||

| + | |||

| + | == Presentations, Posters, and Papers == | ||

| + | |||

| + | *[http://hypocenter.usc.edu/research/BlueWaters/SCEC_NCSA_OLCF_CyberShake_Review.pdf CyberShake Study 16.9 Study Coordination Planning] | ||

| + | * [http://hypocenter.usc.edu/research/cybershake/study_16_9/Study_17_3_Science_Readiness_Review.pptx Science Readiness Review] | ||

| + | |||

| + | == Related Entries == | ||

| + | *[[Hadley-Kanamori]] | ||

| + | *[[UCVM]] | ||

| + | *[[CyberShake]] | ||

| + | *[[1D Model Development]] | ||

Latest revision as of 19:36, 5 December 2023

CyberShake Study 17.3 is a computational study to calculate 2 CyberShake hazard models - one with a 1D velocity model, one with a 3D - at 1 Hz in a new region, CyberShake Central California. We will use the GPU implementation of AWP-ODC-SGT, the Graves & Pitarka (2014) rupture variations with 200m spacing and uniform hypocenters, and the UCERF2 ERF. The SGT and post-processing calculations will both be run on both NCSA Blue Waters and OLCF Titan.

Contents

- 1 Status

- 2 Data Products

- 3 Study 17.3b

- 4 Science Goals

- 5 Technical Goals

- 6 Sites

- 7 Velocity Models

- 8 Verification

- 9 Performance Enhancements (over Study 15.4)

- 10 Lessons Learned

- 11 Codes

- 12 Output Data Products

- 13 Computational and Data Estimates

- 14 Performance Metrics

- 15 Production Checklist

- 16 Presentations, Posters, and Papers

- 17 Related Entries

Status

Study 17.3 began on March 6, 2017 at 12:14:10 PST.

Study 17.3 completed on April 6, 2017 at 7:37:16 PDT.

Data Products

Hazard maps from Study 17.3 are available here: Study 17.3 Data Products

Hazard curves produced from Study 17.3 using the CCA 1D model have dataset ID=80, and with the CCA-06 3D model dataset ID=81.

Individual runs can be identified in the CyberShake database by searching for runs with Study_ID=8.

Study 17.3b

After the conclusion of Study 17.3, we had additional resources on Blue Waters, so we selected 58 additional sites to perform 1D and 3D calculations on. A KML file with these sites is available with names or without names. The site locations are shown in green on the image below, along with the 438 Study 17.3 sites.

Science Goals

The science goals for Study 17.3 are:

- Expand CyberShake to include Central California sites.

- Create CyberShake models using both a Central California 1D velocity model and a 3D model (CCA-06).

- Calculate hazard curves for population centers, seismic network sites, and electrical and water infrastructure sites.

Technical Goals

The technical goals for Study 17.3 are:

- Run end-to-end CyberShake workflows on Titan, including post-processing.

- Show that the database migration improved database performance.

Sites

We will run a total of 438 sites as part of Study 17.3. A KML file of these sites, along with the Central and Southern California boxes, is available here (with names) or here (without names).

We created a Central California CyberShake box, defined here.

We have identified a list of 408 sites which fall within the box and outside of the CyberShake Southern California box. These include:

- 310 sites on a 10 km grid

- 54 CISN broadband or PG&E stations, decimated so they are at least 5 km apart, and no closer than 2 km from another station.

- 30 cities used by the USGS in locating earthquakes

- 4 PG&E pumping stations

- 6 historic Spanish missions

- 4 OBS stations

In addition, we will include 30 sites which overlap with the Southern California box (24 on the 10 km grid, 5 on the 5 km grid, and 1 SCSN station), enabling direct comparison of results.

We will prioritize the pumping stations and the overlapping sites.
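As a quick cross-check of the site counts listed above, the following sketch tallies the categories. The dictionary keys are illustrative labels only, not identifiers from the CyberShake database.

```python
# Site counts copied from the lists above; keys are illustrative labels.
central_only = {
    "10 km grid": 310,
    "CISN broadband or PG&E stations": 54,
    "USGS cities": 30,
    "PG&E pumping stations": 4,
    "historic Spanish missions": 6,
    "OBS stations": 4,
}
# Sites that overlap with the Southern California box.
overlap = {"10 km grid": 24, "5 km grid": 5, "SCSN": 1}

central_total = sum(central_only.values())
overlap_total = sum(overlap.values())
study_total = central_total + overlap_total

print(central_total, overlap_total, study_total)
```

The three totals should agree with the 408, 30, and 438 sites quoted in the text.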

Velocity Models

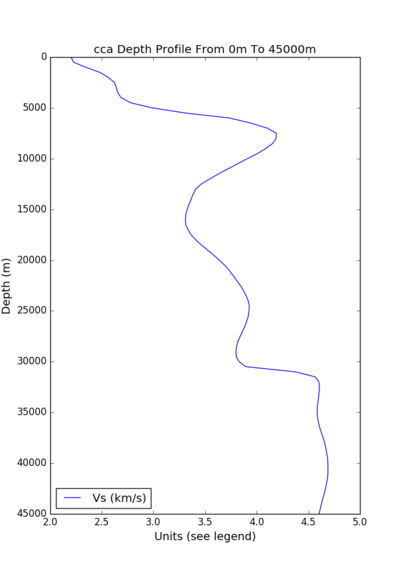

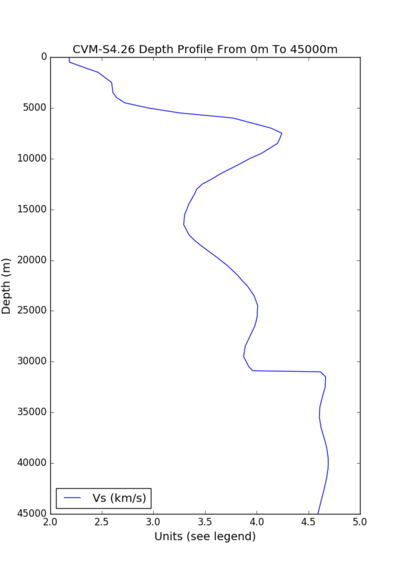

We are planning to use 2 velocity models in Study 17.3. We will enforce a Vs minimum of 900 m/s, a minimum Vp of 1600 m/s, and a minimum rho of 1600 kg/m^3.

- CCA-06, a 3D model created via tomographic inversion by En-Jui Lee. This model has no GTL. Our order of preference will be:

  - CCA-06

  - CVM-S4.26

  - SCEC background 1D model

- CCA-1D, a 1D model created by averaging CCA-06 throughout the Central California region.

We will run the 1D and 3D model concurrently.
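The order of preference and the minimum values described above can be illustrated with a minimal sketch. It assumes that a point is taken from the first model in the preference list that covers it, and that each floor is applied independently; the production mesh code may differ (for example, it might adjust Vp when Vs is clamped). The `toy_*` functions and their coverage tests are hypothetical stand-ins, not the real models or UCVM calls.

```python
from typing import Callable, Optional, Sequence, Tuple

Props = Tuple[float, float, float]  # (Vp in m/s, Vs in m/s, rho in kg/m^3)
Model = Callable[[float, float, float], Optional[Props]]

# Floors quoted in the text above.
VP_MIN, VS_MIN, RHO_MIN = 1600.0, 900.0, 1600.0


def query_with_fallback(models: Sequence[Tuple[str, Model]],
                        x: float, y: float, depth_m: float) -> Tuple[str, Props]:
    """Return (model name, properties) from the first model covering the point."""
    for name, model in models:
        props = model(x, y, depth_m)
        if props is not None:
            return name, props
    raise ValueError("no model covers this point")


def apply_floors(props: Props) -> Props:
    """Clamp each property independently (an assumption of this sketch)."""
    vp, vs, rho = props
    return max(vp, VP_MIN), max(vs, VS_MIN), max(rho, RHO_MIN)


# Hypothetical stand-ins: coverage is decided by a made-up x coordinate,
# and the returned values are placeholders, not real model output.
def toy_cca06(x, y, depth_m):
    return (1500.0, 400.0, 1400.0) if x < 0.0 else None


def toy_cvms426(x, y, depth_m):
    return (3000.0, 1500.0, 2200.0) if x < 1.0 else None


def toy_background_1d(x, y, depth_m):
    return (5000.0, 2900.0, 2700.0)  # covers every point


ORDER = [("CCA-06", toy_cca06),
         ("CVM-S4.26", toy_cvms426),
         ("SCEC background 1D", toy_background_1d)]

name, raw = query_with_fallback(ORDER, -0.5, 0.0, 0.0)
print(name, apply_floors(raw))
```

Keeping the lookup and the clamping as separate steps makes it easy to change either the model order or the floor values without touching the other.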

Verification

Since we are moving to a new region, we calculated GMPE maps for this region, available here: Central California GMPE Maps

As part of our verification work, we plan to do runs using both the 1D and 3D model for the following 3 sites in the overlapping region:

- s001

- OSI

- s169

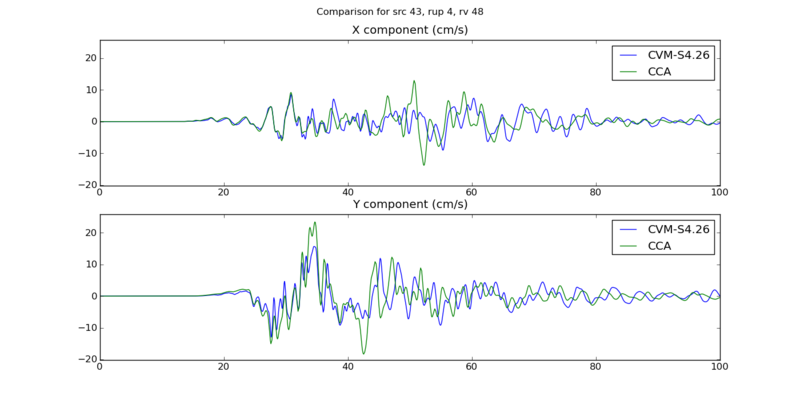

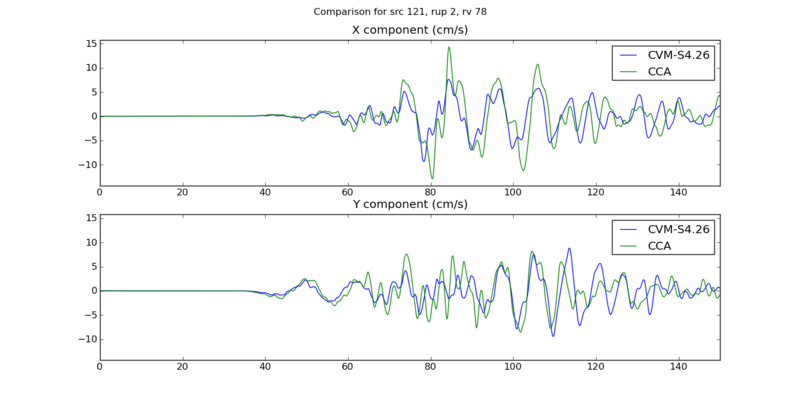

Blue Waters/Titan Verification

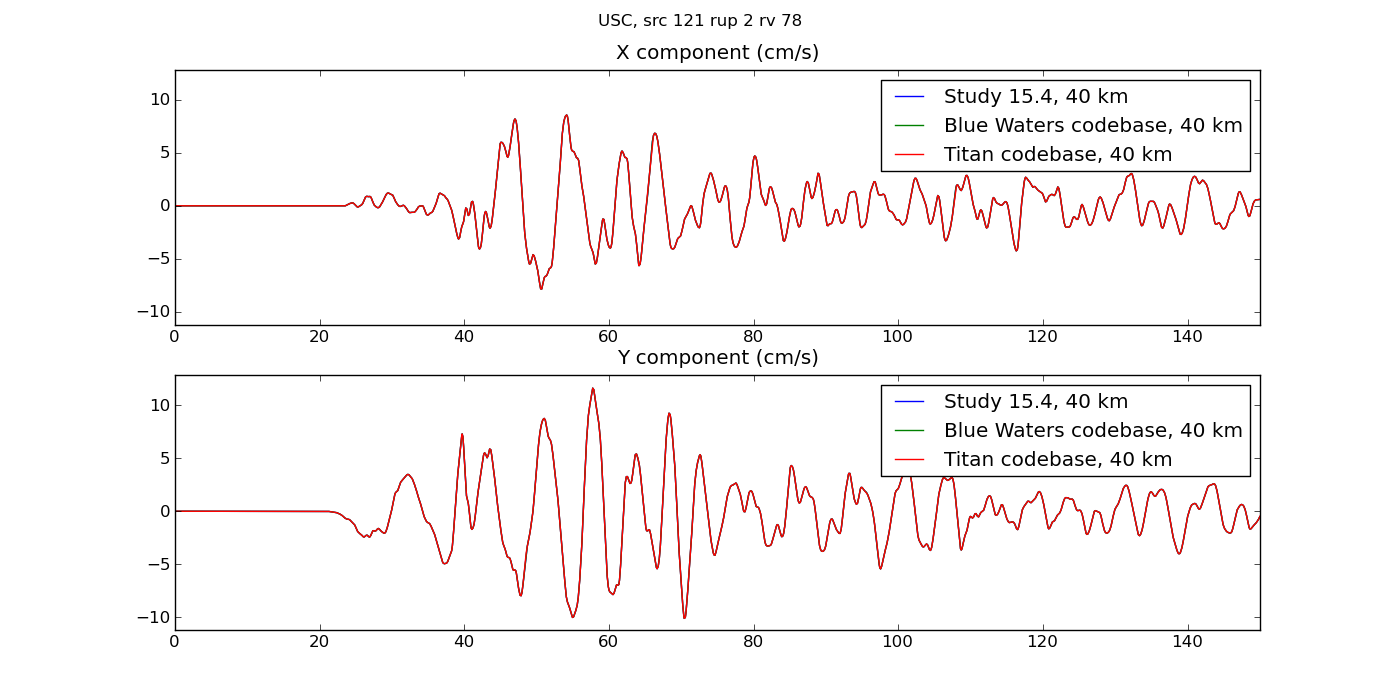

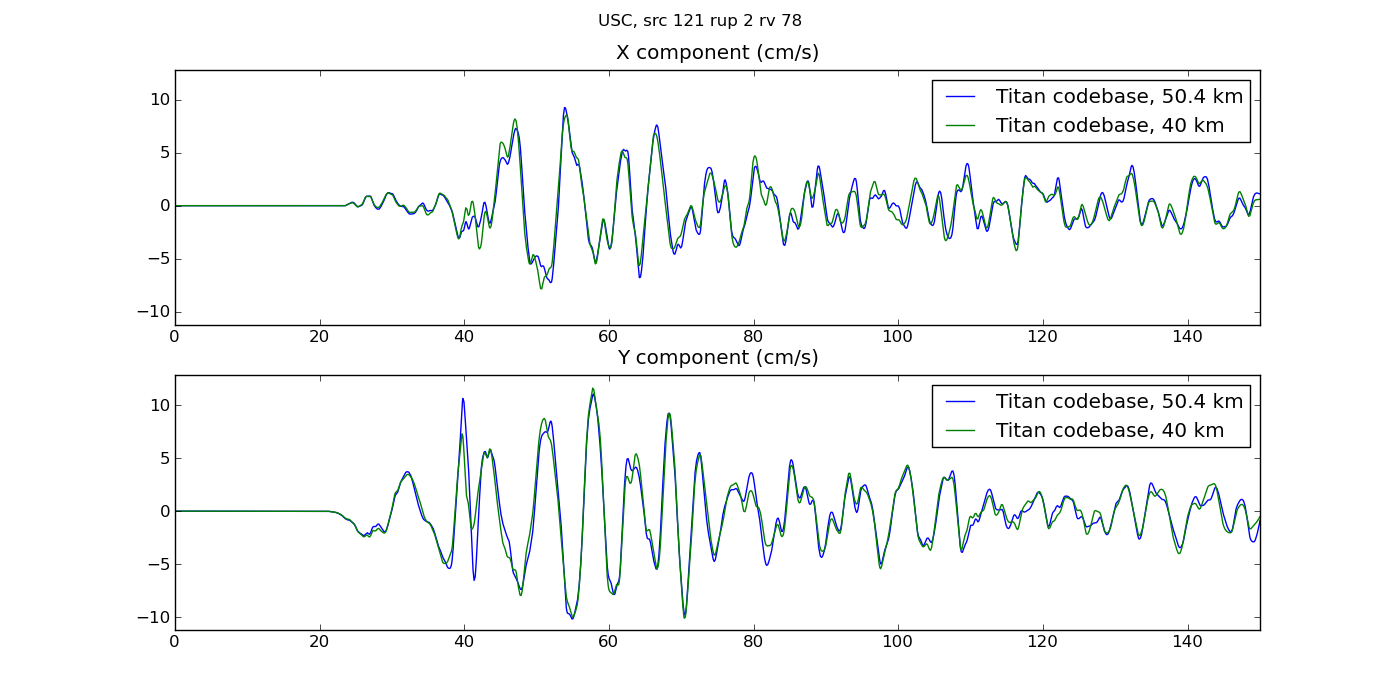

We ran s001 on both Blue Waters and Titan. Hazard curves are below, and very closely match:

Below are two seismograms, which also closely match:

NT check

To verify that NT for the study (5000 timesteps = 437.5 sec) is long enough, I extracted a northern SAF seismogram for s1252, one of the southernmost sites to include northern SAF events. The seismogram is below; it tapers off around 350 seconds.
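The arithmetic behind this check can be sketched as follows. The timestep is derived from the figures above (437.5 sec over 5000 timesteps), and the 350 sec taper time is the approximate value read off the seismogram, so the margin is only indicative.

```python
import math

NT = 5000          # seismogram timesteps, from the text
DT_S = 0.0875      # seismogram timestep in seconds (437.5 s / 5000 steps)
TAPER_S = 350.0    # approximate time at which the s1252 seismogram tapers off

duration_s = NT * DT_S                  # total simulated duration
margin_s = duration_s - TAPER_S         # time left after the signal has died down
margin_fraction = margin_s / duration_s

print(f"duration = {duration_s:.1f} s, margin = {margin_s:.1f} s "
      f"({margin_fraction:.0%} of the record)")
```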

Study 15.4 Verification

We ran the USC site through the Study 16.9 code base on both Blue Waters and Titan with the Study 15.4 parameters. Hazard curves are below, and very closely match:

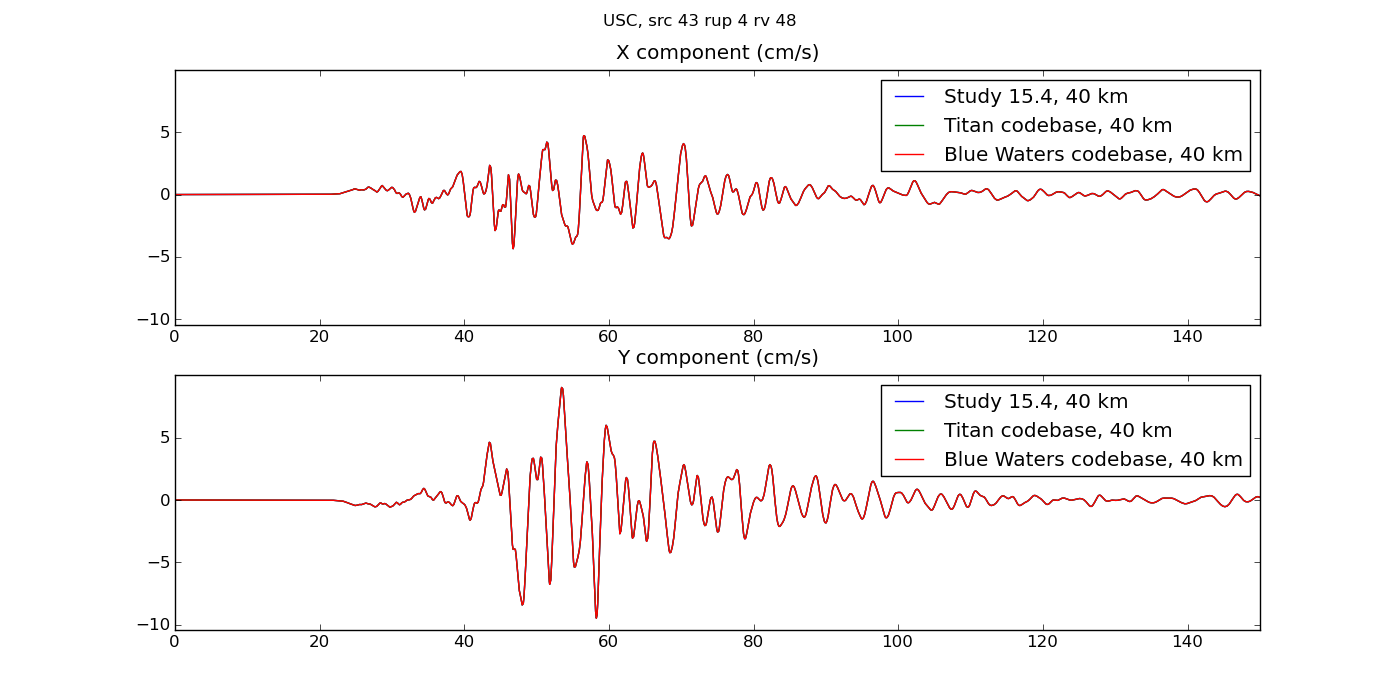

Plots of the seismograms show excellent agreement:

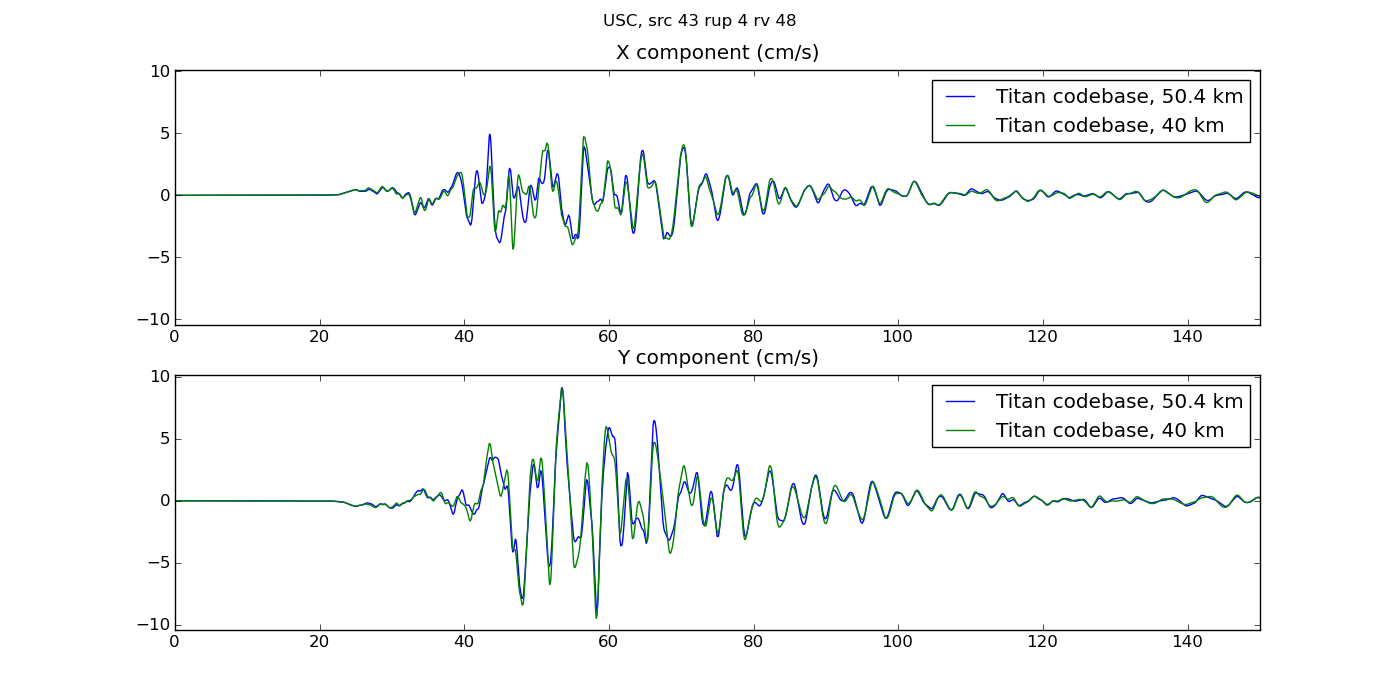

We accidentally ran with a depth of 50.4 km first. Here are seismogram plots illustrating the difference between running SGTs with depth of 40 km vs 50.4 km.

Velocity Model Verification

Cross-section plots of the velocity models are available here.

200 km cutoff effects

We are investigating the impact of the 200 km cutoff as it pertains to including/excluding northern SAF events. This is documented here: CCA N SAF Tests.

Impulse difference

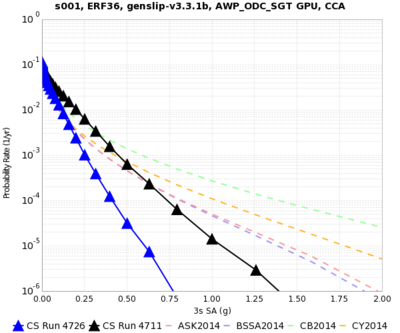

Here's a curve comparison showing the impact of fixing the impulse for s001 at 3, 5, and 10 sec.

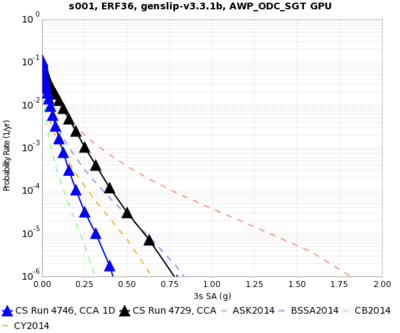

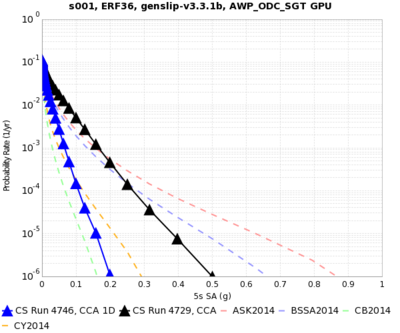

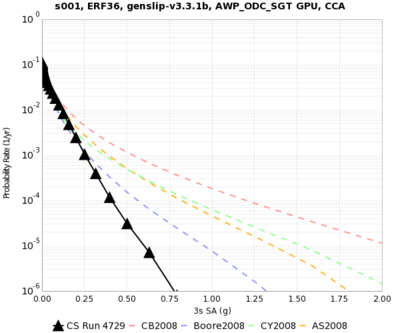

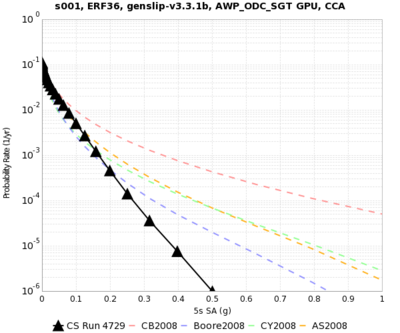

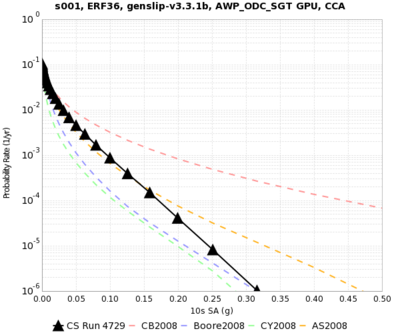

Curve Results

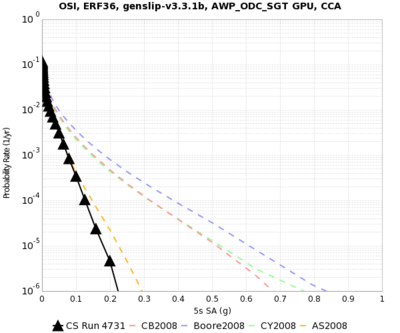

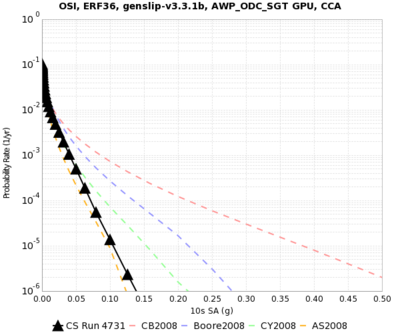

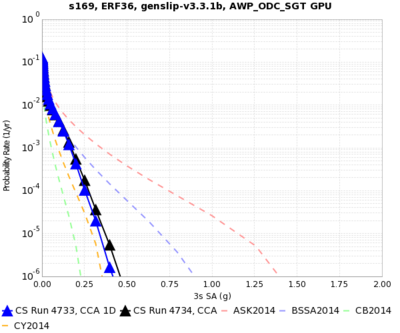

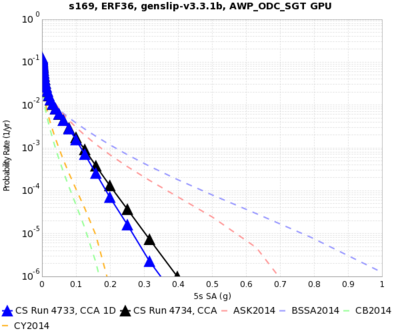

| site | velocity model | 3 sec SA | 5 sec SA | 10 sec SA |
|---|---|---|---|---|
| s001 | 1D | | | |
| | 3D | | | |
| OSI | 1D | | | |
| | 3D | | | |
| s169 | 1D | | | |
| | 3D | | | |

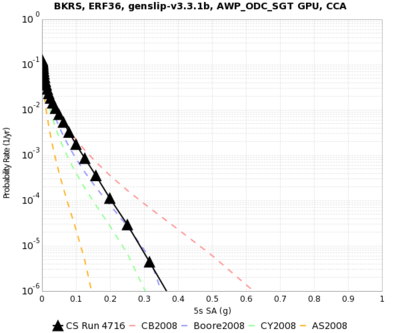

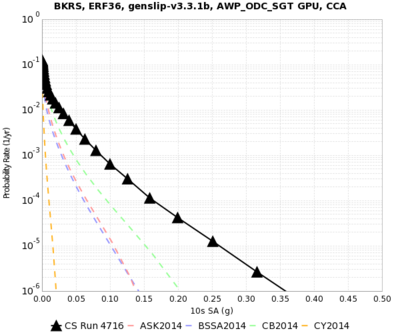

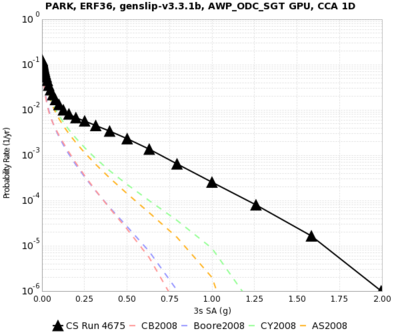

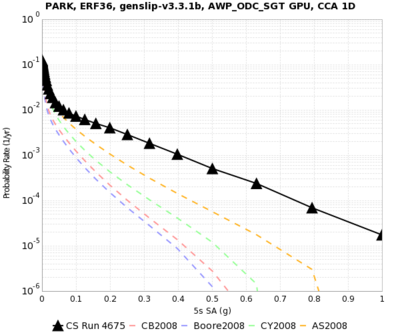

These results were calculated with the incorrect impulse.

| site | velocity model | 3 sec SA | 5 sec SA | 10 sec SA |
|---|---|---|---|---|
| BKRS | 1D | | | |
| | 3D | | | |
| SBR | 1D | | | |
| | 3D | | | |
| PARK | 1D | | | |
| | 3D | | | |

Velocity Profiles

| site | CCA profile (min Vs=900 m/s) | CVM-S4.26 profile (min Vs=500 m/s) |
|---|---|---|
| s001 | | |
| OSI | | |
| s169 | | |

Seismogram plots

Below are plots comparing results from Study 15.4 to test results, which differ in velocity model, Vs min cutoff, dt, and grid spacing. We've selected two events: source 43, rupture 4, rupture variation 48, a M7.05 southern SAF event, and source 121, rupture 2, rupture variation 78, a M7.65 San Jacinto event.

| site | San Andreas event | San Jacinto event |
|---|---|---|
| s001 | | |
| OSI | | |
| s169 | | |

Rupture Variation Generator v5.2.3 (Graves & Pitarka 2015)

Plots related to verifying the rupture variation generator intended for use in this study are available here: Rupture Variation Generator v5.2.3 Verification.

After review, we decided to stick with v3.3.1 for this study.

Performance Enhancements (over Study 15.4)

Responses to Study 15.4 Lessons Learned

* Some of the DirectSynth jobs couldn't fit their SGTs into the number of SGT handlers, nor finish in the wallclock time. In the future, test against a larger range of volumes and sites.

We aren't quite sure which site will produce the largest volume, so we will take the largest volume produced among the test sites and add 10% when choosing DirectSynth job sizes.

* Some of the cleanup jobs aren't fully cleaning up.

We have had difficulty reproducing this in a non-production environment. We will add a cron job to send a daily email with quota usage, so we'll know if we're nearing quota.

* On Titan, when a pilot job doesn't complete successfully, the dependent pilot jobs remain in a held state. This isn't reflected in qstat, so a quick look doesn't show that some of these jobs are being held and will never run. Additionally, I suspect that pilot jobs exit with a non-zero exit code when there's a pile-up of workflow jobs, and some try to sneak in after the first set of workflow jobs runs on the pilot jobs, meaning that the job gets kicked out for exceeding wallclock time. We should address this next time.

We're not going to use pilot jobs this time, so it won't be an issue.

* On Titan, a few of the PostSGT and MD5 jobs didn't finish in the 2 hours, so they had to be run by hand on Rhea, which has a longer permitted wallclock time. We should think about moving these kinds of processing jobs to Rhea in the future.

The SGTs for Study 16.9 will be smaller, so these jobs should finish faster. PostSGT has two components, reformatting the SGTs and generating the headers. By increasing from 2 nodes to 4, we can decrease the SGT reformatting time to about 15 minutes, and the header generation also takes about 15 minutes. We investigated setting up the workflow to run the PostSGT and MD5 jobs only on Rhea, but had difficulty getting the rvgahp server working there. Reducing the runtime of the SGT reformatting and separating out the MD5 sum should fix this issue for this study.

* When we went back to do CyberShake Study 15.12, we discovered that it was common for a small number of seismogram files in many of the runs to have an issue wherein some rupture variation records were repeated. We should more carefully check the code in DirectSynth responsible for writing and confirming correctness of output files, and possibly add a way to delete and recreate RVs with issues.

We've fixed the bug that caused this in DirectSynth. We have changed the insertion code to abort if duplicates are detected.
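The abort-on-duplicate behavior described above might look roughly like the sketch below. It assumes each seismogram record can be keyed by a (source ID, rupture ID, rupture variation ID) triple; the function and exception names are hypothetical and are not taken from the actual insertion code.

```python
from typing import Iterable, Tuple

RecordKey = Tuple[int, int, int]  # (source ID, rupture ID, rupture variation ID)


class DuplicateRecordError(Exception):
    """Raised when the same rupture variation appears more than once."""


def check_no_duplicates(keys: Iterable[RecordKey]) -> int:
    """Return the number of unique records, aborting on the first repeat."""
    seen = set()
    for key in keys:
        if key in seen:
            raise DuplicateRecordError(f"duplicate rupture variation record: {key}")
        seen.add(key)
    return len(seen)


# Two distinct records pass; repeating one of them would raise.
print(check_no_duplicates([(43, 4, 48), (121, 2, 78)]))
```

Raising on the first repeat, rather than silently dropping it, keeps a corrupted seismogram file from being partially inserted.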

Workflows

- We will run CyberShake workflows end-to-end on Titan, using the RVGAHP approach with Condor rather than pilot jobs.

- We will bypass the MD5sum check at the start of post-processing if the SGT and post-processing are being run back-to-back on the same machine.

Database

- We have migrated data from past studies off the production database, which we hope will improve database performance relative to Study 15.12.

Lessons Learned

- Include plots of velocity models as part of readiness review when moving to new regions.

- Formalize process of creating impulse. Consider creating it as part of the workflow based on nt and dt.

- Many jobs were not picked up by the reservation, and as a result reservation nodes were idle. Work harder to make sure reservation is kept busy.

- Forgot to turn on monitord during workflow, so had to deal with statistics after the workflow was done. Since we're running far fewer jobs, it's fine to run monitord population during the workflow.

- In Study 17.3b, 2 of the runs (5765 and 5743) had a problem with their output, which left 'holes' of lower hazard on the 1D map. Looking closely, we discovered that the SGT X component of run 5765 was about 30 GB smaller than it should have been, likely causing issues when the seismograms were synthesized. We no longer had the SGTs from 5743, so we couldn't verify that the same problem happened here. Moving forward, include checks on SGT file size as part of the nan check.
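A minimal sketch of the kind of file-size check suggested in the last item, assuming the expected size of each SGT component file is known in advance. The function names and the 1% tolerance are hypothetical and are not part of the CyberShake codes; the 216 GB and 30 GB figures are used only as illustrative inputs.

```python
import os


def size_within_tolerance(actual_bytes, expected_bytes, rel_tol=0.01):
    """Return True if actual_bytes is within rel_tol of expected_bytes."""
    return abs(actual_bytes - expected_bytes) <= rel_tol * expected_bytes


def check_sgt_file(path, expected_bytes, rel_tol=0.01):
    """Compare the on-disk size of an SGT file to its expected size.

    The expected size is an input here; how it is derived (for example
    from the number of SGT points and timesteps) is left to the caller.
    """
    return size_within_tolerance(os.path.getsize(path), expected_bytes, rel_tol)


# Illustrative numbers only: a file about 30 GB short of a 216 GB target.
GB = 10**9
truncated_ok = size_within_tolerance(186 * GB, 216 * GB)
complete_ok = size_within_tolerance(216 * GB, 216 * GB)
print("truncated file passes:", truncated_ok)
print("complete file passes:", complete_ok)
```

A check like this could run alongside the nan check, so that a truncated SGT is caught before seismogram synthesis starts.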

Codes

Output Data Products

Below is a table of planned output products, both what we plan to compute and what we plan to put in the database.

| Type of product | Periods/subtypes computed and saved in files | Periods/subtypes inserted in database |
|---|---|---|
| PSA | 88 values: X and Y components at 44 periods: 10, 9.5, 9, 8.5, 8, 7.5, 7, 6.5, 6, 5.5, 5, 4.8, 4.6, 4.4, 4.2, 4, 3.8, 3.6, 3.4, 3.2, 3, 2.8, 2.6, 2.4, 2.2, 2, 1.66667, 1.42857, 1.25, 1.11111, 1, .66667, .5, .4, .33333, .285714, .25, .22222, .2, .16667, .142857, .125, .11111, .1 sec | 4 values: geometric mean for 4 periods: 10, 5, 3, 2 sec |
| RotD | 44 values: RotD100, RotD50, and RotD50 angle for 22 periods: 1.0, 1.2, 1.4, 1.5, 1.6, 1.8, 2.0, 2.2, 2.4, 2.6, 2.8, 3.0, 3.5, 4.0, 4.4, 5.0, 5.5, 6.0, 6.5, 7.5, 8.5, 10.0 sec | 12 values: RotD100, RotD50, and RotD50 angle for 6 periods: 10, 7.5, 5, 4, 3, 2 sec |
| Durations | 18 values: for X and Y components, energy integral, Arias intensity, cumulative absolute velocity (CAV), and for both velocity and acceleration, 5-75%, 5-95%, and 20-80%. | None |
| Hazard Curves | N/A | 16 curves: geometric mean: 10, 5, 3, 2 sec; RotD100: 10, 7.5, 5, 4, 3, 2 sec; RotD50: 10, 7.5, 5, 4, 3, 2 sec |

Computational and Data Estimates

Computational Time

Since we are using a min Vs=900 m/s, we will use a grid spacing of 175 m, with dt=0.00875 sec and nt=23000 in the SGT simulation (and dt=0.0875 sec in the seismogram synthesis).

For computing these estimates, we are using a volume of 420 km x 1160 km x 50 km, or 2400 x 6630 x 286 grid points. This is about 4.5 billion grid points, approximately half the size of the Study 15.4 typical volume. We will run the SGTs on 160-200 GPUs.
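As a quick check on the volume size, the grid dimensions can be recomputed from the physical extent and the 175 m spacing. This is a sketch for verification only; the variable names are ours. The quoted 6630 is slightly larger than the raw value of 1160 km / 0.175 km, presumably because it was rounded up to a convenient size.

```python
import math

GRID_SPACING_KM = 0.175          # 175 m grid spacing
VOLUME_KM = (420, 1160, 50)      # physical extent of the volume
QUOTED_GRID = (2400, 6630, 286)  # grid dimensions quoted in the text

# Raw (unrounded) number of grid points along each axis.
raw_counts = [extent / GRID_SPACING_KM for extent in VOLUME_KM]

# Total grid points using the quoted dimensions.
total_points = math.prod(QUOTED_GRID)

for extent, raw, quoted in zip(VOLUME_KM, raw_counts, QUOTED_GRID):
    print(f"{extent} km -> {raw:.1f} raw, {quoted} quoted")
print(f"total: {total_points:,} grid points ({total_points / 1e9:.2f} billion)")
```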

We estimate that we will run 75% of the sites from each model on Blue Waters, and 25% on Titan. This is because we are charged less for Blue Waters sites (we are charged for the Titan GPUs even if we don't use them), and we have more time available on Blue Waters. However, we will use a dynamic approach during runtime, so the resulting numbers may differ.

Study 15.4 SGTs took 740 node-hours per component. From this, we assume:

750 node-hours x (4.5 billion grid points in 16.9 / 10 billion grid points in 15.4) x ( 23k timesteps in 16.9 / 40k timesteps in 15.4 ) ~ 200 node-hours per component for Study 16.9.

Study 15.4 post-processing took 40k core-hrs. From this, we assume:

40k core-hrs x ( 2.3k timesteps in 16.9 / 4k timesteps in 15.4 ) = 23k core-hrs = 720 node-hrs on Blue Waters, 1440 node-hrs on Titan.
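The two scaling estimates above can be reproduced with a few lines of arithmetic. This sketch uses the values exactly as they appear in the formulas. The cores-per-node figures (32 on Blue Waters, 16 on Titan) are assumptions taken from the node and core counts reported in the performance metrics on this page.

```python
# SGT estimate: scale Study 15.4 cost by grid points and timesteps.
SGT_NODE_HRS_15_4 = 750           # value used in the formula above
grid_ratio = 4.5e9 / 10e9         # grid points, 16.9 vs 15.4
sgt_timestep_ratio = 23_000 / 40_000
sgt_node_hrs = SGT_NODE_HRS_15_4 * grid_ratio * sgt_timestep_ratio

# Post-processing estimate: scale Study 15.4 cost by seismogram timesteps.
PP_CORE_HRS_15_4 = 40_000
pp_timestep_ratio = 2_300 / 4_000
pp_core_hrs = PP_CORE_HRS_15_4 * pp_timestep_ratio

# Assumed cores per node, used to convert core-hours to node-hours.
BW_CORES_PER_NODE = 32
TITAN_CORES_PER_NODE = 16
pp_bw_node_hrs = pp_core_hrs / BW_CORES_PER_NODE
pp_titan_node_hrs = pp_core_hrs / TITAN_CORES_PER_NODE

print(f"SGT: {sgt_node_hrs:.1f} node-hrs per component")
print(f"PP: {pp_core_hrs:.0f} core-hrs")
print(f"PP: {pp_bw_node_hrs:.1f} node-hrs (BW), {pp_titan_node_hrs:.1f} node-hrs (Titan)")
```

The raw results are a little under the rounded planning figures of 200, 720, and 1440 node-hours, so the estimates err on the conservative side.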

Because we're limited on Blue Waters node-hours, we will burn no more than 600K node-hrs. This should be enough to do 75% of the post-processing on Blue Waters. All the SGTs and the other post-processing will be done on Titan.

Titan

Pre-processing (CPU): 100 node-hrs/site x 876 sites = 87,600 node-hours.

SGTs (GPU): 400 node-hrs per site x 876 sites = 350,400 node-hours.

Post-processing (CPU): 1440 node-hrs per site x 219 sites = 315,360 node-hours.

Total: 28.3M SUs ((87,600 + 350,400 + 315,360) x 30 SUs/node-hr + 25% margin)

We have 91.7M SUs available on Titan.

Blue Waters

Post-processing (CPU): 720 node-hrs per site x 657 sites = 473,040 node-hours.

Total: 591.3K node-hrs ((473,040) + 25% margin)

We have 989K node-hrs available on Blue Waters.
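The Titan and Blue Waters totals follow directly from the per-site figures. A short sketch of the arithmetic, with variable names of our own choosing:

```python
TOTAL_RUNS = 876   # all runs (both velocity models)
TITAN_PP_RUNS = 219  # 25% of post-processing on Titan
BW_PP_RUNS = 657     # 75% of post-processing on Blue Waters
MARGIN = 1.25        # 25% margin
TITAN_SUS_PER_NODE_HR = 30

# Titan: pre-processing and SGTs for every run, post-processing for 25%.
titan_pre = 100 * TOTAL_RUNS
titan_sgt = 400 * TOTAL_RUNS
titan_pp = 1440 * TITAN_PP_RUNS
titan_node_hrs = titan_pre + titan_sgt + titan_pp
titan_sus = titan_node_hrs * TITAN_SUS_PER_NODE_HR * MARGIN

# Blue Waters: post-processing for 75% of runs.
bw_pp = 720 * BW_PP_RUNS
bw_node_hrs_with_margin = bw_pp * MARGIN

print(f"Titan: {titan_node_hrs:,} node-hrs -> {titan_sus / 1e6:.1f}M SUs")
print(f"Blue Waters: {bw_pp:,} node-hrs -> {bw_node_hrs_with_margin / 1e3:.1f}K with margin")
```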

Storage Requirements

We plan to calculate geometric mean, RotD values, and duration metrics for all seismograms. We will use Pegasus's cleanup capabilities to avoid exceeding quotas.

Titan

Purged space to store intermediate data products: (900 GB SGTs + 60 GB mesh + 900 GB reformatted SGTs)/site x 876 sites = 1591 TB

Purged space to store output data: (15 GB seismograms + 0.2 GB PSA + 0.2 GB RotD + 0.2 GB duration) x 219 sites = 3.3 TB

Blue Waters

Purged space to store intermediate data products: (900 GB SGTs)/site x 657 sites = 577 TB

Purged space to store output data: (15 GB seismograms + 0.2 GB PSA + 0.2 GB RotD + 0.2 GB duration) x 657 sites = 10.0 TB

SCEC

Archival disk usage: 13.3 TB seismograms + 0.1 TB PSA files + 0.1 TB RotD files + 0.1 TB duration files on scec-02 (has 109 TB free) & 24 GB curves, disaggregations, reports, etc. on scec-00 (109 TB free)

Database usage: (4 rows PSA [@ 2, 3, 5, 10 sec] + 12 rows RotD [RotD100 and RotD50 @ 2, 3, 4, 5, 7.5, 10 sec])/rupture variation x 500K rupture variations/site x 876 sites = 7 billion rows x 125 bytes/row = 816 GB (3.2 TB free on moment.usc.edu disk)

Temporary disk usage: 1 TB workflow logs. scec-02 has 94 TB free.
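The storage figures above can be cross-checked with the sketch below. It assumes 1 TB = 1024 GB and 1 GB = 1024^3 bytes, since that convention reproduces the quoted numbers. Note that the 1591 TB Titan intermediate figure corresponds to all 876 SGT runs (all SGTs are generated on Titan), while the output figures use the 219/657 post-processing split.

```python
GB_PER_TB = 1024  # assumption: binary units reproduce the quoted totals


def gb_to_tb(gigabytes):
    """Convert GB to TB using 1 TB = 1024 GB."""
    return gigabytes / GB_PER_TB


output_gb_per_site = 15 + 0.2 + 0.2 + 0.2  # seismograms + PSA + RotD + duration

titan_intermediate_tb = gb_to_tb((900 + 60 + 900) * 876)
titan_output_tb = gb_to_tb(output_gb_per_site * 219)
bw_intermediate_tb = gb_to_tb(900 * 657)
bw_output_tb = gb_to_tb(output_gb_per_site * 657)

# Database: 16 rows per rupture variation, 500K rupture variations per site.
db_rows = (4 + 12) * 500_000 * 876
db_gb = db_rows * 125 / 1024**3

print(f"Titan intermediate: {titan_intermediate_tb:.0f} TB, output: {titan_output_tb:.1f} TB")
print(f"Blue Waters intermediate: {bw_intermediate_tb:.0f} TB, output: {bw_output_tb:.1f} TB")
print(f"Database: {db_rows / 1e9:.1f} billion rows, {db_gb:.0f} GB")
```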

Performance Metrics

Usage

Just before starting, we grabbed the current project usage for Blue Waters and Titan to get accurate measures of the SUs burned.

Starting usage, Titan

Project usage = 4,313,662 SUs in 2017 (153,289 in March)

User callag used 1,089,050 SUs in 2017 (98,606 in March)

Starting usage, Blue Waters

'usage' command reports project PRAC_bahm used 11,571.4 node-hrs and ran 321 jobs.

'usage' command reports scottcal used 11,320.49 node-hrs on PRAC_bahm.

Ending usage, Titan

Project usage = 22,178,513 SUs in 2017 (12,586,176 in March, 5,431,964 in April)

User callag used 14,579,559 SUs in 2017 (10,128,109 in March, 3,461,006 in April)

Ending usage, Blue Waters

'usage' command reports 366,773.73 node-hrs used by PRAC_bahm.

'usage' command reports 366,504.84 node-hrs used by scottcal.

Reservations

4 reservations on Blue Waters (3 for 128 nodes, 1 for 124) began on 3/9/17 at 11:00:00 CST. These reservations expired after a week.

A 2nd set of reservations (same configuration) for 1 week started again on 3/16/17 at 22:00:00 CDT. We gave these reservations back on 3/20/17 at 11:14 CDT because shock went down and we couldn't keep them busy.

Another set of reservations started at 2:27 CDT on 3/29/17, but were revoked around 16:30 CDT on 3/30/17.

Events during Study

We requested and received a 5-day priority boost, 5 jobs running in bin 5, and 8 jobs eligible to run on Titan starting sometime in the morning of Sunday, March 12. This greatly increased our throughput.

In the evening of Wednesday, March 15, we increased GRIDMANAGER_MAX_JOBMANAGERS_PER_RESOURCE from 10 to 20 to increase the number of jobs in the Blue Waters queues.

Condor on shock was killed on 3/20/17 at 3:45 CDT. We started resubmitting workflows at 13:44 CDT.

In preparation for the USC downtime, we ran condor_off at 22:00 CDT on 3/27/17. We turned condor back on (condor_on) at 21:01 CDT on 3/29/17.

Blue Waters deleted the cron job, so we have no job statistics on Blue Waters from 3/25/17 at 17:00 to 3/28/17 at 00:00 (when Blue Waters went down), then again from 3/29/17 at 2:27 (when Blue Waters came back) until 3/29/17 at 21:19.

Application-level Metrics

- Makespan: 738.4 hrs (DST started during the run)

- Uptime: 691.4 hrs (downtime during BW and HPC system maintenance)

Note: uptime is what's used for calculating the averages for jobs, nodes, etc.

- 876 runs

- 876 pairs of SGTs generated on Titan

- 876 post-processing runs

- 145 performed on Titan (16.6%), 731 performed on Blue Waters (83.4%)

- 284,839,014 seismogram pairs generated

- 42,725,852,100 intensity measures generated (150 per seismogram pair)

- 15,581 jobs submitted

- 898,805.3 node-hrs used (21,566,832 core-hrs)

- 1026 node-hrs per site (24,620 core-hrs)

- On average, 12.1 jobs running, with a max of 41

- Average of 1295 nodes used, with a maximum of 5374 (101,472 cores).

- Total data generated: ?

- 370 TB SGTs generated (216 GB per single SGT)

- 777 TB intermediate data generated (44 GB per velocity file, duplicate SGTs)

- 10.7 TB output data (15,202,088 files)

Delay per job (using a 7-day, no-restarts cutoff: 1618 workflows, 19143 jobs) was mean: 1836 sec, median: 467, min: 0, max: 91350, sd: 38209

| Bins (sec) | 0-60 | 60-120 | 120-180 | 180-240 | 240-300 | 300-600 | 600-900 | 900-1800 | 1800-3600 | 3600-7200 | 7200-14400 | 14400-43200 | 43200-86400 | 86400-172800 | 172800-259200 | 259200-604800 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Jobs per bin | 5511 | 1435 | 401 | 469 | 503 | 2082 | 1428 | 2612 | 2373 | 1390 | 567 | 302 | 68 | 2 | 0 | 0 |
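For reference, a sketch of how per-job delays could be binned into a histogram like the one above. It assumes half-open bins (lower edge included, upper edge excluded) and drops delays at or beyond the 7-day cutoff; the function name is hypothetical and this is not the script used to produce the table.

```python
from bisect import bisect_right

# Bin edges in seconds, as used in the delay tables on this page.
BIN_EDGES = [0, 60, 120, 180, 240, 300, 600, 900, 1800, 3600, 7200,
             14400, 43200, 86400, 172800, 259200, 604800]

# Counts quoted in the table above, kept here for a consistency check.
QUOTED_COUNTS = [5511, 1435, 401, 469, 503, 2082, 1428, 2612,
                 2373, 1390, 567, 302, 68, 2, 0, 0]


def bin_delays(delays_sec):
    """Count delays per bin, treating each bin as [lower, upper)."""
    counts = [0] * (len(BIN_EDGES) - 1)
    for delay in delays_sec:
        if 0 <= delay < BIN_EDGES[-1]:  # apply the 7-day cutoff
            counts[bisect_right(BIN_EDGES, delay) - 1] += 1
    return counts


# The quoted counts should add up to the number of jobs considered.
print("jobs in table:", sum(QUOTED_COUNTS))

# Small example using the reported min, median, and max delays.
example_counts = bin_delays([0, 467, 91350])
print(example_counts)
```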

- Application parallel node speedup (node-hours divided by makespan) was 1217x. (Divided by uptime: 1300x)

- Application parallel workflow speedup (number of workflows times average workflow makespan divided by application makespan) was 16.0x. (Divided by uptime: 17.1x)
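The makespan and both speedup figures can be rechecked from numbers on this page. The sketch below takes the start and end times from the Status section and uses fixed UTC offsets for PST and PDT. It assumes the workflow count in the speedup formula is the 876 runs, since that reproduces the quoted values.

```python
from datetime import datetime, timedelta, timezone

PST = timezone(timedelta(hours=-8))
PDT = timezone(timedelta(hours=-7))

# Start and end of the study, from the Status section.
start = datetime(2017, 3, 6, 12, 14, 10, tzinfo=PST)
end = datetime(2017, 4, 6, 7, 37, 16, tzinfo=PDT)

makespan_hrs = (end - start).total_seconds() / 3600
UPTIME_HRS = 691.4
NODE_HRS = 898_805.3

# Node speedup: node-hours divided by elapsed time.
node_speedup_makespan = NODE_HRS / makespan_hrs
node_speedup_uptime = NODE_HRS / UPTIME_HRS

# Workflow speedup: workflows x average workflow makespan / elapsed time.
RUNS = 876                      # assumed workflow count
AVG_WORKFLOW_MAKESPAN_SEC = 48_567
total_workflow_sec = RUNS * AVG_WORKFLOW_MAKESPAN_SEC
workflow_speedup_makespan = total_workflow_sec / (makespan_hrs * 3600)
workflow_speedup_uptime = total_workflow_sec / (UPTIME_HRS * 3600)

print(f"makespan: {makespan_hrs:.1f} hrs")
print(f"node speedup: {node_speedup_makespan:.0f}x ({node_speedup_uptime:.0f}x by uptime)")
print(f"workflow speedup: {workflow_speedup_makespan:.1f}x ({workflow_speedup_uptime:.1f}x by uptime)")
```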

Titan

- Wallclock time: 720.3 hrs

- Uptime: 628.0 hrs (all downtime was SCEC)

- SGTs generated for 876 sites, post-processing for 145 sites

- 13,334 jobs submitted to the Titan queue

- Running jobs: average 5.7, max of 25

- Idle jobs: average 11.1, max of 38

- Nodes: average 669 (10,704 cores), max 4406 (70,496 cores, 23.6% of Titan)

- Titan portal and Titan internal reporting agree: 13,490,509 SUs used (449,683.6 node-hrs)

Delay per job (7-day cutoff, no restarts, 11603 jobs): mean 2109 sec, median: 1106, min: 0, max: 91350, sd: 3818

| Bins (sec) | 0-60 | 60-120 | 120-180 | 180-240 | 240-300 | 300-600 | 600-900 | 900-1800 | 1800-3600 | 3600-7200 | 7200-14400 | 14400-43200 | 43200-86400 | 86400-172800 | 172800-259200 | 259200-604800 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Jobs per bin | 149 | 1420 | 333 | 305 | 302 | 1405 | 1175 | 2442 | 2304 | 1248 | 363 | 136 | 19 | 2 | 0 | 0 |

Blue Waters

The Blue Waters cronjob which generated the queue information file was down for about 2 days, so we reconstructed its contents from the job history query functionality on the Blue Waters portal.

- Wallclock time: 668.1 hrs

- Uptime: 601.3 hrs (34.5 SCEC downtime including USC, 32.3 BW downtime)

- Post-processing for 731 sites

- 2,248 jobs submitted to the Blue Waters queue

- Running jobs: average 7.4 (job history), max of 30

- Idle jobs: average 5.1, max of 30 (this comes from the cronjob)

- Nodes: average 749 (23,968 cores), max 2047 (65,504 cores, 9.1% of Blue Waters)

- Based on the Blue Waters jobs report, 449,121.7 node-hours were used (14,371,894 core-hrs), but only 355,184 node-hours (11,365,888 core-hrs) were charged (25% reductions granted for backfill and wallclock accuracy)

- 7.2% of jobs got accuracy discount

- 62.1% of jobs got backfill discount

- 21.1% of 120-node (DirectSynth) jobs got accuracy discount

- 62.2% of 120-node jobs got backfill discount

- 18.7% of 120-node jobs got both
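The aggregate effect of the discounts can be estimated from the used and charged node-hours. This sketch only computes the overall ratio; it does not model how Blue Waters applies the individual discounts. The 32 cores per node figure is an assumption inferred from the node and core counts above.

```python
BW_CORES_PER_NODE = 32  # assumed: 749 nodes correspond to 23,968 cores

used_node_hrs = 449_121.7
charged_node_hrs = 355_184

used_core_hrs = used_node_hrs * BW_CORES_PER_NODE
charged_core_hrs = charged_node_hrs * BW_CORES_PER_NODE

# Fraction of used node-hours that were not charged.
effective_discount = 1 - charged_node_hrs / used_node_hrs

print(f"used: {used_core_hrs:,.0f} core-hrs, charged: {charged_core_hrs:,} core-hrs")
print(f"effective discount: {effective_discount * 100:.1f}%")
```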

Delay per job (7-day cutoff, no restarts, 2189 jobs): mean 4860 sec, median: 573, min: 0, max: 63482, sd: 10219

| Bins (sec) | 0-60 | 60-120 | 120-180 | 180-240 | 240-300 | 300-600 | 600-900 | 900-1800 | 1800-3600 | 3600-7200 | 7200-14400 | 14400-43200 | 43200-86400 | 86400-172800 | 172800-259200 | 259200-604800 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Jobs per bin | 20 | 6 | 68 | 164 | 201 | 677 | 253 | 170 | 69 | 142 | 204 | 166 | 49 | 0 | 0 | 0 |

Workflow-level Metrics

- The average makespan of a workflow (7-day cutoff, workflows with retries ignored, so 1618/1625 workflows considered) was 48567 sec (13.5 hrs), median: 32330 (9.0 hrs), min: 251, max: 427416, sd: 48648

- For integrated workflows on Titan (147 workflows), mean: 91931 sec (25.5 hrs), median: 64992 (18.1 hrs), min: 316, max: 427216, sd: 74671

- For SGT workflows on Titan (736 workflows), mean: 37358 sec (10.4 hrs), median: 23172 (6.4 hrs), min: 8584, max: 241659, sd: 38209

- For PP workflows on Blue Waters (735 workflows), mean: 51120 sec (14.2 hrs), median: 35016 (9.7 hrs), min: 251, max: 393699, sd: 46090